A Method for Evaluating Mission Risk for the National Fighter Procurement Evaluation of Options

Sean Bourdon

Directorate of Air Staff Operational Research

Defence R&D Canada – CORA

Technical Memorandum

DRDC CORA TM 2013-230(E)

April 2014

Principal Author

Original signed by Sean Bourdon

Sean Bourdon

Approved by

Original signed by Dr. R.E. Mitchell

Dr. R.E. Mitchell

Head Maritime and Air Systems Operational Research

Approved for release by

Original signed by P. Comeau

P. Comeau

Chief Scientist, Chair Document Review Panel

The information contained herein has been derived and determined through best practice and adherence to the highest levels of ethical, scientific and engineering investigative principles. The reported results, their interpretation, and any opinions expressed therein, remain those of the authors and do not represent, or otherwise reflect, any official opinion or position of DND or the Government of Canada.

Defence R&D Canada – Centre for Operational Research and Analysis (CORA)

© Her Majesty the Queen in Right of Canada, as represented by the Minister of National Defence, 2014

© Sa Majesté la Reine (en droit du Canada), telle que représentée par le ministre de la Défense nationale, 2014

Abstract

The Government of Canada stood up the National Fighter Procurement Secretariat (NFPS) in response to the 2012 Spring Report of the Auditor General of Canada. Part of the NFPS mandate is to review, oversee, and coordinate the implementation of the Government’s Seven-Point Plan, the fourth step of which is to conduct an evaluation of options to sustain a fighter capability well into the twenty-first century. The goal of the evaluation of options is to articulate the risks associated with various CF-18 replacement options relative to their ability to fulfill the mission set outlined in the Canada First Defence Strategy. This report describes the method used to undertake the evaluation of options as part of the process to inform the Government of Canada’s decision on the best way forward for replacing the CF-18.

A Method for Evaluating Mission Risk for the National Fighter Procurement Evaluation of Options:

Sean Bourdon; DRDC CORA TM 2013-230(E); Defence R&D Canada – CORA; April 2014.

Introduction

The evaluation of options method was designed to articulate the risks associated with various options for replacing Canada’s CF-18 aircraft in their ability to successfully execute the missions outlined in the Canada First Defence Strategy (CFDS). While stopping short of making any recommendations, the evaluation of options results will nonetheless help the Government make an informed decision as to the best way forward for Canada.

To ensure that the outcome would be credible, the evaluation of options needed to use a process that is as fair as possible to all industrial respondents. The following characteristics were deemed essential to the method and guided the choices made during its development:

Impartial: The method used could not favour any of the options. It needed to be neutral in its application and consistently applied to all options considered.

Comprehensive: The method needed to consider all aspects of fighter use along with the factors that enable the fighter operations. A comprehensive method provides less opportunity for bias in the overall assessment of the fighter replacement options.

Understandable: By being understandable both in its structure and in its application, the method would allow its findings to be easily traced back to the appropriate evidence, particularly in situations where professional military judgment was used. Defensibility of its conclusions additionally would provide credibility to the method being used.

Robust: A method is robust if it can be applied without failure under a wide range of conditions. It is important that it do so without sacrificing analytical rigour. In the context of this method, robustness included the ability to use proprietary or classified information as part of the evaluation process. The method also needed to be repeatable, so that its conclusions would remain consistent if the assessors changed or the assessment process were carried out a second time.

The National Fighter Procurement Secretariat (NFPS) took several steps to foster an atmosphere of openness and to exercise due diligence in assessing potential options. As an example, the method described herein was briefed to several aircraft manufacturers prior to the assessment process. Also, NFPS used three questionnaires to solicit information from these companies to inform the evaluation of options. The first questionnaire covered the technical capabilities associated with fighter aircraft currently in production or scheduled to be in production, and the critical enabling factors (CEFs) required to acquire the fleet and sustain it throughout its lifespan. As such, the industry returns provided the foundation for assessing risks to executing the CFDS missions. The second and third questionnaires dealt with cost estimates of the aircraft and potential benefits to Canadian industry. This information was used in two separate streams of assessment activity that are beyond the scope of this summary.

Components of the Analysis

The method breaks down the Canadian Armed Forces (CAF) missions, as expressed in the CFDS, into components that can be evaluated in a fair manner using available data or subject matter expertise. Specifically, the structure consisted of the following components:

Fighter Mission Scenarios: The Department of National Defence (DND) uses detailed scenarios, based on the CFDS missions, against which military capability is assessed. For the evaluation of options, these scenarios were tailored to identify the expected contribution of the fighter force in each CFDS mission.

Aerospace Capabilities: As part of capability-based planning, DND maintains a current list of capabilities that can be delivered by the CAF. The evaluation of options only considered the subset of these capabilities that the fighter force contributes to as part of the assessment process.

Measures of Effectiveness (MOEs): MOEs are high-level indicators of how well the various fighter options were expected to deliver their portion of the required Aerospace Capabilities. They are intimately linked to the specific mission context.

Measures of Performance (MOPs): MOPs were used to assess how well each class of aircraft systems was expected to perform in an absolute sense.[a]

A foundational element in being able to develop this structure was a mission needs analysis. This analysis was based on previous DND analyses of overall CAF capability, and resulted in fighter mission scenarios, aerospace capabilities, and MOEs that are consistent with existing DND guidance and documentation.

Introducing this structure benefited the evaluation of options in several ways, including making the assessment comprehensive and improving the accuracy of the assessment questions by making them more specific.

Evaluation Framework

The method was applied to fighter operations occurring in two timeframes: 2020–2030, the period during which the Royal Canadian Air Force will transition its fighter force from the CF-18 to its new aircraft with no degradation in capability, and 2030+, when it is expected that there will be increased proliferation of existing threats in addition to the introduction of new and upgraded threats, both of which make operating within this period more challenging. The feasibility and expected costs of safely operating the CF-18 beyond 2020 were carefully analyzed as part of the overall assessment.

In practice, the evaluation began with MOPs, followed by MOEs, Aerospace Capabilities, and Fighter Mission Scenarios. In this way, each level of the structure informed subsequent assessments, which provided another benefit: traceability of evidence throughout the entire process.

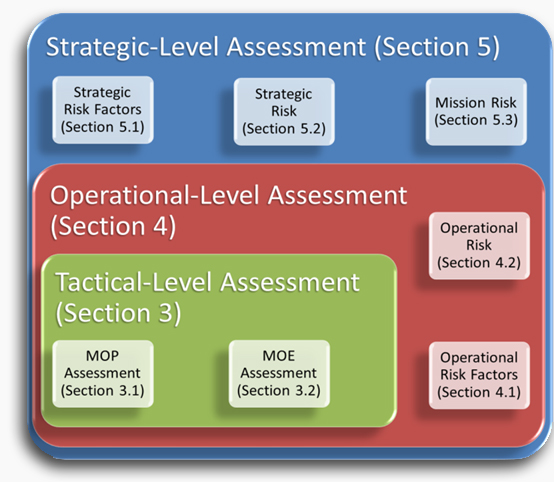
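The bottom-up ordering and the traceability it provides can be illustrated with a minimal Python sketch. It is not the tool used in the evaluation: the `Assessment` class, its field names, and the `trace` helper are hypothetical, and the rule that an assessment may only draw on lower levels is an assumption made for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# Order in which the levels were evaluated, as described in the text.
LEVELS = ["MOP", "MOE", "Aerospace Capability", "Fighter Mission Scenario"]


@dataclass
class Assessment:
    """One assessed item at one level (hypothetical structure)."""
    level: str        # one of LEVELS
    name: str         # illustrative label only
    rationale: str    # the assessor's substantiation, as free text
    informed_by: List["Assessment"] = field(default_factory=list)

    def __post_init__(self) -> None:
        if self.level not in LEVELS:
            raise ValueError(f"unknown level: {self.level}")
        # Assumption: evidence only flows upward, from lower to higher levels.
        for lower in self.informed_by:
            if LEVELS.index(lower.level) >= LEVELS.index(self.level):
                raise ValueError(
                    f"{self.level} cannot be informed by {lower.level}"
                )


def trace(assessment: Assessment) -> List[Tuple[str, str]]:
    """Return (level, name) pairs for the assessment and all its evidence."""
    chain = [(assessment.level, assessment.name)]
    for lower in assessment.informed_by:
        chain.extend(trace(lower))
    return chain


# Illustrative chain; the names are placeholders, not items from the report.
mop = Assessment("MOP", "example system class", "industry return data")
moe = Assessment("MOE", "example effectiveness measure", "SME judgment", [mop])
cap = Assessment("Aerospace Capability", "example capability", "SME judgment", [moe])
scenario = Assessment("Fighter Mission Scenario", "example scenario", "SME judgment", [cap])

for level, name in trace(scenario):
    print(f"{level}: {name}")
```

Walking the chain from a scenario back down to the MOPs is what lets a finding at the top be tied to the evidence that informed it.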

The structure additionally allowed the evaluation process to be easily mapped to three levels that permeate many facets of the military: tactical, operational, and strategic. For the purposes of this assessment, the three levels were distinguished as follows:

Tactical: The starting point for the tactical-level assessment is a properly configured aircraft, airborne in the area of operations, regardless of the logistics required to get it there and any regulatory or resource constraints that would need to be overcome in order to realize this situation. This portion of the evaluation concentrated on assessing fighters as they execute very specific functions in support of the missions specified in the CFDS.

The assessment process at this level began with an evaluation of the technological components of the fighter aircraft using the MOPs and, using the MOEs, finished with an assessment of how these technologies combine in helping the fighter deliver on its obligations within defined scenarios. The MOEs relied critically on the results of a threat analysis which provided realistic limitations on the expected threats in each mission scenario. The threat analysis also forecasted the proliferation of existing threats into new regions and how sophisticated existing or new threats might become during the relevant timeframes.

Operational: The operational-level assessment put the tactical assessments into proper context by taking a more complete view of fighter operations. The fighters’ contribution to the successful execution of the entire CFDS mission set, as defined through the use of specific representative scenarios, was assessed.

This level of the assessment ensured that important fighter functions were treated as such, and helped determine whether the fighter options provide capability in keeping with the mission needs. Like the tactical-level assessment, this component of the evaluation assumed that the conditions required to have the fighter applied in its roles are in place. The output was a risk assessment describing each option’s ability to execute the CFDS missions in both timeframes, mitigated using any reasonable[b] means at the RCAF’s disposal. The mitigations proved to be extremely important because they allowed for a more common-sense application of fighter assets to the mission scenarios.

Strategic: The strategic-level assessment built on the operational-level assessment by taking into consideration all the conditions which need to be in place for the fighter to perform its functions. Risks were identified relative to four CEFs: aircraft acquisition, supportability and force management, integration, and growth potential. The absence of one or more of these conditions may preclude the fighter aircraft from being used in CAF operations. Once again, assessors were able to apply measures to mitigate risks where feasible.

The end result of the strategic-level assessment was an integrated assessment of the risks that each fighter option poses to the successful execution of the CFDS missions. These risks can originate from a lack of operational capability as identified in the operational-level assessment or from the additional considerations related to the CEFs. To produce the integrated risk assessment, the assessors additionally had to determine whether the risks attributable to the CEFs made any risks identified at the operational level worse, or whether these risks did not interact. In cases where there were interactions, the combined risk was assessed.
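The interaction check described above can be sketched in Python as follows. This is an illustration under stated assumptions, not the aggregation rule used in the evaluation: the four-level `Risk` scale, the function name `integrated_risk`, and the treatment of non-interacting risks (the more severe of the two carries forward) are all hypothetical. When the risks do interact, the sketch makes no attempt to compute a result and instead requires the combined rating that the assessors supplied.

```python
from enum import IntEnum
from typing import Optional


class Risk(IntEnum):
    """Hypothetical ordinal risk scale; the labels are placeholders."""
    LOW = 1
    MEDIUM = 2
    SIGNIFICANT = 3
    HIGH = 4


def integrated_risk(
    operational: Risk,
    cef: Risk,
    interacts: bool,
    assessed_combined: Optional[Risk] = None,
) -> Risk:
    """Combine an operational-level risk with a CEF-related risk."""
    if not interacts:
        # Assumption: for risks that do not interact, the more severe
        # of the two ratings carries forward unchanged.
        return max(operational, cef)
    if assessed_combined is None:
        # Interacting risks are judged by the assessors, not computed.
        raise ValueError("interacting risks need an assessed combined rating")
    if assessed_combined < max(operational, cef):
        # Assumption: an interaction that worsens risk cannot lower the rating.
        raise ValueError("combined rating is below its component risks")
    return assessed_combined


# Non-interacting risks: the higher rating is kept.
print(integrated_risk(Risk.LOW, Risk.MEDIUM, interacts=False).name)
# Interacting risks: the assessors' combined judgment is recorded.
print(integrated_risk(Risk.MEDIUM, Risk.MEDIUM, True, Risk.SIGNIFICANT).name)
```

Leaving the combined rating as an input keeps the professional judgment with the assessors, which matches the description of the combined risk being assessed rather than derived.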

Subject Matter Expertise

Because the aircraft could not be flown under the conditions specified in the fighter mission scenarios, the judgment of subject matter experts (SMEs) was a critical component of the assessment process. Consequently, a great deal of care was taken to ensure that reliance on this judgment did not introduce bias into the process.

Breaking down CFDS missions into components helped ensure that appropriate SMEs could be identified and that these experts were never asked to evaluate anything outside of their area of specialty. Multiple assessment teams were also used whenever feasible. This added a variety of opinion and helped reduce the possibility of phenomena such as groupthink. Also, SMEs were required to provide substantiation to validate each of their assessments, with oversight from NFPS, and their findings were scrutinized by a panel of third-party independent reviewers.

Conclusion

A mission risk assessment method has been developed which breaks down the CFDS mission set into components. Of necessity, the method requires the professional judgment of subject matter experts, and great care has been taken to ensure that the method is impartial, comprehensive, understandable, and robust.

Table of contents

- Introduction

- Background

- Tactical-Level Assessment

- Operational-Level Assessment

- Strategic-Level Assessment

List of tables

- Table 1: Operational score for Aircraft A in Mission n

- Table 2: Converting operational scores into minimum raw operational risk

- Table 3: Risk translation matrix

- Table 4: Raw operational risk calculation for Aircraft A in Mission n

- Table 5: Interim operational risk

- Table 6: Operational risk aggregation (independent risks)

- Table 7: Operational risk aggregation (compounding risks)

- Table 8: Descriptors of likelihood for strategic risk assessment

- Table 9: Mission risk aggregation (independent risks)

- Table 10: Mission risk aggregation (compounding risks)

Acknowledgements

This report merely describes the final outcome resulting from many hours of discussion on how to best address the myriad challenges faced in the development of the method used to assess replacement options for the CF-18 Hornet. The author was one of many contributors to several teams that conceived its implementation, tested it, and brought it to fruition. As such, he would like to acknowledge the roles of the following individuals (in alphabetical order) in the development of the method through its many iterations:

- Benoît Arbour, Maj Mike Ayling, Darren Benedik, Maj Rob Butler, LCol Trevor Campbell, Maj Haryadi Christianto, Maj Seane Doell, LCol Shayne Elder, LCol Kevin Ferdinand, Maj Nicholas Griswold, Maj Jameel Janjua, Peter Johnston, Maj Rich Kohli, Maj Sébastien Lapierre-Guay, Maj Francis Mercier, Maj Warren Miron, Maj Brandon Robinson, LCol Bernard Rousseau, LCol Tim Shopa, and Maj David Wood.

The following individuals generously agreed to participate in various dry runs of the different parts of the method:

- LCol Guy Armstrong, Slawek Dreger, LCol Paul Fleury, LCol (ret’d) Kelly Kovach, LCol Glenn Madsen, Maj Irvin Marucelj, LCol Charles Moores, Capt Tim Pistun, LCol Luc Sabourin, LCol Pat Sabourin, Terry Sullivan, Maj Dave Turenne, and Maj Paul Whalen.

Their astute feedback made the method more robust and user-friendly.

The author would also like to acknowledge the insightful comments provided by those who have reviewed this document. These comments have helped improve the accuracy and the clarity of the material it contains. Specifically, he would like to thank:

- Dr. Ross Graham, Dave Mason, BGen Stephan Kummel, Vance Millar, Dr. Roy Mitchell, and BGen Alain Pelletier, as well as the members of the Independent Review Panel: Dr. Keith Coulter, Dr. Philippe Lagassé, Dr. James Mitchell, and Mr. Rod Monette.

However, any outstanding errors or inaccuracies are attributable to the author alone.

Context

In response to the 2012 Spring Report of the Auditor General of Canada [1], the Government of Canada announced a Seven-Point Plan to “ensure that the Royal Canadian Air Force [RCAF] acquires the fighter aircraft it needs to complete the missions asked of it by the Government, and that Parliament and the Canadian public have confidence in the open and transparent acquisition process that will be used to replace the CF-18 fleet.” [2] The National Fighter Procurement Secretariat (NFPS) was created to review, oversee, and coordinate the implementation of the Seven-Point Plan.

The fourth step in this plan is the evaluation of options to sustain a fighter capability well into the twenty-first century [3]. The goal of the evaluation of options is to provide advice to the Government of Canada with respect to the strengths and risks associated with all assessed fighter aircraft. The process was not intended to make a recommendation. Rather, the process was designed to articulate the risks associated with the assessed options in order to help the Government to make an informed decision as to the best way forward for Canada. The overall evaluation of options has three parallel streams of activity relative to each of the fighter options: one to assess the risk to successful execution of the Canadian Armed Forces’ (CAF) mission set as expressed in the Canada First Defence Strategy (CFDS), one to assess the acquisition and sustainment costs, and one to assess the potential Industrial and Regional Benefits (IRBs) offered by each manufacturer.

This report describes the method used to assess the risk to the successful execution of CFDS missions. This method, while developed, implemented, and executed under the leadership of the RCAF, underwent significant independent third party consultation and review. To this end, an Independent Review Panel (IRP) was created [3]. As described in the Terms of Reference for the Evaluation of Options [4], this panel was engaged at key milestones in the work to assess the methodology used and the analyses performed to help ensure that this work was both rigorous and impartial. In addition, the IRP’s involvement throughout the process was integral in making the publicly released results comprehensive and understandable [5].

Overarching Principles

Above all else, the evaluation of options needed to use a process that is as fair as possible to all industrial respondents for the outcome to be credible. The following characteristics were deemed essential to the method and guided the choices made during its development:

Impartial: The method used could not favour any of the options. It needed to be neutral in its application and consistently applied to all options considered.

Comprehensive: The method needed to consider all aspects of fighter employment along with the factors that enable the fighter operations. By being comprehensive, there would be less opportunity for bias in the method.

Understandable: By being understandable both in its structure and in its application, the method would allow its findings to be easily traced back to the appropriate evidence. Defensibility of its conclusions additionally would provide credibility to the method being used.

Robust: A method would be deemed robust if it could be applied without failure under a wide range of conditions. It is important that it do so without sacrificing rigour. In the context of this method, robustness included the ability to use proprietary or classified information as part of the evaluation process. The method also needed to be repeatable, so that its conclusions would remain consistent if the assessors changed or the assessment process were carried out a second time.

These characteristics permeate the design of the method as will be seen in later sections of this report. Similar processes used by allied countries such as Australia and Denmark were consulted extensively during the development of this method both to calibrate the scope of the assessment and to help establish the validity of the approach from a scientific perspective. While uniquely Canadian, the method shares many important features with those employed by other nations.

Industrial Engagement

To foster an atmosphere of openness and to exercise due diligence, NFPS initiated a dialogue with several original equipment manufacturers (OEMs) to inform the evaluation of options. As an example, the method described in this report was briefed to industry prior to the formal evaluation of the proposed aircraft.

The OEMs were also asked to provide information to answer specific questions pertaining to their aircraft and support systems as part of a market analysis. Three questionnaires were prepared to allow industry the opportunity to provide information for replacing the CF-18. The Capability, Production and Supportability Information Questionnaire [6] covered the technical capabilities associated with fighter aircraft currently in production or scheduled to be in production and the associated strategic elements required to acquire the fleet and sustain it throughout its lifespan. As such, the industry returns provided the foundation for assessing risks to executing the CFDS missions.

All companies were free to submit both proprietary and classified information if desired. The second and third questionnaires dealt with cost estimates of the aircraft [7] and potential benefits to Canadian industry [8].

Companies received drafts of each of the three questionnaires for comments to help ensure that each company was provided an opportunity to highlight the potential capabilities of its fighter aircraft to fulfill the roles and missions outlined in the CFDS. These comments were reflected in the final questionnaires. The three questionnaires also benefited from the oversight and guidance of the Independent Review Panel. In addition, plenary and bilateral meetings with companies were organized in order to present and discuss each of the three questionnaires as well as the methodology for the assessment. Ongoing consultation with companies continued as the assessment of the responses progressed. In this way, industry engagement offered companies the opportunity to best present all their information relevant to the analysis of aircraft capabilities in support of a full and fair evaluation of all assessed options.

Overview of Evaluation Method

Many facets of the military, including organization and doctrine, are designed around a structure anchored in three levels: tactical, operational, and strategic [9]. It was therefore natural to structure the evaluation of options along the same lines. For the purposes of this assessment, the three levels were distinguished as follows:

Tactical: The tactical-level assessment of fighter options assumed that all the conditions necessary to have a fighter being applied in its roles are in place.[1] This portion of the evaluation concentrated on assessing fighters as they execute very specific functions in support of the missions specified in the CFDS. As such, this level constitutes the foundational piece on which the higher-level assessments were based. The assessment process began with an evaluation of the technological components of the fighter aircraft and finished with an assessment of how these technologies combine in helping the fighter deliver on its obligations within defined scenarios. This assessment considered the fighter capability in a way that does not depend on the expected frequency of the functions involved, nor their relative importance.

Operational: The operational-level assessment put the tactical assessments into proper context by taking a holistic view of fighter operations. Capability-based planning [10], [11], [12] was used as the basis for comparing the relative importance of the fighter contribution to the successful execution of the entire CFDS mission set as defined through the use of specific representative scenarios. This level of the assessment ensured that important fighter functions were treated as such, and helped determine whether the fighter options provide capability commensurate with expectations of the fighter force. Like the tactical-level assessment, this component of the evaluation assumed that the conditions required to have the fighter applied in its roles are in place.

Strategic: The strategic-level assessment built on the operational-level assessment by taking into consideration all the conditions which need to be in place for the fighter to perform its functions. Factors stemming from areas such as aircraft acquisition, supportability and force management, integration, and growth potential were assessed. The absence of one or more of these conditions may preclude the fighter aircraft from being used in CAF operations. The end result was an assessment of the risks that each fighter option poses to the successful execution of the CFDS missions. These risks can originate from a lack of operational capability as identified in the operational-level assessment or from the additional considerations needed to enable that capability.

The method was applied to fighter operations occurring in two timeframes: 2020–2030, and 2030+. The first of these represents the period during which the RCAF will transition its fighter force from the CF-18 to its follow-on capability with no degradation in fighter capability during the transition period. In the 2030+ timeframe, it is expected that there will be increased proliferation of existing threats in addition to the introduction of new threat capabilities, both of which make operating within this period more challenging. The feasibility and expected costs of safely operating the CF-18 beyond 2020 were carefully analyzed as part of the overall assessment.

Organization of the Report

Section 1 provides the context for the evaluation of options to replace the CF-18 Hornet, along with a high-level overview of how the method is structured and the overarching principles which guided the development and implementation of the method. Section 2 provides the background information needed to place the overall method into context so that the parts described within the remainder of this report can be better understood. Sections 3, 4, and 5 describe the tactical, operational, and strategic levels of the assessment method, respectively. In each case, the process is described in terms of its four most important components: the inputs to the process, the staff that executed the process, a description of the process itself, and the outputs that the process produced. The conclusion of this report can be found in Section 6.

Background

Fighter aircraft are complex machines that can operate in a highly complex and dynamic environment. Therefore, it should not come as a surprise that the method described herein, in an effort to be comprehensive and fair, required a significant amount of work to complete. The Terms of Reference for Step 4 of the Government’s Seven-Point Plan [4] identifies the six tasks that comprise the evaluation of options:

- Threat Analysis – including technological trends to cover the 2020–2030 and 2030+ timeframes;

- Mission Needs Analysis – relying on the Canada First Defence Strategy (CFDS) and 2012 Capability-Based Planning (CBP) work as a basis;

- Fighter Capability Analysis – assessing fighter options at the tactical level;

- CF-18 Estimated Life Expectancy (ELE) Update – including cost estimates for necessary upgrades to maintain safe and effective operations beyond 2020;

- Market Analysis – obtaining capability and costing information from industry and Government sources to inform the entire process; and,

- Mission Risk Assessment – assessing the risk associated with performing the missions specified by the CFDS for each of the fighter options.

In parallel, the cost of each fighter option was to be estimated and the expected Industrial and Regional Benefits examined. It is beyond the scope of this report to describe each of these tasks in complete detail other than to explain how each piece contributes to the end state of the evaluation of fighter capability.

Figure 1 provides an overview of the entire process and where each piece fits in the tactical, operational, and strategic levels. Each component of the process to assess mission risk will be described in a later section of this report. First, however, the balance of this section provides a few definitions that help establish the parameters and context for the evaluation. Together, these definitions may be a bit difficult to fully digest on a first pass. The first-time reader may find it easier to skip to Section 3 and use the remaining material in this section as a reference when necessary.

Fighter Mission Scenarios

The 2030 Security Environment document [13] describes key trends in the future security environment and the challenges that these will pose for the CAF when operating in this environment. The evaluation method is based on a structured decomposition of the fighter mission within the context of the 2030 Security Environment document. The decomposition breaks the mission into meaningful components, each of which can be properly assessed by a panel of Defence experts. The top level of the decomposition consists of the missions specified in the CFDS. The Force Planning Scenarios developed by Chief of Force Development (CFD) staff as part of their capability-based planning (CBP) work are representative of the CFDS mission set and were used for the purposes of this assessment process.[2] Because these scenarios were designed to analyze the CAF holistically, RCAF staff identified the fighter-specific component of each scenario in order to facilitate the assessment of fighter aircraft. These Fighter Vignettes are as follows:

- 1 – Conduct daily domestic and continental operations, including in the Arctic and through the North American Aerospace Defense Command (NORAD):

- The Canadian fighter is conducting normal daily and contingency NORAD missions at normal alert levels, and is prepared to react to elevated alert levels. Operations are conducted from Main Operating Bases as well as Forward Operating Locations, and missions may be over land and over water. The threats for these missions can be air- and maritime-based.

- 2 – Support a major international event in Canada:

- The CAF are being employed in support of a major international event being held in Canada. Canada’s fighter assets are based in Deployed Operating Bases and/or civilian airfields that are closer to the expected area of operations. The Canadian fighter will be used to prevent disruption of the event. Given an identified threat, the fighter will prosecute any potential land, maritime, and air threats. If an attack does materialize, the fighter, combined with other joint[1] assets, will be used to maintain overwatch and negate further attacks.

- 3 – Respond to a major terrorist attack:

- A terrorist threat to Canada has been identified. This threat is in the form of an attack that is being planned abroad, is underway with weapons in transit, or has just taken place. Canada’s fighter assets are based as per normal posture, and can be moved forward to Deployed Operating Bases or civilian airfields as dictated by the threat and intelligence on the situation. In the case of the attack being planned abroad, an expeditionary fighter unit will deploy to a sympathetic country in the region in preparation to support a pre-emptive, joint force attack. In the case of weapons in transit, the Canadian fighter will be used to prevent the attack in progress, or respond to this major terrorist attack after it has occurred. Given the identified terrorist threat, the Canadian fighter will prosecute asymmetric land, maritime, and air terrorist threats before they strike. If an attack has already taken place, the Canadian fighter, combined with other joint assets, will maintain overwatch and negate further attacks.

- 5b – Complex Peace Enforcement Operation in Coastal Country:

- The CAF have been tasked to deploy in support of multi-national North Atlantic Treaty Organization (NATO) operations in a failed state. An expeditionary fighter unit is included in this deployment, and will be based in a nearby nation that is sympathetic to NATO forces. The Canadian fighter will participate in this peace enforcement operation as part of a joint coalition effort. Civilian population and key infrastructure must be protected, and an environment conducive to an influx of humanitarian relief must be established. The Peace Enforcement Operation will also use the Canadian fighter to locate and destroy known terrorist cells. Instability in the region may lead to a requirement to use the Canadian fighter to maintain sovereignty in the face of threatening neighbour states.

- 5c – Coalition Warfighting (State on State):

- Canada has committed the CAF as part of a coalition responding to the threat of aggression from a foreign state. Included in the CAF contribution to the allied force is a fighter expeditionary force aimed at helping to deter aggression from the threatening state. If deterrence fails, the threatening state will be defeated. State-on-state warfighting will require the conduct of the full spectrum of operational capabilities in a joint coalition. The Canadian fighter aircraft will be deployed to a forward coalition base, and will make use of coalition support assets in any ensuing air campaign.

- 6 – Deploy forces in response to crises elsewhere in the world for shorter periods:

- Canada has offered CAF units to assist in response to an international, UN-led humanitarian crisis or disaster. Included in this response is a deployed expeditionary fighter unit, which will be based alongside other assistance efforts. The Canadian fighter will contribute to stabilization and policing missions, in support of international aid efforts. Relief efforts are hampered by criminal activity and general lawlessness, which pose a threat to the successful execution of the relief assistance.

- Note that CFDS Mission 4 (Support civilian authorities during a crisis in Canada such as a natural disaster) was not explicitly included in this list owing to its similarity, from a fighter operations perspective, to Mission 2. Mission 5a (Peace Support Operation) is also absent from the list given that the fighter capability was assessed as not applicable in this particular scenario.

Aerospace Capabilities

The second layer in the decomposition consists of Capabilities, which were used to deliver the effects needed to execute the CFDS Missions. CFD staff identified, as part of the ongoing CBP work, a taxonomy of capabilities that are in use by the CAF. As expected, the effects delivered by multi-role fighter aircraft do not contribute to every one of these capabilities. Of those on the list, fighter aircraft were assessed to provide meaningful effects in seven capabilities, referred to as Aerospace Capabilities henceforth, the definitions of which closely match those from Aerospace Doctrine [14]:

Defensive Counter Air (DCA): All measures designed to nullify or reduce the effectiveness of hostile air action. Operations conducted to neutralize opposing aerospace forces that threaten friendly forces and/or installations through the missions of Combat Air Patrol, Escort and Air Intercept.

Offensive Counter Air (OCA): Operations mounted to destroy, disrupt or limit enemy air power as close to its source as possible. This includes tasks such as Surface Attack, Suppression of Enemy Air Defences, and Sweep.

Strategic Attack: Capabilities that aim to progressively destroy and disintegrate an adversary’s capacity or will to wage war (as seen in the initial phases of both US-led campaigns in Iraq). Such missions are normally conducted against the adversary’s centre of gravity, i.e., those targets whose loss will have a disproportionate impact on the enemy, such as national command centres or communication facilities.

Close Air Support (CAS): Air attacks against targets which are so close to friendly ground forces that detailed integration of each air mission with the fire and movement of those forces is required.

Land Strike:[4] Counter-surface operations using aerospace power, in cooperation with friendly surface and sub-surface forces to deter, contain or defeat the enemy’s land and maritime forces in the littoral.

Tactical Air Support for Maritime Operations (TASMO): Operations in which aircraft, not integral to the requesting unit, are tasked to provide air support to maritime units.

Intelligence, Surveillance, and Reconnaissance (ISR): The activity that synchronizes and integrates the planning and operation of all collection capabilities, with exploitation and processing, to disseminate the resulting (i.e. decision-quality) information to the right person, at the right time, in the right format, in direct support of current and future operations.

Measures of Fighter Effectiveness

The third level of the fighter mission decomposition consists of Measures of Effectiveness (MOEs). MOEs were used in order to ascertain how well the various fighter options provide the effects needed to deliver the required Aerospace Capabilities. Loosely, MOEs are high-level aggregates of each aircraft’s effectiveness in contributing to the Aerospace Capabilities, when put in a mission-specific context. The MOEs used for this analysis are defined as follows:

Awareness: The ability to gather, assimilate and display real-time information from on-board and off-board sensors. Awareness is largely dependent on the fidelity and capability of all onboard sensors. In addition, the capability of the entire system to display comprehensive information derived from inputs produced from multiple sensors is beneficial.

Survivability: The ability to operate within the operational battle space by denying or countering the enemy’s application of force. Survivability can be increased by detecting, classifying, and locating threats and subsequently evading them or negating their effectiveness. Once alerted to a threat (via radar warning receiver, missile approach warning system, etc.), a variety of active (electronic attack, decoys, chaff/flares, etc.) or passive (signature reduction, manoeuvres, etc.) means can deny an engagement by that threat.

Reach and Persistence: The distance and duration across which a fighter aircraft can successfully employ air power. Factors such as speed, endurance, range, and internal and external fuel loads should be considered when determining Reach and Persistence.

Responsiveness: The timely application of air power, including the ability to re-target while prosecuting multiple tracks, and potentially even re-role from one Aerospace Capability to another, within a Core Mission. Responsiveness should also include the potential for the weapons system to conduct more than one function simultaneously. For example, while conducting defensive counter-air missions, can a given aircraft also continue to contribute to the ISR picture? Also, the speed at which an aircraft can re-target, re-role, and reposition within the area of operations should be considered.

Lethality: The combined ability to obtain an advantageous position and negate/prosecute a target or threat. Obtaining an advantageous position speaks to the performance of an aircraft. Once there, it is desirable that a weapon system be able to negate or prosecute the target via a variety of kinetic and non-kinetic means. This process of employing kinetic and non-kinetic means may require supporting sensors and other avionics sub-systems to support weapons.

Interoperability: The ability to operate and exchange information with a variety of friendly forces in order to contribute to joint and coalition operations. Interoperability with other coalition and organic ground, sea, and air assets is critical. This interaction can include, but is in no way limited to, the use of data link and communications.

Measures of Fighter Performance

At the last layer of the decomposition are Measures of Performance (MOPs). Each class of aircraft systems has an associated metric that was used to assess the performance of the aggregate aircraft systems in an absolute sense, irrespective of the threat present and the context of the mission being considered. The Communications MOP, for example, is not meant to capture how well the voice communications systems work. Rather, it is meant to capture how well the collection of all communications systems works together in the context of generic fighter operations. It is useful to think of MOPs as measuring performance in a “laboratory” setting, and MOEs as measuring how well the fighters perform their functions in a mission specific context.

The specific question that was used for each MOP to guide this assessment process is given in Section 3.1.3. The definitions that were used for the classes of systems assessed in the MOPs are:

Radio Frequency (RF) Sensors: Any sensor, or collection of sensors, that emits and/or receives in the RF spectrum. RF Sensors include, but are not limited to: Radar, Combined Interrogator Transponder (CIT), and Radar Warning Receiver (RWR).

Electro-Optical (EO)/Infrared (IR) Sensors: Any sensor, or collection of sensors, that emits and/or receives in the EO/IR spectrum. EO/IR Sensors include, but are not limited to: Advanced Targeting Pod (ATP), Infrared Search and Track (IRST), and Distributed Aperture System (DAS).

Air-to-Air (A/A) Weapons: Weapons employed against airborne targets. This encompasses weapons that are employed beyond and within visual range. Weapon capacity and guidance capability in the intended configuration should also be considered.

Air-to-Ground (A/G) Weapons: Weapons employed against land-based targets. Weapon capacity and guidance capability in the intended configuration should also be considered.

Air-to-Surface (A/Su) Weapons: Weapons employed against sea-based targets. Note that some aircraft do not have dedicated A/Su weapons, but it may be possible to employ traditional A/G weapons to some degree. Weapon capacity in the intended configuration should also be considered.

Non-Kinetic Weapons: Offensive weapons that do not rely on kinetic engagements. Examples include, but are not limited to: lasers and Electronic Attack.

RF Self-Protection: Non-deployable self-protection that exploits the RF spectrum and protects against threats in the RF spectrum. Examples include, but are not limited to: RWR and Electronic Protection (EP).

IR Self-Protection: Non-deployable self-protection that exploits the IR spectrum and protects against threats in the IR spectrum. Examples include, but are not limited to: Missile Approach Warning System (MAWS) and DAS.

Countermeasures: Any devices deployed by the aircraft to negate or disrupt an attack. These devices can work in any spectrum. Examples include, but are not limited to: Chaff, Flares, Bearing-Only Launch IR (BOL IR) decoys, towed decoys, and expendable decoys.

Data Link: The means of connecting the aircraft to another or other assets in order to send or receive information. Data link can be used to share stored or real-time sensor and track information, to pass non-verbal orders, to pass full-motion video, or any other transmissions. Consideration should be given to the ability to send this information in a secure manner.

Communications: The means of sending and receiving voice communications between the platform and other aircraft or assets, both within and beyond line of sight. Consideration should be given to the ability to send and receive secure and jam-resistant voice communications. In addition, the number and type of devices on-board should be considered.

Sensor Integration: The ability to use all available sensors to build a more complete picture of the situation. Combining data from multiple sensors to achieve improved accuracies when compared to those achieved from the use of individual sensors should also be considered.

Pilot Workload: This includes any means of transferring information between the pilot and the platform, and vice versa, and any means of reducing pilot workload. Examples include, but are not limited to, Helmet Mounted Cueing System (HMCS) and Hands-On Throttle and Stick (HOTAS).

RF Signature: The relative amount of RF energy reflected and emitted by the platform.

IR Signature: The amount of IR energy emitted by the platform.

Engine/Airframe: A measure of the aircraft’s kinematic and aerodynamic performance. Items such as thrust to weight, instantaneous and sustained turn rates and radii, ‘g’ available and sustainable, and other general performance measures would fall in this category. In addition, new enabling technologies such as thrust-vectoring control would also be included here.

Combat Radius and Endurance: The unrefuelled distance over which an aircraft can be employed, and/or the time that it is able to remain airborne.[5]

Critical Enabling Factors

The end goal was to assess risk to successful completion of the missions outlined in the CFDS. The framework described to date can be used to assess these risks from an operational perspective. However, there are additional factors which can induce risk that are not covered in the operational risk assessment. These Critical Enabling Factors (CEFs), previously known as Military Strategic Assessment Factors (or SAFs), capture the major elements which enable the fighter force to execute its mission set. The CEFs used in this analysis are described below.

Aircraft Acquisition: This CEF captures factors other than cost that would affect acquisition of the various fighter options. These factors include:

- planned production periods for each replacement option;

- the manufacturers’ ability to continue or re-establish production periods;

- the ability of the manufacturers to offer a complete “cradle to grave” program, including training, in-service/life-cycle support and disposal;

- the manufacturers’ successful completion of any required developmental work before the aircraft are ready for acquisition; and

- the ability of each aircraft to be certified for airworthiness under CAF regulations.

Supportability and Force Management: An assessment of the overall/long-term supportability and aspects related to management of the fighter force for each replacement option. This includes factors such as:

- the required quantity of aircraft;

- managed readiness postures and sustainment ratios;

- the training system and production of appropriately trained pilots and maintenance/support personnel, including the use of simulators;

- training considerations for maintenance personnel and aircrew;

- aircraft mission availability rates and aircrew/technician workload;

- long-term availability of components needed for aircraft maintenance;

- implementation and sustainment of supporting infrastructure;

- the ability to operate from required locations;

- weapons compatibility and support; and

- the suitability of a reprogramming capability, including its flexibility and responsiveness for new threats and/or theatres of operation or new capabilities.

Integration: This CEF assesses broad interoperability within the Canadian Armed Forces and with allied forces for each replacement option. This includes interoperability with air-to-air refuelling services (other than CAF), common ground/spares support with allies and their supply lines, training systems, data sharing, communications, Standardization Agreements (STANAGs), and the ability to feed data into CAF and Government of Canada networks taking into consideration national security requirements.

Growth Potential: This CEF assesses the growth potential and technological flexibility of each course of action to respond to unforeseen future advances in threat capabilities, to implement required enhancements to fighter technology, and to evolve as needed to meet the Canadian Armed Forces’ needs. Factors include analysis of the architecture of aircraft types, power, and cooling capabilities for new systems.

Tactical-Level Assessment

The tactical assessment of the fighter options is encapsulated entirely within the domain of Task 3. It was meant to capture how well each of the aircraft performs, individually and separately, in executing the Aerospace Capabilities in the context of the set of Mission Scenarios. It is clear that not all Aerospace Capabilities are of equal importance in accomplishing each of the Mission Scenarios. However, at this stage of the process, fighter effectiveness was assessed irrespective of the importance of the Aerospace Capability. The Mission Scenarios were very important at this stage since they framed many of the considerations needed to properly assess the fighters, such as the expected threat and the geographic extent of the area of operations.

The tactical-level of the evaluation of options consists of a two-step process: assessment of measures of fighter performance and assessment of measures of fighter effectiveness.

Measures of Performance

Assessing the MOPs listed in Section 2.4 was the first step in the overall process. The MOPs were all assessed as described in this section. This part of the process was meant to assess the unmitigated performance of the aircraft systems in absolute terms. Good performance is good performance, irrespective of the threat being faced. The MOP assessments were a key input into the assessment of fighter effectiveness.

Inputs

There were three main inputs to the evaluation of MOPs. These are:

- Industry Engagement Request (IER) Returns: As discussed previously, the NFPS requested information from several OEMs[1] using three questionnaires to inform the evaluation of options as part of the Task 5 market analysis. The first questionnaire [6] closely mirrored this part of the evaluation of options; it covered the technical capabilities[2] associated with fighter aircraft as well as the associated strategic elements related to the acquisition of a fighter fleet and the sustainment of its capability throughout its lifespan. The IER returns were foundational in assessing risks to executing the CFDS missions, although only the portions directly related to fighter capability were used at this stage.

- Open Source Data: Another important component of the Task 5 market analysis was the collation of open source information on each of the fighter aircraft that the RCAF anticipated would be proposed as viable replacements for the CF-18. Using Open Source Intelligence (OSINT) information helped assessors supplement the information provided by industry through the IER.

- Other Data: The Government of Canada had additional information on some of the proposed aircraft through exchanges or other government-to-government interactions. This information is often of a classified nature.

Personnel

The personnel involved with the evaluation of MOPs generally fell into one of three categories: subject matter experts (SMEs) with in-depth knowledge of aircraft systems and performance, facilitators, and others.

The SMEs were divided into groups largely consisting of engineers (both military and civilian), operators, and scientists from Defence Research and Development Canada (DRDC). In most cases, specific individuals were chosen based on their specialized knowledge of the systems involved. In an effort to minimize any potential bias, personnel having firsthand experience with any of the proposed fighter aircraft, as well as those who helped design and build the assessment process, were expressly forbidden from participating as SMEs. Collectively, they were responsible for assessing each of the fighter options for this part of the process.

The facilitators consisted of staff from the Directorate of Air Requirements 5 – Fighters and Trainers (DAR 5). They were responsible for ensuring that the assessors had all the information they required, including providing in-briefs and acting as a conduit to industry, through NFPS, regarding any clarifications that may have been required on information submitted through the IER. In addition to other standard facilitator functions, the facilitators also ensured that the assessment teams provided adequate substantiation for any assessments that they made.

Other people who took part in the process included staff from NFPS who provided oversight of the process and staff from the DRDC Centre for Operational Research and Analysis (CORA) who helped assemble and process the information.

Method

The process for evaluating MOPs was straightforward. SMEs worked in groups to find relevant data in the IER returns, the open source data, and other data. Using this data as a basis, the groups scored their assigned MOP(s). Specifically, the question they answered was the following:

In the very broad context of the CFDS Missions and Aerospace Capabilities to be performed, the expected performance of the system is:

- 9–10 Excellent – without appreciable deficiencies;

- 7–8 Very Good – limited only by minor deficiencies;

- 5–6 Good – limited by moderate deficiencies;

- 3–4 Poor – limited by major deficiencies;

- 1–2 Very Poor – significantly limited by major deficiencies;

- 0 Non-existent; or,

- N/A Not applicable – not able to score based on this scale.

In answering the question, it was vital that the assessment teams captured their rationale for the scores they assigned, irrespective of their values. Given that many of the assessors were not physically posted within the National Capital Region and had to come from across Canada in order to conduct the assessments, the information produced at this stage of the process needed to be as standalone as possible. Therefore, any assumptions or limitations needed to be noted and captured to prevent misinterpretation of their outputs and to allow traceability if reconstruction of these results was ever required.

The rating scale used by the SMEs is best thought of as a five-point scale (Excellent, Very Good, Good, Poor, Very Poor), with a Not Applicable rating available if a system was not part of the fighter being considered. Assessors used this scale as their reference when scoring the MOPs. Each point in the scale has two numerical ratings associated with it, such as the link between 7–8 and Very Good. This allowed assessors the opportunity to further discriminate when necessary between, e.g., a current capability, assessed as Very Good, and the capability of one of the options, also rated as Very Good, albeit somewhat better than the current capability.
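The relationship between numerical ratings and scale points can be illustrated with a short sketch. It is not part of the assessment method itself; the names `MOP_BANDS` and `mop_label` are hypothetical, and representing a Not Applicable rating as `None` is an assumption made purely for illustration.

```python
# Bands of the MOP rating scale as listed above: (lowest score, highest score, label).
MOP_BANDS = [
    (9, 10, "Excellent"),
    (7, 8, "Very Good"),
    (5, 6, "Good"),
    (3, 4, "Poor"),
    (1, 2, "Very Poor"),
    (0, 0, "Non-existent"),
]


def mop_label(score):
    """Return the scale label for an integer score from 0 to 10.

    A score of None stands in for an N/A rating (an illustrative assumption).
    """
    if score is None:
        return "Not applicable"
    if isinstance(score, bool) or not isinstance(score, int):
        raise TypeError("score must be an integer or None")
    for low, high, label in MOP_BANDS:
        if low <= score <= high:
            return label
    raise ValueError("score must lie between 0 and 10")


# Two systems can share a label while still being discriminated by score.
current_capability = 7
candidate_option = 8
print(mop_label(current_capability), mop_label(candidate_option))
```

Both scores in the usage lines map to Very Good, yet the higher value records that the option was judged somewhat better than the current capability.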

When scoring the MOPs, the assessors were all encouraged to first score their MOP individually and then discuss their scores as a group in an effort to achieve consensus. The goal was to provide a consensus scoring for each MOP. In the event that consensus could not be achieved, the underlying reasons for the discrepancies were carefully documented for consideration during follow-on assessments. Should the assessors have encountered uncertainty in the scoring owing to missing or conflicting information, then the assessors were to work with the facilitators to obtain clarification where possible. Regardless of the information that was available, the assessors were required to provide as much insight as practicable. For instance, if they lacked information to make a sufficiently precise judgment regarding the performance of a given system but knew that generically the class of systems outperforms another known system, then they could use the second system to provide a lower bound for the MOP.

Outputs

Once complete, there were MOP assessments for each aircraft, in three configurations, across both timeframes. The assessments in the 2030+ timeframe considered the upgraded aircraft configurations as supplied by the manufacturers; otherwise, no additional mitigation measures were applied in the assessment of MOPs. Together, the MOP assessments formed the cornerstone for the subsequent assessment of MOEs.

Measures of Effectiveness

The evaluation of the MOEs from Section 2.3 is the activity that immediately followed the MOP assessment. It represents the second step in the overall process and the final step in the tactical level assessment. This section describes the process by which unmitigated fighter effectiveness was assessed during the evaluation of options. Unlike the MOPs, the MOEs are context-dependent which means that their scores can vary from one Mission Scenario to the next due to the changing nature of the threat in each situation.

Inputs

Since the evaluation of MOEs builds from the MOP assessment, the output of the MOP assessment was an input to the MOE assessment, as were the inputs to the MOP assessment (the IER returns, and open source and other data). The additional inputs to the evaluation of MOEs were:

- Task 1 Report [15]: This report defines the threat environment in each CFDS mission and how it may evolve over the two timeframes under consideration. This information was critical to assessing effectiveness of the fighter options because the adversarial capability varies significantly across the spectrum of CFDS Missions. To do so, specific exemplar air-, land-, and sea-based threats were identified for each Mission Scenario, in both timeframes.

- Task 2 Report [16]: The purpose of the Task 2 report is to assess mission needs in the context of probable mission requirements. It draws heavily on a 17-month capability-based planning (CBP) effort, a pan-CAF force development analysis that was part of the strategic portfolio review.

Personnel

Just as it was for the MOP assessments, the personnel involved with the evaluation of MOEs fell into three categories: SMEs, facilitators, and others. The SMEs in this case were personnel with more direct experience with fighter operations. There were three assessment groups, each consisting of 2-3 fighter pilots currently in a position directly linked to operations and an air weapons controller. Although various qualification levels were being sought from the fighter community, personnel selection was not based on specific operational experience (e.g. Task Force Libeccio). Rather, the qualifications were indicative of the general level of operational experience each pilot had. The facilitators and other personnel were just as those described in the MOP assessment.

Method

There are many similarities between the method used to assess MOEs and the method used in the MOP assessment, but there are important differences as well. Once again, the SMEs worked in groups to evaluate each of the MOEs in the context of the Aerospace Capability being considered in support of a Mission Scenario, making sure all the while to give due consideration to the threat that is expected to be present. The individual members worked within their team in an effort to score all MOEs according to the following guidelines:

In the context of the task to be performed and the threat that is present, the effectiveness of the aircraft is:

- 9–10 Excellent – no appreciable limitations in delivering the desired effect;

- 7–8 Very Good – only minor limitations in delivering the desired effect;

- 5–6 Good – moderate limitations in delivering the desired effect;

- 3–4 Poor – major limitations in delivering the desired effect;

- 1–2 Very Poor – severe limitations in delivering the desired effect; or,

- N/A Not applicable – not able to score based on this scale.

Unlike the MOP rating scale, the MOE scale did not admit the possibility of non-existent capability since it was tacitly assumed that any fighter aircraft would, by design, be capable of providing the effects critical to mission success, no matter how modestly.

In using the above scale, it was critical that the assessment teams justify their responses using the MOP assessments along with any additional pertinent information. They also needed to capture any assumptions or limitations encountered in the application of the method. However, there is no explicit link between the MOPs and the MOEs. This is by design as there are multiple combinations of MOPs that can be used to deliver the desired effects. For example, a fighter aircraft can have a high degree of lethality in a DCA context if it can shoot at threat aircraft before the threat aircraft has a good opportunity to shoot back. This can be the case if the fighter has low signatures in the RF and IR spectra (RF Signature and IR Signature MOPs), if it is equipped with long-range A/A missiles (A/A Weapons MOP), if it is fast enough and manoeuvrable enough to render the threat missiles ineffective (Engine/Airframe MOP), or a combination thereof. While there are less difficult cases to assess, this example illustrates why it was unreasonable to be overly prescriptive in how MOPs combine to contribute to MOEs.

There were evidently a large number of combinations of fighter mission scenarios, aerospace capabilities, MOEs, and aircraft that were assessed across two timeframes. To make the process easier, the assessors worked on a single mission on any given day. However, they avoided holding the aerospace capability and MOE constant while cycling through each of the assessed aircraft. This was a self-imposed restriction employed to avoid, to the extent possible, comparing the individual aircraft against one another.

The teams of assessors periodically reconvened as a larger group to compare their assessments. The individual teams were used to ensure that there was as wide a cross-section of opinion as possible being captured. The larger group was used to ensure consistency of assumptions and parameters amongst the groups. The goal of the larger group was to achieve consensus on the MOE evaluations. In the event that consensus could not be reached, differences in the SME judgments were documented.

In the case of the MOEs, the scores were used directly in follow-on assessments at the operational level, so it was also important to capture the nature of the spread in the scores when consensus could not be reached. For example, if the most appropriate score for survivability was somewhere between 4 and 8, then it is a fundamentally different situation from the one in which the score was either 4 or 8, but not anywhere in between. Knowing the nature of the uncertainty in the scores allowed the analysts to appropriately characterize this uncertainty using statistical distributions and carry it forward through the ensuing calculations at the operational level.
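The distinction drawn in the survivability example can be made concrete with a small sampling sketch. The source does not state which statistical distributions the analysts used, so the uniform distribution for the "anywhere between 4 and 8" case and the equal-weight choice for the "either 4 or 8" case are assumptions, as are the function names.

```python
import random


def sample_range(low, high, rng):
    """Score could lie anywhere between low and high.

    Modelled here (as an assumption) by a continuous uniform distribution.
    """
    return rng.uniform(low, high)


def sample_split(options, rng):
    """Score is one of a few distinct values, with nothing in between.

    Modelled here (as an assumption) by an equally weighted discrete choice.
    """
    return rng.choice(options)


rng = random.Random(0)  # fixed seed so the sketch is repeatable
range_draws = [sample_range(4, 8, rng) for _ in range(1000)]
split_draws = [sample_split([4, 8], rng) for _ in range(1000)]

# The two cases cover the same span but have very different shapes:
# the first fills the interval, the second only ever takes its end points.
print(min(range_draws), max(range_draws))
print(sorted(set(split_draws)))
```

Both sets of draws centre on roughly the same value, but the split case never produces a score near 6, which is why the nature of the disagreement needed to be recorded alongside the scores themselves.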

Outputs

The end result of this stage of the process was MOE assessments for each aircraft, across each Aerospace Capability, in the context of each Mission Scenario in both timeframes. Although not every Aerospace Capability is required in the fulfillment of each Mission Scenario, the number of combinations involved made this step in the evaluation method the most time consuming to complete. The output from these evaluations completed the assessment at the tactical level. The scores assigned to the MOEs served as key indicators of the unmitigated potential risks associated with each of the fighter options at the operational level.
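A rough sense of the size of this output can be obtained by enumerating the combinations for a single aircraft. The sketch below gives an upper bound only, since not every Aerospace Capability applies to every Mission Scenario. The scenario labels are assumptions based on the numbering used in Section 2 (Mission 1 is assumed to be included because only Missions 4 and 5a are noted as excluded), and the label for the earlier timeframe is a placeholder.

```python
from itertools import product

# Mission Scenario labels (assumed; Missions 4 and 5a are excluded per Section 2).
SCENARIOS = ["1", "2", "3", "5b", "5c", "6"]

# The seven Aerospace Capabilities defined in Section 2.
CAPABILITIES = [
    "DCA", "OCA", "Strategic Attack", "CAS", "Land Strike", "TASMO", "ISR",
]

# The six Measures of Effectiveness defined in Section 2.
MOES = [
    "Awareness", "Survivability", "Reach and Persistence",
    "Responsiveness", "Lethality", "Interoperability",
]

# Two timeframes; the first label is a placeholder, not taken from the source.
TIMEFRAMES = ["earlier timeframe", "2030+"]


def assessment_cells():
    """List every (scenario, capability, MOE, timeframe) combination."""
    return list(product(SCENARIOS, CAPABILITIES, MOES, TIMEFRAMES))


cells = assessment_cells()
print(len(cells))  # upper bound on MOE scores per aircraft
```

Under these assumptions the bound is 6 x 7 x 6 x 2 = 504 scores per aircraft before any inapplicable capability and scenario pairings are removed.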

Operational-Level Assessment

Assessing the fighter options at the operational level was accomplished in two steps. The first step is largely a mechanical process owing to the extensive body of work that was leveraged to perform it. The purpose of this first step was to aggregate the information from the MOE assessment to identify potential strengths and weaknesses associated with using the fighter options in each of the CFDS missions. The second step recasts the operational risk in the context of RCAF operations, applying measures to mitigate the risks identified in the first step. The process described in this section is part of the Task 5 work.

Identification of Potential Sources of Operational Risk

In general, risk is a function of the likelihood of an event occurring and the consequences of that event’s occurrence. Trying to apply this framework to the task at hand was difficult given the lack of specificity and the variability that exists within the CFDS missions. Instead, the first step in the operational risk assessment resulted from a fusion of Task 2 (Mission Needs) and Task 3 (Fighter Capability Analysis) outputs using a carefully selected set of rules that highlight each fighter option’s ability to meaningfully contribute to the Aerospace Capabilities required in the fulfillment of the CFDS mission set. By extension, any potential limitations in fighter capability were identified through this process. In this sense, this first step in the operational-level assessment is not a risk assessment in the strictest sense, but rather serves to inform the second step of this operational-level assessment of any potential operational limitations associated with each fighter option.

Inputs

In addition to the MOE assessments stemming from the tactical-level assessment and their associated inputs, this step in the assessment process required two additional inputs:

- Expected Fighter Usage: As part of the CBP effort, RCAF SMEs were asked to provide the anticipated distribution of effort (as percentages) across all applicable capability areas for all fleets, including fighter aircraft. The numbers provided spanned two time horizons that closely coincide with those used in this evaluation of options. The numbers for the fighter aircraft identify the percentage of fighter flying hours, exclusive of training, expected to be spent delivering each Aerospace Capability in each fighter Mission Scenario. The percentages for each fighter Mission Scenario must therefore sum to 100%. The numbers used in the evaluation of options were recently corroborated by DAR 5 staff.

- Fighter Criticality: The data generated during the CBP effort includes an assessment of the criticality of the Aerospace Capabilities in helping successfully execute the CFDS missions. This data was useful, but was not fighter specific. For example, ISR may be a critical capability in some missions, but that does not necessarily imply that it is critical for the fighter aircraft to perform ISR functions to achieve success in that mission. In order to help correct for this, staff from the Chief of Force Development (CFD) organization, the RCAF, and DRDC CORA assigned criticality to the fighter contribution in achieving Aerospace Capabilities for each Mission Scenario. The options available included:

  - Mission Critical: A fighter capability is Mission Critical if it delivers direct effect as part of its primary function and this effect is assessed as critical to mission success. Failure to employ this effect will pose severe risk to mission success.

  - Mission Essential: A fighter capability is Mission Essential if it is an essential enabler to Mission Critical Capabilities; the lack of one or more Mission Essential capabilities will pose risk to mission success.

  - Mission Routine: A fighter capability is Mission Routine if the effect it delivers is required for the mission but either has a routine supporting function or a very low likelihood of employment. Only in cases where multiple Mission Routine capabilities experience a systems failure will any significant risk be posed to the mission.

Personnel

Given the mechanical nature of this step in the process, only minimal personnel were required. Staff from DAR 5 and DRDC CORA used simple spreadsheet tools to perform the aggregation described below. The DAR staff provided the narratives to explain the outcomes of this step of the overall process.

Method

The method was applied in two sequential steps. The first step used the expected fighter usage and MOE assessments to derive operational scores for each fighter option against each Mission Scenario. The second step used this score together with the fighter criticality to expose any important weaknesses that may have been masked by the arithmetic performed in computing the operational scores. The output was dubbed a ‘raw operational risk’ to distinguish it from the mitigated risk identified in the following step as described in Section 4.2.

Obtaining the operational scores was a straightforward weighted average calculation. For each Mission Scenario, the operational score was calculated by weighting the MOE scores of the required Aerospace Capabilities by the expected fighter usage. An example is shown in Table 1. In the example, only DCA, OCA, TASMO, and ISR are required to execute Mission n. The MOE scores for a hypothetical Aircraft A are shown in the table, as are the expected fighter usage percentages. The operational score is computed as 8.0, owing primarily to the aircraft's strong DCA capability, which carries 70% of the weight. The interpretation of this result is similar to the ones seen previously, except that in this case the score provides a minimum for the raw operational risk as shown in Table 2 below. Based on this, the raw operational risk associated with using Aircraft A in executing Mission n is at least Medium.

| Aerospace Capability | DCA | OCA | Strat Attack | CAS | Land Strike | TASMO | ISR |
|---|---|---|---|---|---|---|---|
| MOE Score | 8 | 9 | n/a | n/a | n/a | 6 | 9 |
| Expected Fighter Usage | 70% | 10% | 0% | 0% | 0% | 10% | 10% |
| Operational Score | 8.0 (= 8 × 70% + 9 × 10% + 6 × 10% + 9 × 10%) | | | | | | |

| Operational Score | Minimum Raw Operational Risk |
|---|---|
| 9–10 | Low |
| 7–8 | Medium |
| 5–6 | Significant |
| 3–4 | High |
| 1–2 | Very High |
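The weighted-average calculation and the band lookup can be sketched in Python as follows. This is a minimal illustration, not the spreadsheet tool actually used. The function names, the data layout, and the treatment of non-integer scores (a score must reach the lower bound of a band to qualify for it) are assumptions. The numbers are those of the hypothetical Aircraft A example.

```python
# Illustrative sketch only; all names and the data layout are hypothetical.

# MOE scores and expected fighter usage (whole percent) for the hypothetical
# Aircraft A in Mission n. Capabilities that are not required (n/a, 0% usage)
# are left out.
moe_scores = {"DCA": 8, "OCA": 9, "TASMO": 6, "ISR": 9}
usage_pct = {"DCA": 70, "OCA": 10, "TASMO": 10, "ISR": 10}

# Lower bound of each score band and its minimum raw operational risk.
# Assumption: a score that falls between two bands (e.g. 8.5) takes the
# more severe rating, because it has not reached the next lower bound.
RISK_BANDS = [
    (9, "Low"),
    (7, "Medium"),
    (5, "Significant"),
    (3, "High"),
    (1, "Very High"),
]


def operational_score(scores, usage):
    """Weight each MOE score by its expected fighter usage percentage."""
    if sum(usage.values()) != 100:
        raise ValueError("usage percentages for a scenario must sum to 100")
    return sum(scores[cap] * pct for cap, pct in usage.items()) / 100


def minimum_raw_risk(score):
    """Map an operational score to its minimum raw operational risk."""
    for lower_bound, risk in RISK_BANDS:
        if score >= lower_bound:
            return risk
    raise ValueError("operational scores are expected to lie between 1 and 10")


score = operational_score(moe_scores, usage_pct)
risk = minimum_raw_risk(score)
print(score, risk)  # 8.0 Medium
```

Holding the usage figures as whole percentages keeps the arithmetic exact and makes the 100% check a simple integer comparison.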

The astute reader will notice at this point the escalation in the language used to describe deficiencies at this stage when compared to the MOP and MOE assessments. For example, an aircraft that has its effectiveness rated as “Good” will be expected to induce “Significant” risk to fighter operations. This type of escalation is not unusual in a military context where minor disadvantages in capability can make the task of winning an engagement much more difficult, if not impossible.

The second and final step in obtaining the raw operational risk was to combine the MOE scores and Fighter Criticality to see if the risk increases. Table 4 shows the same MOE data as Table 1, incorporating the fighter criticality information instead of the expected fighter usage. The two quantities are combined using the set of rules shown in Table 3, dubbed a ‘risk translation matrix.’ The matrix is meant to identify Aerospace Capabilities that may put the mission at risk, despite what the operational score may indicate. Using the risk translation matrix, it is seen that the risk associated with TASMO is Significant. The raw operational risk must therefore be Significant at minimum. Since this is the highest risk value obtained, the conclusion is that the risk associated with using Aircraft A in Mission n is Significant because of its inability to provide the desired effects in a mission critical capability.

Outputs

The output of this process was an assessment of unmitigated potential risks associated with using the fighter options to fulfill each of the Mission Scenarios in both timeframes. These raw operational risk assessments fed directly into the next step which determined operational risk in a more holistic sense.

| MOE | Fighter Criticality | Minimum Raw Operational Risk |
|---|---|---|
| Excellent | Mission Critical | Low |
| Very Good | Mission Critical | Medium |
| Good | Mission Critical | Significant |
| Poor | Mission Critical | High |
| Very Poor | Mission Critical | Very High |
| Excellent | Mission Essential | Low |
| Very Good | Mission Essential | Low |
| Good | Mission Essential | Medium |
| Poor | Mission Essential | Significant |
| Very Poor | Mission Essential | High |
| Excellent | Mission Routine | Low |
| Very Good | Mission Routine | Low |
| Good | Mission Routine | Low |
| Poor | Mission Routine | Low |
| Very Poor | Mission Routine | Medium |

| Aerospace Capability | DCA | OCA | Strat Attack | CAS | Land Strike | TASMO | ISR |
|---|---|---|---|---|---|---|---|
| MOE Score | 8 (VG) | 9 (Exc.) | n/a | n/a | n/a | 6 (Good) | 9 (Exc.) |
| Fighter Criticality | MC | MR | n/a | n/a | n/a | MC | ME |
| Min Op Risk | Med. | Low | n/a | n/a | n/a | Sig. | Low |

Exc. = Excellent, VG = Very Good; MC = Mission Critical, ME = Mission Essential, MR = Mission Routine; Sig. = Significant, Med. = Medium
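The criticality check for the Aircraft A example can be sketched in Python as a lookup in the risk translation matrix followed by taking the most severe result. This is an illustration under stated assumptions: the names and data layout are hypothetical, and the score-based minimum of Medium is taken from the worked example rather than recomputed here.

```python
# Illustrative sketch only; all names and the data layout are hypothetical.

RISK_ORDER = ["Low", "Medium", "Significant", "High", "Very High"]
MOE_RATINGS = ["Excellent", "Very Good", "Good", "Poor", "Very Poor"]

# Risk translation matrix: for each criticality level, the minimum raw
# operational risk for each MOE rating, in the order of MOE_RATINGS.
RISK_TRANSLATION = {
    "Mission Critical": ["Low", "Medium", "Significant", "High", "Very High"],
    "Mission Essential": ["Low", "Low", "Medium", "Significant", "High"],
    "Mission Routine": ["Low", "Low", "Low", "Low", "Medium"],
}


def translate(moe_rating, criticality):
    """Look up the minimum raw operational risk for one capability."""
    return RISK_TRANSLATION[criticality][MOE_RATINGS.index(moe_rating)]


def highest(risks):
    """Return the most severe risk level in a collection."""
    return max(risks, key=RISK_ORDER.index)


# MOE rating and fighter criticality per required capability for the
# hypothetical Aircraft A in Mission n.
assessments = {
    "DCA": ("Very Good", "Mission Critical"),
    "OCA": ("Excellent", "Mission Routine"),
    "TASMO": ("Good", "Mission Critical"),
    "ISR": ("Excellent", "Mission Essential"),
}

# Minimum implied by the operational score of 8.0 in the worked example.
score_based_minimum = "Medium"

per_capability = {cap: translate(*pair) for cap, pair in assessments.items()}
raw_operational_risk = highest([score_based_minimum, *per_capability.values()])

print(per_capability)
print(raw_operational_risk)  # Significant
```

Taking the maximum over both the score-based minimum and the per-capability results reflects the idea that a single weak mission critical capability can raise the raw operational risk above what the weighted average suggests.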

Section 4.2

As stated earlier, the output from the previous step did not truly represent a proper risk assessment. Two important considerations were missing, namely those of likelihood, as part of any risk assessment process, and risk mitigation,[8] which is an integral part of most risk management frameworks. Introducing likelihood generally helps distinguish catastrophic events that are extremely unlikely to occur from events with lesser potential consequences that are expected to occur more routinely. Risk mitigation is important to include as well, since it helps present operational risk in a manner that is more in keeping with how the RCAF employs its aircraft. Together, these considerations added detail to improve the fidelity of the operational risk assessments.

Inputs

The inputs to this step included the raw operational risk assessments from the previous step, along with all the inputs that were used to deduce them. In addition, since risk was examined from a more pan-RCAF perspective, any relevant documentation that describes how the CAF and RCAF conduct operations, such as Doctrine, Concepts of Operations, and Concepts of Employment, was consulted as required.

Personnel

A panel consisting of senior RCAF leaders with command and/or fighter experience performed this assessment. These SMEs needed to have relevant experience to connect the identified potential sources of risk to fighter operations. Moreover, their experience was necessary to properly frame the likelihood of the issues identified in the first step of operational risk assessment to prioritize them appropriately and decide what mitigation strategies were best suited to the identified issues. Staff from the RCAF facilitated this part of the process.

Method

To begin with, the panel considered each of the operational limitations identified in the previous step to assess the likelihood that the given operational scenario could arise and the consequence of it arising. These were then combined in the manner described in the DND Integrated Risk Management framework (see [17] and Table 5) to obtain an interim operational risk.

| Consequence (rows) / Likelihood (columns) | Rare | Unlikely | Possible | Likely | Almost Certain |
|---|---|---|---|---|---|
| Severe | Significant | High | High | Very High | Very High |
| Major | Medium | Significant | High | High | Very High |
| Medium | Low | Medium | Significant | Significant | High |
| Minor | Low | Low | Medium | Medium | Significant |
| Insignificant | Low | Low | Low | Medium | Medium |
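Combining likelihood and consequence amounts to a table lookup, which can be sketched in Python as follows. The cell values are transcribed from Table 5. The function name, the data layout, and the example limitation are hypothetical and not part of the DND framework itself.

```python
# Illustrative sketch only; all names and the data layout are hypothetical.

LIKELIHOODS = ["Rare", "Unlikely", "Possible", "Likely", "Almost Certain"]

# Risk for each consequence level, in the order of LIKELIHOODS.
RISK_MATRIX = {
    "Severe": ["Significant", "High", "High", "Very High", "Very High"],
    "Major": ["Medium", "Significant", "High", "High", "Very High"],
    "Medium": ["Low", "Medium", "Significant", "Significant", "High"],
    "Minor": ["Low", "Low", "Medium", "Medium", "Significant"],
    "Insignificant": ["Low", "Low", "Low", "Medium", "Medium"],
}


def interim_operational_risk(likelihood, consequence):
    """Combine a likelihood and a consequence into an interim risk level."""
    return RISK_MATRIX[consequence][LIKELIHOODS.index(likelihood)]


# Hypothetical example: a limitation judged Unlikely to arise but with
# Major consequence if it does.
example = interim_operational_risk("Unlikely", "Major")
print(example)  # Significant
```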

Next, mitigation strategies were considered.[9] Generally, risks can be mitigated by modifying, amongst other things, procedures, technology, budgets, schedules, or policy. In the present context, mitigation could involve modifications to the aircraft and their payloads, modifications to the way the aircraft are flown, or modifications to the way the RCAF conducts its operations (e.g. offloading some tasks onto other aircraft platforms). The assessors deliberated several possible risk mitigation strategies for each previously identified risk.

Next, the risks associated with each of these mitigation strategies were also assessed to ensure that the proposed mitigation strategies did not increase risk to the CAF. For example, one could propose overcoming an aircraft’s deficiencies by developing and integrating a new sensor pod. If the sensor pod is expensive, then the solution introduces financial risk, potentially making it difficult to achieve other DND/CAF priorities. As a second example, one could recommend allowing tanker aircraft to operate closer to the combat zone to reduce the risks associated with limitations in fighter reach and persistence. However, this simply transfers the risk from the fighters to the tankers, which are more vulnerable aircraft, and therefore may increase risk in an overall sense.

Investigating the effects of mitigating risks was a challenging, but highly beneficial, task. It allowed the assessors to remove some artificialities within the assessment framework which resulted from too rigid an interpretation of the mission scenarios or the aircraft configurations. In some cases, multiple risk mitigation strategies were considered viable options. The experience of the SMEs that performed this part of the assessment was crucial. Their acumen afforded them the ability to weigh the available options and select the most appropriate one to minimize the overall operational risk, including the option to leave the risk unmitigated.

Finally, the interim operational risk and the risk associated with the mitigation strategies were combined using the rules of thumb shown below as a guideline to give the final operational risk assessment. The end result depended on the nature of the linkages between the mitigation and interim operational risks. If the two types of risk were completely independent of one another, then the aggregate risk was obtained simply by taking the higher of the two, as shown in Table 6. If, on the other hand, the risks compounded one another, then Table 7 shows the aggregate risk. The only differences occur in the four cells where significant and/or high risks are combined.

| Overall Operational Risk: Mitigation Risk (rows) / Interim Operational Risk (columns) | Low | Medium | Significant | High | Very High |
|---|---|---|---|---|---|
| Very High | Very High | Very High | Very High | Very High | Very High |
| High | High | High | High | High | Very High |
| Significant | Significant | Significant | Significant | High | Very High |
| Medium | Medium | Medium | Significant | High | Very High |
| Low | Low | Medium | Significant | High | Very High |

| Overall Operational Risk: Mitigation Risk (rows) / Interim Operational Risk (columns) | Low | Medium | Significant | High | Very High |
|---|---|---|---|---|---|
| Very High | Very High | Very High | Very High | Very High | Very High |
| High | High | High | Very High | Very High | Very High |
| Significant | Significant | Significant | High | Very High | Very High |
| Medium | Medium | Medium | Significant | High | Very High |
| Low | Low | Medium | Significant | High | Very High |
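The two aggregation rules can be sketched in Python as follows. This assumes that the independent case is exactly "take the higher of the two" and that the compounding case is a direct lookup in Table 7. The names and data layout are hypothetical, and the source describes these rules as a guideline for the assessors rather than a fixed algorithm.

```python
# Illustrative sketch only; all names and the data layout are hypothetical.

RISK_ORDER = ["Low", "Medium", "Significant", "High", "Very High"]

# Compounding rule (Table 7): rows are keyed by mitigation risk, and each
# row lists the overall risk by interim operational risk in RISK_ORDER.
COMPOUNDING = {
    "Very High": ["Very High"] * 5,
    "High": ["High", "High", "Very High", "Very High", "Very High"],
    "Significant": ["Significant", "Significant", "High", "Very High", "Very High"],
    "Medium": ["Medium", "Medium", "Significant", "High", "Very High"],
    "Low": ["Low", "Medium", "Significant", "High", "Very High"],
}


def overall_risk(interim, mitigation, compounding=False):
    """Aggregate interim operational risk and mitigation risk."""
    if compounding:
        return COMPOUNDING[mitigation][RISK_ORDER.index(interim)]
    # Independent risks: take the higher of the two (Table 6).
    return max(interim, mitigation, key=RISK_ORDER.index)


# Cells (mitigation risk, interim risk) where the two rules disagree.
differing_cells = [
    (mitigation, interim)
    for mitigation in RISK_ORDER
    for interim in RISK_ORDER
    if overall_risk(interim, mitigation)
    != overall_risk(interim, mitigation, compounding=True)
]
print(differing_cells)
```

Enumerating the cells where the two rules disagree gives a quick consistency check against the statement that only the four Significant/High combinations differ.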