Chloramines in Drinking Water - Guideline Technical Document for Public Consultation

Consultation period ends January 25, 2019

Table of Contents

- Purpose of consultation

- Part I. Overview and Application

- 1.0 Proposed guideline

- 2.0 Executive summary

- 3.0 Application of the guideline

- Part II. Science and Technical Considerations

- 4.0 Identity, use and sources in the environment

- 5.0 Exposure

- 6.0 Analytical methods

- 7.0 Treatment technology and distribution system considerations

- 8.0 Kinetics and metabolism

- 9.0 Health effects

- 10.0 Classification and assessment

- 11.0 Rationale

- 12.0 References

- Appendix A: List of acronyms


Organization: Health Canada

Type: Consultation

Date published: 2018-11-23

Purpose of consultation

The available information on chloramines has been assessed with the intent of updating the current drinking water guideline and guideline technical document. The draft guideline technical document proposes that it is no longer considered necessary to establish a guideline for chloramines in drinking water. However, based on the use of chloramines in the disinfection of drinking water, a guideline technical document is still considered necessary.

The document is being made available for a 60-day public consultation period. The purpose of this consultation is to solicit comments on the proposed approach, as well as to determine the availability of additional exposure data. Comments are appreciated, with accompanying rationale, where required. Comments can be sent to Health Canada via email at HC.water-eau.SC@canada.ca. If this is not feasible, comments may be sent by mail to the Water and Air Quality Bureau, Health Canada, 269 Laurier Avenue West, A.L. 4903D, Ottawa, Ontario K1A 0K9. All comments must be received before January 25, 2019.

The existing guideline on chloramines, last updated in 1995, established a maximum acceptable concentration (MAC) of 3.0 mg/L (3,000 μg/L) based on reduction in body weight gain. This new document provides updated scientific data and information related to the health effects of chloramines, and proposes that it is no longer considered necessary to establish a guideline for chloramines in drinking water, based on recent studies that show a very low toxicity for monochloramine in drinking water. These studies also show that the health effect observed in earlier studies was due to a decreased water consumption related to taste aversion to monochloramine in drinking water.

Comments received as part of this consultation will be shared with members of the Federal-Provincial-Territorial Committee on Drinking Water (CDW), along with the name and affiliation of their author. Authors who do not want their name and affiliation to be shared with CDW should provide a statement to this effect along with their comments.

It should be noted that this guideline technical document on chloramines in drinking water will be revised following evaluation of comments received. This document should be considered as a draft for comment only.

Part I. Overview and Application

1.0 Proposed guideline

It is not considered necessary to establish a guideline for chloramines in drinking water, based on the low toxicity of monochloramine at concentrations found in drinking water. Any measures taken to limit the concentration of chloramines or their by-products in drinking water supplies must not compromise the effectiveness of disinfection.

2.0 Executive summary

The term "chloramines" refers to both inorganic and organic chloramines. This document focuses on inorganic chloramines, which consist of monochloramine, dichloramine and trichloramine. Unless specified otherwise, the term "chloramines" will refer to inorganic chloramines throughout the document.

Chloramines are found in drinking water mainly as a result of treatment, either intentionally as a disinfectant in the distribution system, or unintentionally as a by-product of the chlorination of drinking water in the presence of natural ammonia. As monochloramine is more stable and provides longer-lasting disinfection, it is commonly used in the distribution system, whereas chlorine is more effective at disinfecting water in the treatment plant. Chloramines have also been used in the distribution system to help reduce formation of common disinfection by-products such as trihalomethanes and haloacetic acids. However, chloramines also react with natural organic matter to form other disinfection by-products.

All drinking water supplies should be disinfected, unless specifically exempted by the responsible authority. Disinfection is an essential component of public drinking water treatment; the health risks associated with disinfection by-products are much less than the risks from consuming water that has not been adequately disinfected. Most Canadian drinking water supplies maintain a chloramine residual below 4 mg/L in the distribution system.

This guideline technical document focuses on the health effects related to exposure to chloramines in drinking water supplies, also taking into consideration taste and odour concerns. It does not review the benefits or the processes of chloramination, nor does it assess the health risks related to exposure to by-products formed as a result of the chloramination process. The Federal-Provincial-Territorial Committee on Drinking Water has determined that an aesthetic objective is not necessary, since levels commonly found in drinking water are within an acceptable range for taste and odour, and since protection of consumers from microbial health risks is paramount.

During its Fall 2017 meeting, the Federal-Provincial-Territorial Committee on Drinking Water reviewed the guideline technical document for chloramines and gave its endorsement for this document to undergo public consultation.

2.1 Health effects

The International Agency for Research on Cancer and the United States Environmental Protection Agency (U.S. EPA) have classified monochloramine as "not classifiable as to its carcinogenicity to humans" based on inadequate evidence in animals and in humans. The information on dichloramine and trichloramine is insufficient to establish any link with unwanted health effects in animals or in humans. These forms are also less frequently detected in drinking water. Studies have found minimal effects in humans and animals following ingestion of monochloramine in drinking water, with the most significant effect being decreased body weight gain in animals. However, this effect is due to reduced water consumption caused by taste aversion.

2.2 Exposure

Human exposure to chloramines primarily results from their presence in treated drinking water; monochloramine is usually the predominant chloramine. Intake of monochloramine and dichloramine from drinking water is not expected through either skin contact or inhalation. Intake of trichloramine from drinking water might be expected from inhalation; however, it is relatively unstable in water and is only formed in specific conditions (at very high chlorine to ammonia ratios or under low pH), which are unlikely to occur in treated drinking water. Consequently, exposure to chloramines via inhalation and skin contact during showering or bathing is expected to be negligible.

2.3 Analysis and treatment

Although there are no U.S. EPA-approved methods for the direct measurement of chloramines, there are several such methods for the measurement of total and free chlorine. The results from these methods can be used to calculate the levels of combined chlorine (or chloramines). However, it is also important to determine the levels of organic chloramines to avoid overestimating the disinfectant residual.
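The calculation described above can be sketched in a few lines of Python. This is a minimal illustration, not a validated method: the function name and the input checks are assumptions made for the example, and the result will include any organic chloramines present, which is why the text cautions against treating it as the disinfectant residual without further analysis.

```python
def combined_chlorine(total_mg_l: float, free_mg_l: float) -> float:
    """Estimate combined chlorine (mg/L as Cl2) as total minus free chlorine.

    Hypothetical helper for illustration. The result includes organic
    chloramines as well as inorganic chloramines.
    """
    if total_mg_l < 0 or free_mg_l < 0:
        raise ValueError("concentrations must be non-negative")
    if free_mg_l > total_mg_l:
        raise ValueError("free chlorine cannot exceed total chlorine")
    return total_mg_l - free_mg_l


# Example field readings (illustrative values only).
estimate = combined_chlorine(total_mg_l=2.4, free_mg_l=0.1)
print(f"Combined chlorine: {estimate:.2f} mg/L")
```

In practice, small negative differences can arise from measurement error near the detection limit; a production tool would likely handle that case more gently than raising an error.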

For municipal plants, a change in disinfectant (such as changing the disinfectant residual to chloramine) can impact water quality. When considering conversion to chloramine, utilities should assess the impacts on their water quality and system materials, including the potential for corrosion, nitrification and the formation of disinfection by-products.

Chloramines may be found in drinking water at the treatment plant, in the distribution system and in premise plumbing. For consumers who find the taste of chloramines objectionable, there are residential drinking water treatment devices that can decrease concentrations of chloramines in drinking water. However, removal of the disinfectant residual is not recommended.

2.4 International considerations

Drinking water quality guidelines, standards and/or guidance established by foreign governments or international agencies may vary due to the science available at the time of assessment, as well as the utilization of different policies and approaches, such as the choice of key study, and the use of different consumption rates, body weights and allocation factors.

Several organizations have set guidelines or regulations for chloramines in drinking water, all based on the same study which found no health effects at the highest dose administered.

The U.S. EPA has established a maximum residual disinfectant level of 4 mg/L for chloramine, recognizing the benefits of adding a disinfectant to water on a continuous basis and of maintaining a residual to control for pathogens in the distribution system. The World Health Organization and Australia's National Health and Medical Research Council have both established a drinking water guideline of 3 mg/L.

3.0 Application of the guideline

Note: Specific guidance related to the implementation of drinking water guidelines should be obtained from the appropriate drinking water authority in the affected jurisdiction.

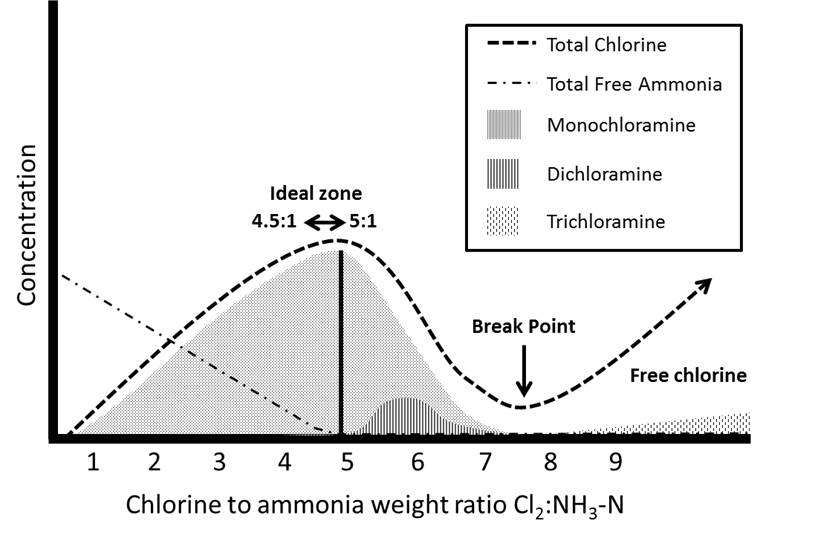

Chloramines are formed when chlorine and ammonia are combined in water and comprise three chemical species: monochloramine (NH2Cl), dichloramine (NHCl2) and trichloramine (NCl3). The relative amounts formed are dependent on numerous factors, including pH, chlorine:ammonia ratio (Cl2:NH3), temperature and contact time. When chloramines are used as a disinfectant in drinking water systems, the desired species is monochloramine. When treatment processes are optimized for monochloramine stability (Cl2:NH3 weight ratio of 4.5:1–5:1, pH >8.0), almost all of the chloramines are present as monochloramine. Since chloramines can also be formed when ammonia is present in source water, utilities should characterize their source water to assess the presence of and variability of ammonia levels. When utilities are considering conversion from chlorine to chloramine, they should assess the impacts on their water quality and system materials, including the potential for corrosion, nitrification and the formation of disinfection by-products.

Maintenance of an adequate disinfectant residual will minimize bacterial regrowth in the distribution system and provide a measurable level of chloramine; therefore, a drop in monochloramine level, suggesting unexpected changes in water quality, can be more quickly detected. Specific requirements for chloramine residual concentrations are set by the regulatory authority and may vary among jurisdictions. Monochloramine, used as a secondary disinfectant, should be applied so as to maintain a stable residual concentration throughout the distribution system. The appropriate amount of disinfectant needed to maintain water quality in the distribution system will depend on, among other factors, the characteristics of the distribution system, the species of bacteria, the presence of biofilms, the temperature, the pH and the amount of biodegradable material present in the treated water. Water utilities should be aware that a minimum target chloramine residual of "detectable" will not be sufficient to effectively limit bacterial growth in the distribution system. Regular monitoring of distribution system water quality (e.g., disinfectant residual, microbial indicators, turbidity, pH) and having operations and maintenance programs in place (water mains cleaning, cross-connection control, replacements and repairs) are important for ensuring that drinking water is transported to the consumer with minimum loss of quality.

Depending on the water system, chloramine residual concentrations of >1.0 mg/L may be required to maintain lower heterotrophic bacterial counts, to reduce coliform occurrences and to control biofilm development. Some utilities may require monochloramine concentrations much higher than this to address their specific distribution system water quality. Nitrification in the distribution system is also a potential problem for municipal systems that chloraminate. The concerns for utilities from nitrification are the depletion of the disinfectant residual, increased bacterial growth and biofilm development in the distribution system, as well as decreased pH, which can result in corrosion issues. When used as part of a program for nitrification prevention and control, suggested best operational practices for a chloramine residual are 2 mg/L leaving the treatment plant and preferably greater than 1.5 mg/L at all monitoring points in the distribution system. Information on strategies for controlling nitrification can also be found in the guideline technical document for ammonia.
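As a rough illustration of how the suggested nitrification-control targets above might be applied to monitoring data, the following Python sketch flags sampling points that fall short. The function name, site names and readings are hypothetical; the thresholds are the suggested operational values from the text, not regulatory limits, and the responsible authority may set different requirements.

```python
# Suggested best operational practice values from the text (mg/L).
PLANT_TARGET_MG_L = 2.0         # residual leaving the treatment plant
DISTRIBUTION_TARGET_MG_L = 1.5  # "preferably greater than" at monitoring points


def flag_low_residuals(plant_effluent: float, distribution: dict) -> list:
    """Return the names of sampling points that miss the suggested targets.

    Hypothetical helper: `distribution` maps a site name to its measured
    chloramine residual in mg/L.
    """
    flagged = []
    if plant_effluent < PLANT_TARGET_MG_L:
        flagged.append("plant effluent")
    for site, residual in distribution.items():
        # The text says "greater than 1.5 mg/L", so exactly 1.5 is flagged.
        if residual <= DISTRIBUTION_TARGET_MG_L:
            flagged.append(site)
    return flagged


# Illustrative readings only.
readings = {"reservoir outlet": 1.8, "dead end": 1.2}
print(flag_low_residuals(plant_effluent=2.1, distribution=readings))
```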

Most Canadian drinking water supplies maintain a chloramine residual below 4 mg/L in the distribution system. At these concentrations, taste and odour related to chloramines are generally within the range of acceptability for most consumers. Individual sensitivities in the population are widely variable, but generally, taste and odour complaints have resulted at levels of 3–3.7 mg/L monochloramine. Taste and odour concerns should be taken into account during the selection of operational and management strategies for the water treatment and distribution systems, although they do not make the water unsafe to consume. The protection of public health by maintaining the microbiological safety of the drinking water supply during distribution is the primary concern when using monochloramine for secondary disinfection.

Taste and odour issues can be indicators that operational changes may be required to address causal issues (e.g., water age, loss of monochloramine stability, formation of dichloramine). Utilities should establish operational targets for a disinfectant residual concentration appropriate for their system: one that allows them to meet their water quality objectives (i.e., microbial protection, minimal formation of disinfection by-products, nitrification prevention, biological stability and corrosion control).

Dialysis treatment providers at all levels (e.g., large facilities/hospitals, small community facilities, mobile units, providers for independent/home dialysis) should be notified that water is chloraminated.

3.1 Monitoring

Utilities using chloramine for secondary disinfection should, at minimum, monitor total chlorine residual daily in water leaving the treatment plant and throughout the distribution system. Disinfectant residual sampling should be conducted at the point of entry (baseline) and throughout the distribution system. This ensures that the target chloramine level is being applied at all times and provides a comparison for residual levels observed throughout the distribution system. Sample locations should be chosen to represent all areas of the distribution system. Key sampling points include the entry point to the distribution system (baseline), storage facilities, locations upstream and downstream of booster stations, areas of low flow or high water age, areas of various system pressures, mixed zones (blended water) and areas with various sizes and types of pipe material. Some utilities should also consider increasing the frequency of sampling during warmer months. Targeting more remote locations with fewer samples versus taking more samples at fewer locations can be a useful strategy for providing a more representative assessment of residual achieved and for detecting problem areas. Dedicated sampling taps are an ideal approach for residual sampling, and customer taps can be used as an alternative. In the absence of suitable tap access, hydrants can be used for residual sampling, although taking a meaningful hydrant sample can be challenging. Additional samples can be added for investigative purposes. Having operators well trained in the use of field testing methods for free and total chlorine will also be important for ensuring the accuracy of measurements.

Operational parameters (including finished water pH, free chlorine, free ammonia, temperature, total organic carbon and alkalinity) should be monitored at the treatment plant when using chloramines. It is also recommended that water leaving the treatment plant and throughout the distribution system be tested at least weekly for nitrite and ammonia. Nitrite should also be analyzed weekly at storage facilities and in areas of low flow and high water age to monitor for nitrification. Utilities should also monitor weekly for free ammonia at locations such as reservoir outlets and areas with long water detention times (e.g., dead ends). Changes in the trends of nitrification parameters in the distribution system (i.e., total chlorine residual, nitrite and nitrate) should trigger more frequent monitoring of free ammonia. Utilities that undertake comprehensive preventive measures and have baseline data indicating that nitrification does not occur in the system may conduct less frequent monitoring of free ammonia and nitrite. Heterotrophic plate count (HPC) monitoring on a monthly basis, at minimum, is also useful as a tool to assess system water quality.

More information on monitoring for nitrite, ammonia and HPCs can be found in the guideline technical documents on nitrate and nitrite and on ammonia and in the guidance document on the use of HPCs in Canadian drinking water supplies.

Part II. Science and Technical Considerations

4.0 Identity, use and sources in the environment

Chloramines are oxidizing compounds containing one or more chlorine atoms attached to a nitrogen atom. In the literature, the term "chloramine" refers to both inorganic and organic chloramines. Health effects of organic chloramines are beyond the scope of the present document and will not be discussed. Throughout the document, the term "chloramines" will refer only to inorganic chloramines, unless otherwise specified.

Inorganic chloramines consist of three chemically related compounds: monochloramine, dichloramine and trichloramine. Only monochloramine and dichloramine are readily soluble in water. The volatility varies depending on the compound, with trichloramine being the most volatile. Their physical properties are provided in the table below:

| Parameter | Monochloramine | Dichloramine | Trichloramine |
|---|---|---|---|
| Synonym | Chloramide (Footnote d) | Chlorimide (Footnote d) | Nitrogen trichloride (Footnote b) |
| CAS No. | 10599-90-3 | 3400-09-7 | 10025-85-1 |
| Molecular formula | NH2Cl | NHCl2 | NCl3 |
| Molecular weight (Footnote a) | 51.48 | 85.92 | 120.37 |
| Water solubility (Footnote a) | Soluble | Soluble | Limited to hydrophobic |
| Boiling point | 486°C (predicted) (Footnote e) | 494°C (predicted) (Footnote e) | NA |
| pK (Footnote a) | 14 ± 2 | 7 ± 3 | NA |
| Henry's Law constant, Kaw (estimated, at 25°C) (Footnote c) | 0.00271 | 0.00703 | 1 |
| Vapour pressure (at 25°C) | 1.55 × 10⁻⁷ Pa (Footnote c) | 8.84 × 10⁻⁸ Pa (Footnote c) | 19.99 kPa (Footnote b) |

Chloramines have been used for almost 90 years as disinfectants to treat drinking water. Although chloramines are less efficient than free chlorine in killing or inactivating pathogens, they generate less trihalomethanes (THMs) and haloacetic acids (HAAs). They are also more stable than free chlorine, thus providing longer disinfection contact time within the drinking water distribution system. Because of these properties, chloramines are mainly used as secondary disinfectants to maintain a disinfectant residual in the distribution system and are generally not used as primary disinfectants (White, 1992).

Of the three chloramines, monochloramine is the preferred species for use in disinfecting drinking water because of its biocidal properties and relative stability, and because it rarely causes taste and odour problems when compared with dichloramine and trichloramine (Kirmeyer et al., 2004).

As a secondary usage, monochloramine has been used for the organic synthesis of amines and substituted hydrazines used as pharmaceutical intermediates, while dichloramine has been used for the preparation of diazirine, a labelling reagent (Graham, 1965; Kirk-Othmer, 2004; El-Dakdouki, 2014). Trichloramine gas was previously used in the food industry for bleaching flour (agene method), but the practice was discontinued in 1950 in the United States and the United Kingdom due to adverse health effects seen in animals (Shaw and Bains, 1998). Other uses have included paper manufacturing and fungicide treatment for fruit (Kirk-Othmer, 2004).

Chloramines do not occur naturally (IARC, 2004). They may be intentionally produced or generated as by-products of drinking water chlorination, including in groundwater systems that undergo chlorination in the presence of natural ammonia, as well as in chlorinated wastewater effluents (WHO, 2004; Hach, 2017).

For disinfection purposes, chloramines are formed through a process called chloramination (U.S. EPA, 1999). Chloramination involves the addition of ammonia (NH3) to free aqueous chlorine (hypochlorous acid, HOCl). This mixture can lead to the formation of inorganic compounds, such as monochloramine, dichloramine, and trichloramine (NHMRC, 2011).

NH3 + HOCl → NH2Cl (monochloramine) + H2O

NH2Cl + HOCl → NHCl2 (dichloramine) + H2O

NHCl2 + HOCl → NCl3 (trichloramine) + H2O

Chloramine speciation mainly depends on the chlorine to ammonia ratio (Cl2:NH3) and the pH, but also depends on the temperature and the contact time (U.S. EPA, 1999). Cl2:NH3 ratios of ≤5:1 by weight (equivalent to ratios of ≤1:1 by mole) are optimum for monochloramine formation. The Cl2:NH3 ratio by weight is defined as the amount of chlorine added in proportion to the amount of ammonia added (in milligrams); all the Cl2:NH3 ratios presented in this document are reported by weight. Ratios between 5:1 and 7.6:1 favour dichloramine production, whereas trichloramine is produced at higher ratios. Neutral to alkaline conditions (pH 6.5–9.0) are optimum for monochloramine formation (monochloramine formation occurs most rapidly at a pH of 8.3), whereas acidic conditions are optimum for the formation of dichloramine (pH 4.0–6.0) and trichloramine (pH <4.4) (Kirmeyer et al., 2004).
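The equivalence between a 5:1 weight ratio and a 1:1 mole ratio, and the ratio ranges quoted above, can be checked with a short Python sketch. It assumes that the weight ratio is expressed as chlorine (Cl2) to ammonia-nitrogen (NH3-N), which is how the horizontal axis of Figure 1 is labelled; the function names are hypothetical. The classification considers the ratio only and ignores pH, temperature and contact time, which also affect speciation.

```python
CL2_MOLAR_MASS = 70.906  # g/mol, molecular chlorine
N_MOLAR_MASS = 14.007    # g/mol, nitrogen (ammonia expressed as NH3-N)


def weight_to_mole_ratio(weight_ratio: float) -> float:
    """Convert a Cl2:NH3-N weight ratio into a Cl2:N mole ratio."""
    return weight_ratio * N_MOLAR_MASS / CL2_MOLAR_MASS


def favoured_species(weight_ratio: float) -> str:
    """Name the chloramine favoured at a given weight ratio.

    Uses only the ratio ranges quoted in the text: up to 5:1 for
    monochloramine, 5:1 to 7.6:1 for dichloramine, higher for trichloramine.
    """
    if weight_ratio <= 5.0:
        return "monochloramine"
    if weight_ratio <= 7.6:
        return "dichloramine"
    return "trichloramine"


print(f"5:1 by weight is about {weight_to_mole_ratio(5.0):.2f}:1 by mole")
for ratio in (4.5, 6.0, 10.0):
    print(ratio, favoured_species(ratio))
```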

Under typical drinking water treatment conditions (pH 6.5–8.5) and with a Cl2:NH3 ratio of <5:1 (a ratio of 4:1 is typically accepted as optimal for chloramination), both monochloramine and dichloramine are formed with a much higher proportion of monochloramine (U.S. EPA, 1999). For example, when water is chlorinated with a Cl2:NH3 ratio of 5:1 at 25°C and pH 7.0, the proportions of monochloramine and dichloramine are 88% and 12%, respectively (U.S. EPA, 1994a). For its part, trichloramine can be formed in drinking water at pH 7.0 and 8.0, but only if the Cl2:NH3 ratio is increased to 15:1 (Kirmeyer et al., 2004). Thus, under usual water treatment conditions, monochloramine is the principal chloramine encountered in drinking water. Elevated levels of dichloramine and trichloramine in drinking water may occur, but would be due to variations in the quality of the raw water (e.g., pH changes) or accidental changes in the Cl2:NH3 ratio (Nakai et al., 2000; Valentine, 2007).

Chloramines (mono-, di- and trichloramines) can be found in media other than drinking water. In swimming pools, for example, they are disinfection by-products (DBPs) incidentally formed from the decomposition, via chlorination, of organic-nitrogen precursors, such as urea, creatinine and amino acids, originating from human excretions (e.g., sweat, feces, skin squama, urine) (Li and Blatchley, 2007; Blatchley and Cheng, 2010; Lian et al., 2014).

Chloramines are also formed when wastewater effluents or cooling waters are treated with chlorine (U.S. EPA, 1994a). In the food industry, they may result from the reaction between hypochlorite and nitrogen compounds coming from the proteins released by vegetables or animals (Massin et al., 2007). At home, chloramine fumes (a combination of monochloramine and dichloramine forming a noxious gas) can be produced when bleach and ammonia are accidentally mixed for cleaning purposes (Gapany-Gapanavicius et al., 1982).

4.1 Environmental fate

Because chloramines are found mainly in the aqueous phase, their fate in the environment will be governed by processes relevant to this medium.

The autodecomposition of monochloramine in water is affected by many factors (Wilczak et al., 2003b); its rate increases as the temperature, the inorganic carbon concentration and the Cl2:NH3 ratio increase, and as the initial chloramine concentration and the pH decrease. Vikesland et al. (2001), using decay experiments, reported that at pH 7.5, monochloramine has a half-life of over 300 h at 4°C, whereas the half-life decreases to 75 h at 35°C. Autodecomposition of aqueous monochloramine to dichloramine will occur by one of two pathways: hydrolysis and acid-catalyzed disproportionation, both of which are described in Wilczak et al. (2003b).
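To give a feel for what these half-lives mean over a typical residence time, the sketch below estimates the fraction of monochloramine remaining after a given time. It assumes simple first-order decay, which is a simplification made only for this illustration and is not stated in the source; the function name is hypothetical, and the 4°C case uses 300 h as a lower bound because the reported half-life is "over 300 h".

```python
def fraction_remaining(elapsed_h: float, half_life_h: float) -> float:
    """Fraction of the initial concentration left after `elapsed_h` hours.

    Assumes first-order decay (an illustrative simplification).
    """
    if half_life_h <= 0:
        raise ValueError("half-life must be positive")
    return 0.5 ** (elapsed_h / half_life_h)


# Half-lives at pH 7.5 reported by Vikesland et al. (2001).
HALF_LIFE_4C_H = 300.0   # lower bound: reported as "over 300 h"
HALF_LIFE_35C_H = 75.0

for label, half_life in (("4°C", HALF_LIFE_4C_H), ("35°C", HALF_LIFE_35C_H)):
    remaining = fraction_remaining(elapsed_h=75.0, half_life_h=half_life)
    print(f"{label}: {remaining:.0%} remaining after 75 h")
```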

When the pH is neutral, trichloramine in water will slowly decompose by autocatalysis to form ammonia and HOCl (U.S. EPA, 1994a). Trichloramine has limited solubility. Since it is extremely volatile, it will volatilize into air (U.S. EPA, 1994b; Environment Canada and Health Canada, 2001). By contrast, according to the physico-chemical properties listed in Table 1, mono- and dichloramine are very soluble in water and not very volatile.

4.1.1 Impact of chloramines on aquatic life

Chloramines enter the Canadian aquatic environment primarily through municipal wastewater release (73%) and drinking water release (14%), along with other minor sources (Pasternak et al., 2003). Release of chloraminated water (total chlorine = 2.53 mg/L and 2.75 mg/L) as a result of a drinking water main break reportedly caused two large fish kills in the Lower Fraser River watershed (Nikl and Nikl, 1992). To mitigate the impact of chlorine or chloramines, aquarium owners must ensure the use of proper aeration or chlorine/chloramine quenching (Roberts and Palmeiro, 2008).

4.2 Terminology

This section provides definitions for some relevant terms used in this document, as adapted from the American Water Works Association (AWWA, 1999; Symons et al., 2000):

- Total chlorine: all chemical species containing chlorine in an oxidized state; usually the sum of free and combined chlorine concentrations present in water;

- Free chlorine: the amount of chlorine present in water as dissolved gas (Cl2), hypochlorous acid (HOCl), and/or hypochlorite ion (OCl-) that is not combined with ammonia or other compounds in water;

- Combined chlorine: the sum of the species resulting from the reaction of free chlorine with ammonia (NH3), including inorganic chloramines: monochloramine (NH2Cl), dichloramine (NHCl2), and trichloramine (nitrogen trichloride, NCl3);

- Chlorine residual: the concentration of chlorine species present in water after the oxidant demand has been satisfied;

- Primary disinfection: the application of a disinfectant at the drinking water treatment plant, with a primary objective to achieve the necessary microbial inactivation; and

- Secondary disinfection: the subsequent application of a disinfectant, either at the exit of the treatment plant or in the distribution system, with the objective of ensuring that a disinfectant residual is present throughout the distribution system.

4.3 Chemistry in aqueous media

The objectives of chloramination are to maximize monochloramine formation, to minimize free ammonia, and to prevent excess dichloramine formation and breakpoint chlorination. Chloramine formation is governed by the reactions of ammonia (oxidized) and chlorine (reduced); its speciation is principally determined by the pH and the Cl2:NH3 weight ratio. The reaction rate of monochloramine formation depends on the pH, the temperature and the Cl2:NH3 weight ratio. Ideally, a weight ratio of 4.5:1–5:1 will help minimize free ammonia and reduce the risk of nitrification (AWWA, 2006b).

The breakpoint chlorination curve can be used to illustrate the ideal weight ratio where monochloramine production can be maximized. For a utility wishing to produce monochloramine, the breakpoint ratio should be determined experimentally for each water supply (Hill and Arweiler, 2006). Figure 1 below shows an idealized breakpoint curve that occurs between pH 6.5 and 8.5 (Griffin and Chamberlin, 1941; Black and Veatch Corporation, 2010). Initially, monochloramine is formed, and once the Cl2:NH3 weight ratio is greater than 5:1, monochloramine formation decreases, because no free ammonia is available to react with the free chlorine being added. The reaction of free chlorine with monochloramine leads to the formation of dichloramine. When high enough Cl2:NH3 weight ratios are achieved, breakpoint chlorination will occur. The breakpoint curve is characterized by the “hump and dip” shape (Figure 1). Dichloramine undergoes a series of decomposition and oxidation reactions to form nitrogen-containing products, including nitrogen, nitrate, nitrous oxide gas and nitric oxide (AWWA, 2006b). Trichloramine, or nitrogen trichloride, is an intermediate during the complete decomposition of chloramines. Its formation depends on the pH and the Cl2:NH3 weight ratio and may appear after the breakpoint (Kirmeyer et al., 2004; Hill and Arweiler, 2006; Randtke, 2010).

After breakpoint, free chlorine is the predominant chlorine residual, not monochloramine. However, the reaction rate of breakpoint chlorination is determined by the formation of monochloramine and the formation and decay rates of dichloramine and trichloramine, reactions that are highly dependent on pH. The theoretical Cl2:NH3 weight ratio for breakpoint chlorination is 7.6:1; however, the actual Cl2:NH3 ratio varies from 8:1 to 10:1, depending on the pH, the temperature and the presence of reducing agents. The presence of iron, manganese, sulphide and organic compounds creates a chlorine demand; i.e., they compete with the free chlorine added, potentially limiting the chlorine available to react with ammonia (Kirmeyer et al., 2004; AWWA, 2006b; Muylwyk, 2009). It is therefore important that each utility generates a site-specific breakpoint curve, experimentally. Additionally, periodic switching to chlorine (breakpoint chlorination) for seasonal nitrification control has also been observed to increase DBPs such as trihalomethanes (chloroform) (Vikesland et al., 2006; Rosenfeldt et al., 2009). However, Rosenfeldt et al. (2009) also observed that flushing was an effective mitigation strategy.
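One common way to arrive at the theoretical 7.6:1 figure is from the overall stoichiometry of breakpoint chlorination. The derivation below is a sketch under two assumptions that are not stated in the text: that nitrogen gas is the only nitrogen end product, and that the weight ratio is expressed as Cl2 to ammonia-nitrogen (NH3-N). Each mole of HOCl is counted as one mole of Cl2 dosed.

```latex
\begin{align*}
2\,\mathrm{NH_3} + 3\,\mathrm{HOCl} &\rightarrow \mathrm{N_2} + 3\,\mathrm{HCl} + 3\,\mathrm{H_2O} \\
\frac{\text{mol Cl}_2}{\text{mol N}} &= \frac{3}{2} = 1.5 \\
\frac{\text{mg Cl}_2}{\text{mg NH}_3\text{-N}} &= 1.5 \times \frac{70.906\ \text{g/mol}}{14.007\ \text{g/mol}} \approx 7.6
\end{align*}
```

Side reactions that form nitrate or other nitrogen products, together with chlorine demand from reducing agents, consume additional chlorine, which is consistent with the higher ratios of 8:1 to 10:1 observed in practice.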

Automated systems can be used to monitor and maintain the weight ratio. Hutcherson (2007) reported that the application of programmable logic control and a human-to-machine interface resulted in an intuitive and effective dosing control strategy for Newport Beach, California. The 5:1 ideal weight ratio was maintained and monitored 24 h/day. At the time of the report, the system had been operating successfully for 2 years.

Figure 1 - Text Description

A graph that shows the idealized breakpoint chlorination curve for achieving maximum monochloramine formation while also minimizing free ammonia. The vertical axis shows concentration with no units and the horizontal axis shows a scale of chlorine to ammonia weight ratios (Cl2:NH3-N) ranging from 1 to 9. The graph shows that at a weight ratio ranging from 4.5:1 to 5:1 (the ideal zone), monochloramine formation and total chlorine are at a maximum while free ammonia reaches a concentration of zero. As the weight ratio increases, dichloramine formation begins increasing after a ratio of about 5.2:1, reaches a maximum at about 5.8:1 and drops to zero at about 7.2:1, where monochloramine formation also reaches zero. At a ratio of about 7.7:1, breakpoint chlorination is depicted with the total chlorine curve reaching its minimum concentration. Beyond breakpoint chlorination and beyond a ratio of 9:1, both total chlorine and trichloramine formation steadily increase.

4.4 Application to drinking water treatment

This document focuses on the health effects related to exposure to chloramine in drinking water supplies, whether formed intentionally as a secondary disinfectant, or unintentionally as a by-product of chlorination. It does not review the benefits or the processes of chloramination, nor does it assess the health risks related to exposure to by-products formed as a result of the chloramination process.

4.4.1 Chloramines in water treatment

The mechanisms by which monochloramine inactivates microbiological organisms are not fully understood (Jacangelo et al., 1991; Coburn et al., 2016). It is suggested that free chlorine and chloramines react with different functional groups in the cell membrane (LeChevallier and Au, 2004). The proposed mode of action of monochloramine is the inhibition of such protein-mediated processes as bacterial transport of substrates, respiration and substrate dehydrogenation (Jacangelo et al., 1991; Coburn et al., 2016). Experiments with bacteria indicated monochloramine was most reactive with sulphur-containing amino acids (LeChevallier and Au, 2004; Rose et al., 2007). Monochloramine did not severely damage the cell membrane or react strongly with nucleic acids. Monochloramine is a more selective reactant than free chlorine and seems to act in more subtle ways at drinking water concentrations (Jacangelo et al., 1991). Inactivation with monochloramine appears to require reactions at multiple sensitive sites (Jacangelo et al., 1991). More research is needed for a better understanding of the process behind chloramine disinfection.

4.4.2 Primary disinfection

Primary disinfection is the application of a disinfectant in the drinking water treatment plant, with the primary objective of achieving the necessary microbial inactivation.

Monochloramine is much less reactive than free chlorine, has lower disinfecting power and is generally not used as a primary disinfectant because it requires extremely high CT values (see Footnote 1) to achieve the same level of inactivation as free chlorine (Jacangelo et al., 1991, 2002; Taylor et al., 2000; Gagnon et al., 2004; LeChevallier and Au, 2004; Rose et al., 2007; Cromeans et al., 2010).
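The CT concept can be illustrated with a short calculation. The sketch below assumes the conventional definition of CT as the product of the disinfectant residual concentration (mg/L) and the contact time (min); the function names and the numerical inputs are hypothetical and are not CT requirements taken from this document.

```python
def ct_value(residual_mg_l: float, contact_time_min: float) -> float:
    """CT (mg·min/L) = residual concentration (mg/L) x contact time (min)."""
    if residual_mg_l < 0 or contact_time_min < 0:
        raise ValueError("inputs must be non-negative")
    return residual_mg_l * contact_time_min


def contact_time_needed(ct_required: float, residual_mg_l: float) -> float:
    """Contact time (min) needed to reach a required CT at a given residual."""
    if residual_mg_l <= 0:
        raise ValueError("residual must be positive")
    return ct_required / residual_mg_l


if __name__ == "__main__":
    # Hypothetical numbers, chosen only to show the arithmetic.
    print(ct_value(2.0, 30.0))              # CT achieved by 2 mg/L held for 30 min
    print(contact_time_needed(100.0, 2.0))  # minutes needed for a CT of 100 at 2 mg/L
```

Because the required CT for monochloramine is much higher than for free chlorine, the same residual implies a proportionally longer contact time, which is why monochloramine is generally impractical for primary disinfection.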

4.4.3 Secondary disinfection

Secondary disinfection may be applied to the treated water as it leaves the treatment plant or at rechlorination points throughout the distribution system, to introduce and maintain a disinfectant residual in the drinking water distribution system.

The main function of the residual is to protect against microbial regrowth (LeChevallier and Au, 2004). The disinfectant residual can also serve as a sentinel for water quality changes. A drop in residual concentration can provide an indication of treatment process malfunction, inadequate treatment or a break in the integrity of the distribution system (LeChevallier, 1998; Haas, 1999; Health Canada, 2012a, 2012b).

Monochloramine is slower to react and, in treated drinking water, can provide a more stable and longer-lasting disinfectant residual in the distribution system (Jacangelo et al., 1991; U.S. EPA, 1999; LeChevallier and Au, 2004; Cromeans et al., 2010). However, combined chlorine residuals are less functional as sentinels of potential post-treatment contamination events than free chlorine residuals. Declines in combined chlorine measurements may not always be large enough or rapid enough to alert utilities that a contamination problem has occurred within the distribution system (Snead et al., 1980; Wahman and Pressman, 2015). Also, a drop in residual may be due to nitrification and not post-treatment contamination (Wahman and Pressman, 2015). Disinfectant residual monitoring should be conducted alongside other parameters as part of broader programs for microbiological quality and nitrification.

Organic chloramines provide little to no disinfection (Feng, 1966; Donnermair and Blatchley, 2003). In bench-scale experiments, Lee and Westerhoff (2009) demonstrated that organic chloramines comprise a greater proportion of the chlorine residual in systems where chloramines are formed by adding ammonia after a 10-min contact time with free chlorine than in those where preformed monochloramine is used. Organic chloramines formed more rapidly in the presence of free chlorine than in the presence of monochloramine. However, as it is common practice to provide short chlorine contact time followed by ammonia addition, the risk of generating organic chloramines is an important consideration.

4.4.4 Formation of chloraminated disinfection by-products

Chloramines are often used to meet DBP compliance based on HAAs and THMs; however, chloramines also react with natural organic matter (NOM) to form other DBPs such as iodinated disinfection by-products (I-DBPs) and nitrosamines (Richardson and Ternes, 2005; Charrois and Hrudey, 2007; Hua and Reckhow, 2007; Richardson et al., 2008; Nawrocki and Andrzejewski, 2011). Hydrazine can also form as a result of abiotic reactions of ammonia and monochloramine (Najm et al., 2006).

I-DBPs are more readily formed in chloraminated systems. Monochloramine oxidizes iodide to hypoiodous acid quickly, but the reaction with NOM is slow, allowing sufficient time for the formation of I-DBPs (Singer and Reckhow, 2011). Chlorine and ozone can also oxidize iodide to hypoiodous acid; however, the iodide is further oxidized to iodate, forming only trace to minimal amounts of I-DBPs (Hua and Reckhow, 2007). In bench-scale formation experiments using simulated raw water, Pan et al. (2016) found that chloraminated water formed more polar I-DBPs than water treated with either chlorine dioxide or chlorine. The authors also noted that as pH increased (from 6 to 9), the formation of polar I-DBPs decreased. Water quality factors such as pH and ratios of dissolved organic carbon, iodide and bromide have been demonstrated to play an important role in determining the species and abundance of iodinated trihalomethanes formed under drinking water conditions (Jones et al., 2012).

N-Nitrosodimethylamine (NDMA) is a nitrogen-containing DBP that may be formed during the treatment of drinking water, particularly during chloramination and, to a lesser extent, chlorination (Richardson and Ternes, 2005; Charrois and Hrudey, 2007; Nawrocki and Andrzejewski, 2011). The key to controlling the formation of NDMA lies in limiting its precursors, including dichloramine; thus optimization and control of free ammonia are important elements in preventing NDMA formation (Health Canada, 2011). Additionally, Krasner et al. (2015) demonstrated that several pre-oxidation technologies were effective in destroying the watershed-derived NDMA precursors (ozone > chlorine > medium pressure UV > low pressure UV > permanganate). Uzen et al. (2016) observed that site-specific factors such as upstream reservoirs, wastewater discharge, and mixing conditions can affect NDMA formation potential and should be characterized for each individual site. More detailed descriptions of precursors and treatment options can be found in those reports (Krasner et al., 2015; Uzen et al., 2016). Cationic polymers containing diallyldimethylammonium chloride, used in water treatment, can also act as a source of NDMA precursors (Wilczak et al., 2003a).

Under certain conditions, hydrazine can form through a reaction between ammonia and monochloramine. Najm et al. (2006) found that at low concentrations of free ammonia-nitrogen (<0.5 mg/L) and at pH <9, less than 5 ng/L of hydrazine was formed, but that increasing either ammonia concentrations or pH also increased hydrazine formation. Davis and Li (2008) obtained 13 samples from six chloraminated drinking water utilities and found hydrazine above the detection limit of 0.5 ng/L in 7 of the samples (0.53–2.5 ng/L). Hydrazine production was found to be greatest where treatment processes had high pH (i.e., lime softening). Several treatment practices were identified to minimize hydrazine production, including delaying chloramination until the pH was adjusted (i.e., recarbonation step) and managing the Cl2:NH3 ratio to minimize the free ammonia concentration (Najm et al., 2011).

4.4.5 Taste and odour considerations

The flavour profile of chloramines has been described as chlorine-like, musty and/or chalky, ammonia-like, salty, sour and bitter, with a soapy-slimy feel and a dry-mouth sensation. Trichloramine has also been described as having a geranium-like odour (White, 1972). Consumer concerns regarding chloramines in drinking water are often related to taste and odour issues, although the taste and odour of chloramines are generally less noticeable and less offensive to consumers than those of free chlorine. The principal chloramine species, monochloramine, normally does not contribute significantly to the objectionable taste and odour of drinking water when present at concentrations of less than 5 mg/L (Kirmeyer et al., 2004). Di- and trichloramines are more likely to cause complaints, especially if they comprise more than 20% of the chloramine concentration in the drinking water (Mallevialle and Suffett, 1987).

Several studies conducted with panels or volunteers to determine the taste and odour thresholds of chloramines in water showed that taste and odour were highly subjective. Krasner and Barrett (1984) used linear regression of data compiled from a trained panel of moderate- to highly-sensitive individuals to derive a taste threshold of 0.48 mg/L and an odour threshold of 0.65 mg/L for monochloramine. Only the most sensitive panelists could detect monochloramine in the range of 0.5–1.5 mg/L (Krasner and Barrett, 1984). By contrast, a taste threshold of 3.7 mg/L was determined using untrained volunteers from the public (Mackey et al., 2004). Similarly, White (1992) found that concentrations of 3 mg/L and even 5 mg/L of monochloramine in drinking water were unlikely to cause taste and odour complaints. Lubbers and Bianchine (1984) found a wide variability in individual perception of chloramine taste. Although a dose of 24 mg/L was slightly (6/10) to very (2/10) unpleasant to most volunteer test subjects (n = 10), one subject could not detect a taste and another did not find it objectionable.

By contrast, the presence of dichloramine and trichloramine was detected at much lower concentrations. Krasner and Barrett (1984) determined the taste and odour thresholds for dichloramine to be 0.13 mg/L and 0.15 mg/L, respectively. Taste and odour problems were not expected when dichloramine was below 0.8 mg/L (White, 1992). However, Krasner and Barrett (1984) considered 0.5 mg/L a better cutoff, since objectionable taste and odour were noted at 0.9–1.3 mg/L and, to a lesser degree, at 0.7 mg/L. A similarly low odour threshold concentration of 0.02 mg/L was reported for trichloramine (White, 1972).

Taste and odour complaints should be monitored and tracked. Utilities can address taste and odour issues through a variety of operational strategies to address water age, disinfection demand, hydraulic issues (such as dead ends and low-flow areas), bacteria growth and dosing issues (Kirmeyer et al., 2004).

Optimizing treatment for monochloramine production reduces the potential to form dichloramine and trichloramine, resulting in water with the least flavour. Reactions of chloramines with organic compounds in water can form by-products that also cause tastes and odours.

Operational strategies to reduce tastes and odours include treating the water to remove taste and odour precursors, flushing the distribution system and reducing the detention time and water age in the distribution system. When using monochloramine for secondary disinfection the primary concern is the protection of public health by maintaining the microbiological safety of the drinking water supply during distribution.

Available studies (see Section 9.0) and surveys have not indicated evidence of adverse health effects associated with exposure to monochloramine at concentrations used in drinking water disinfection. Although levels commonly found in drinking water are within an acceptable range for taste and odour, individual sensitivities regarding the acceptability of water supplies can be varied. In addition, where elevated chloramine concentrations are required in order to maintain an effective disinfectant residual throughout the distribution system, the median taste thresholds may be exceeded. Therefore it is important that utilities contemplating a conversion to monochloramine remain aware of the potential for taste and odour concerns during the selection of operational and management strategies.

Communication with consumers is a key part of assessing and promoting the acceptability of drinking water supplies with the public. Consumer feedback on drinking water quality is an important source of data for utilities to assess the quality of drinking water distributed to residences and businesses. Evaluation of consumer acceptability and knowledge of consumer complaints are also recognized as important components of water quality verification under a Water Safety Plan approach to drinking water delivery. Guidance material to help water utilities develop programs for communication and consumer feedback is available elsewhere (Whelton et al., 2007).

5.0 Exposure

No environmental data were found for inorganic chloramines in sediments, soils and ambient air (Environment Canada and Health Canada, 2001). As a result, drinking water is considered the primary source of exposure for this assessment.

5.1 Water

Chloramines are usually measured as combined chlorine residuals, corresponding to the difference between total chlorine residual and free chlorine residual. This method has limitations, because the combined chlorine value does not determine the individual concentrations of mono- and dichloramines present in drinking water, and because the free chlorine measurement is not always accurate in the presence of high levels of chloramines. Individual chloramines can be differentiated using multi-stage procedures, but interferences such as organic chloramines may in some cases result in misleading measurements (e.g., overestimation of monochloramine concentrations) (Lee et al., 2007; Ward, 2013) (see Section 6 for additional information).

Limited provincial data are reported in Table 2. Generally, levels of chloramines or combined chlorine are below 3 mg/L; only a few values exceeding 3 mg/L were reported.

The City of Ottawa (2017) uses chloramines for secondary disinfection, and results from 2016 show an average monochloramine residual of 1.62 mg/L (range 1.21–2.12 mg/L) for the Britannia Water Purification Plant (WPP) and 1.56 mg/L (range 1.21–2.03 mg/L) for the Lemieux Island WPP. The remaining chloramines measured (i.e., total of di- and trichloramines) only make up a small portion of the chloramine residual for each of the two treatment plants (averages of 0.06 mg/L and 0.12 mg/L, respectively).

Values are reported as range: min–max (no. of non-detects/total no. of samples).

| Province (Year) | # of sites | Total chlorine | Free chlorine | Combined chlorine | Chloramines | Monochloramine | Dichloramine | Trichloramine |
|---|---|---|---|---|---|---|---|---|
| NS (Footnote e) (2008–2015) | 39 | — | — | — | <0.00002–1.75 mg/L (1/81) | <0.1–0.1 mg/L (0/9) | <0.1 (0/9) | <0.1–0.4 mg/L (0/9) |
| QC (Footnote f) (2013–2015) | 3 (Footnote a) | — | — | — | 0.07–1.80 mg/L (0/7) | — | — | — |
| QC (Footnote f, Footnote j) (2013–2015) | 5 (Footnote b) | 0.01–4.6 mg/L (0/3432) | 0–4.2 mg/L (544/3432) | — | 0–3.24 mg/L (9/3432) | — | — | — |
| QC (Footnote f) (2013–2015) | 11 (Footnote c) | 0–7.05 mg/L (8/2924) | 0–5.5 mg/L (239/2924) | — | 0–2.47 mg/L (8/2924) | — | — | — |
| ON (Footnote g) (2012–2017) | 108 | — | — | 0–2.64 mg/L (2/1500) | — | — | — | — |
| SK (Footnote d, Footnote h, Footnote j) (2006–2015) | 18 | 0.62–3.24 mg/L (0/25) | 0.01–1.18 mg/L (0/24) | — | 0.01–3.9 mg/L (0/28) | 1.28–3.11 mg/L (0/24) | — | — |
| BC (Footnote i) (2015) | 37 | 0.01–1.86 ppm (10/1923) | 0–0.97 ppm (10/1918) | — | — | 0.6–1.51 mg/L (0/51) | 0–0.7 µg/L (4/51) | — |
| BC (Footnote i) (2016) | 37 | 0.03–1.89 ppm (0/1931) | 0–0.93 ppm (11/1929) | — | — | 0–1.3 mg/L (1/51) | 0–0.61 µg/L (2/50) | — |

5.2 Air

Chloramines may be encountered in the ambient air of food industry facilities that typically use large quantities of disinfecting products. For example, total concentrations of chloramines (mainly trichloramine) have been reported in the ambient air of green salad processing plants (e.g., 0.4–16 mg/m3; Hery et al., 1998) and of turkey processing plants (e.g., 0.6–1 mg/m3 average concentrations; Kiefer et al., 2000).

5.3 Swimming pools and hot tubs

Chloramines are present in indoor swimming pools as DBPs of chlorination. Due to its high volatility and low solubility, trichloramine is the predominant species present in the swimming pool atmosphere. Numerous papers report trichloramine concentrations in the atmosphere of indoor swimming pools (including water parks), with mean concentrations ranging from approximately 114 µg/m3 to 670 µg/m3 (Carbonnelle et al., 2002; Thickett et al., 2002; Jacobs et al., 2007; Dang et al., 2010; Parrat et al., 2012). The levels of airborne trichloramine are influenced by such factors as number of swimmers, organic compounds (mainly urine and sweat) introduced into the water by swimmers, air ventilation, as well as water temperature, circulation and movement (splashing, waves, etc.) (Carbonnelle et al., 2002; Jacobs et al., 2007; Parrat et al., 2012).

5.4 Multiroute exposure through drinking water

Mono- and dichloramine do not possess the physico-chemical characteristics to consider their contribution via the dermal or inhalation route, since both are water soluble but not volatile (see Table 1). Conversely, trichloramine is very volatile and not soluble in water. In addition, trichloramine is relatively unstable in water and is only formed beyond breakpoint (see Figure 1) or under low pH, conditions that are unlikely to occur in treated drinking water. Therefore under normal usage conditions, the ratio of trichloramine to total chloramines is very low and would not contribute significantly to the dermal or inhalation routes of exposure. Consequently, exposure to chloramines via inhalation and dermal routes during showering or bathing is expected to be negligible.

6.0 Analytical methods

There are no U.S. EPA approved methods for the direct measurement of chloramines. There are, however, several U.S. EPA approved methods for the measurement of total and free chlorine (Table 3). Free chlorine is the sum of chlorinated species that do not contain ammonia or organic nitrogen (i.e., Cl2, HOCl, OCl-, and Cl3-), whereas combined chlorine is the sum of chlorine species that are combined with ammonia (NH2Cl, NHCl2, and NCl3) (Black and Veatch Corporation, 2010). Since total chlorine is often used as an assumed proxy for combined chlorine (chloramines), it is important that free chlorine be measured to validate the assumption that none is present. Although there is no method for directly measuring chloramines, equation 1 below shows how chloramines can be determined by subtracting free chlorine from total chlorine:

Combined chlorine (chloramines) = Total chlorine – Free chlorine (1)
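Equation 1 can be sketched in a few lines of code. The function name is hypothetical, and the input check simply reflects that a free chlorine reading above the total chlorine reading points to a measurement problem rather than a meaningful negative result.

```python
def combined_chlorine(total_mg_l: float, free_mg_l: float) -> float:
    """Equation 1: combined chlorine = total chlorine - free chlorine (mg/L)."""
    if total_mg_l < 0 or free_mg_l < 0:
        raise ValueError("chlorine readings must be non-negative")
    if free_mg_l > total_mg_l:
        # Free chlorine cannot exceed total chlorine; the readings should
        # be repeated rather than reported as a negative combined residual.
        raise ValueError("free chlorine exceeds total chlorine; re-measure")
    return total_mg_l - free_mg_l


if __name__ == "__main__":
    # Hypothetical field readings in mg/L.
    print(round(combined_chlorine(2.1, 0.1), 2))
```

The result is only as reliable as the two measurements it is derived from, so the interferences listed for each analytical method still apply.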

When using the DPD colorimetric test, it is important to ensure that field staff are well trained to perform both free and total chlorine measurements. This ensures that false positive results are not inadvertently reported (Spon, 2008). Users should consult with the manufacturer regarding method interferences, interfering substances and any associated corrective steps that may be necessary. Although they are not approved by the U.S. EPA for direct measurement of chloramine species, several methods (SM 4500-Cl D, SM 4500-Cl F and SM 4500-Cl G) have additional steps (beyond total, free and combined chlorine) that can be used to distinguish between the various chloramine species. Both dichloramine and trichloramine are relatively unstable, and their formation reactions do not proceed to completion under typical drinking water conditions (Black and Veatch Corporation, 2010). Specific instructions for mitigating effects of interfering agents (including interference from other chlorine species), optimal analytical performance (including the use of reagent blanks), and reaction times for accurate sample readings are available in the method documents.

| Method (Reference) | Methodology | Residual measured (MDL) | Comments |
|---|---|---|---|
| ASTM D1253 (ASTM, 1986, 2003, 2008, 2014) | Amperometric titration | Total, free, combined (NA) | Reaction is slower at pH >8 and requires buffering to pH 7. A maximum concentration of 10 mg/L is recommended. Interferences include cupric, cuprous and silver ions; trichloramine; some N-chloro compounds; chlorine dioxide; dichloramine; ozone; peroxide; iodine; bromine; ferrate; Caro's acid. |
| SM 4500-Cl D (APHA et al., 1995, 1998, 2000, 2005, 2012) | Amperometric titration | Total, free, combined (NA) | Dilutions are recommended for concentrations above 2 mg/L. Interferences are trichloramine, chlorine dioxide, free halogens, iodide, organic chloramines, copper, silver. Monochloramine can interfere with free chlorine, and dichloramine can interfere with monochloramine. Method can also be used to characterize species (monochloramine and dichloramine). |
| SM 4500-Cl G (colorimetric); SM 4500-Cl F (ferrous) | N,N-diethyl-p-phenylenediamine (DPD) | Total, free, combined (each method) | Interferences include oxidized manganese, copper, chromate, iodide, organic chloramines. Method can also be used to characterize species (monochloramine, dichloramine, and trichloramine) in a laboratory setting. |
| Hach 10260 Rev 1.0 (HACH, 2013) | DPD Chemkey | Total (0.04 mg/L), free (0.04 mg/L), combined (NA) | Interferences include acidity >150 mg/L CaCO3, alkalinity >250 mg/L as CaCO3, highly buffered or extreme pH samples, bromine, chlorine dioxide, iodine, ozone, organic chloramines, peroxides, oxidized manganese, oxidized chromium. |

Organic chloramines are formed when dissolved organic nitrogen reacts with either free chlorine or inorganic chloramines (Lee and Westerhoff, 2009) and are known interfering agents for both the amperometric and the DPD methods (APHA et al., 2012). Wahman and Pressman (2015) highlighted that organic chloramines can provide false positives. Lee and Westerhoff (2009) estimated that utilities are likely to overestimate chloramine residuals by approximately 10% as a result of interference from organic chloramines. Similarly, in Gagnon et al. (2008), a pipe loop distribution system study identified that organic chloramines comprised approximately 10–20% of the total chlorine residual in a chloraminated system. The contribution of organic chloramines to the total chlorine residual can be calculated by subtracting the monochloramine concentration from the total chlorine residual. Although not approved by the U.S. EPA, the Hach 10241 U.S. EPA Indophenol method (Hach, 2017) can be used to measure monochloramine using a DPD method. This method could be used to help inform day-to-day operations by providing an approximation of organic chloramines.
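The subtraction described above can be sketched as follows. The function name is hypothetical, and the result should be read as an upper-bound approximation: any dichloramine, trichloramine or free chlorine present is lumped into the difference along with the organic chloramines.

```python
def organic_chloramine_estimate(total_chlorine_mg_l: float,
                                monochloramine_mg_l: float) -> tuple[float, float]:
    """Approximate organic chloramines as total chlorine minus monochloramine.

    Returns (estimate in mg/L, estimate as a fraction of total chlorine).
    Both inputs must be expressed in the same units (e.g., mg/L as Cl2).
    """
    if total_chlorine_mg_l < 0 or monochloramine_mg_l < 0:
        raise ValueError("readings must be non-negative")
    if monochloramine_mg_l > total_chlorine_mg_l:
        raise ValueError("monochloramine exceeds total chlorine; re-measure")
    estimate = total_chlorine_mg_l - monochloramine_mg_l
    fraction = estimate / total_chlorine_mg_l if total_chlorine_mg_l > 0 else 0.0
    return estimate, fraction


if __name__ == "__main__":
    # Hypothetical readings: 2.0 mg/L total chlorine, 1.7 mg/L monochloramine.
    est, frac = organic_chloramine_estimate(2.0, 1.7)
    print(f"{est:.2f} mg/L ({frac:.0%} of total chlorine)")
```

A fraction in the range reported by the studies cited above (roughly 10–20%) would be unremarkable, whereas a much larger value may warrant checking for other interfering species.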

6.1 Recommended method

Key points for sampling also include the entry point to the distribution system (baseline), storage facilities, upstream and downstream locations of booster stations, areas of low flow or high water age, areas of various system pressures, mixed zones (blended water), and areas with various sizes and types of pipe material. Dedicated sampling taps are an ideal approach for residual sampling (AWWA, 2013), and customer taps can be used as an alternative. In the absence of suitable tap access, hydrants can be used for residual sampling. However, taking a meaningful hydrant sample can be challenging. The U.S. EPA (2016a) has developed a hydrant sampling best practice manual that can be used to estimate the flush time required for a representative sample. Alexander (2017) recommended targeting remote locations of a distribution system, suggested that it is preferable to target more locations with fewer samples at those locations rather than more samples at fewer locations, and recommended taking additional investigative samples. An investigation of disinfectant residuals in Flint, Michigan, revealed that the previous number and location of sampling sites (10 sites) were insufficient. These few locations demonstrated adequate disinfectant (chlorine) residual; however, expanding the number and location of sample sites to more representative locations (an additional 24 sites) revealed that the disinfectant residual was problematic (Pressman, 2017). Although chloramine residuals are consumed less readily in the distribution system, it is still important to have an adequate and representative set of sampling sites. Resources outlining approaches for determining the number and location of sampling points for monochloramine disinfectant residual monitoring in the distribution system are available elsewhere (Louisiana Department of Health and Hospitals, 2016).

Alexander (2017) highlighted the importance of proper field testing techniques. Sample vials can become scratched (during transportation in a truck) or dirty, leading to inaccurate readings. Additionally, plastic vials are prone to the formation of fine bubbles, which can be resolved with slow inversion of the sample. It is important that operators be aware of the challenges of disinfectant residual sampling.

Sampling programs should be reviewed annually and the review should examine historical data, water use patterns/changes, as well as any changes in water treatment or distribution system operation (AWWA, 2013).

7.0 Treatment technology and distribution system considerations

As chloramines are added to drinking water to maintain a residual concentration in the distribution system, or are formed as a by-product of the chlorination of drinking water in the presence of natural ammonia, they are expected to be found in drinking water at the treatment plant, as well as in the distribution and plumbing systems.

7.1 Municipal scale

The literature indicates that monochloramine CT requirements are one to many orders of magnitude greater than those required for free chlorine for achieving similar levels of inactivation of heterotrophic bacteria, E. coli, nitrifying bacteria, enteric viruses and Giardia (LeChevallier and Au, 2004; Wojcicka et al., 2007; Cromeans et al., 2010; Health Canada, 2012b, 2017, 2018a). Reported CT values also demonstrate that similarly to free chlorine, monochloramine is not effective for inactivation of Cryptosporidium (LeChevallier and Au, 2004; Health Canada, 2017).

Given the operational benefits of secondary disinfection, operators should strive to maintain a stable disinfectant residual throughout the system. Regularly monitoring distribution system water quality (e.g., disinfectant residual, microbial indicators, turbidity, pH) and having operational and maintenance programs in place (water mains cleaning, cross-connection control, replacements and repairs) are important for ensuring that drinking water is transported to the consumer with minimum loss of quality (Kirmeyer et al., 2001, 2014).

7.1.1 Disinfectant residual and microbial control

To control bacterial regrowth and biofilm formation, a strong, stable disinfectant residual is needed that is capable of reaching the ends of the distribution system. The amount of disinfectant needed to maintain water quality depends on the characteristics of the distribution system, the species of bacteria, the type of disinfectant used, the temperature, the pH and the amount of biodegradable organic material present (Kirmeyer et al., 2004). When applying monochloramine as a disinfectant, utilities should be aware of their target disinfectant residual value, and adjustments should be made to address monochloramine demand and decay. Manuals have been developed to guide water utilities in establishing goals and disinfection objectives. These manuals recommend that utilities set system-specific disinfectant residual targets based on their water quality objectives and system characteristics, and that they ensure chloramine concentrations leaving the treatment plant are sufficient to achieve their established target residual (Kirmeyer et al., 2004; AWWA, 2006a). Full-scale and laboratory-scale studies have been conducted to examine the effectiveness of monochloramine residual concentrations in controlling biofilm growth in drinking water distribution systems. Camper et al. (2003) observed that a monochloramine residual of 0.2 mg/L did not completely control biofilm growth on coupons of epoxy, ductile iron, polyvinyl chloride (PVC) or cement. Similarly, Pintar and Slawson (2003) noted that maintaining a low monochloramine residual of 0.2–0.6 mg/L was not sufficient to inhibit the establishment of biofilms containing nitrifying bacteria and heterotrophic bacteria on PVC coupons. Wahman and Pressman (2015) have noted that when using inorganic chloramines (monochloramine), a “detectable” total chlorine residual is not sufficient to effectively limit bacterial growth in the distribution system.
In full-scale investigations conducted by Norton and LeChevallier (1997) and Volk and LeChevallier (2000), water samples from drinking water systems maintaining monochloramine residual concentrations of >1.0 mg/L maintained lower heterotrophic bacterial counts and substantially fewer coliform occurrences than systems with lower monochloramine levels. In laboratory experiments a monochloramine residual of >1.0 mg/L reduced viable counts by greater than 1.5 log for biofilms grown on PVC, galvanized iron, copper and polycarbonate (LeChevallier et al., 1990; Gagnon et al., 2004; Murphy et al., 2008). When biofilms were grown on iron pipe, a monochloramine residual of >2.0 mg/L was needed to reduce bacterial numbers by greater than 2 log (LeChevallier et al., 1990; Ollos et al., 1998; Gagnon et al., 2004). Monochloramine residual concentrations of >2 mg/L may similarly be required to control the development of nitrifying biofilms.
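For readers less familiar with log reductions, the sketch below converts between a log reduction and the fraction of viable organisms remaining, using the standard base-10 definition. The function names are hypothetical and the counts in the example are illustrative rather than data from the cited studies.

```python
import math


def log_reduction(count_before: float, count_after: float) -> float:
    """Log10 reduction between two viable counts (same units, both > 0)."""
    if count_before <= 0 or count_after <= 0:
        raise ValueError("counts must be positive")
    return math.log10(count_before / count_after)


def surviving_fraction(log_red: float) -> float:
    """Fraction of the initial population remaining after a given log reduction."""
    return 10 ** (-log_red)


if __name__ == "__main__":
    # Illustrative counts only (e.g., CFU/cm2 on a coupon).
    print(log_reduction(1e6, 1e4))    # a 100-fold drop is a 2-log reduction
    print(surviving_fraction(1.5))    # about 3% of the population remains
    print(surviving_fraction(2.0))    # 1% of the population remains
```

On this scale, the greater-than-1.5-log and greater-than-2-log reductions cited above correspond to fewer than about 3% and 1% of the initial viable counts remaining, respectively.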

Monochloramine has been perceived to penetrate biofilms better because of its low reactivity with polysaccharides, the primary component of the biofilm matrix (Vu et al., 2009; Xue et al., 2014). In disinfection transport experiments using chlorine/monochloramine-sensitive microelectrodes, monochloramine was shown to penetrate biofilm faster and further than free chlorine but not to result in complete inactivation (Lee et al., 2011; Pressman et al., 2012). Work by Xue et al. (2013, 2014) suggests that monochloramine disinfection efficacy and persistence may be affected by the combination of polysaccharide extracellular polymeric substances (EPSs) obstructing monochloramine reactive sites on bacterial cells, and protein EPS components reacting with monochloramine. Free chlorine, on the other hand, was more effective near the biofilm surface but penetrated the biofilm only to a limited extent (Lee et al., 2011). The authors noted that these findings highlight the challenges for chloraminating drinking water utilities that employ free chlorine application periods for nitrification control and also help explain why nitrification is so hard to stop once it has started.

An understanding of system-specific factors that may make it difficult to achieve target residuals is important for maximizing the effectiveness of strategies for secondary disinfection.

7.1.2 Considerations when changing disinfection practices to chloramination

A change in disinfectant (oxidant), including changing secondary disinfection to chloramine, can impact water quality. It is always recommended that site-specific water quality/corrosion studies be conducted to capture the complex interactions of water quality, distribution system materials and treatment chemicals used in each individual water system. Some considerations when applying chloramine treatment include nitrification, biofilm, residual loss, the formation of DBPs, the degradation of elastomer materials and the impacts the distribution system/premise plumbing materials may have on corrosion.

7.1.3 Presence of ammonia in source water

Ammonia may be present in source water and strategies to treat it will depend on many factors, including the characteristics of the raw water supply, the source and concentration of ammonia (including variation), the operational conditions of the specific treatment method and the utility's treatment goal. In some cases, utilities will form chloramines as a strategy to remove naturally occurring ammonia in the raw water supply, while others may use breakpoint chlorination. In both cases, frequent monitoring of relevant parameters (ammonia; combined, total and free chlorine) is needed to ensure that objectives are achieved at all times. More details on ammonia removal technologies and strategies, including biological treatment, can be found in Health Canada (2013a).

7.2 Distribution system considerations

The use of chloramines as a secondary disinfectant can have impacts on the distribution system water quality, including corrosion, nitrification, microbial regrowth and the growth of opportunistic pathogens.

7.2.1 Residual loss

Chloramines auto-decompose through a series of reactions (Jafvert and Valentine, 1992). However, decomposition of chloramines can also be impacted by interactions with pipe materials and NOM, resulting in loss of residual.

Vikesland and Valentine (2000) demonstrated a direct reaction between Fe(II) and the monochloramine molecule, ultimately forming ammonia. Iron oxides play an autocatalytic role in the oxidation of Fe(II) by monochloramine (Vikesland and Valentine, 2002). In bench-scale experiments, Westbrook and DiGiano (2009) compared the rates of chloramine loss at two pipe surfaces (cement-lined ductile iron and tuberculated, unlined cast iron pipe). Chloramine decay occurred faster (from 3.8 mg/L to <1 mg/L in 2 h) for the unlined cast iron pipe compared with the cement-lined ductile iron pipe (from 3.7 mg/L to 1.5 mg/L in 2 h).
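
As a rough illustration of the difference between the two pipe surfaces, the endpoint concentrations above can be converted into apparent decay rate constants. The sketch below assumes simple first-order decay, which is an assumption made here for illustration and not a kinetic model reported by Westbrook and DiGiano (2009); the function name is hypothetical. Because the unlined cast iron result is reported only as <1 mg/L, the value computed for that pipe is a lower bound.

```python
import math


def apparent_first_order_k(c0_mg_l, ct_mg_l, hours):
    """Apparent first-order rate constant (1/h) from two concentrations.

    Assumes C(t) = C0 * exp(-k * t). This model is an illustrative
    assumption, not one taken from the cited study.
    """
    if c0_mg_l <= 0 or ct_mg_l <= 0 or hours <= 0:
        raise ValueError("concentrations and elapsed time must be positive")
    return math.log(c0_mg_l / ct_mg_l) / hours


# Cement-lined ductile iron: 3.7 mg/L to 1.5 mg/L in 2 h
k_cement = apparent_first_order_k(3.7, 1.5, 2.0)

# Unlined cast iron: 3.8 mg/L to <1 mg/L in 2 h, so using 1.0 mg/L
# gives only a lower bound on the rate constant
k_cast_iron_min = apparent_first_order_k(3.8, 1.0, 2.0)

print(f"cement-lined ductile iron: k ~ {k_cement:.2f} per hour")
print(f"unlined cast iron: k > {k_cast_iron_min:.2f} per hour")
```

Under this assumed model, the apparent rate constant for the unlined cast iron pipe is at least about 1.5 times that of the cement-lined pipe.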

Duirk et al. (2005) described two reaction pathways between chloramines and NOM: one fast reaction and a slow reaction where chloramines were hypothesized to hydrolyze to HOCl. The slow reaction was reported to account for the majority of the chloramine loss in this mechanism. Work by Zhou et al. (2013) reported that the mixing ratio of chloramines impacted their decay rate in the presence of NOM: a higher decay rate was observed for chloramines mixed in a ratio of 3:1 than for those mixed at 4:1. Wilczak et al. (2003b) suggested that the nature and characteristics of NOM are important factors in determining how reactive chloramine is with NOM. Additionally, the authors observed that bacterial cell fragments shed from full-scale biologically active granular activated carbon (GAC) filters exerted a significant chloramine demand.

Carbonate has been observed to accelerate monochloramine decay by acting as a general acid catalyst (Vikesland et al., 2001). This study showed that nitrite exerted a significant long-term monochloramine demand and demonstrated that although bromide affects chloramine loss, at low concentrations (<0.1 mg/L), bromide did not have a significant role. Wahman and Speitel (2012) explored the important role of HOCl in nitrite oxidation and found that it peaked in the pH range of 7.5–8.5. A 5:1 Cl2:NH3 ratio was also associated with increased nitrite oxidation (compared with a 3:1 Cl2:NH3 ratio). Increased oxidation of nitrite by HOCl was also associated with lower nitrite concentration (0.5 mg N/L compared with 2 mg N/L) and decreased monochloramine concentrations (1 mg Cl2/L compared with 4 mg Cl2/L).

Factors such as mixing ratios, pH, and temperature have also been shown to play an important role in chloramine decay rates. In experiments by Zhou et al. (2013), chloramine decay rate was shown to increase over time (72 h). At pH >7, the decay rate was higher for chloramines mixed at a ratio of 3:1 than for those mixed at 4:1. However, at pH <7, that ratio did not play an obvious role. Chloramine loss was greater at low pH. Generally, chloramine loss increased with increasing temperature, and the decay rate was higher for chloramines mixed at a ratio of 3:1 than at 4:1. Research conducted by the Philadelphia Water Department similarly showed monochloramine decay was slow at lower water temperatures (38% decay at 7°C) and increased at higher temperatures (84% decay at 30°C) (Kirmeyer et al., 2004). Web-based chloramine formation and decay models have been developed by Wahman (2015), based on the unified models described by Jafvert and Valentine (1992) and Vikesland et al. (2001), as well as NOM reactions described by Duirk et al. (2005). A user-input model is also publicly available (U.S. EPA, 2016b).

7.2.2 Rechlorination and temporary breakpoint chlorination

As part of a comprehensive distribution management plan, both rechlorination and temporary breakpoint chlorination could be considered as potential tools. Maintaining sufficient disinfectant residual is important, and recombining the (released) free ammonia in the distribution system by booster chlorination can be used in order to maintain the ratio near 5:1 throughout the system (Wilczak, 2006). Free ammonia residual needs to be measured before chemical addition. If sufficient free ammonia is still present, only chlorine needs to be added.
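
The booster chlorination step described above can be sketched as a simple mass-ratio calculation. The example assumes the 5:1 ratio is expressed as Cl2:NH3-N by weight and ignores chlorine demand from the water and pipe surfaces; both are simplifying assumptions, and the function name and sample value are hypothetical.

```python
def booster_chlorine_dose(free_ammonia_mg_n_l, target_ratio=5.0):
    """Chlorine (mg Cl2/L) needed to recombine measured free ammonia.

    Assumes a Cl2:NH3-N weight ratio and no competing chlorine demand;
    both are illustrative simplifications, not operating guidance.
    """
    if free_ammonia_mg_n_l < 0:
        raise ValueError("free ammonia cannot be negative")
    if target_ratio <= 0:
        raise ValueError("target ratio must be positive")
    return target_ratio * free_ammonia_mg_n_l


# Hypothetical field measurement: 0.2 mg N/L free ammonia at a booster station
dose = booster_chlorine_dose(0.2)
print(f"chlorine to add: {dose:.1f} mg Cl2/L")
```

In practice the dose would need to be adjusted for site-specific chlorine demand and confirmed by follow-up residual measurements.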

A temporary disinfectant switch to chlorine as a method for controlling nitrification is common practice (Vikesland et al., 2006). Depending on whether nitrification is localized or widespread, the practice may range from a short-term targeted breakpoint of an isolated area of the distribution system to a longer system-wide chlorine burnout (Hill and Arweiler, 2006; Skadsen and Cohen, 2006). Periodic free chlorination has not been demonstrated to be effective for long-term nitrification control (Vikesland et al., 2006). In a full-scale study, the process of switching from chloramines to chlorine and back to chloramines created a breakpoint chlorination reaction, which resulted in a low chlorine residual “transitional front” in the distribution system. The HPC temporarily decreased, but THMs increased upon switching back to chloramines. While conducting breakpoint chlorination experiments in distribution systems, Rosenfeldt et al. (2009) observed that incorporating systematic flushing was important to achieving chlorine residual and decreasing chloroform production.

7.2.3 Opportunistic pathogens

The microbiological ecology of distribution systems and piped plumbing supplies can be influenced by factors such as the disinfection strategy, the operational and water quality parameters, the system materials and the age of the system (Baron et al., 2014; Ji et al., 2015). Research has shown differences in the relative abundance of certain bacterial groups in bulk water and biofilms in the distribution system when monochloramine, rather than free chlorine, is used to provide a disinfectant residual (Norton et al., 2004; Williams et al., 2005; Hwang et al., 2012; Chiao et al., 2014; Mi et al., 2015).

Distribution system and premise plumbing biofilms can serve as reservoirs for opportunistic premise plumbing pathogens (OPPPs) such as Legionella pneumophila, non-tuberculous mycobacteria (e.g., M. avium, M. intracellulare), Pseudomonas aeruginosa and Acinetobacter baumannii (Fricker, 2003; Falkinham, 2015). In developed countries, Legionella and non-tuberculous mycobacteria are becoming increasingly recognized as important causes of waterborne disease for vulnerable sections of the population (Wang et al., 2012; Beer et al., 2015; Falkinham, 2015).

It has been proposed that the use of chloramines in the distribution system may contribute to environmental conditions that are less favourable for Legionella and more favourable for non-tuberculous mycobacteria (Norton et al., 2004; Williams et al., 2005; Flannery et al., 2006; Moore et al., 2006; Gomez-Alvarez et al., 2012, 2016; Revetta et al., 2013; Baron et al., 2014; Chiao et al., 2014; Gomez-Smith et al., 2015; Mancini et al., 2015). The free-living amoeba Naegleria fowleri has also received an increased profile as a drinking water pathogen of emerging concern in the southern United States as a result of recent infections linked to municipal drinking water supplies in Arizona and Louisiana (Bartrand et al., 2014). N. fowleri causes primary amoebic meningoencephalitis (PAM), an extremely rare, but commonly fatal disease that has been predominantly associated with water inhalation during recreational water activities at warm freshwater locations (Yoder et al., 2012; Bartrand et al., 2014). Two cases in Louisiana represent the first time disinfected tap water has been implicated in N. fowleri infection (Yoder et al., 2012). Recommendations for maintaining a minimum monochloramine residual of 0.5 mg/L throughout the distribution system and monitoring for nitrification have been included within strategies developed for the control of N. fowleri at impacted water utilities that use chloramines (Robinson and Christy, 1984; NHMRC, 2011; Louisiana Department of Health and Hospitals, 2016).

A well-maintained distribution system is an important component in minimizing microbiological growth in distributed water in premise plumbing systems. Utilities also need to be aware that changes in the microbiological diversity of drinking water systems can occur with changes to disinfection strategies. An understanding of the effects different disinfectants may have on drinking water system ecology is necessary to maximize the effectiveness of disinfection strategies and minimize such unintended consequences as the potential enrichment of specific microbial groups (Williams et al., 2005; Baron et al., 2014).

7.2.4 Nitrification

Nitrification, the microbiological process whereby ammonia is sequentially oxidized to nitrite and nitrate by ammonia-oxidizing bacteria and nitrite-oxidizing bacteria, respectively, is a significant concern for utilities using chloramines for secondary disinfection. Free ammonia can be present as a result of treatment (e.g., improper dosing or incomplete reactions) or release during chloramine demand and decay (Cunliffe, 1991; U.S. EPA, 2002).

Water quality problems caused by nitrification include the formation of nitrite and nitrate, loss of disinfectant residual, bacterial regrowth and biofilm formation, DBP formation, and decreases in pH and alkalinity that can lead to corrosion issues, including the release of lead and copper (U.S. EPA, 2002; Cohen and Friedman, 2006; Zhang et al., 2009, 2010). Growth of nitrifying bacteria leads to a loss in disinfectant residual and increased biofilm production, which further escalates chlorine demand, ammonia release and bacterial regrowth (Kirmeyer et al., 1995; Pintar and Slawson, 2003; Scott et al., 2015). Cometabolism of monochloramine by ammonia-oxidizing bacteria and reactions between chloramines and nitrite are also significant mechanisms for the loss of chloramine residual (Health Canada, 2013b; Wang et al., 2014; Wahman et al., 2016).

Factors favouring nitrification include warm water temperatures, long detention times, and the presence of high levels of organic matter in the distribution system (Kirmeyer et al., 2004; AWWA, 2006a). Nitrification episodes have typically been reported during the summer months when water temperatures range from 20°C to 25°C (Pintar et al., 2000). Utilities should consider increasing the frequency of sampling during warmer months for this reason (AWWA, 2013). Full-scale distribution systems may also be impacted by nitrifying bacteria during typical North American winter conditions, in addition to the summer and fall seasons (Pintar et al., 2000).

In general, to prevent nitrification it is recommended that utilities maintain a minimum monochloramine concentration of 2 mg/L leaving the treatment plant and preferably greater than 1.5 mg/L at all monitoring points in the distribution system (U.S. EPA, 1999; Norton and LeChevallier, 1997; Skadsen and Cohen, 2006). Once it has started, nitrification cannot be easily stopped, even by applying high chloramine doses. Nitrifying bacteria have been detected in drinking water distribution systems in the presence of chloramine residuals exceeding 5 mg/L (Cunliffe, 1991; Baribeau, 2006). Nitrifying biofilms may harbour viable bacteria even after complete penetration with monochloramine at elevated concentrations (Wolfe et al., 1990; Pressman et al., 2012). Utilities should be aware of the chemistry of their water and system materials before considering conversion from chloramines to free chlorine and vice versa (Hill and Arweiler, 2006; Wilczak, 2006). When establishing monitoring programs for nitrification in the distribution system, the most useful parameters are total chlorine, free ammonia, nitrite, temperature and pH (Smith, 2006). HPCs or adenosine triphosphate measurements as indicators of biological growth or biostability are also considered valuable (Smith, 2006; LeChevallier et al., 2015; Health Canada, 2018b).
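
The residual targets above can be expressed as a simple screening check on monitoring data. This is a minimal sketch: the thresholds are the values cited in this section (2 mg/L leaving the treatment plant, greater than 1.5 mg/L in the distribution system), while the record layout, field names and sample values are hypothetical.

```python
from dataclasses import dataclass

PLANT_MIN_MG_L = 2.0         # minimum monochloramine leaving the treatment plant
DISTRIBUTION_MIN_MG_L = 1.5  # residual should exceed this in the distribution system


@dataclass
class Sample:
    site: str                  # hypothetical monitoring point label
    at_plant: bool             # True if taken at the treatment plant outlet
    monochloramine_mg_l: float


def below_target(sample: Sample) -> bool:
    """Return True when a sample does not meet the residual target for its location."""
    if sample.at_plant:
        return sample.monochloramine_mg_l < PLANT_MIN_MG_L
    return sample.monochloramine_mg_l <= DISTRIBUTION_MIN_MG_L


# Hypothetical monitoring results
samples = [
    Sample("plant effluent", True, 2.3),
    Sample("reservoir outlet", False, 1.8),
    Sample("dead-end main", False, 0.9),
]

flagged = [s.site for s in samples if below_target(s)]
print("sites below target:", flagged)
```

A fuller screening routine would also track the other parameters listed above (total chlorine, free ammonia, nitrite, temperature and pH), for which this section does not give numerical triggers.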

Detailed information on the causes of nitrification and its monitoring, prevention and mitigation is available elsewhere (AWWA, 2006a). It is important to note that even the stringent control of excess free ammonia and the maintenance of a proper Cl2:NH3 ratio may not always be effective in preventing nitrification. This is because chloramine in the distribution system will start to decay based on water quality conditions and water age, releasing free ammonia into the water (Cohen and Friedman, 2006).

7.2.5 Lead and copper release