Enteric Viruses in Drinking Water

Organization: Health Canada

Date published: 2017-10-27

Document for Public Consultation

Prepared by the Federal-Provincial-Territorial Committee on Drinking Water

Consultation period ends

December 29, 2017

Table of Contents

- Purpose of consultation

- Part I. Overview and Application

- 1.0 Proposed guideline

- 2.0 Executive summary

- 3.0 Application of the guideline

- Part II. Science and Technical Considerations

- 4.0 Description and health effects

- 5.0 Sources and exposure

- 6.0 Analytical methods

- 7.0 Treatment technology

- 8.0 Risk assessment

- 9.0 Rationale

- 10.0 References

- Appendix A: List of Acronyms

- Appendix B: Tables

- Table B.1. Characteristics of waterborne human enteric viruses

- Table B.2. Occurrence of enteric viruses in surface waters in Canada and the U.S.

- Table B.3. Occurrence of enteric viruses in groundwater in Canada and the U.S.

- Table B.4. Occurrence of enteric viruses in drinking water in Canada and the U.S.

- Table B.5. Selected viral outbreaks related to drinking water (1971–2012)

Purpose of consultation

The Federal-Provincial-Territorial Committee on Drinking Water (CDW) has assessed the available information on enteric viruses with the intent of updating the current drinking water guideline and guideline technical document on enteric viruses in drinking water. The purpose of this consultation is to solicit comments on the proposed guideline, on the approach used for its development and on the potential economic costs of implementing it, as well as to determine the availability of additional exposure data.

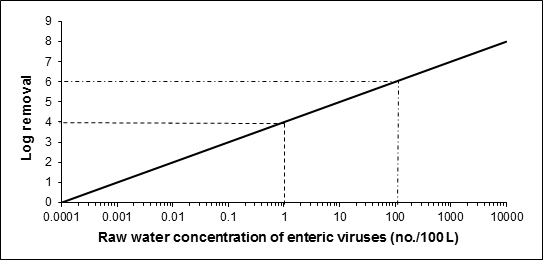

The existing guideline on enteric viruses, last updated in 2011, established a health-based treatment goal of a minimum 4-log reduction of enteric viruses. The 2011 document recognized that although there are methods capable of detecting and measuring viruses in drinking water, they are not practical for routine monitoring in drinking water because of methodological and interpretation limitations. This updated document proposes to maintain the health-based treatment goal of a minimum 4-log removal and/or inactivation of enteric viruses, but also indicates that a greater log reduction may be required, depending on the source water quality.

The CDW has requested that this document be made available to the public and open for comment. Comments are appreciated, with accompanying rationale, where required. Comments can be sent to the CDW Secretariat via email at water_eau@hc-sc.gc.ca. If this is not feasible, comments may be sent by mail to the CDW Secretariat, Water and Air Quality Bureau, Health Canada, 269 Laurier Avenue West, A.L. 4903D, Ottawa, Ontario K1A 0K9. All comments must be received by December 29, 2017.

Comments received as part of this consultation will be shared with the appropriate CDW member, along with the name and affiliation of their author. Authors who do not want their name and affiliation shared with their CDW member should provide a statement to this effect along with their comments.

It should be noted that this guideline technical document on enteric viruses in drinking water will be revised following evaluation of comments received, and a drinking water guideline will be established, if required. This document should be considered as a draft for comment only.

Part I. Overview and Application

1.0 Proposed guideline

The proposed guideline for enteric viruses in drinking water is a health-based treatment goal of a minimum 4-log removal and/or inactivation of enteric viruses. Depending on the source water quality, a greater log reduction may be required. Methods currently available for the detection of enteric viruses are not feasible for routine monitoring. Treatment technologies and watershed or wellhead protection measures known to reduce the risk of waterborne illness should be implemented and maintained if source water is subject to faecal contamination or if enteric viruses have been responsible for past waterborne outbreaks.

2.0 Executive summary

Viruses are extremely small microorganisms that are incapable of replicating outside a host cell. In general, viruses are host specific, which means that viruses that infect animals or plants do not usually infect humans, although a small number of enteric viruses have been detected in both humans and animals. Most viruses also infect only certain types of cells within a host; consequently, the health effects associated with a viral infection vary widely. Viruses that can multiply in the gastrointestinal tract of humans or animals are known as “enteric viruses.” There are more than 140 enteric virus serotypes known to infect humans.

Health Canada recently completed its review of the health risks associated with enteric viruses in drinking water. This guideline technical document reviews and assesses identified health risks associated with enteric viruses in drinking water. It evaluates new studies and approaches and takes into consideration the methodological and interpretation limitations in available methods for the detection of viruses in drinking water. Based on this review, the proposed guideline for enteric viruses in drinking water is a health-based treatment goal of a minimum 4-log (i.e., 99.99%) removal and/or inactivation of enteric viruses.
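The equivalence between a 4-log reduction and a 99.99% reduction follows from the base-10 definition of a log reduction. The short Python sketch below illustrates the conversion in both directions; the function names are hypothetical and are used here only for illustration.

```python
import math


def log_reduction_to_percent(log_reduction: float) -> float:
    """Percent of viruses removed and/or inactivated for a given log10 reduction."""
    return (1.0 - 10.0 ** (-log_reduction)) * 100.0


def percent_to_log_reduction(percent: float) -> float:
    """log10 reduction corresponding to a percent reduction (0 <= percent < 100)."""
    return -math.log10(1.0 - percent / 100.0)


# Each additional log removes 90% of what remained after the previous one.
for logs in (1, 2, 3, 4):
    print(f"{logs}-log reduction = {log_reduction_to_percent(logs):.4f}% reduction")
```

Each additional log of reduction lowers the remaining virus concentration by a further factor of 10, which is why a source of poorer quality may call for more than 4 log.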

During its fall 2016 meeting, the Federal-Provincial-Territorial Committee on Drinking Water reviewed the guideline technical document on enteric viruses and gave approval for this document to undergo public consultation.

2.1 Health effects

The human illnesses associated with enteric viruses are diverse. The main health effect associated with enteric viruses is gastrointestinal illness. Enteric viruses can also cause serious acute illnesses, such as meningitis, poliomyelitis and non-specific febrile illnesses. They have also been implicated in chronic diseases, such as diabetes mellitus and chronic fatigue syndrome.

The incubation time and severity of health effects are dependent on the specific virus responsible for the infection. The seriousness of the health effects from a viral infection will also depend on the characteristics of the individual affected (e.g., age, health status). In theory, a single infectious virus particle can cause infection; however, it usually takes more than a single particle. For many enteric viruses, the number of infectious virus particles needed to cause an infection is low, or presumed to be low.

2.2 Exposure

Enteric viruses cannot multiply in the environment; however, they can survive for extended periods of time (i.e., two to three years in groundwater) and are more infectious than most other microorganisms. Enteric viruses are excreted in the faeces of infected humans and animals, and some enteric viruses can also be excreted in urine. Source waters can become contaminated by human faeces through a variety of routes, including effluents from wastewater treatment plants, leaking sanitary sewers, discharges from sewage lagoons, and septic systems. Viruses may also enter the distribution system as a result of operational or maintenance activities or due to system pressure fluctuations.

Enteric viruses have been detected in surface water and groundwater sources. They appear to be highly prevalent in surface waters, and their occurrence will vary with time and location. In the case of groundwater, viruses have been detected in both confined and unconfined aquifers, and can be transported significant distances (i.e., hundreds of meters) in short timeframes (i.e., in the order of hours to days or weeks). Confined aquifers have an overlying geologic layer that may act as a barrier to virus transport. However, these aquifers may still be vulnerable to viral contamination due to pathways, such as fractures, root holes or other discontinuities that allow viruses to be transported through the layer into the aquifer below. The occurrence of enteric viruses in groundwater is not generally continuous and can vary greatly over time. Consuming faecally contaminated groundwater that is untreated or inadequately treated has been linked to illness.

2.3 Analysis and treatment

A risk management approach, such as the multi-barrier approach or a water safety plan, is the best method to reduce enteric viruses and other waterborne pathogens in drinking water. Identifying the vulnerability of a source to faecal contamination is an important part of a system assessment because routine monitoring of drinking water for enteric viruses is not practical at this time. Collecting and analysing source water samples for enteric viruses is, however, important for water utilities that wish to conduct a quantitative microbial risk assessment. Validated cell culture and molecular methods are available for detection of enteric viruses.

Once the source has been characterized, pathogen removal and/or inactivation targets and effective treatment barriers can be established in order to reduce the level of enteric viruses in treated drinking water. In general, all water supplies derived from surface water sources or groundwater under the direct influence of surface water (GUDI) should include adequate filtration (or equivalent technologies) and disinfection to meet treatment goals for enteric viruses and protozoa. Subsurface sources determined to be vulnerable to viruses should achieve a minimum 4-log removal and/or inactivation of viruses.

The absence of indicator bacteria (i.e., E. coli, total coliforms) does not necessarily indicate the absence of enteric viruses. The application and control of a multi-barrier, source-to-tap approach, including process and compliance monitoring (e.g., turbidity, disinfection process, E. coli) is important to verify that the water has been adequately treated and is therefore of an acceptable microbiological quality. In the case of untreated groundwater, testing for indicator bacteria is useful in assessing the potential for faecal contamination, which may include enteric viruses.

2.4 Quantitative microbial risk assessment

Quantitative microbial risk assessment (QMRA) is a process that uses source water quality data, treatment barrier information and pathogen-specific characteristics to estimate the burden of disease associated with exposure to pathogenic microorganisms in a drinking water source. This process can be used as part of a multi-barrier approach for management of a drinking water system, or it can be used to support the development of a drinking water quality guideline, such as setting the minimum health-based treatment goal for enteric viruses. Specific enteric viruses whose characteristics make them good representatives of all similar pathogenic viruses are considered in QMRA, and from these a reference virus is selected. Ideally, a reference virus will represent a worst-case combination of high occurrence, high concentration and long survival time in source water, low removal and/or inactivation during treatment, and a high pathogenicity for all age groups. If the reference virus is controlled, it is assumed that all other similar viruses of concern are also controlled. Numerous enteric viruses have been considered. As no single virus has all the characteristics of an ideal reference virus, this risk assessment uses characteristics from several different viruses.
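The chain of calculations in a QMRA (source water concentration, treatment reduction, ingested dose, probability of infection) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the model used in this document: the exponential dose-response form, the parameter `r`, the source concentration and the daily consumption volume are all hypothetical placeholders, and the sketch stops at infection probability rather than burden of disease.

```python
import math


def treated_concentration(source_per_litre: float, log_reduction: float) -> float:
    """Viruses per litre remaining after a given log10 reduction in treatment."""
    return source_per_litre * 10.0 ** (-log_reduction)


def daily_infection_probability(dose: float, r: float) -> float:
    """Exponential dose-response model, P = 1 - exp(-r * dose).

    ASSUMPTION: both the model form and the value of r are placeholders.
    """
    return -math.expm1(-r * dose)


def annual_infection_probability(p_daily: float, days: int = 365) -> float:
    """Probability of at least one infection over `days` independent exposures."""
    return -math.expm1(days * math.log1p(-p_daily))


# Hypothetical inputs, chosen only to show the calculation.
SOURCE_PER_LITRE = 1.0  # infectious viruses per litre of source water
LITRES_PER_DAY = 1.0    # water consumed per person per day
R = 0.5                 # placeholder dose-response parameter

for log_reduction in (4.0, 5.0, 6.0):
    dose = treated_concentration(SOURCE_PER_LITRE, log_reduction) * LITRES_PER_DAY
    p_day = daily_infection_probability(dose, R)
    p_year = annual_infection_probability(p_day)
    print(f"{log_reduction:.0f}-log reduction: annual infection probability ~ {p_year:.2e}")
```

With any fixed set of inputs, the estimated risk falls as the log reduction increases, which is the relationship a health-based treatment goal relies on.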

3.0 Application of the guideline

Note: Specific guidance related to the implementation of drinking water guidelines should be obtained from the appropriate drinking water authority in the affected jurisdiction.

Exposure to viruses should be reduced by implementing a risk management approach to drinking water systems, such as the multiple barrier or water safety plan approach. These approaches require a system assessment that involves: characterizing the water source; describing the treatment barriers that prevent or reduce contamination; highlighting the conditions that can result in contamination; and identifying control measures to mitigate those risks through the treatment and distribution systems to the consumer.

3.1 Source water assessments

Source water assessments should be part of routine system assessments. They should include: the identification of potential sources of faecal contamination in the watershed/aquifer; potential pathways and/or events (low to high risk) by which enteric viruses can make their way into the source water; and the conditions that are likely to lead to peak concentrations of enteric viruses. Subsurface sources should be evaluated to determine if the supply is vulnerable to contamination by enteric protozoa (i.e., GUDI) and enteric viruses. These assessments should ideally include a hydrogeological assessment and, at a minimum, an evaluation of well integrity and a survey of activities and physical features in the area. Subsurface sources determined to be vulnerable to virus contamination should achieve a minimum 4-log removal and/or inactivation of enteric viruses. For GUDI sources, additional treatment may be needed to address other microbiological contaminants such as enteric protozoa.

Where monitoring for viruses is feasible, samples are generally collected at a location that is representative of the quality of the water supplying the drinking water system, such as at the intake of the water treatment plant or, in the case of groundwater, from each individual water supply well. When monitoring for viruses, the viability and infectivity of viruses should be determined, as well as the recovery efficiency of the method used. For surface water, it is recommended to conduct monthly sampling through all four seasons to establish baseline levels and to characterize at least two weather events to understand peak conditions; due to the temporal variability of viruses in surface water, intensified sampling (i.e., five samples per week) may be necessary to quantify peak concentrations. For groundwater, including confined aquifers, it is difficult to predict the presence of viral contamination. Monthly sampling through all four seasons is recommended to adequately characterize the occurrence of viral contamination.

3.2 Appropriate treatment barriers

A minimum 4-log removal and/or inactivation of enteric viruses is recommended for all water sources, including groundwater sources. For many source waters, a reduction greater than 4 log may be necessary. A jurisdiction may choose to allow a groundwater source to have less than the recommended minimum 4-log reduction if the assessment of the drinking water system has confirmed that the risk of enteric virus presence is minimal or the aquifer is providing adequate in-situ filtration.

The physical removal of viruses (e.g., natural or engineered filtration) can be challenging due to their small size and variations in their surface charge. Consequently, disinfection is an important barrier in achieving the appropriate level of virus reduction in drinking water. Viruses are effectively inactivated through the application of various disinfection technologies, individually or in combination, at relatively low dosages. The appropriate type and level of treatment should take into account potential fluctuations in water quality, including short-term degradation, and variability in treatment performance. Pilot testing or optimization processes may be useful for determining treatment variability.
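When physical removal and disinfection are combined, their contributions toward the treatment goal are commonly added together as log credits. The Python sketch below illustrates that bookkeeping. It assumes that the barriers act in series and independently, so that surviving fractions multiply and log reductions add; the credit values and names shown are hypothetical, and actual credits would be assigned by the responsible drinking water authority.

```python
from typing import Mapping

TREATMENT_GOAL_LOG = 4.0  # minimum log reduction proposed in this document


def total_log_reduction(barrier_credits: Mapping[str, float]) -> float:
    """Sum the log10 reduction credited to each barrier in the treatment train."""
    return sum(barrier_credits.values())


def meets_goal(barrier_credits: Mapping[str, float],
               goal: float = TREATMENT_GOAL_LOG) -> bool:
    """True if the combined credits reach the treatment goal."""
    return total_log_reduction(barrier_credits) >= goal


# Hypothetical credits, for illustration only.
example_train = {"filtration": 1.0, "disinfection": 3.0}
print(total_log_reduction(example_train), meets_goal(example_train))
```

Because the total is only as reliable as each credited barrier, variability in treatment performance should be considered before relying on a train that only just reaches the goal.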

Individual households with a well should assess the vulnerability of their well to faecal contamination to determine if their well should be treated. General guidance on well construction, maintenance, protection and testing is typically available from provincial/territorial jurisdictions. When considering the potential for viral contamination specifically, well owners should have an understanding of the well construction, type of aquifer material surrounding the well and location of the well in relation to sources of faecal contamination (i.e., septic systems, sanitary sewers, animal waste, etc.).

3.3 Appropriate maintenance and operation of distribution systems

Viruses can enter a distribution system during water main construction or repair or when regular operations and maintenance activities create pressure transients (e.g., valve/hydrant operation, pump start-up/shut-down). Typical secondary disinfectant residuals have been reported as being ineffective for inactivating viruses in the distribution system. As a result, maintaining the physical/hydraulic integrity of the distribution system and minimizing negative- or low-pressure events are key components of a multi-barrier or water safety plan approach. Distribution system water quality should be regularly monitored (e.g., microbial indicators, disinfectant residual, turbidity, pH), operations/maintenance programs should be in place (e.g., water main cleaning, cross-connection control, asset management) and strict hygiene should be practiced during all water main construction (e.g., repair, maintenance, new installation) to ensure drinking water is transported to the consumer with minimum loss of quality.

Part II. Science and Technical Considerations

4.0 Description and health effects

Viruses range in size from 20 to 350 nm, making them the smallest group of microorganisms. They consist of a nucleic acid genome core (either ribonucleic acid [RNA] or deoxyribonucleic acid [DNA]) surrounded by a protective protein shell, called the capsid. Some viruses have a lipoprotein envelope surrounding the capsid; these are referred to as enveloped viruses. Non-enveloped viruses lack this lipoprotein envelope. Viruses can replicate only within a living host cell. Although the viral genome does encode for viral structural proteins and other molecules necessary for replication, viruses must rely on the host’s cell metabolism to synthesize these molecules.

Viral replication in the host cells results in the production of infective virions and numerous incomplete particles that are non-infectious (Payment and Morin, 1990). The ratio between physical virus particles and the actual number of infective virions ranges from 10:1 to over 1000:1. In the context of waterborne diseases, a “virus” is thus defined as an infectious “complete virus particle,” or “virion,” with its DNA or RNA core and protein coat as it exists outside the cell. This would be the simplest form in which a virus can infect a host. Infective virions released in the environment will degrade and lose their infectivity, but can still be seen by electron microscopy or detected by molecular methods.
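The particle-to-infective-virion ratio explains why a count of physical particles overstates the number of virions able to cause infection. The Python sketch below brackets the infective fraction using the 10:1 and 1000:1 ratios cited above; the function name is hypothetical, and because the upper ratio is reported as "over 1000:1", the lower bound it returns may still be too high.

```python
def infective_virion_range(physical_particles: float,
                           low_ratio: float = 10.0,
                           high_ratio: float = 1000.0) -> tuple[float, float]:
    """Bracket the infective virions implied by a physical particle count.

    Ratios are expressed as physical particles per infective virion.
    """
    return physical_particles / high_ratio, physical_particles / low_ratio


low, high = infective_virion_range(10_000)
print(f"10,000 particles -> roughly {low:.0f} to {high:.0f} infective virions")
```

For 10,000 physical particles, this gives roughly 10 to 1,000 infective virions.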

In general, viruses are host specific. Therefore, viruses that infect humans do not usually infect non-human hosts, such as animals or plants. The reverse is also true: viruses that infect animals and plants do not usually infect humans, although a small number of enteric viruses have been detected in both humans and animals (i.e., zoonotic viruses). Most viruses also infect only specific types of cells within a host. The types of susceptible cells are dependent on the virus, and consequently the health effects associated with a viral infection vary widely, depending on where susceptible cells are located in the body. In addition, viral infection can trigger immune responses that result in non-specific symptoms. Viruses that can multiply in the gastrointestinal tract of humans or animals are known as “enteric viruses.” Enteric viruses are excreted in the faeces of infected individuals, and some enteric viruses can also be excreted in urine. These excreta can contaminate water sources. Non-enteric viruses, such as respiratory viruses, are not considered waterborne pathogens, as non-enteric viruses are not readily transmitted to water sources from infected individuals.

There are more than 200 recognized enteric viruses (Haas et al., 2014); of these, 140 serotypes are known to infect humans (AWWA, 1999; Taylor et al., 2001). The illnesses associated with enteric viruses are diverse. In addition to gastroenteritis, enteric viruses can cause serious acute illnesses, such as meningitis, poliomyelitis and non-specific febrile illnesses. They have also been implicated in the aetiology of some chronic diseases, such as diabetes mellitus and chronic fatigue syndrome.

Enteric viruses commonly associated with human waterborne illnesses include noroviruses, hepatitis A virus (HAV), hepatitis E virus (HEV), rotaviruses and enteroviruses. The characteristics of these enteric viruses, along with their associated health effects, are discussed below and summarized in Table B.1 in Appendix B. Some potentially emerging enteric viruses are also discussed.

4.1 Noroviruses

Noroviruses are non-enveloped, single-stranded RNA viruses, 35–40 nm in diameter, belonging to the family Caliciviridae. Noroviruses are currently subdivided into seven genogroups (GI to GVII), which are composed of more than 40 distinct genotypes (CDC, 2013a; Vinjé, 2015). However, new norovirus variants continue to be identified; over 150 strains have been detected in sewage alone (Aw and Gin, 2010; Kitajima et al., 2012). Genogroups GI, GII and GIV contain the norovirus genotypes that are usually associated with human illnesses (Verhoef et al., 2015), with genogroup II noroviruses, specifically GII.4, accounting for over 90% of all sporadic cases of acute gastroenteritis in children (Hoa Tran et al., 2013).

Although most noroviruses appear to be host specific, there have been some reports of animals being infected with human noroviruses. GII variants, for example, have been isolated from farm animals (Mattison et al., 2007; Chao et al., 2012) and dogs (Summa et al., 2012), raising the question of whether norovirus transmission can occur between animals and humans. There have been no reports of animal noroviruses in humans, and other genogroups, such as GIII, GV and GVI, have been detected only in non-human hosts (Karst et al., 2003; Wolf et al., 2009; Mesquita et al., 2010).

Norovirus infections occur in infants, children and adults. The incubation period is 12–48 h (CDC, 2013a). Health effects associated with norovirus infections are self-limiting, typically lasting 24–48 h. Symptoms include nausea, vomiting, diarrhoea, abdominal pain and fever. In healthy individuals, the symptoms are generally highly unpleasant but are not considered life threatening. In vulnerable groups, such as the elderly, illness is considered more serious. Teunis et al. (2008) reported a low infectious dose (≥18 viral particles) for norovirus. However, Schmidt (2015) identified study limitations, and concluded that infectivity may be overestimated (see Section 8.3.1). Several studies have reported an inherent resistance in some individuals to infection with noroviruses. It is thought that these individuals may lack a cell surface receptor necessary for virus binding or may have a memory immune response that prevents infection (Hutson et al., 2003; Lindesmith et al., 2003; Cheetham et al., 2007). Immunity to norovirus infection seems to be short-lived, on the order of several months. However, a recent transmission model estimate suggests that immunity may last for years (Simmons et al., 2013).

Noroviruses are shed in both faecal matter and vomitus from infected individuals and can be transmitted through contaminated water. Infected persons can shed norovirus before they have symptoms, and for 2 weeks or more after symptoms disappear (Atmar et al., 2008; Aoki et al., 2010). Noroviruses are also easily spread by person-to-person contact. Many of the cases of norovirus gastroenteritis have been associated with groups of people living in a close environment, such as schools, recreational camps, institutions and cruise ships. Infections can also occur via ingestion of aerosolized particles (CDC, 2011; Repp and Keene, 2012). Infections show strong seasonality, peaking most commonly during the winter months (Ahmed et al., 2013).

4.2 Hepatitis viruses

To date, six types of hepatitis viruses have been identified (A, B, C, D, E and G), but only two types, hepatitis A (HAV) and hepatitis E (HEV), appear to be transmitted via the faecal–oral route and therefore associated with waterborne transmission. Although HAV and HEV can both result in the development of hepatitis, they are two distinct viruses.

4.2.1 Hepatitis A virus

HAV is a 27- to 32-nm non-enveloped, small, single-stranded RNA virus with an icosahedral symmetry. HAV belongs to the Picornaviridae family and was originally placed within the Enterovirus genus; however, because HAV has some unique genetic structural and replication properties, this virus has been placed into a new genus, Hepatovirus, of which it is the only member (Carter, 2005).

The incubation period of HAV infection is between 15 and 50 days, with an average of approximately 28 days (CDC, 2015a). The median dose for HAV is unknown, but is presumed to be low (i.e., 10–100 viral particles) (FDA, 2012). HAV infections, commonly known as infectious hepatitis, result in numerous symptoms, including fever, malaise (fatigue), anorexia, nausea and abdominal discomfort, followed within a few days by jaundice. HAV infection can also cause liver damage, resulting from the host’s immune response to the infection of the hepatocytes by HAV. In some cases, the liver damage can result in death.

Infection with HAV occurs in both children and adults. Illness resulting from HAV infection is usually self-limiting; however, the severity of the illness increases with age. For example, mild or no symptoms are seen in younger children (Yayli et al., 2002); however, in a study looking at HAV cases in persons over 50 years of age, a case fatality rate 6-fold higher than the average rate of 0.3% was observed (Fiore, 2004). The virus is excreted in the faeces of infected persons for up to 2 weeks before the development of hepatitis symptoms, leading to transmission via the faecal–oral route (Chin, 2000; Hollinger and Emerson, 2007; CDC, 2015a). HAV is also excreted in the urine of infected individuals (Giles et al., 1964; Hollinger and Emerson, 2007; Joshi et al., 2014). Convalescence may be prolonged (8–10 weeks), and in some HAV cases, individuals may experience relapses for up to 6 months (CDC, 2015a).

The highest incidence of HAV illness occurs in Asia, Africa, Latin America and the Middle East (Jacobsen and Wiersma, 2010). In Canada, the incidence of HAV has declined significantly since the introduction of the HAV vaccine in 1996 (PHAC, 2015a). Seroprevalence studies have reported a nationwide prevalence of 2% and 20% in unvaccinated Canadian-born children and adults, respectively (Pham et al., 2005; PHAC, 2015b). Non-travel related HAV is rare in Canada.

4.2.2 Hepatitis E virus

HEV is a non-enveloped virus with a diameter of 27–34 nm and a single-stranded polyadenylated RNA genome, belonging to the family Hepeviridae. Although most human enteric viruses do not have non-human reservoirs, HEV has been reported to be zoonotic (transmitted from animals to humans, with non-human natural reservoirs) (AWWA, 1999; Meng et al., 1999; Wu et al., 2000; Halbur et al., 2001; Smith et al., 2002; Smith et al., 2013, 2014). Human-infectious HEV strains are classified into four genotypes. Genotypes 1 and 2 are transmitted between humans, whereas genotypes 3 and 4 appear to be zoonotic (transmitted to humans from deer, pigs and wild boars) (Smith et al., 2014). These genotypes were further subdivided into at least 24 subtypes (Smith et al., 2013); however, this classification is under review (Smith et al., 2014).

HEV infection is clinically indistinguishable from HAV infection. Symptoms include malaise, anorexia, abdominal pain, arthralgia, fever and jaundice. The median dose for HEV is unknown. The incubation period for HEV varies from 15 to 60 days, with a mean of 42 days (CDC, 2015b). HEV infection usually resolves in 1–6 weeks after onset. Virions are shed in the faeces for a week or more after the onset of symptoms (Percival et al., 2004). The illness is most often reported in young to middle-aged adults (15–40 years old). The fatality rate is 0.5–3%, except in pregnant women, for whom the fatality rate can approach 20–25% (Matson, 2004). Illnesses associated with HEV are rare in developed countries, with most infections being linked to international travel.

4.3 Rotaviruses

Rotaviruses are non-enveloped, double-stranded RNA viruses approximately 70 nm in diameter, belonging to the family Reoviridae. These viruses have been divided into eight serological groups, A to H (Marthaler et al., 2012), three of which (A, B and C) infect humans. Group A rotaviruses are further divided into serotypes using characteristics of their outer surface proteins, VP7 and VP4. There are 28 types of VP7 (termed G types) and approximately 39 types of VP4 (P types), generating great antigenic diversity (Mijatovic-Rustempasic et al., 2015, 2016). Although most rotaviruses appear to be host specific, there is some research indicating the potential for their zoonotic transmission (Cook et al., 2004; Kang et al., 2005; Gabbay et al., 2008; Steyer et al., 2008; Banyai et al., 2009; Doro et al., 2015; Mijatovic-Rustempasic et al., 2015, 2016); however, it is thought to be rare, and likely does not lead to illness (CDC, 2015c).

In general, rotaviruses cause gastroenteritis, including vomiting and diarrhoea. Vomiting can occur for up to 48 h prior to the onset of diarrhoea. The severity of the gastroenteritis can range from mild, lasting for less than 24 h, to, in some instances, severe, which can be fatal. In young children, extra-intestinal manifestations, such as respiratory symptoms and seizures, can occur and are due to the infection being systemic rather than localized to the jejunal mucosa (Candy, 2007). The incubation period is generally less than 48 h (CDC, 2015c). The illness generally lasts between 5 and 8 days. The median infectious dose for rotavirus is 5.597 (Haas et al., 1999). The virus is shed in extremely high numbers from infected individuals, possibly as high as 10¹¹/g of stool (Doro et al., 2015). Some rotaviruses may also produce a toxin protein that can induce diarrhoea during virus cell contact (Ball et al., 1996; Zhang et al., 2000). This is unusual, as most viruses do not have toxin-like effects.

Group A rotavirus is endemic worldwide and is the most common and widespread rotavirus group; it is the main cause of acute diarrhoea (and related dehydration) in humans and several animal species (Estes and Greenberg, 2013). Infections are referred to as infantile diarrhoea, winter diarrhoea, acute non-bacterial infectious gastroenteritis and acute viral gastroenteritis. Children 6 months to 2 years of age, premature infants, the elderly and the immunocompromised are particularly prone to more severe symptoms caused by infection with group A rotavirus. Group A rotavirus is the leading cause of severe diarrhoea among infants and children and accounts for about half of the cases requiring hospitalization, usually from dehydration. In the United States, prior to the introduction of a rotavirus vaccine, approximately 3.5 million cases occurred each year (Glass et al., 1996). Asymptomatic infections can occur in adults, providing another means for the virus to be spread in the community. In temperate areas, illness associated with rotavirus occurs primarily in the cooler months, whereas in the tropics, it occurs throughout the year (Moe and Shirley, 1982; Nakajima et al., 2001; Estes and Kapikian, 2007). Illness associated with group B rotavirus, also called adult diarrhoea rotavirus, has been limited mainly to China, where outbreaks of severe diarrhoea affecting thousands of persons have been reported (Ramachandran et al., 1998). Group C rotavirus has been associated with rare and sporadic cases of diarrhoea in children in many countries and regions, including North America (Jiang et al., 1995). The first reported outbreaks occurred in Japan and England (Caul et al., 1990; Hamano et al., 1999).

4.4 Enteroviruses

The enteroviruses (EV) are a large group of (over 250) viruses belonging to the genus Enterovirus and the Picornaviridae family. They are among the smallest viruses: 20- to 30-nm non-enveloped particles with icosahedral symmetry and a single-stranded RNA genome. The genus Enterovirus consists of 12 species, of which seven have been associated with human illness: EV-A to EV-D and rhinovirus (RV)-A, B and C (Tapparel et al., 2013; Faleye et al., 2016; The Pirbright Institute, 2016). Further enterovirus serotypes continue to be identified.

The incubation period and the health effects associated with enterovirus infections are varied. The incubation period for enteroviruses ranges from 2 to 35 days, with a median of 7–14 days. Many enterovirus infections are asymptomatic. However, when symptoms are present, they can range in severity from mild to life threatening. Viraemia (i.e., passage in the bloodstream) often occurs, providing transport for enteroviruses to various target organs and resulting in a range of symptoms. Mild symptoms include fever, malaise, sore throat, vomiting, rash and upper respiratory tract illnesses. Acute gastroenteritis is less common. The most serious complications include meningitis, encephalitis, poliomyelitis, myocarditis and non-specific febrile illnesses of newborns and young infants (Rotbart, 1995; Roivainen et al., 1998). Other complications include myalgia, Guillain-Barré syndrome, hepatitis and conjunctivitis. Enteroviruses have also been implicated in the aetiology of chronic diseases, such as inflammatory myositis, dilated cardiomyopathy, amyotrophic lateral sclerosis, chronic fatigue syndrome and post-poliomyelitis muscular atrophy (Pallansch and Roos, 2007; Chia and Chia, 2008). There is also research supporting a link between enterovirus infection and the development of insulin-dependent (Type 1) diabetes mellitus (Nairn et al., 1999; Lönnrot et al., 2000; Latinen et al., 2014; Oikarinen et al., 2014). Although many enterovirus infections are asymptomatic, it is estimated that approximately 50% of coxsackievirus A infections and 80% of coxsackievirus B infections result in illness (Cherry, 1992). Coxsackievirus B has also been reported to be the non-polio enterovirus that has most often been associated with serious illness (Mena et al., 2003). Enterovirus infections are reported to peak in summer and early fall (Nwachuku and Gerba, 2006; Pallansch and Roos, 2007).

Enteroviruses are endemic worldwide, but few water-related outbreaks have been reported (Amvrosieva et al., 2001; Mena et al., 2003; Hauri et al., 2005; Sinclair et al., 2009). The large number of serotypes, the usually benign nature of the infections, and the fact that they are highly transmissible in a community by person-to-person contact likely mask the role that water plays in transmission (Lodder et al., 2015).

4.5 Adenoviruses

Adenoviruses are members of the Adenoviridae family. Members of this family include 70- to 100-nm non-enveloped icosahedral viruses containing double-stranded linear DNA. At present, there are seven recognised species (A to G) of human adenovirus, consisting of over 60 (sero)types (Robinson et al., 2013). The majority of waterborne isolates are types 40 and 41 (Mena and Gerba, 2009); however, other serotypes have also been isolated (Van Heerden et al., 2005; Jiang, 2006; Hartmann et al., 2013). The incubation period is from 3 to 10 days (Robinson et al., 2007).

Adenoviruses can cause a range of symptoms. Serotypes 40 and 41 are the cause of the majority of adenovirus-related gastroenteritis. Adenoviruses are a common cause of acute viral gastroenteritis in children (Nwachuku and Gerba, 2006). Infections are generally confined to children under 5 years of age (FSA, 2000; Lennon et al., 2007) and are rare in adults. Infection results in diarrhoea and vomiting which may last a week (PHAC, 2010).

The viral load in faeces of infected individuals is high (~10⁶ particles/g of faecal matter) (Jiang, 2006). This aids in transmission via the faecal–oral route, either through direct contact with contaminated objects or through recreational water and, potentially, drinking water. In the past, adenoviruses have been implicated in drinking water outbreaks, although they were not the main cause of the outbreaks (Kukkula et al., 1997; Divizia et al., 2004). Drinking water is not the main route of exposure to adenoviruses.

4.6 Astroviruses

Astroviruses are members of the Astroviridae family. Astroviruses are divided into eight serotypes (HAst1-8), and novel types continue to be discovered (Finkbeiner et al., 2009a,b; Kapoor et al., 2009; Jiang et al., 2013). Astroviruses comprise two genogroups (A and B) capable of infecting humans (Carter, 2005). Members of this family include 28- to 30-nm non-enveloped viruses containing a single-stranded RNA. Astrovirus infection typically results in diarrhoea lasting 2–3 days, with an initial incubation period of anywhere from 1 to 5 days (Lee et al., 2013). Infection generally results in milder diarrhoea than that caused by rotavirus and does not lead to significant dehydration. Other symptoms that have been recorded as a result of astrovirus infection include headache, malaise, nausea, vomiting and mild fever (Percival et al., 2004; Méndez and Arias, 2007). Serotypes 1 and 2 are commonly acquired during childhood (Palombo and Bishop, 1996). The other serotypes (4 and above) may not occur until adulthood (Carter, 2005). Outbreaks of astrovirus in adults are infrequent, but do occur (Oishi et al., 1994; Caul, 1996; Gray et al., 1997). Healthy individuals generally acquire good immunity to the disease, so reinfection is rare. Astrovirus infections generally peak during winter and spring (Gofti-Laroche et al., 2003).

4.7 Potential emerging viruses in drinking water

Sapoviruses were first identified in young children during a gastroenteritis outbreak in Sapporo, Japan (Chiba et al., 1979), and have become increasingly recognized as a cause of gastroenteritis outbreaks worldwide (Chiba et al., 2000; Farkas et al., 2004; Johansson et al., 2005; Blanton et al., 2006; Gallimore et al., 2006; Phan et al., 2006; Pang et al., 2009). Like noroviruses, they are members of the Caliciviridae family (Atmar and Estes, 2001). Sapoviruses have been detected in environmental waters, and raw and treated wastewaters in Japan (Hansman et al., 2007; Kitajima et al., 2010a), Spain (Sano et al., 2011) and Canada (Qiu et al., 2015). However, they have not been detected in drinking water (Sano et al., 2011).

Aichiviruses are members of the Picornaviridae family. Like sapoviruses, they were first identified in stool samples from patients with gastroenteritis in Japan (Yamashita et al., 1991). However, they have since been detected in the faeces of individuals from several countries, including France, Brazil and Finland (Reuter et al., 2011). Although aichivirus has been detected in raw and treated wastewater (Sdiri-Loulizi et al., 2010), very little is known about its occurrence in source waters.

Polyomaviruses are members of the Polyomaviridae family. This family includes a number of species that infect humans, including BK polyomavirus and JC polyomavirus. Although these viruses have been detected in environmental waters and sewage (Vaidya et al., 2002; Bofill-Mas and Girones, 2003; AWWA, 2006; Haramoto et al., 2010), their transmission through water has not yet been documented. Contaminated water as a possible route of transmission is supported by the fact that JC polyomavirus is also excreted in urine. Polyomaviruses have been associated with illnesses in immunocompromised individuals, such as gastroenteritis, respiratory illnesses and other more serious diseases, including cancer (AWWA, 2006).

It is important to note that new enteric viruses continue to be detected and recognized.

5.0 Sources and exposure

5.1 Sources

5.1.1 Sources of contamination

The main source of human enteric viruses in water is human faecal matter. Enteric viruses are excreted in large numbers in the faeces of infected persons (both symptomatic and asymptomatic). They are easily disseminated in the environment through faeces and are transmissible to other individuals via the faecal–oral route. Infected individuals can excrete over 1 trillion (10¹²) viruses/g of faeces (Bosch et al., 2008; Tu et al., 2008). The presence of these viruses in a human population is variable and reflects current epidemic and endemic conditions. Enteric virus concentrations have been reported to peak in sewage samples during the autumn/winter, suggesting a possibly higher endemic rate of illness during this time of year or better survival of enteric viruses at cold temperatures. Faecal contamination of water sources can occur through various routes, including wastewater treatment plant effluent, disposal of sanitary sewage or sludge on land, leaking sanitary sewers, septic system effluents and infiltration of surface water into groundwater aquifers (Vaughn et al., 1983; Bitton, 1999; Hurst et al., 2001; Powell et al., 2003; Borchardt et al., 2004; Bradbury et al., 2013). Some enteric viruses (e.g., HAV) can also be excreted in urine from infected individuals (see Section 4.7).

Human enteric viruses are commonly detected in raw and treated wastewater. Bradbury et al. (2013) reported sewage concentrations ranging from 1.3 × 10⁴ genomic copies (GC)/L to 3.6 × 10⁷ GC/L, with a mean concentration of 2.0 × 10⁶ GC/L. A recent Canadian study (Qiu et al., 2015) examined the presence of multiple human enteric viruses throughout the wastewater treatment process; mean concentrations in raw sewage ranged from 46 to 70 Genomic Equivalent copies/L for enterovirus and adenovirus, respectively. Despite a significant reduction in virus concentration throughout the wastewater treatment process, viruses were still detected in discharges (Qiu et al., 2015). These findings are consistent with those of others (Sedmak et al., 2005; He et al., 2011; Li et al., 2011; Simmons et al., 2011; Edge et al., 2013; Hata et al., 2013; Kitajima et al., 2014; Kiulia et al., 2015), and highlight the role that treated wastewater discharges may play in the contamination of surface waters.

Human enteric viruses can also survive septic system treatment (Hain and O’Brien, 1979; Vaughn et al., 1983). Scandura and Sobsey (1997) seeded enterovirus into four septic systems located in sandy soils. Viruses were detected in groundwater within one day of seeding and persisted for up to 59 days (the longest time studied); concentrations ranged from 8 to 908 plaque-forming units/L. The authors reported up to a 9 log reduction of viruses under optimum conditions (not specified) and extensive sewage-based contamination for systems with coarse sand and high water tables. Borchardt et al. (2011) measured norovirus in septic tank waste (79,600 GC/L) and in tap water (34 to 70 GC/L) during an outbreak investigation at a restaurant. The restaurant septic system and well both conformed to state building codes but were situated in a highly vulnerable hydrogeological setting (i.e., fractured dolomite aquifer). Tracer dye tests confirmed that septic system effluent travelled from the tank (through a leaking fitting) and infiltration field to the well in six and 15 days, respectively. Bremer and Harter (2012) conducted a probabilistic analysis to assess septic system impacts on private wells. The probability that wells were being recharged by septic system effluent was estimated to range from 0.6% for large lots (i.e., 20 acres) with low hydraulic conductivity to almost 100% for small lots (i.e., 0.5 acres) with high hydraulic conductivities. For one-acre lots, the probability ranged from 40% to 75% for low to medium hydraulic conductivities, respectively. Kozuskanich et al. (2014) assessed the vulnerability of a bedrock aquifer to pollution by septic systems for a village of 500 persons relying on on-site servicing and found sewage-based contamination of the groundwater to be ubiquitous. Morrissey et al. (2015) reported that the thickness of the subsoil beneath the septic system infiltration field is a critical factor influencing groundwater contamination.

Several occurrence studies have reported the presence of enteric viruses in a variety of water supplies relying on on-site services (i.e., private and semi-public wells and septic systems) (Banks et al., 2001; Banks and Battigelli, 2002; Lindsey et al., 2002; Borchardt et al., 2003; Francy et al., 2004; Allen, 2013). Banks et al. (2001) sampled 27 semi-public water supplies in a semi-confined sand aquifer and detected viruses in three wells (11%). Banks and Battigelli (2002) reported the presence of viruses in one of 90 semi-public water supplies in a confined crystalline rock aquifer using molecular methods; no wells tested virus-positive using cell culture methods. Lindsey et al. (2002) sampled 59 semi-public water supplies in various unconfined bedrock (54 wells) and unconfined sand-gravel (5 wells) aquifers and detected enteric viruses in 5 wells (8%) using cell culture methods. Borchardt et al. (2003) sampled 50 private wells in seven hydrogeologic districts, on a seasonal basis, over a one-year period. Viruses were detected, using molecular methods, in four wells (8%) that were in close proximity to a septic system; one well was located in a permeable sand-gravel aquifer, while the other three wells were located in fractured bedrock with minimal overburden cover. Francy et al. (2004) sampled 20 semi-public wells 5–6 times over a two-year period in southeastern Michigan in unconfined and confined sand and gravel aquifers. Enteric viruses were detected in 7 wells (35%) by either cell culture or molecular methods. The study also included sampling in urban areas; the authors noted that samples were more frequently virus-positive at sites served by septic systems than those with sanitary sewers.
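
The detection frequencies above rest on small numbers of wells, so the reported percentages carry wide uncertainty. As an illustration only, the sketch below computes an approximate 95% Wilson score interval for each study, treating each well as an independent binomial trial. That treatment, the choice of the Wilson interval and the function name are assumptions made for this example; the cited studies used different sampling designs and detection methods and did not report these intervals.

```python
import math


def wilson_interval(positives, n, z=1.96):
    """Wilson score interval for a binomial proportion (z=1.96 is roughly 95%)."""
    p = positives / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half, centre + half


# (study, virus-positive wells, wells sampled), as quoted in the text above
studies = [
    ("Banks et al. (2001)", 3, 27),
    ("Banks and Battigelli (2002)", 1, 90),
    ("Lindsey et al. (2002)", 5, 59),
    ("Borchardt et al. (2003)", 4, 50),
    ("Francy et al. (2004)", 7, 20),
]

for name, pos, n in studies:
    lo, hi = wilson_interval(pos, n)
    print(f"{name}: {pos}/{n} = {pos / n:.0%} "
          f"(approx. 95% interval {lo:.0%} to {hi:.0%})")
```

Intervals of this kind are only a rough guide to sampling uncertainty; they do not account for differences in detection method, sample volume or repeated sampling of the same well.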

Leaking sanitary sewers are also an important source of enteric viruses. Wells in areas underlain with a network of sanitary sewers are considered to be at increased risk of viral contamination due to leaking sanitary sewers (Powell et al., 2003). Borchardt et al. (2004) sampled four municipal wells in a sand and gravel aquifer on a monthly basis from March 2001 to February 2002 and determined that enteric viruses were more frequently detected in wells located in areas underlain with a network of sanitary sewers than those located in an area without sanitary sewers. Similar findings were reported by Borchardt et al. (2007) where two out of three municipal wells drawing water from a confined bedrock aquifer tested positive in seven of 20 samples using molecular methods. The virus-positive wells were located in an urban area with numerous sewer lines in proximity whereas the third well, which was open to both unconfined and confined aquifers but not located near a source of human faecal waste, was virus-negative throughout the study period. Bradbury et al. (2013) reported a temporal relationship between virus serotypes present in sewage and those in a confined aquifer suggesting very rapid transport, on the order of days to weeks, between sewers and groundwater systems. Hunt et al. (2014) attributed this to preferential pathways such as fractures in the aquitard, multi-aquifer wells and poorly grouted wells.

Animals can be a source of enteric viruses; however, the enteric viruses detected in animals generally do not cause illnesses in humans, although there are some exceptions. As mentioned above, one exception is HEV, which may have a non-human reservoir. To date, HEV has been an issue in developing countries, and therefore most of the information on HEV occurrence in water sources results from research in these countries. There is limited information on HEV presence in water and sewage in developed countries (Clemente-Casares et al., 2003; Kasorndorkbua et al., 2005). Recently, Gentry-Shields et al. (2015) reported the presence of HEV in a single surface water sample obtained from a location proximal to a swine concentrated animal feeding operation spray field in North Carolina, suggesting that these operations may be associated with the dissemination of HEV.

5.1.2 Presence in water

As noted above, enteric viruses can contaminate source water through a variety of routes. The following section details occurrence studies in surface water and groundwater, as well as in drinking water. It is important to note that the majority of these occurrence data were obtained through targeted studies, since source water and drinking water are not routinely monitored for enteric viruses, and may not be representative of the current situation. It is also important to consider that various detection methods were used (i.e., culture-based, molecular) (see Section 6.0), and that the infectivity of detected viruses was not always assessed. Given these varying study approaches, occurrence data cannot be readily compared.

Several studies have reported the presence of enteric viruses in surface waters around the world, including Canada (Sattar, 1978; Sekla et al., 1980; Payment et al., 1984, 2000; Raphael et al., 1985a,b; Payment, 1989, 1991, 1993; Payment and Franco, 1993; Pina et al., 1998, 2001; Sedmak et al., 2005; Van Heerden et al., 2005; EPCOR, 2010, 2011; Gibson and Schwab, 2011; Edge et al., 2013; Corsi et al., 2014; Pang et al., 2014). Table B.2 in Appendix B highlights a selection of enteric virus occurrence studies in Canadian and U.S. surface water sources. Enteric viruses appear to be highly prevalent in surface waters, and their occurrence exhibits significant temporal and spatial variability. This variability is largely a reflection of whether the pollution source is continuous or the result of a sudden influx of faecal contamination (see Section 5.5). Viral prevalence in surface water is also influenced by environmental factors, such as the amount of sunlight, temperature and predation (Lodder et al., 2010) (see Section 5.2.1).

Enteric viruses were detected in a variety of groundwater sources, using molecular and/or cell culture techniques, with prevalence rates ranging from less than 1% to 46% (Abbaszadegan et al., 1999, 2003; Banks et al., 2001; Banks and Battigelli, 2002; Lindsey et al., 2002; Borchardt et al., 2003; Fout et al., 2003; Francy et al., 2004; Locas et al., 2007, 2008; Hunt et al., 2010; Gibson and Schwab, 2011; Borchardt et al., 2012; Allen, 2013; Bradbury et al., 2013; Pang et al., 2014). Table B.3 in Appendix B highlights a selection of enteric virus occurrence studies for Canadian and U.S. groundwater sources. Viruses were detected in different aquifer types, including semi-public wells in a semi-confined sand aquifer (Banks et al., 2001) and confined crystalline rock aquifer (Banks and Battigelli, 2002), as well as deep municipal wells (220 – 300 m) in a confined sandstone/dolomite aquifer (Borchardt et al., 2007; Bradbury et al., 2013). Bradbury et al. (2013) reported that virus concentrations in deep municipal wells were generally as high as or higher than virus concentrations in lake water. In general, virus occurrence in groundwater can be characterized as transient, intermittent or ephemeral, because wells are often not virus-positive for two sequential samples and the detection frequency is low on a per sample basis (Borchardt et al., 2003; Allen, 2013).

In targeted studies in the U.S., enteric viruses have been detected in drinking waters, including UV disinfected groundwaters (Borchardt et al., 2012; Lambertini et al., 2011). Table B.4 in Appendix B highlights some of these studies. Borchardt et al. (2012) reported the presence of enteric viruses in almost 25% of the over 1,200 tap water samples analyzed from 14 communities relying on untreated groundwater. Adenovirus was the most prevalent (157/1,204) virus detected, although it was found at concentrations one to two orders of magnitude lower than norovirus and enterovirus. Enterovirus was the virus found at the highest concentration, with a mean and maximum concentration of 0.8 GC/L and 851 GC/L, respectively. The authors were able to show an association between the mean concentration of all viruses and acute gastrointestinal illness (AGI) in the community (see Section 5.4.1). In a companion study, Lambertini et al. (2011) showed that enteric viruses can enter into distribution systems through common events (e.g., pipe installation). Enteroviruses, noroviruses GI and GII, adenovirus, rotavirus and hepatitis A virus were enumerated at the wellhead, post-UV disinfection (minimum dose = 50 mJ/cm²) and in household taps. Viruses were detected in 10.1% of post-UV disinfection samples (95th percentile virus concentration ≤ 1.1 GC/L). In contrast, viruses were detected in 20.3% of household tap samples (95th percentile virus concentration ≤ 8.0 GC/L). This increase in virus detection and concentration between UV disinfection and household taps was attributed to viruses directly entering the distribution system (see Section 5.4.1). Previous studies conducted in Canada did not detect enteric viruses in treated water (Payment and Franco, 1993; Payment et al., 1984).

5.2 Survival

As noted above, viruses cannot replicate outside their host’s tissues and therefore cannot multiply in the environment. However, they can survive for extended periods of time (i.e., 2 to 3 years; Banks et al., 2001; Cherry et al., 2006) and can be transported over long distances (as indicated below; Keswick and Gerba, 1980).

Virus survival is affected by the amount of time it takes for a virus to lose its ability to infect host cells (i.e., inactivation process) and the rate at which a virus permanently attaches, or adsorbs, to soil particles (Gerba, 1984; Yates et al., 1985, 1987, 1990; Yates and Yates, 1988; Bales et al., 1989, 1991, 1997; Schijven and Hassanizadeh, 2000; John and Rose, 2005). Both processes are virus-specific (Goyal and Gerba, 1979; Sobsey et al., 1986) and generally independent of each other (Yates et al., 1987; Schijven and Hassanizadeh, 2000; John and Rose, 2005). Although virus concentrations are known to decay in the environment, the inactivation and adsorption processes are very complex and not well understood (Schijven and Hassanizadeh, 2000; Azadpour-Keeley et al., 2003; Gordon and Toze, 2003; Johnson et al., 2011a; Hunt et al., 2014; Bellou et al., 2015). One of the major challenges is that viruses are colloidal particles that can move as independently suspended particles or by attaching to other non-living colloidal particles such as clay or organic macromolecules (Robertson and Edberg, 1997). Another issue is that decay rates are not always linear (Pang, 2009). The decay rate of the more resistant viruses has been observed to decline with time (Page et al., 2010). Adsorption is reported to be the dominant process for groundwater sources (Gerba, 1984; Schijven and Hassanizadeh, 2000; Schijven et al., 2006), but also plays an important role in river and lake bank filtration (refer to Section 7.1.2) (Schijven et al., 1998; Harvey et al., 2015).

Many of the studies evaluating virus inactivation rates and/or adsorption characteristics, including those discussed below, have used surrogates. Surrogates can comprise an organism, particle or substance that is used to study the fate of a pathogen in a natural environment (i.e., inactivation or adsorption processes) or in a treatment environment (i.e., filtration or disinfection processes) (Sinclair et al., 2012). Bacteriophages or coliphages have been suggested as surrogates for viruses (Stetlar, 1984; Havelaar, 1987; Payment and Franco, 1993). For example, bacteriophage PRD1 and coliphage MS2 are similar in shape and size to rotavirus and poliovirus, respectively (Azadpour-Keeley et al., 2003). Both survive for long periods of time and have a low tendency for adsorption (Yates et al., 1985). In contrast, LeClerc et al. (2000) reported numerous shortcomings regarding the use of bacteriophages or coliphages as viral surrogates. Since inactivation and adsorption vary significantly by virus type, it is generally accepted that no single virus or surrogate can be used to describe the characteristics of all enteroviruses. The use of cell culture or molecular methods (see Section 6.1.2) also confounds the interpretation of results (de Roda Husman et al., 2009). One solution is to use a range of sewage-derived microorganisms (Schijven and Hassanizadeh, 2000). Sinclair et al. (2012) describe a process to select a representative surrogate(s) for natural or engineered systems.

5.2.1 Inactivation in the environment

Viruses are inactivated by disruptions to their coat proteins and degradation of their nucleic acids. Critical reviews of the factors influencing virus inactivation indicate that the most important factors include temperature, adsorption to particulate matter and microbial activity (Schijven and Hassanizadeh, 2000; Gordon and Toze, 2003; John and Rose, 2005).

In general, as temperature increases, virus inactivation increases; however, this trend occurs mainly at temperatures greater than 20°C (John and Rose, 2005). Poliovirus incubated in preservative medium was reduced by 2 log after 1,022 days at 4°C versus 4 log reduction after 200 days at 22°C (de Roda Husman et al., 2009). Laboratory experiments have demonstrated the long-term infectivity of select viruses in groundwater stored in the dark as follows: rotavirus up to seven months (the longest time studied) and human astrovirus at least 120 days, both stored at 15°C (Espinosa et al., 2008); poliovirus and coxsackievirus for at least 350 days at 4°C (de Roda Husman et al., 2009); adenovirus for 364 days at 12°C (Charles et al., 2009); and norovirus for at least 61 days at 12°C (Seitz et al., 2011). Viral genomes can be detected for significantly longer periods, namely: at least 672 days for adenovirus stored at 12°C (Charles et al., 2009) and at least 1,266 days for norovirus stored at room temperature (Seitz et al., 2011).
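
To make the temperature effect concrete, the poliovirus figures above can be converted to average decay rates. The following is a minimal sketch that assumes log-linear (first-order) decay over the whole observation period, which is a simplification, since decay rates are not always linear (Pang, 2009). The function names are illustrative and do not come from the cited studies.

```python
def log10_decay_rate(log_reduction, days):
    """Average decay rate in log10 units per day, assuming log-linear decay."""
    return log_reduction / days


def days_for_reduction(target_log_reduction, rate_per_day):
    """Days needed to reach a target log10 reduction at a constant rate."""
    return target_log_reduction / rate_per_day


# Poliovirus in preservative medium (de Roda Husman et al., 2009), as quoted above
rate_4c = log10_decay_rate(2, 1022)   # 2 log after 1,022 days at 4 °C
rate_22c = log10_decay_rate(4, 200)   # 4 log after 200 days at 22 °C

print(f"4 °C:  {rate_4c:.4f} log10/day")
print(f"22 °C: {rate_22c:.4f} log10/day")
print(f"Rate ratio (22 °C / 4 °C): {rate_22c / rate_4c:.1f}")
print(f"Days for a 4 log reduction at 4 °C: {days_for_reduction(4, rate_4c):.0f}")
```

Under this assumption, the average rate at 22°C is roughly ten times the rate at 4°C, and a 4 log reduction at 4°C would take on the order of 2,000 days.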

Gerba (1984) reported that viruses associated with particulate matter generally persist for a longer period of time. This effect is influenced by the virus type and nature of the particulate matter. Clay particles are particularly effective at protecting viruses from natural decay (Carlson et al., 1968; Sobsey et al., 1986). Some types of organic matter (i.e., proteins) are also reported to better protect viruses from inactivation (Gordon and Toze, 2003).

Herrmann et al. (1974) reported that viruses are inactivated faster in the presence of indigenous microflora, particularly proteolytic bacteria such as Pseudomonas aeruginosa. The authors observed a 5 log reduction of coxsackievirus and poliovirus in a natural lake after 9 and 21 days, respectively. In contrast, less than a 2 log reduction was observed for both viruses, over the same time frame, in sterile lake water. Gordon and Toze (2003) found that the presence of indigenous microflora was the main reason for virus inactivation in groundwater. No decay of poliovirus was observed in sterile groundwater at 15°C whereas 1 log reduction occurred after 5 days in non-sterile groundwater; for coxsackievirus, 1 log reduction was observed after 528 and 10.5 days for sterile and non-sterile groundwater, respectively.

Viruses tend to survive longer in groundwater due to the lower temperature, protection from sunlight and less microbial activity (Keswick et al., 1982; John and Rose, 2005). Banks et al. (2001) indicate that a conservative estimate for virus survival in groundwater is three years, whereas Cherry et al. (2006) indicate that a reasonable estimate is one to two years. Hunt et al. (2014) state that the presence of viral genomes in groundwater demonstrates travel times in aquifers of two to three years between the faecal contamination source and the well. Virus concentrations in surface water have been observed to vary seasonally with higher concentrations at lower temperatures. Schijven et al. (2013) suggest this may be linked to lower biological activity due to the lower temperature.

5.2.2 Adsorption and migration

Considerable research has been conducted to study the mechanisms of the adsorption process (Carlson et al., 1968; Bitton, 1975; Duboise et al., 1976; Goyal and Gerba, 1979; Keswick and Gerba, 1980; Gerba et al., 1981; Vaughn et al., 1981; Gerba, 1984; Gerba and Bitton, 1984; Yates et al., 1987; Yates and Yates, 1988; Bales et al., 1989, 1991, 1995, 1997; Powelson et al., 1991; Rossi et al., 1994; Song and Elimelech, 1994; Loveland et al., 1996; Pieper et al., 1997; Sinton et al., 1997; DeBorde et al., 1998, 1999; Ryan et al., 1999; Schijven et al., 1999, 2002; Schijven and Hassanizadeh, 2000; Woessner et al., 2001; Borchardt et al., 2004; Pang et al., 2005; Michen and Graule, 2010; Bradbury et al., 2013; Harvey et al., 2015). The adsorption process in subsurface environments is primarily controlled by electrostatic and hydrophobic interactions (Bitton, 1975; Gerba, 1984). The hydrologic properties of the aquifer, the surface properties of the virus as a function of water chemistry, and the physical and chemical properties of the individual soil particles (DeBorde et al., 1999) all play a part in adsorption dynamics.

In general, virus adsorption is favoured by low pH and high ionic strength, conditions that reduce the electrostatic repulsive forces between the virus and soil particle (Bitton, 1975; Duboise et al., 1976; Gerba, 1984). Positively charged mineral phases (e.g., iron, aluminum or manganese oxides) promote virus adsorption because most viruses are negatively charged in natural waters (Bitton, 1975; Goyal and Gerba, 1979; Keswick and Gerba, 1980). Clay particles also provide strong positively charged bonding sites and significantly increase the surface area available for virus adsorption (Carlson et al., 1968). In contrast, clay soils are susceptible to shrinking and cracking which allows fractures to form thereby allowing rapid transport of viruses (Pang, 2009). The presence of organic matter is believed to be responsible for many of the uncertainties in the adsorption process (Schijven and Hassanizadeh, 2000). Organic matter can both disrupt hydrophobic interactions and provide hydrophobic adsorption sites, depending on the combination of soil and virus type (Gerba, 1984; Schijven and Hassanizadeh, 2000). Humic substances, for example, are negatively charged like viruses and, therefore, compete for the same adsorption sites as the viruses (Powelson et al., 1991; Pieper et al., 1997).

Hydraulic conditions also play an important role in virus adsorption (Berger, 1994; Azadpour-Keeley et al., 2003). The groundwater velocity must be slow enough to allow viruses to contact and stick to the soil particle; otherwise the virus stays in the water and is transported down gradient. Several researchers have reported that viruses can travel significant distances in short timeframes, through preferential pathways, due to pore size exclusion. This phenomenon means that particles, such as viruses, are transported faster than the average groundwater velocity because they are forced to travel through larger pore sizes where velocities are higher (Bales et al. 1989; Sinton et al., 1997; Berger, 1994; DeBorde et al., 1999; Cherry et al., 2006; Bradbury et al., 2013; Hunt et al., 2014). Well pumping conditions may also create significant hydraulic gradients and groundwater velocities. Bradbury et al. (2013) reported virus transport on the order of weeks from a contaminant source to municipal wells that were 220 to 300 m deep. Using a dye test, Levison and Novakowski (2012) reported that in wells located in fractured bedrock with minimal overburden cover, solute breakthrough occurred within 4 hours at depths between 19 and 35 metres. It is clear that rapid transport of solutes, and by extension, viruses, occurs in fractured bedrock with minimal overburden cover.

In general, the adsorption process does not inactivate viruses, and adsorption is a reversible process (Carlson et al., 1968; Bitton, 1975). Since virus-soil interactions are very sensitive to surface charge, any water quality change that is sufficient to cause a charge reversal will result in the desorption of potentially infectious viruses (Song and Elimelech, 1994; Pieper et al., 1997). Water quality changes that can result in desorption include an increase in pH, a decrease in ionic strength, and the presence of sufficient organic matter (Carlson et al., 1968; Duboise et al., 1976; Bales et al., 1993; Loveland et al., 1996). For example, when alkaline septic effluent mixes with groundwater, the increased pH allows rapid transport of viruses, especially under saturated flow conditions (Scandura and Sobsey, 1997). Rainfall recharge after a storm may decrease ionic strength and cause viruses to desorb and be transported down gradient; desorbed infectious viruses can thus continue to contaminate water sources long after the initial contamination event (Sobsey et al., 1986; DeBorde et al., 1999) (see Section 5.5). Organic matter reduces the capacity of subsurface media to adsorb pathogens by binding to available adsorption sites, thereby preventing the adsorption of pathogens (Pang, 2009).

The published literature reports a significant range in virus transport distances (U.S. EPA, 2006d). Transport distances of ca. 400 m have been reported for sand and gravel aquifers while the furthest distance (1,600 m) was observed in a karst formation. Water supply wells in karst and fractured bedrock aquifers are considered highly vulnerable to contamination because groundwater flow and pathogen transport can be extremely rapid, on the order of hours (Amundson et al., 1988; Scandura and Sobsey, 1997; Powell et al., 2003; Borchardt et al., 2011; Levison and Novakowski, 2012; Kozuskanich et al., 2014). Management of groundwater resources in karst and fractured bedrock should not be conducted in the same way as sand and gravel aquifers (Crowe et al., 2003).

5.3 Exposure

Enteric viruses are transmitted via the faecal–oral route. Vehicles for transmission can include water, food (particularly shellfish and salads), fomites (inanimate objects, such as door handles that, when contaminated with an infectious virion, facilitate transfer of the pathogen to a host) and person-to-person contact. Enteric viruses can also be spread via aerosols. Norovirus, for example, becomes aerosolized during vomiting, and can result in the release of as many as 30 million viruses in a single episode of vomiting (Caul, 1994; Marks et al., 2000; Marks et al., 2003; Lopman et al., 2012; Tung-Thompson et al., 2015). Poor hygiene is also a contributing factor to the spread of enteric viruses. In addition, the high incidence of rotavirus infections, particularly in young children, has suggested to some investigators that rotavirus may also be spread by the respiratory route (Kapikian and Chanock, 1996; Chin, 2000). For many of the enteric viruses discussed above, outbreaks have occurred both by person-to-person transmission and by common sources, involving contaminated foods, contaminated drinking water supplies or recreational water.

5.4 Waterborne illness

As noted in Section 4.0, certain serotypes and/or genotypes of enteric viruses are most commonly associated with human illness. In the case of noroviruses, genogroups GI, GII and GIV are associated with human illness, and infections usually peak in the winter. Group A rotavirus is endemic worldwide and is the most common and widespread rotavirus group, whereas group B rotavirus is found mainly in China. Group C rotavirus has been associated with rare and sporadic cases of diarrhoea in children in many countries, including in North America (Jiang et al., 1995). In the case of enteroviruses, several human infectious types have been implicated in human illness. Enterovirus infections are reported to peak in summer and early fall (Nwachuku and Gerba, 2006; Pallansch and Roos, 2007). Adenovirus serotypes 40 and 41 are the cause of the majority of adenovirus-related gastroenteritis. Both genogroups A and B of astroviruses are associated with human illness (Carter, 2005), and infections peak in the winter and spring.

Exposure to enteric viruses through water can result in both an endemic rate of illness in the population and waterborne disease outbreaks.

5.4.1 Endemic illness

The estimated burden of endemic acute gastrointestinal illness (AGI) annually in Canada, from all sources (i.e., food, water, animals, person-to-person), is 20.5 million cases (0.63 cases/person-year) (Thomas et al., 2013). Approximately 1.7% (334,966) of these cases, or 0.015 cases/person-year, are estimated to be associated with the consumption of tap water from municipal systems that serve >1000 people in Canada (Murphy et al., 2016a). Over 29 million (84% of) Canadians rely on these systems: 73% (approximately 25 million) on a surface water source, 1% (0.4 million) on a groundwater under the direct influence of surface water (GUDI) supply, and the remaining 10% (3.3 million) on a groundwater source (Statistics Canada, 2013a,b). Murphy et al. (2016a) estimated that systems relying on surface water sources treated only with chlorine or chlorine dioxide, or GUDI sources with no or minimal treatment, or groundwater sources with no treatment, accounted for the majority of the burden of AGI (0.047 cases/person-year). In contrast, an estimated 0.007 cases/person-year were associated with systems relying on lightly impacted source waters with multiple treatment barriers in place. The authors also estimated that over 35% of the 334,966 AGI cases were attributable to the distribution system.

An estimated 103,230 (0.51% of total) AGI cases per year, or 0.003 cases/person-year, are due to the presence of pathogens in drinking water from private and small community water systems in Canada (Murphy et al., 2016b). Private wells accounted for over 75% (78,073) of these estimated cases, or 0.027 cases/person-year. Small community water systems relying on groundwater accounted for an additional 13,034 estimated AGI cases per year, with the highest incidence, 0.027 cases/person-year, amongst systems without treatment. In contrast, small community water systems relying on surface water sources accounted for 12,123 estimated annual cases of AGI, with the highest incidence, 0.098 cases/person-year, noted for systems without treatment. The authors estimated that the majority of these predicted AGI cases are attributable to norovirus. More specifically, of the 78,073 estimated cases of AGI/year resulting from consumption of drinking water from untreated private wells in Canada, norovirus is estimated to be responsible for over 70% of symptomatic cases (i.e., 55,558). As with private wells, norovirus was responsible for the vast majority of estimated cases associated with consumption of drinking water from small groundwater community systems (10,869 cases, 83%) and small surface water community systems (9,003 cases, >74%). Overall, these estimates suggest that Canadians served by untreated or inadequately treated small surface water supplies are at greatest risk of exposure to pathogens, particularly norovirus, and, as a result, at greater risk of developing waterborne AGI.
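The breakdown above can be cross-checked with a few lines of arithmetic. The following Python sketch is illustrative only: it uses the case counts quoted in this section (Murphy et al., 2016b), while the dictionary keys and variable names are hypothetical labels introduced for the example.

```python
# Estimated annual AGI cases by system type, as quoted in the text
# (Murphy et al., 2016b). Keys are illustrative labels only.
cases = {
    "private wells": 78_073,
    "small groundwater systems": 13_034,
    "small surface water systems": 12_123,
}

# Estimated annual cases attributed to norovirus, as quoted in the text.
norovirus_cases = {
    "private wells": 55_558,
    "small groundwater systems": 10_869,
    "small surface water systems": 9_003,
}

# Total across the three system types.
total_cases = sum(cases.values())

# Share of the total contributed by each system type (percent).
share_of_total = {k: 100 * v / total_cases for k, v in cases.items()}

# Share of each system type's cases attributed to norovirus (percent).
norovirus_share = {k: 100 * norovirus_cases[k] / cases[k] for k in cases}

print(f"Total estimated cases: {total_cases:,}")
for system in cases:
    print(
        f"{system}: {share_of_total[system]:.1f}% of total, "
        f"{norovirus_share[system]:.1f}% attributed to norovirus"
    )
```

Under these inputs, the three categories sum to the stated total of 103,230 cases, and the computed shares agree with the rounded percentages reported in the text.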

Studies have shown the presence of enteric viruses in a variety of groundwater sources (see Section 5.1.2); however, little is known regarding the contribution of these viruses to waterborne illness in the community. Borchardt et al. (2012) estimated the AGI incidence in 14 communities, serving 1,300 to 8,300 people, supplied by untreated groundwater. Tap water samples were tested for the presence of adenovirus, enterovirus and norovirus (see Section 5.1.2), and AGI symptoms were recorded in health diaries by households. Over 1,800 AGI episodes and 394,057 person-days of follow-up were reported. The AGI incidence for all ages was 1.71 episodes/person-year; children ≤ 5 had the highest incidence (2.66 episodes/person-year). Borchardt et al. (2012) determined that three summary measures of virus contamination were associated with AGI incidence: mean concentration, maximum concentration, and proportion of positive samples. These associations were particularly strong for norovirus; adenovirus exposure was not positively associated with AGI. In an attempt to further characterize the relationship between virus presence and enteric illness, the authors estimated the fraction of AGI attributable to the viruses present in the communities’ tap water, using quantitative microbial risk assessment (QMRA) (see Section 8.0). They determined that between 6 and 22% of the AGI in these communities was attributable to enteric viruses (Borchardt et al., 2012).
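As a rough illustration of how an incidence rate of this kind relates to episode counts and follow-up time, the Python sketch below converts person-days to person-years and divides. It is not the method used by Borchardt et al. (2012). The 365.25 days/year convention and the function name are assumptions made for the example, and because the text gives only "over 1,800" episodes, the first result is a lower bound.

```python
DAYS_PER_YEAR = 365.25  # assumed convention; the study's convention is not stated here


def incidence_per_person_year(episodes, person_days, days_per_year=DAYS_PER_YEAR):
    """Return episodes per person-year given a follow-up time in person-days."""
    person_years = person_days / days_per_year
    return episodes / person_years


# Figures quoted in the text.
person_days_followed = 394_057
reported_incidence = 1.71  # episodes/person-year, all ages

# "Over 1,800" episodes: using 1,800 gives a lower bound on the incidence.
lower_bound_rate = incidence_per_person_year(1800, person_days_followed)

# Back-calculate the episode count implied by the reported incidence.
implied_episodes = reported_incidence * person_days_followed / DAYS_PER_YEAR

print(f"Lower-bound incidence: {lower_bound_rate:.2f} episodes/person-year")
print(f"Episodes implied by the reported incidence: {implied_episodes:.0f}")
```

Under these assumptions, the lower bound falls slightly below the reported 1.71 episodes/person-year, and the implied episode count is somewhat above 1,800, which is consistent with the wording in the text.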

Lambertini et al. (2011, 2012) estimated the risk of AGI due to virus contamination of the distribution system in the same 14 municipal groundwater systems studied by Borchardt et al. (2012). In their study, UV disinfection was implemented (without a chlorine residual). Enteric viruses were enumerated at the wellhead, post-UV disinfection (minimum dose = 50 mJ/cm²) and in household taps. The authors observed an increase in virus detection and concentration between UV disinfection and household taps, and attributed this finding to viruses entering the distribution system. The AGI risk from distribution system contamination was calculated and ranged from 0.0180 to 0.0611 episodes/person-year.

5.4.2 Outbreaks

Waterborne outbreaks caused by enteric viruses have been reported in Canada and worldwide (Häfliger et al., 2000; Boccia et al., 2002; Parshionikar et al., 2003; Hoebe et al., 2004; Nygard et al., 2004; Kim et al., 2005; Yoder et al., 2008; Larsson et al., 2014). Some of these outbreaks are detailed in Table B.5 in Appendix B. The true prevalence of outbreaks is unknown, primarily because of under-reporting and under-diagnosis. Comprehensive outbreak surveillance and response systems are essential to our understanding of these outbreaks.

Norovirus is one of the most commonly reported enteric viruses in North America and worldwide. Outbreak-related norovirus infection became a nationally reportable disease in Canada in 2007 (PHAC, 2015a). However, source attribution information is not available; it is therefore unclear how many reported cases are attributable to water.

In Canada, between 1974 and 2001, there were 24 reported outbreaks and 1,382 confirmed cases of waterborne illness caused by enteric viruses (Schuster et al., 2005). Ten of these outbreaks were attributed to HAV, 12 were attributed to noroviruses and 2 to rotaviruses (O’Neil et al., 1985; Health and Welfare Canada, 1990; Health Canada, 1994, 1996; INSPQ, 1994, 1998, 2001; Boettger, 1995; Beller et al., 1997; De Serres et al., 1999; Todd, 1974-2001; BC Provincial Health Officer, 2001). There were also 138 outbreaks of unknown aetiology, a portion of which could be the result of enteric viruses, and a single outbreak that involved multiple viral pathogens. Of the 10 reported outbreaks attributed to waterborne HAV, 4 were due to contamination of public drinking water supplies, 2 were the result of contamination of semi-public supplies (see Footnote 2) and the remaining 4 were due to contamination of private water supplies. Only 4 of the reported 12 waterborne outbreaks of norovirus infections in Canada occurred in public water supplies, and the remainder were attributed to semi-public supplies. Both rotavirus outbreaks arose from contamination of semi-public drinking water supplies. Contamination of source waters from human sewage and inadequate treatment (e.g., surface waters having poor or no filtration, relying solely on chlorination) were identified as the major contributing factors (Schuster et al., 2005). Weather events tended to exacerbate these issues. No Canadian waterborne viral outbreaks have been reported since 2001.

In the United States, between 1991 and 2002, 15 outbreaks and 3,487 confirmed cases of waterborne viral illness were reported. Of these, 12 outbreaks and 3,361 cases were attributed to noroviruses, 1 outbreak and 70 cases were attributed to “small round-structured virus” and 2 outbreaks and 56 cases were attributable to HAV (Craun et al., 2006). During this period, 77 outbreaks resulting in 16,036 cases of unknown aetiology were also reported. It is likely that enteric viruses were responsible for a significant portion of these outbreaks (Craun et al., 2006). Between 2003 and 2012, the U.S. Centers for Disease Control and Prevention (CDC) reported 138 infectious disease outbreaks associated with consumption of drinking water, accounting for 8,142 cases of illness (Blackburn et al., 2004; Liang et al., 2006; Yoder et al., 2008; Brunkard et al., 2011; CDC, 2013b, 2015d). Enteric viruses were identified as the single causative agent in 13 (9.4%) of these outbreaks, resulting in 743 cases of illness. Norovirus was responsible for 10 (of 13) outbreaks, while HAV was implicated in the remainder. Norovirus was also identified in three mixed outbreaks (i.e., those involving multiple causative agents). These outbreaks were associated with 1,818 cases of illness. The vast majority of viral outbreaks were attributed to the consumption of untreated or inadequately treated groundwater, as reported by others (Hynds et al., 2014a,b; Wallender et al., 2014).
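The U.S. outbreak tallies in the preceding paragraph can be checked in the same way. The Python sketch below is a minimal consistency check using only the figures quoted in the text; the data structure and variable names are illustrative assumptions.

```python
# 1991-2002 U.S. waterborne viral outbreaks as (outbreaks, cases),
# as quoted in the text (Craun et al., 2006).
outbreaks_1991_2002 = {
    "norovirus": (12, 3361),
    "small round-structured virus": (1, 70),
    "HAV": (2, 56),
}

# Sum outbreaks and cases across the three agents.
total_outbreaks = sum(n for n, _ in outbreaks_1991_2002.values())
total_viral_cases = sum(c for _, c in outbreaks_1991_2002.values())

# 2003-2012 CDC-reported drinking water outbreaks, as quoted in the text.
all_outbreaks_2003_2012 = 138
viral_outbreaks_2003_2012 = 13
norovirus_outbreaks_2003_2012 = 10

# Percentage of all outbreaks with an enteric virus as the single causative agent.
viral_pct = 100 * viral_outbreaks_2003_2012 / all_outbreaks_2003_2012

# "The remainder" of the 13 viral outbreaks, which the text attributes to HAV.
hav_outbreaks_2003_2012 = viral_outbreaks_2003_2012 - norovirus_outbreaks_2003_2012

print(f"1991-2002: {total_outbreaks} outbreaks, {total_viral_cases:,} cases")
print(f"2003-2012: {viral_pct:.1f}% viral, {hav_outbreaks_2003_2012} attributed to HAV")
```

With these inputs, the per-agent counts add up to the stated totals of 15 outbreaks and 3,487 cases, and 13 of 138 outbreaks corresponds to the reported 9.4%.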

Waterborne outbreaks of noroviruses are common worldwide (Brugha et al., 1999; Brown et al., 2001; Boccia et al., 2002; Anderson et al., 2003; Carrique-Mas et al., 2003; Maunula et al., 2005; Hewitt et al., 2007; Gunnarsdóttir et al., 2013; Giammanco et al., 2014). HAV outbreaks also occur throughout the world (De Serres et al., 1999; Hellmer et al., 2014). Groundwater sources are frequently associated with international outbreaks of noroviruses and HAV (Häfliger et al., 2000; Maurer and Stürchler, 2000; Parshionikar et al., 2003). Major waterborne epidemics of HEV have occurred in developing countries (Guthmann et al., 2006), but none have been reported in Canada or the United States (Purcell, 1996; Chin, 2000). Astroviruses and adenoviruses have also been implicated in drinking water outbreaks, although they were not the main cause of the outbreaks (Kukkula et al., 1997; Divizia et al., 2004).

5.5 Impact of environmental conditions

The concentration of viruses in a water source is influenced by numerous environmental conditions and processes, many of which are not well characterized or are not transferable between watersheds. Environmental conditions that may cause water quality variations include precipitation, snowmelt, drought, upstream incidents (for surface water), farming and wildlife (Dechesne and Soyeux, 2007).