Guidelines for Canadian drinking water quality: Iron


Organization: Health Canada

Published: 2023

Iron

Guideline Technical Document

for Public Consultation

Consultation period ends

November 28, 2023

Purpose of consultation

This guideline technical document evaluated the available information on iron with the intent of updating the guideline value for iron in drinking water. The purpose of this consultation is to solicit comments on the proposed guideline, on the approach used for its development, and on the potential impacts of implementing it. The existing guideline technical document on iron in drinking water, developed in 1978, recommended an aesthetic objective (AO) of ≤ 0.3 mg/L (300 µg/L). A health-based guideline was not established at that time, as there was no consistent, convincing evidence that iron in drinking water could cause adverse health effects in humans. The review of the literature confirms there is still no need for a health-based guideline. This document proposes an AO of ≤ 0.1 mg/L (100 µg/L) for total iron in drinking water based on aesthetic and treatment considerations. The document was reviewed by external experts and subsequently revised.

This document is available for a 60-day public consultation period. Please send comments (with rationale, where required) to Health Canada via email at water-eau@hc-sc.gc.ca.

If email is not feasible, comments may be sent by postal mail to this address:

Water and Air Quality Bureau

Health Canada

269 Laurier Ave West, A.L. 4903D

Ottawa ON K1A 0K9

All comments must be received before November 28, 2023. Comments received as part of this consultation will be shared with members of the Federal-Provincial-Territorial Committee on Drinking Water (CDW), along with the name and affiliation of their author. Authors who do not want their name and affiliation shared with CDW members should provide a statement to this effect along with their comments.

It should be noted that this guideline technical document may be revised following the evaluation of comments received, and a drinking water guideline will be established, if required. This document should be considered as a draft for comment only.

Proposed guideline

An aesthetic objective (AO) of ≤ 0.1 mg/L (100 µg/L) is proposed for total iron in drinking water.

Executive summary

This guideline technical document was prepared in collaboration with the Federal-Provincial-Territorial Committee on Drinking Water and assesses all available information on iron.

Exposure

Iron is a ubiquitous metal that enters the environment from both natural sources and human activities. It occurs mostly in the form of organic and inorganic compounds and, to a lesser extent, in its metallic form. Iron is used mainly for steel production and industrial, commercial and consumer product applications, such as water mains, batteries, pesticides, fertilizers, cosmetics, food additives and multivitamin supplements.

Canadians are exposed to iron mainly through food, and to a lesser extent via drinking water, principally because of corrosion in the distribution system. Exposure through drinking water contributes less than 10% of the total daily iron intake. In most Canadian sources of water, the median iron concentration is below 1 mg/L. Higher concentrations are typically found in groundwater. The iron content in treated water entering the distribution system is generally very low. Elevated iron concentrations are likely to result in an off-flavour (bitter or metallic taste) and discoloured water.

Health effects

Iron is an essential element for humans. However, oral exposure to very high levels may cause adverse health effects, with gastrointestinal distress being the most sensitive endpoint. The overall weight of scientific evidence indicates that iron is neither a reproductive toxicant nor a developmental toxicant nor a carcinogen.

Aesthetic considerations

Concerns about iron in drinking water are often related to consumer complaints regarding discoloured water. The proposed AO of ≤ 0.1 mg/L (100 µg/L) is intended to minimize the occurrence of discoloured water due to the presence of iron oxides and to improve consumer confidence in drinking water quality. It is important to note that when both iron and manganese (Mn) are present, the removal of iron generally improves the removal of Mn and thus will reduce the health risk associated with this metal.

Analytical and treatment considerations

The development of a drinking water guideline takes into consideration the ability to measure the contaminant and to remove it from drinking water supplies. Several analytical methods are available for measuring iron in water at concentrations well below the proposed AO. Total iron, which includes both the dissolved and particulate forms of iron in a water sample, should be measured.

At the municipal level, treatment technologies that are available to effectively decrease iron concentrations in drinking water include aeration, chemical oxidation followed by filtration, coagulation, adsorption, membrane filtration, and coagulation followed by ultrafiltration. The performance of these technologies depends on factors such as iron species, pH, coagulant type, coagulant dose and type of adsorbent. Using appropriate process controls, these technologies can achieve treated water concentrations well below the proposed AO. Most well-operated and optimized treatment plants can achieve iron concentrations of 0.1 mg/L or less in the treated water. The proposed AO of ≤ 0.1 mg/L would minimize the occurrence of discoloured water and taste complaints, aid in the removal of co-occurring Mn, ensure that a disinfectant residual is maintained and improve consumer confidence in drinking water quality. Prior to full-scale implementation, bench- and/or pilot-scale studies should be conducted using source water to ensure sufficient iron removal and to optimize performance.

In cases where iron removal is desired at a small-system or household level, for example, a private well, a residential drinking water treatment unit may be an option. Although there are no treatment units currently certified for the removal of iron from drinking water, technologies that are expected to be effective include ion exchange, oxidizing filters and reverse osmosis. When using a residential drinking water treatment unit, it is important to take samples of water entering and leaving the treatment unit and send them to an accredited laboratory for analysis, to ensure that adequate iron removal is achieved. Routine operation and maintenance of treatment units, including replacement of filter components, should be conducted according to manufacturer specifications.

Distribution system

It is recommended that water utilities develop a distribution system management plan to minimize the release of iron and the potential for co-occurring contaminants in the system. This includes minimizing the iron concentration entering the distribution system and distribution system maintenance (main cleaning). It is particularly important to maintain stable chemical and biological water quality conditions throughout the system and minimize physical and hydraulic disturbances that can release iron corrosion by-products.

Application of the guidelines

Note that specific guidance on implementing drinking water guidelines should be obtained from the appropriate drinking water authority.

All water utilities should implement a risk management approach, such as the source-to-tap or water safety plan approach, to ensure water safety. These approaches require a system assessment to characterize the source water; describe the treatment barriers that prevent or reduce contamination; identify the conditions that can result in contamination; and implement control measures. Operational monitoring is then established and operational and management protocols, such as standard operating procedures, corrective actions and incident responses, are instituted. Other protocols to validate the water safety plan, such as record keeping and consumer satisfaction, are also implemented. Operator training is also required to ensure the effectiveness of the water safety plan at all times.

Considering that iron levels can vary significantly in source water, within treatment plants, and especially in distribution systems, monitoring programs should be system-specific to enable utilities to have a good understanding of iron levels from source to tap. Monitoring programs should be designed based on risk factors that contribute to the likelihood of finding elevated iron levels in the drinking water system. These factors may include historical infrastructure (for example, presence of unlined cast iron mains), lack of treatment, limited distribution system maintenance and variable source and distribution system water chemistry. The sampling locations, frequency and type of samples that should be collected will differ depending on the desired goal (such as identifying sources of iron, minimizing accumulation and removal of co-occurring Mn) and site-specific considerations. Suggested monitoring details for different points in a drinking water system are provided in section 5.2.

Total iron in drinking water should be monitored at the tap when discolouration (coloured water) events occur. Discolouration events may be accompanied by the release of accumulated contaminants, including arsenic (As), lead (Pb), Mn and radiological contaminants. Iron oxides can adsorb and accumulate these contaminants and release them into the bulk water and plumbing systems. Therefore, discoloured water events should not be considered only an aesthetic issue; they should trigger sampling for iron and other metals and possibly distribution system maintenance.

Table of Contents

- Proposed guideline

- Executive summary

- 1.0 Exposure Considerations

- 2.0 Health Considerations

- 3.0 Derivation of a Health-Based Value (HBV)

- 4.0 Analytical and Treatment Considerations

- 5.0 Management Strategies

- 6.0 International considerations

- 7.0 Rationale

- 8.0 References

- Appendix A: List of abbreviations

- Appendix B: Canadian water quality data

- Appendix C: Summary of iron removal for residential scale technologies

1.0 Exposure Considerations

1.1 Substance identity

Iron (Fe) is an essential metal that is highly reactive and abundant in the Earth's crust. Divalent (Fe(II) or ferrous) and trivalent (Fe(III) or ferric) iron are the most common species of iron, and the most biologically and toxicologically relevant species (PubChem, 2004; Ponka et al., 2015; U.S. GS, 2017; ECCC, 2019). Table 1 presents select physicochemical properties of iron.

| Property | Value | Interpretation |
|---|---|---|
| CAS RN | 7439-89-6 | Not applicable |
| Molecular weight (g/mol) | 55.84 | Not applicable |
| Water solubility (mol/L) | 11.2* | Insoluble in water at room temperature, neutral and basic pH |
| Vapour pressure (Pa) | 1 at 1 455°C | Solid, non-volatile at room temperature |
| Octanol-water partition coefficient (Kow) | -0.77* | Insoluble in fat-like solvents |

Source: PubChem (2004), unless specified otherwise.

Footnote *: From the online U.S. CompTox Chemicals Database.

Iron occurs in water in nonheme form, whereas in food it is present as both heme and nonheme iron (see section 1.3). Drinking water levels and dietary recommendations for iron are expressed in terms of total iron, that is, Fe(II) and Fe(III). Ferrous iron is highly water soluble and the most bioavailable species. In the sections below, "total iron" is used when referring to the sum of all the major oxidation states of nonheme iron (that is, Fe(II), Fe(III)). The term "iron" (Fe) is used when referring to either Fe(II) or Fe(III), and iron cycles between these two oxidative states. In all other cases, the iron species is specified.

1.2 Uses, sources and environmental fate

Uses: Iron is still mined in Canada with most of the ore production in Quebec and Newfoundland and Labrador. In 2018, Canada was the eighth largest world producer of iron ore with a total production of 52.4 million tonnes (Mt), exports totalling around 48 Mt and imports estimated at 10.1 Mt (ECCC, 2019; NRC, 2019). Globally, up to 98% of mined iron ore is used to make steel. The rest is used in many non-steel applications, for example, as a construction material in cast-iron water pipes and galvanized (steel) iron pipes in plumbing systems, an active ingredient in pesticides or a nutrient in fertilizers (WHO, 2003; PubChem, 2004; Ponka et al., 2015; Health Canada, 2017, 2018; ECCC, 2019; NRC, 2019).

Sources: Iron may enter the environment from both natural and anthropogenic sources. Except for some rare deposits in Canada, such as the Labrador Trough in northern Labrador and northeastern Quebec, iron is usually found in soils, rocks and sediments as compounds in which the oxide minerals (for example, hematite) are predominant (WHO, 2003; PubChem, 2004; NHMRC and NRMMC, 2011; Ponka et al., 2015; U.S. GS, 2017; ECCC, 2019). Iron is released to the environment from these natural sources through volcanic activity, weathering and leaching. Agricultural activities (for example, use of pesticides and fertilizers) may also contribute to iron levels in soil. Iron in air is mainly due to emissions from industrial activities such as mining, smelting and combustion of fossil fuels, including coal and coke. Finally, in natural waters, iron may be present at natural background levels due to geological processes (for example, soil percolation, runoff) and, to a lesser extent, human activities, including mine drainage water, acid mine effluents and agricultural runoff (WHO, 2017; ECCC, 2019). However, iron-based materials such as cast iron, ductile iron, galvanized iron, and steel are the principal sources of iron in drinking water distribution systems. Iron may be released directly from iron-based materials or indirectly as iron corrosion by-products, or tubercles, which form during the corrosion process (Civardi and Tompeck, 2015). It may also be present in drinking water because of its use for water treatment (for example, iron-based coagulants) (WHO, 2017).

Environmental fate: In the air, iron compounds are transported as windblown particulate matter (that is, dust or volcanic ash) that end up on land and water by wet and dry deposition. Although iron is unlikely to volatilize from soil and water owing to its physicochemical properties, it can be removed from soil and sediments via adsorption to sludge.

In the environment, iron is subject to a series of redox reactions, also known as iron cycling, in which zero-valent iron (Fe⁰) is oxidized to Fe(II) and Fe(III), which in turn cycles between these two oxidative states. This usually results in the formation of mixed ferrous/ferric compounds including hydroxides and oxides. However, the fate and prevalence of each oxidation state will depend on a number of physicochemical factors, including redox potential, temperature, presence of organic and inorganic sequestering agents (such as dissolved organic carbon and organic matter), exposure to sunlight and presence of iron-metabolizing microorganisms (Peeters et al., 2016; ECCC, 2019).

In anaerobic environments such as groundwater, sediment pore water and acidic streams, ferrous iron is the predominant species. Although stable at neutral to alkaline pH, hydroxylated ferrous iron complexes (for example, Fe(II)-minerals) are soluble under acidic conditions where they readily dissolve to release ferrous ions (Fe²⁺). Therefore, while iron levels may increase in the absence of complexing ligands, they will decrease in their presence, favouring the formation of black mineral slimes (Peeters et al., 2016). When rivers and streams are impounded, iron levels increase. The amount of iron dissolved in the surface water depends on the characteristics of the soil and the amount of plant life present. Decomposition of organic matter (algae, leaves and other plant materials) in the lower sections of a reservoir may lead to anaerobic conditions (that is, near-zero dissolved oxygen [DO]), which promote the conversion of iron (and manganese [Mn]) compounds in those zones to soluble compounds (Civardi and Tompeck, 2015).

In aerobic environments, such as the upper layers of soils and aerated surface water, Fe(III) is usually the predominant species. Nonetheless, iron levels tend to be low in oxygenated waters. Although iron can exist as the ion (Fe³⁺) under strongly acidic conditions (for example, pH < 3), it will precipitate out mainly in the form of sparingly soluble organic and inorganic Fe(III)-complexes (for example, with organic acids) under neutral to alkaline pH (Peeters et al., 2016; ECCC, 2019). Thus, dissolved iron is generally found in (aerated) aquatic environments in the form of insoluble suspensions of ferric hydroxide (Fe(OH)ₙ) particles, which can settle out as rust-coloured deposits (for example, in tanks and pipes) and silts (for example, in waterways) or form yellow-brown deposits on bottom sediments and in many streams draining coal mining regions (WHO, 2003; Ponka et al., 2015; WHO, 2017). Dissolved iron may also occasionally be found as ferric oxide (Fe₂O₃), which precipitates to form red waters. This results in turbidity and decreased light penetration (ECCC, 2019).

Finally, iron can also promote the growth of iron bacteria within the distribution system or waterworks, resulting in the deposition of slimy coatings on piping (WHO, 2017).

1.3 Exposure

The main sources of iron exposure for the Canadian general population are food and, to a lesser extent, drinking water (IOM, 2001; Health Canada, 2009; Health Canada, 2010; Health Canada, 2017). Although rare, exposure to iron in the air may occur near industries (for example, foundries), with intakes of approximately 25 µg/day reported for urban areas (WHO, 2003).

In Canada, the mean total intake of iron ranges from 14.3 to 18.3 mg per day, mostly from food. Exposure through drinking water constitutes less than 10% of the average total daily intake. Drinking water containing a mean iron level of 1 mg/L would contribute only about 1.5 mg to daily intake as the potential for inhalation and dermal exposure through drinking water is negligible.
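The arithmetic behind the 1.5 mg figure can be sketched as follows. This is a minimal illustration, assuming a daily drinking water consumption of 1.5 L; that volume is inferred from the paragraph's own numbers (1 mg/L giving about 1.5 mg/day) and is not stated in the text. The function and constant names are hypothetical.

```python
# Assumption: 1.5 L/day of drinking water, inferred from the figures above
# (1 mg/L -> about 1.5 mg/day); the text does not state the volume used.
ASSUMED_WATER_L_PER_DAY = 1.5


def iron_intake_from_water(conc_mg_per_l, volume_l_per_day=ASSUMED_WATER_L_PER_DAY):
    """Daily iron intake from drinking water, in mg/day."""
    return conc_mg_per_l * volume_l_per_day


def percent_of_total_intake(water_mg_per_day, total_mg_per_day):
    """Share of a total daily iron intake attributable to drinking water, in percent."""
    return 100.0 * water_mg_per_day / total_mg_per_day


if __name__ == "__main__":
    # Mean total intake range reported above: 14.3 to 18.3 mg/day.
    for conc in (0.1, 0.3, 1.0):  # proposed AO, existing AO, and the 1 mg/L example
        water = iron_intake_from_water(conc)
        low = percent_of_total_intake(water, 18.3)
        high = percent_of_total_intake(water, 14.3)
        print(f"{conc} mg/L -> {water:.2f} mg/day ({low:.1f}% to {high:.1f}% of total intake)")
```

Because the intake scales linearly with concentration, the same helper can be applied to any median or mean value from the occurrence data.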

Drinking water: The provinces and territories provided water monitoring data (municipal and non-municipal supplies). Where indicated, data were separated into groundwater and surface water sources. When the source type could not be discerned, it was classified as ground and/or surface water. Samples were divided into raw, treated and distribution water. When not indicated or not possible to determine, samples were classified as RTD (raw/treated/distributed). Total iron concentrations were also obtained from the National Drinking Water Survey (Health Canada, 2017). The exposure data provided reflect the different method detection limits (MDL) used by accredited laboratories within and among the different jurisdictions, as well as their respective monitoring programs. As a result, the statistical analysis of the data provides only a limited picture of exposure.

Table 2. Occurrence of total iron in Canadian drinking water

| Jurisdiction (DL mg/L) | Municipal/Non-municipal | Water type | # Detects/samples | % Detects | Median (mg/L) | Mean (mg/L) | 90th percentile (mg/L) |
|---|---|---|---|---|---|---|---|

| British Columbia (footnote 1) (0.01–0.1) [2010–2020] | Municipal | Ground – raw | 166/197 | 84.3 | 0.10 | 0.72 | 1.63 |

| Ground – treated | 3/5 | 60.0 | 0.16 | 0.62 | NC | ||

| Ground – distribution | 83/83 | 100 | 0.10 | 0.25 | 0.43 | ||

| Ground – RTD | 406/500 | 81.2 | 0.05 | 0.35 | 0.85 | ||

| Surface – raw | 45/49 | 91.8 | 0.06 | 0.10 | 0.21 | ||

| Surface – treated | 9/10 | 90.0 | 0.02 | 0.04 | 0.13 | ||

| Surface – distribution | 18/18 | 100 | 0.10 | 0.20 | 0.83 | ||

| Surface – RTD | 150/173 | 86.7 | 0.05 | 0.19 | 0.39 | ||

| Ground and/or Surface – raw | 22/30 | 73.3 | 0.12 | 0.50 | 2.0 | ||

| Ground and/or Surface – treated | 2/5 | 40.0 | < DL | 0.12 | NC | ||

| Ground and/or Surface – distribution | 7/7 | 100 | 0.10 | 0.15 | NC | ||

| Ground and/or Surface – RTD | 169/240 | 70.4 | 0.03 | 0.35 | 0.54 | ||

| Manitoba (footnote 2) (0.01–0.1) [2010–2020] | Semi-public | Ground – raw | 176/207 | 85.0 | 0.43 | 0.95 | 2.54 |

| Ground – treated | 141/214 | 65.9 | 0.05 | 0.31 | 0.81 | ||

| Ground – distribution | 10/14 | 71.4 | 0.06 | 0.28 | 1.4 | ||

| Ground – not disinfected | 338/427 | 79.2 | 0.16 | 0.60 | 1.65 | ||

| Surface – raw | 44/49 | 89.8 | 0.17 | 0.41 | 0.80 | ||

| Surface – treated | 34/50 | 68.0 | 0.05 | 0.13 | 0.32 | ||

| Surface – distribution | 6/10 | 60.0 | 0.02 | 0.17 | 1.06 | ||

| GUDI – raw | 37/44 | 84.1 | 0.14 | 1.18 | 1.29 | ||

| GUDI – treated | 32/55 | 58.2 | 0.02 | 0.09 | 0.26 | ||

| GUDI – distribution | 2/2 | 100 | 0.07 | 0.07 | NC | ||

| GUDI – not disinfected | 38/46 | 82.6 | 0.09 | 0.45 | 1.47 | ||

| Municipal | Ground – raw | 676/778 | 86.9 | 0.44 | 0.91 | 2.44 | |

| Ground – treated | 486/745 | 65.2 | 0.07 | 0.35 | 1.06 | ||

| Ground – distribution | 162/224 | 72.3 | 0.05 | 0.40 | 1.11 | ||

| Ground – not disinfected | 4/4 | 100 | 0.33 | 0.40 | NC | ||

| Surface – raw | 546/622 | 87.8 | 0.19 | 0.44 | 0.87 | ||

| Surface – treated | 290/613 | 47.3 | < DL | 0.12 | 0.32 | ||

| Surface – distribution | 128/178 | 71.9 | 0.03 | 0.13 | 0.29 | ||

| GUDI – raw | 119/166 | 71.7 | 0.21 | 0.53 | 1.48 | ||

| GUDI – treated | 72/166 | 43.3 | < DL | 0.15 | 0.47 | ||

| GUDI – distribution | 21/51 | 41.2 | < DL | 0.21 | 0.52 | ||

| GUDI – not disinfected | 6/6 | 100 | 0.15 | 0.31 | NC | ||

| New Brunswick (footnote 3) (0.001–0.02) [2010–2020] | Semi-public | Ground – raw | 157/201 | 78.1 | 0.75 | 0.56 | 1.1 |

| Ground – treated | 247/742 | 33.3 | < DL | 0.04 | 0.05 | ||

| Ground – distribution | 128/250 | 51.2 | 0.01 | 0.05 | 0.15 | ||

| Ground – RTD | 191/420 | 45.5 | < DL | 0.23 | 0.17 | ||

| Municipal | Ground – raw | 1 327/2 178 | 60.9 | 0.02 | 0.13 | 0.34 | |

| Ground – treated | 4/31 | 12.9 | < DL | 0.01 | 0.02 | ||

| Ground – distribution | 324/821 | 39.5 | < DL | 0.03 | 0.05 | ||

| Surface – raw | 163/191 | 85.3 | 0.06 | 0.07 | 0.14 | ||

| Surface – distribution | 236/297 | 79.5 | 0.03 | 0.07 | 0.14 | ||

| Ground and Surface – raw | 125/155 | 80.7 | 0.14 | 0.40 | 1.17 | ||

| Ground and Surface – treated | 3/4 | 75.0 | 0.01 | 0.01 | NC | ||

| Ground and Surface – distribution | 782/942 | 83.0 | 0.04 | 0.08 | 0.17 | ||

| Newfoundland (footnote 4) (0.03 and 0.05) [2013–2020] | Municipal | Ground – raw | 106/295 | 35.9 | < DL | 0.22 | 0.44 |

| Ground – distribution | 483/2 001 | 24.1 | < DL | 0.07 | 0.11 | ||

| Surface – raw | 839/1 004 | 83.6 | 0.10 | 0.17 | 0.42 | ||

| Surface – distribution | 3 767/5 109 | 73.7 | 0.08 | 0.16 | 0.36 | ||

| Nova Scotia (footnote 5) (0.05) [2000–2020] | Semi-public | Ground – raw | 59/155 | 38.1 | < DL | 0.46 | 0.87 |

| Ground – treated | 138/331 | 41.7 | < DL | 0.31 | 0.53 | ||

| Ground – RTD | 399/726 | 55.0 | 0.04 | 0.43 | 0.84 | ||

| Surface – raw | 13/19 | 68.4 | 0.05 | 0.08 | 0.20 | ||

| Surface – treated | 5/9 | 55.6 | 0.03 | 0.13 | NC | ||

| Municipal | Ground – raw | 141/300 | 47 | < DL | 0.21 | 0.43 | |

| Ground – treated | 56/243 | 23.0 | < DL | 0.07 | 0.13 | ||

| Ground – RTD | 6/18 | 33.3 | < DL | 0.18 | 0.87 | ||

| Surface – raw | 139/178 | 78.1 | 0.16 | 0.24 | 0.47 | ||

| Surface – treated | 206/565 | 36.5 | < DL | 0.07 | 0.14 | ||

| Surface – distribution | 3/25 | 12.0 | < DL | 0.04 | 0.11 | ||

| Ground – raw | 74/91 | 91.3 | 0.33 | 1.08 | 2.6 | ||

| Ontario (footnote 6) (0.0005–0.1) [2018–2020] | Non-municipal | Ground – treated | 89/142 | 62.7 | 0.09 | 0.27 | 0.64 |

| Ground – distribution | 45/73 | 61.6 | 0.03 | 0.20 | 0.79 | ||

| Surface – raw | 4/10 | 40.0 | < DL | 0.03 | 0.16 | ||

| Surface – distribution | 4/5 | 80.0 | 0.01 | 0.02 | NC | ||

| Ground and Surface – distribution | 3/14 | 21.4 | < DL | 0.06 | 0.12 | ||

| Ground – raw | 3 324/4 238 | 78.4 | 0.13 | 0.41 | 0.95 | ||

| Municipal | Ground – treated | 1 826/2 292 | 79.7 | 0.05 | 0.17 | 0.49 | |

| Ground – distribution | 1 682/2 326 | 72.3 | 0.05 | 0.08 | 0.20 | ||

| Surface – raw | 941/1 098 | 85.7 | 0.09 | 0.23 | 0.34 | ||

| Surface – treated | 730/1 461 | 50.0 | 0.01 | 0.03 | 0.08 | ||

| Surface – distribution | 2 439/4 101 | 59.5 | 0.03 | 0.05 | 0.10 | ||

| Ground and Surface – treated | 30/52 | 57.7 | 0.01 | 0.03 | 0.03 | ||

| Ground and Surface – distribution | 657/931 | 70.6 | 0.02 | 0.06 | 0.11 | ||

| Ground – RTD | 1 769/24 331 | 7.3 | < DL | 0.03 | < DL | ||

| Prince Edward Island (footnotes 7 and a) (0.002) [2019–2022] | Non-municipal and Municipal | Ground – raw | 2 121/15 119 | 14.0 | 0.003 | 0.048 | 0.003 |

| Saskatchewan (footnote 8) (0.0005–0.1) [2010–2020] | Municipal | Surface – raw | 3 784/3 937 | 96.1 | 0.02 | 0.31 | 0.54 |

| Ground and/or Surface – treated | 84/92 | 91.3 | 0.01 | 0.29 | 0.27 | ||

| Ground and/or Surface – distribution | 2 059/2 396 | 85.9 | 0.02 | 0.37 | 1.03 | ||

| Ground – treated | 2 375/3 316 | 71.6 | 0.05 | 0.20 | 0.59 | ||

| Canada (footnote b) | Municipal | Ground – distribution | 2 734/5 455 | 50.1 | 0.03 | 0.08 | 0.18 |

| Surface – treated | 1 235/2 649 | 46.6 | < DL | 0.06 | 0.15 | ||

| Surface – distribution | 6 591/9 728 | 67.8 | 0.06 | 0.11 | 0.24 | ||

DL – detection limit; < DL – below detection limit (for median with < 50% detects; for 90th percentile with < 10% detects and mean with 0% detects); NC – not calculated due to insufficient sample size; RTD – raw, treated or distributed water (not specified); GUDI – groundwater under the direct influence of surface water.

For analytical purposes, values below DL were assigned half of DL.

- Footnote a: All values analyzed were for dissolved iron.

- Footnote b: Canadian values were calculated as the weighted mean of Fe concentrations from the above provinces/territories (P/T): [Sum of (P/T no. of samples) × (P/T mean Fe concentration)] / Total no. of samples.

- Footnote 1: British Columbia Ministry of Health (2021).

- Footnote 2: Manitoba Sustainable Development (2021).

- Footnote 3: New Brunswick Department of Environment and Local Government (2019).

- Footnote 4: Newfoundland and Labrador Department of Municipal Affairs and Environment (2019).

- Footnote 5: Nova Scotia Environment (2019).

- Footnote 6: Ontario Ministry of the Environment, Conservation and Parks (2019).

- Footnote 7: PEI Department of Communities, Land and Environment (2019).

- Footnote 8: Saskatchewan Water Security Agency (2019).
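The sample-weighted mean described in footnote b can be sketched as below. The inputs are the municipal "Surface – treated" rows as read from Table 2; the assignment of each row to a jurisdiction is an assumption, because the flattened table does not repeat the jurisdiction on every row. The function name `weighted_mean` is hypothetical.

```python
def weighted_mean(rows):
    """Sample-weighted mean concentration.

    rows: iterable of (number_of_samples, mean_concentration_mg_per_l).
    """
    rows = list(rows)
    total_samples = sum(n for n, _ in rows)
    return sum(n * mean for n, mean in rows) / total_samples


# Municipal "Surface – treated" rows as read from Table 2
# (assumed row-to-jurisdiction assignment).
SURFACE_TREATED = [
    (10, 0.04),    # British Columbia
    (613, 0.12),   # Manitoba
    (565, 0.07),   # Nova Scotia
    (1461, 0.03),  # Ontario
]

if __name__ == "__main__":
    print(sum(n for n, _ in SURFACE_TREATED))        # total number of samples
    print(round(weighted_mean(SURFACE_TREATED), 2))  # weighted mean in mg/L
```

With these inputs the sample count totals 2 649 and the weighted mean rounds to 0.06 mg/L, which agrees with the Canada "Surface – treated" row.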

| Water type | Summer detects/samples | Summer % detects | Summer median (mg/L) | Summer mean (mg/L) | Summer 90th percentile (mg/L) | Winter detects/samples | Winter % detects | Winter median (mg/L) | Winter mean (mg/L) | Winter 90th percentile (mg/L) |
|---|---|---|---|---|---|---|---|---|---|---|
| Well – raw | 4/17 | 23.5 | < RDL | 0.20 | 0.70 | 0/9 | 0 | < RDL | < RDL | < RDL |
| Well – treated | 1/16 | 6.3 | < RDL | 0.07 | < RDL | 0/9 | 0 | < RDL | < RDL | < RDL |
| Well – distribution | 6/51 | 11.8 | < RDL | 0.08 | 0.15 | 4/27 | 14.8 | < RDL | 0.09 | 0.24 |
| Lake – raw | 5/16 | 31.3 | < RDL | 0.19 | 0.81 | 1/11 | 9.1 | < RDL | 0.10 | < RDL |
| Lake – treated | 1/16 | 6.3 | < RDL | 0.05 | < RDL | 1/11 | 9.1 | < RDL | 0.06 | < RDL |
| Lake – distribution | 6/51 | 11.8 | < RDL | 0.07 | 0.15 | 2/16 | 12.5 | < RDL | 0.07 | 0.10 |
| River – raw | 16/28 | 57.1 | 0.13 | 0.58 | 1.61 | 5/19 | 26.3 | < RDL | 0.37 | 0.73 |
| River – treated | 2/22 | 9.1 | < RDL | 0.07 | < RDL | 0/11 | 0 | < RDL | < RDL | < RDL |
| River – distribution | 8/77 | 10.4 | 0.05 | 0.07 | 0.10 | 1/30 | 3.3 | < RDL | 0.05 | < RDL |

RDL – Reporting Detection Limit; < RDL – below reporting detection limit (for median with < 50% detects; for 90th percentile with < 10% detects and mean with 0% detects).

RDL = 0.1 mg/L. For analytical purposes, values below RDL were assigned half of RDL.
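The handling of non-detects described in the table notes (half of the detection limit substituted, with summary statistics suppressed at low detection rates) can be sketched as follows. This is an illustration under stated assumptions, not the procedure actually used for the tables: non-detects are represented as `None`, the 90th percentile uses the "inclusive" method of Python's `statistics.quantiles` because the source does not name a percentile method, and the "NC" rule for insufficient sample size is not implemented since no threshold is given. The function name `summarize` is hypothetical.

```python
import statistics


def summarize(values, dl):
    """Summarize one group of results.

    values: measured concentrations in mg/L, with None for a non-detect.
    dl: detection (or reporting) limit in mg/L.
    """
    n = len(values)
    detects = sum(v is not None for v in values)
    pct_detects = 100.0 * detects / n
    # Non-detects are assigned half of the detection limit.
    filled = [dl / 2 if v is None else v for v in values]

    median = statistics.median(filled)
    mean = statistics.mean(filled)
    # Assumption: inclusive-method deciles; index 8 is the 90th percentile.
    p90 = statistics.quantiles(filled, n=10, method="inclusive")[8]

    below = "< DL"
    return {
        "detects": detects,
        "samples": n,
        "pct_detects": pct_detects,
        # Reporting rules from the table notes.
        "median": below if pct_detects < 50 else median,
        "mean": below if detects == 0 else mean,
        "p90": below if pct_detects < 10 else p90,
    }


if __name__ == "__main__":
    # Made-up sample values for illustration only.
    print(summarize([None, None, None, 0.2, 0.7], dl=0.1))
```

In this made-up example only 40% of samples are detects, so the median is reported as below the detection limit while the mean and 90th percentile are still calculated from the substituted values.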

Food: Iron is naturally present in a variety of food of plant or animal origin. It may also be added to certain foods and infant formula for fortification and may be available as (or in) dietary supplements and prescription medicines. In general, while supplements may increase the median daily intake of iron by approximately 1 mg for both men and women, food can increase the daily intake by 1 mg/day for women and by 5 mg/day for men (IOM, 2001).

The most up-to-date data on dietary iron exposure of Canadians are from the Canadian Community Health Survey (CCHS) on nutrition (CCHS–Nutrition), conducted in 2004 and 2015. The survey provides estimates of usual intakes for iron from food using 24-hour dietary recalls collected at the national, regional, and provincial levels from Canadians aged one year and older, by 16 dietary reference intake age-sex groups. The 2004 and 2015 CCHS–Nutrition data excluded supplements as well as pregnant and breastfeeding individuals since they are subject to a different set of nutritional recommendations. According to the CCHS–Nutrition data, in 2004 Canadians' dietary intake of iron (mean ± standard error [SE]) ranged from 9.6 ± 0.2 to 18.8 ± 0.4 mg/day with males having the highest intakes. In 2015, the highest intake values were still obtained for males, with a small reduction in dietary intakes (mean ± SE: 8.3 ± 0.2 to 16.3 ± 0.4 mg/day). Intakes ranged from 12.3 ± 0.2 to 16.3 ± 0.4 mg/day for males 9 to 71 years old, and from 9.9 ± 0.2 to 12 ± 0.2 mg/day for females in the same age category. Dietary intakes were 8.3 ± 0.2 mg/day for children of both sexes from 1 to 3 years old, and 11.4 ± 0.2 mg/day for those from 4 to 8 years old. Similar levels (that is, 10–20 mg/day) were reported in the literature for a typical Western mixed diet (Geisser and Burckhardt, 2011; Fonseca-Nunes et al., 2014).

Foods commonly consumed in Canada were analyzed for iron levels and the estimated concentrations were reported in the Canadian Nutrient File (CNF) database used for the 2015 CCHS–Nutrition (2015 CNF). According to the 2015 CNF database, iron levels range from low (that is, < 1 to ≤ 5 mg/kg) for fats and oils, many fruits, non-green vegetables, coffee, tea, uncontaminated municipal water, dairy products, to medium (that is, > 5 to ≤ 10 mg/kg) for meat (for example, red meat, poultry, pork), eggs (yolks), fish, green vegetables, grains, seeds, nuts, beans, and so on, to high (that is, > 10 to 123.6 mg/kg) for foods such as liver and offal, game meat, seaweed, spices, bread, breakfast cereals, grain products, and other fortified foods. The 2015 CNF database did not explicitly report iron levels in infant formula or human milk. However, average iron levels of 0.35 to 6 mg/L for infant formula and 0.2 to 0.5 mg/L for human milk have been reported elsewhere (IOM, 2001; Quinn, 2014; EFSA, 2015; Hare et al., 2018).

Iron generally occurs in foods in two main forms, both of which contain the mineral in the ferric state: heme (organic) and nonheme (inorganic) iron. Nonheme iron is the most prevalent form found in foods of plant or animal origin, fortified foods and supplements, with about 60% of iron in foods of animal origin being nonheme iron (Collard, 2009; Health Canada, 2009; Fonseca-Nunes et al., 2014; Anderson and Frazer, 2017). Nonheme iron is the main source of the nutrient for infants (Collard, 2009). Heme iron is mostly found in foods of animal origin and its contribution to total daily iron intake is estimated to be between 4% and 6% (IOM, 2001; Collings et al., 2013; Ashmore et al., 2016; Chang et al., 2019).

Other exposure pathways: Canadians may be exposed to iron through soil and ambient air. Iron is found in soil at varying levels and mostly in the form of oxy or hydroxyl complexes. However, due to its physicochemical properties and anticipated use pattern, soil is not expected to be a significant source of exposure for the general population in Canada (Health Canada, 2017).

Data on iron levels in Canadian ambient air were not found in the literature search. However, iron is a non-volatile metal and airborne levels of iron are expected to be negligible in non-occupational settings. Levels ranging from 50 to 90 ng/m3 in remote areas and as high as 1.3 µg/m3 in urban sites have been reported. Higher levels may be found near industrial settings, such as foundries. Levels 10 times higher than the mentioned urban levels were reported in the vicinity of iron- and steel-producing plants (WHO, 2003; Ponka et al., 2015).

Biomonitoring: Serum ferritin, an acute phase reactant, is considered a valid measure for body stores of total iron (van der A et al., 2006; Cooper et al., 2012; Fonseca-Nunes et al., 2014; Ginzburg and Vinchi, 2019). A serum ferritin concentration below 12 µg/L is associated with totally depleted body iron stores, whereas levels above 200 µg/L (in women) and 300 µg/L (in men) may indicate (biochemical) iron overload. A serum ferritin level greater than or equal to 30 µg/L and less than 200 µg/L is usually associated with adequate iron status (that is, level of iron stores) (IOM, 2001; EFSA, 2004; EFSA, 2015; Ashmore et al., 2016).
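The serum ferritin cut-offs quoted above can be written out as a simple lookup. The following is a minimal sketch for illustration only, not a diagnostic rule: the function name and the label strings are hypothetical, and values that fall between the quoted cut-offs (for example, 12 to < 30 µg/L) are returned as unclassified because the text does not assign them a category.

```python
def classify_serum_ferritin(ferritin_ug_per_l: float, sex: str) -> str:
    """Map a serum ferritin value (µg/L) onto the cut-offs quoted in the text.

    The labels are illustrative; `sex` must be "female" or "male".
    """
    # Overload cut-offs quoted in the text: > 200 µg/L (women), > 300 µg/L (men).
    overload_cutoffs = {"female": 200.0, "male": 300.0}
    if sex not in overload_cutoffs:
        raise ValueError("sex must be 'female' or 'male'")

    if ferritin_ug_per_l < 12.0:
        return "depleted stores"
    if ferritin_ug_per_l > overload_cutoffs[sex]:
        return "possible overload"
    if 30.0 <= ferritin_ug_per_l < 200.0:
        return "adequate"
    # Ranges the text does not explicitly classify.
    return "not classified by the quoted cut-offs"


if __name__ == "__main__":
    for value, sex in [(10, "female"), (96, "male"), (250, "female"), (250, "male")]:
        print(value, sex, classify_serum_ferritin(value, sex))
```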

Serum ferritin concentrations have been measured in Canadian Health Measures Survey (CHMS) cycles 2 through 6 (Statistics Canada, 2019). Data from those surveys indicate that more than 95% of Canadians from 3 to 79 years old had adequate serum ferritin levels (suggesting adequate iron stores) and that males had higher levels than females. Serum ferritin concentrations (as central tendency measures) reported for Canadians of both sexes from 6 to 19 years old were as follows: 42, 36, 33, 31 and 33 µg/L in 2011, 2013, 2015, 2017 and 2019, respectively. Higher levels were reported (as central tendency measures) for both Canadian men and women 20 to 79 years old (96, 80, 75, 76 and 77 µg/L in 2011, 2013, 2015, 2017 and 2019, respectively) (Statistics Canada, 2021).

2.0 Health Considerations

2.1 Essentiality

Iron is a trace element that is required for the biosynthesis of various heme-containing proteins (for example, hemoglobin, myoglobin) and enzymes (for example, cytochromes, catalases, peroxidases, ribonucleotide reductases) as well as a number of other iron-binding proteins and prosthetic groups (for example, iron-sulphur clusters) which are essential at every stage of life for the biological processes of many living organisms, including humans. These processes include erythropoiesis; oxygen transport and storage; mitochondrial processes; DNA synthesis and repair; neurotransmitter and myelin synthesis; host defense; cell replication, metabolism (for example, glucose homeostasis) and growth (IOM, 2001; WHO, 2003; EFSA, 2004; Health Canada, 2009; Beguin et al., 2014; Ashmore et al., 2016; Czerwonka and Tokarz, 2017; Muckenthaler et al., 2017).

In healthy individuals, the optimal functioning of these processes depends on iron homeostasis. The latter is regulated by the hepatic hormone hepcidin, a type II acute-phase reactant, and maintained through tight regulation of iron (intestinal) uptake, distribution, storage, metabolism and release (for example, release of recycled iron from macrophages) (Andrews and Schmidt, 2007; Collard, 2009; Beguin et al., 2014; Kew, 2014; Schrier, 2015; Anderson and Frazer, 2017).

Homeostatic mechanisms are not fully operational in infants aged 6 months or less. However, initial iron stores established during pregnancy are generally sufficient to support the needs of healthy term neonates (born from a normal pregnancy) during the first 6 months of life. No study to date has found an increase in serious iron-induced health risks in breast-fed infants, even when they were given significant oral iron supplementation. This is thought to be attributable to lactoferrin, an iron-chelating glycoprotein found abundantly in human breast milk (Collard, 2009; Health Canada, 2009; Lipinski et al., 2013).

To keep iron levels within optimal physiologic ranges and avoid the adverse health consequences of both iron deficiency and iron overload in otherwise healthy Canadians, health authorities have established life stage and gender-specific dietary reference intakes (IOM, 2001; Health Canada, 2010). Recommended dietary allowances (RDA) between 7 and 27 mg/day have been set to meet the iron requirements of nearly all healthy Canadians. Intakes at the low end (that is, RDA 7–9 mg/day) are recommended for children up to age 13, male adults and nursing women over 19 years old, and post-menopausal women over 51 years old. Intakes in the mid-range (that is, RDA 10–18 mg/day) are recommended for children (4–8 years old), teenagers (14–18 years old), nursing women under 18 years old, and non-pregnant women of reproductive age (menstruating women 19–50 years old). Pregnant women aged 18 years and over have the highest dietary allowance of iron (27 mg/day). The iron requirement for vegetarians is about 1.8 times higher than for people that eat meat. Finally, tolerable upper intake levels have been established as follows: 40 mg/day for infants, children and teenagers up to 13 years of age; and 45 mg/day for teenagers 14 years and older and adults, including pregnant and nursing women (IOM, 2001; Health Canada, 2010).

2.2 Toxicokinetics

The pharmacokinetics of iron differ between humans and other animal species (IOM, 2001; WHO, 2003; ECHA, 2020). In humans, nonheme iron exhibits oral non-linear pharmacokinetics with maximum serum concentrations that depend on iron compounds and administered doses, and apparent serum half-lives varying between 4 and 6 hours (Tenenbein, 1998; Whittaker et al., 2002; Geisser and Burckhardt, 2011; Bateman et al., 2018; ECHA, 2020). Body iron is highly conserved with a small amount being absorbed daily to offset very small basal losses estimated at around 0.05% of body iron content (approximately 1–2 mg/day) for non-menstruating adults (IOM, 2001; WHO, 2003; EFSA, 2004; EFSA, 2015; Schrier, 2015; Czerwonka and Tokarz, 2017; Weiss et al., 2018; Zhang et al., 2019). Average additional losses of about 15 to 70 mg per month through menstruation are estimated for premenopausal adults (WHO, 2003; EFSA, 2004).

Absorption: In humans, nonheme iron is mainly absorbed in the duodenum. In intestinal cells, it is absorbed as ferrous iron at the apical membrane and Fe(III) at the basolateral membrane (Andrews and Schmidt, 2007; Dunn et al., 2007; Collard, 2009; Anderson and Frazer, 2017; Czerwonka and Tokarz, 2017). The divalent metal transporter-1, expressed on the apical membrane, and the ferroportin carrier, on the basolateral membrane, transport iron across the enterocyte. Hepcidin inhibits both intestinal carriers (Tenenbein, 1998; Geisser and Burckhardt, 2011; Kew, 2014; Ginzburg and Vinchi, 2019). Nonheme iron bioavailability is estimated to be 2% to 20% for typical North American diets. While absorption rates are expected to be lower for vegetarians and infants aged 7 to 12 months, they increase during pregnancy (IOM, 2001; Domellof, 2007; Collings et al., 2013; Fonseca-Nunes et al., 2014; Schrier, 2015; Ashmore et al., 2016; Czerwonka and Tokarz, 2017; Rodriguez-Ramiro et al., 2019). In healthy individuals, many dietary and host-related (for example, luminal) factors can modulate the absorption of nonheme iron. These include enhancers, such as ascorbic acid, citric acid and meat, and inhibitors, such as polyphenols, phytates, tannins, and calcium, which also affect heme iron, zinc and copper (Collings et al., 2013; Fonseca-Nunes et al., 2014; Schrier, 2015; Anderson and Frazer, 2017; Czerwonka and Tokarz, 2017; Rodriguez-Ramiro et al., 2019).

Distribution: Free iron is almost always sequestered in a non-toxic form through binding to proteins throughout the body (Geisser and Burckhardt, 2011; Anderson and Frazer, 2017). Within the enterocytes, nonheme iron can be stored as ferritin to meet the body's needs. Once in circulation, Fe(III), the circulating form of iron, is bound to the plasma transport protein transferrin and distributed to different organs for direct utilization or storage, depending on needs (Geisser and Burckhardt, 2011). Plasma transferrin has a normal saturation rate of approximately 30% in healthy humans, which leaves enough buffering capacity against circulating non-transferrin-bound iron. Labile non-transferrin-bound iron is the main source of free iron (which is highly reactive and toxic at high doses) accumulation in organs (mainly the liver and heart) and is associated with iron overload when plasma transferrin saturation exceeds 60% (Geisser and Burckhardt, 2011; Ashmore et al., 2016; Anderson and Frazer, 2017; Muckenthaler et al., 2017; Ginzburg and Vinchi, 2019).

In healthy adults, total body iron typically ranges from 40 to 70 mg/kg body weight (bw), with men having higher levels than women. Body iron is primarily (60%–80%) distributed to erythroid marrow, followed by ferritin stores mainly in the liver, then in the reticuloendothelial system such as the spleen, the gastrointestinal (GI) tract, muscles, hemosiderin and a variety of enzymes (IOM, 2001; Health Canada, 2009; Geisser and Burckhardt, 2011; Collings et al., 2013; Kew, 2014; Schrier, 2015; Anderson and Frazer, 2017; Czerwonka and Tokarz, 2017; Zhang et al., 2019).
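As a rough consistency check, the body iron range above can be combined with the basal loss estimate of about 0.05% of body iron content per day given earlier in this section. The sketch below is illustrative arithmetic only: the 70 kg body weight is an assumption chosen for the example, and the function names are hypothetical.

```python
def total_body_iron_mg(body_weight_kg: float, iron_mg_per_kg_bw: float) -> float:
    """Total body iron (mg) from body weight and iron content per kg bw."""
    return body_weight_kg * iron_mg_per_kg_bw


def basal_loss_mg_per_day(body_iron_mg: float, daily_fraction: float = 0.0005) -> float:
    """Basal iron loss (mg/day); the default 0.0005 is the 0.05% quoted in the text."""
    return body_iron_mg * daily_fraction


if __name__ == "__main__":
    assumed_weight_kg = 70.0  # assumption for illustration, not from the source
    for iron_per_kg in (40.0, 70.0):  # range quoted in the text (mg/kg bw)
        stores = total_body_iron_mg(assumed_weight_kg, iron_per_kg)
        loss = basal_loss_mg_per_day(stores)
        print(f"{iron_per_kg:.0f} mg/kg bw: {stores:.0f} mg body iron, "
              f"{loss:.2f} mg/day basal loss")
```

For the assumed 70 kg adult this gives roughly 2.8 to 4.9 g of body iron and a basal loss of about 1.4 to 2.45 mg/day, which is of the same order as the approximately 1–2 mg/day cited in the text.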

Metabolism: In the GI tract, ingested Fe(III) is reduced to ferrous iron by the ferrireductase duodenal cytochrome B on the apical membranes of enterocytes for uptake. Upon leaving the gut, the newly translocated ferrous iron is re-oxidized to ferric iron by hephaestin, a membrane-bound ferroxidase, for absorption. Within cells, in addition to being stored, iron participates in several metabolic processes. For example, the major pool of iron is directed to mitochondria where it is involved in the biogenesis of iron-sulphur clusters and heme (Andrews and Schmidt, 2007; Dunn et al., 2007; Collard, 2009; Anderson and Frazer, 2017; Muckenthaler et al., 2017).

Elimination: There is no clear and specific excretion mechanism for iron in humans (Tenenbein, 1998; Domellof, 2007; Kew, 2014; Ashmore et al., 2016; Ginzburg and Vinchi, 2019). Small losses (1–2 mg/day) of the nutrient occur through desquamated cells in feces, urine, sweat and skin, with losses from the last three media being negligible. Additional losses occur during menstrual bleeding (WHO, 2003; EFSA, 2004; Geisser and Burckhardt, 2011; Kew, 2014; Ashmore et al., 2016; Zhang et al., 2019). In addition to enterocyte sloughing, intestinal secretions may also contribute to iron loss in feces (IOM, 2001; WHO, 2003; Andrews and Schmidt, 2007; Anderson and Frazer, 2017; Weiss et al., 2018). There is some indication that GI tract iron losses are higher in toddlers (0.022 mg/kg bw per day) than in adolescents and adults (0.014 mg/kg bw per day) (Domellof, 2007).

Physiologically based pharmacokinetic modelling: No data available.

2.3 Health effects

Systemic iron overload, usually defined in humans as an increase in total body iron that leads to levels exceeding 5 g (Kew, 2014), may result in iron accumulation in body organs (specifically the liver, heart and endocrine organs) with the potential to cause detrimental health outcomes. It can occur because of environmental factors, genetics or a combination thereof. It is usually associated with the well-documented health outcomes of iron metabolism disorders, including primary (or inherited) and secondary (or acquired) iron overload syndromes (Agrawal et al. 2017; Ginzburg and Vinchi, 2019; Zhang et al., 2019). Most of these inherited (for example, hereditary hemochromatosis - HH) and acquired syndromes (for example, African dietary iron overload or Bantu siderosis; β-thalassemia) are uncommon to rare. The prevalence rate for HH ranges from about 0.25% to 0.5% (EFSA, 2004; Anderson and Frazer, 2017). Hereditary hemochromatosis is also commonly associated with liver cirrhosis (sometimes accompanied by hepatocellular carcinoma and severe fibrosis), cardiomyopathy, diabetes mellitus and other endocrinopathies (Beguin et al., 2014; Ashmore et al., 2016). Hereditary hemochromatosis patients may also have an increased risk of extrahepatic cancer, including breast and colorectal cancer (Torti and Torti, 2013).

Iron overload from oral iron exposure is rare in iron-replete healthy individuals, due to homeostasis (EFSA, 2015; Weiss et al., 2018). However, some data suggest that oral exposure to excess nonheme iron may result in a range of local and systemic adverse effects. It has also been associated with infections and with interference with the absorption or metabolism of other nutrients. Thus, the focus in the following sections is on the adverse health effects of exposure to excess oral nonheme iron.

2.4 Health effects in humans

Human data indicate that overexposure to oral nonheme iron may induce toxicity to many organs with the GI tract being the most sensitive. Adverse effects on growth, cognitive and motor development, and infections in iron-replete infants and young children have also been suggested (Iannotti et al., 2006; Domellof, 2007; Lönnerdal, 2017).

2.4.1 Acute exposure

Acute toxicity from oral exposure to nonheme iron is rare in humans and, when it occurs, the effects are usually worse when iron is taken alone. Cases of poisoning have been reported after accidental or suicidal ingestion of medicinal iron or adult iron supplements (and iron-containing multivitamins), especially by children under 6 years of age (Pestaner et al., 1999; IOM, 2001; EFSA, 2004; U.S. EPA, 2006; Chang and Rangan, 2011; Bateman et al., 2018). Several cases of iron pill-induced gastritis have also been reported from complications related to therapeutic doses of iron tablets or pills, especially in elderly patients (Hashash et al., 2013; Liabeuf et al., 2014; Morais et al., 2017; Sunkara et al., 2017; Onorati et al., 2020).

The clinical course of poisoning is usually described in five stages: GI toxicity, relative stability, circulatory shock and acidosis, hepatotoxicity, and GI scarring (Tenenbein, 1998; Whittaker et al., 2002; EFSA, 2004; Chang and Rangan, 2011; EFSA, 2015; Bateman et al., 2018).

Toxicity is usually not observed at oral doses equal to or less than 20 mg of Fe/kg bw. While oral doses as low as 20 to 60 mg Fe/kg bw are potentially toxic, lethal doses are generally considered to be in the range of 200 to 300 mg Fe/kg bw (IOM, 2001; Whittaker et al., 2002; Makrides et al., 2003; WHO, 2003; EFSA, 2004; U.S. EPA, 2006; Bateman et al., 2018). Among the supplements most studied, carbonyl iron and ferrous sulphate are the most toxic, followed by ferrous gluconate and ferrous fumarate (Radtke et al., 2004; Geisser and Burckhardt, 2011; Cancelo-Hidalgo et al., 2013; Anderson and Frazer, 2017).
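Because the acute dose ranges above are expressed per kilogram of body weight, the same ingested amount corresponds to very different doses for a child and an adult. The following minimal sketch shows the arithmetic; it is illustrative only and is not clinical guidance. The function name, the band labels, and the example scenario (a 15 kg child ingesting 650 mg of elemental iron) are hypothetical, and doses between 60 and 200 mg Fe/kg bw are reported as falling between the quoted ranges because the text does not characterize them.

```python
def acute_dose_band(iron_mg: float, body_weight_kg: float) -> tuple:
    """Return (dose in mg Fe/kg bw, band label) using the ranges quoted in the text."""
    if body_weight_kg <= 0:
        raise ValueError("body_weight_kg must be positive")
    dose = iron_mg / body_weight_kg

    if dose <= 20.0:
        band = "toxicity not usually observed"
    elif dose <= 60.0:
        band = "potentially toxic"
    elif dose < 200.0:
        band = "between the quoted ranges"
    else:
        band = "within or above the quoted lethal range"
    return dose, band


if __name__ == "__main__":
    # Hypothetical scenario: 650 mg elemental iron, 15 kg child vs. 70 kg adult.
    for weight_kg in (15.0, 70.0):
        dose, band = acute_dose_band(650.0, weight_kg)
        print(f"{weight_kg:.0f} kg: {dose:.1f} mg Fe/kg bw ({band})")
```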

2.4.2 Short-term exposure

Short-term overexposure to oral nonheme iron may cause GI distress (for example, abdominal pain, constipation, nausea, vomiting, and diarrhea) and may, to a lesser extent, interfere with the absorption of other nutrients, notably zinc (Makrides et al., 2003; EFSA, 2004; Tolkien et al., 2015; Low et al., 2016). Nonheme iron oral toxicity has mostly been investigated through short-term clinical studies of 2 weeks on average. Subjects in those studies, typically healthy or iron-deficient, were usually given medicinal or supplemental iron as iron salts (mostly ferrous sulphate) or carbonyl iron, in tablet, capsule or liquid formulation (Tolkien et al., 2015; Low et al., 2016).

Of the few studies carried out in healthy individuals, the Tolkien et al. (2015) study provides the most comprehensive analysis of the health outcomes of nonheme iron ingestion in the general population. This Cochrane systematic review and meta-analysis of 20 placebo-controlled randomized controlled trials (RCTs), in which 3 168 non-pregnant adult men and women were randomized to receive 20 to 222 mg of Fe/day orally (as ferrous sulphate) for 1 to 26 weeks, found a significantly increased risk of GI side effects (odds ratio [OR], 2.32; 95% CI, 1.74–3.08; p < 0.0001) (Tolkien et al., 2015). The most reported GI symptoms included nausea, constipation and diarrhea. Nineteen of the 20 studies included in the review were double-blind RCTs with (generally) healthy non-anemic participants. The review found that the association between dose and GI side effects was not significant (p = 0.17) (Tolkien et al., 2015).
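The reported odds ratio, confidence interval and p-value can be checked against one another. The sketch below assumes a Wald-type 95% confidence interval that is symmetric on the log-odds scale, which is a common convention but is not stated in the text; the function names are hypothetical. Under that assumption, the standard error of ln(OR) is recovered from the interval width and the two-sided p-value follows from the normal distribution.

```python
import math


def log_or_standard_error(ci_low: float, ci_high: float, z_crit: float = 1.959964) -> float:
    """Standard error of ln(OR) implied by a symmetric (log-scale) 95% CI."""
    return (math.log(ci_high) - math.log(ci_low)) / (2.0 * z_crit)


def two_sided_p_value(z: float) -> float:
    """Two-sided p-value for a standard normal test statistic."""
    return math.erfc(abs(z) / math.sqrt(2.0))


if __name__ == "__main__":
    odds_ratio, ci_low, ci_high = 2.32, 1.74, 3.08  # values quoted in the text
    se = log_or_standard_error(ci_low, ci_high)
    z = math.log(odds_ratio) / se
    print(f"SE of ln(OR): {se:.3f}")
    print(f"z statistic:  {z:.2f}")
    print(f"two-sided p:  {two_sided_p_value(z):.2e}")
    # On the log scale the point estimate should sit near the CI midpoint.
    print(f"geometric mean of CI bounds: {math.sqrt(ci_low * ci_high):.2f}")
```

Under the stated assumption, the implied z statistic is well above 5, which is consistent with the reported p < 0.0001, and the geometric mean of the interval bounds is close to the reported OR of 2.32.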

The results from this review are supported by the findings from another Cochrane systematic review and meta-analysis, which involved 33 RCTs and quasi-RCTs in non-pregnant women of reproductive age (Low et al., 2016). They are also supported by findings from other prospective studies showing GI side effects at similar doses in healthy individuals (see IOM, 2001), iron-deficient patients (Ulvik et al., 2013) or healthy blood donors (Radtke et al., 2004; Bialkowski et al., 2015). In a double-blind placebo-controlled randomized parallel study in which healthy male and female blood donors consumed 60 mg Fe/day as supplemental nonheme iron for one month, there was a significant increase in the frequency of constipation (p < 0.05) and total incidence of GI side effects (p < 0.01) (Frykman et al., 1994). In that study, a lowest-observed-adverse-effect level (LOAEL) of 60 mg Fe/day was identified based on GI distress.

Similarly, four other RCTs (Radtke et al., 2004; Ulvik et al., 2013; Pereira et al., 2014; Bialkowski et al., 2015) found that the ingestion of ≥ 40 mg nonheme Fe/day for a short period resulted in GI side effects that were mostly mild.

All of these findings are consistent with the conclusions from previous assessments that GI side effects from oral exposure to nonheme iron are commonly observed at iron levels above 50 to 60 mg of Fe/day, particularly if the iron is taken alone (EFSA, 2015).

Overall, there is evidence of a causal relationship between short-term high intake of oral nonheme iron and adverse GI effects, and these effects are generally observed at doses exceeding 20 mg of Fe/day, that is, starting at 30 to 40 mg of Fe/day. The risk of GI adverse effects is higher when iron is taken orally as compared to intravenously (Makrides et al., 2003; EFSA, 2004; EFSA, 2015; Markova et al., 2015; Tolkien et al., 2015; Low et al., 2016). It has been reported that iron supplementation at levels below 30 mg Fe/day is not expected to affect serum zinc concentrations (Makrides et al., 2003). A LOAEL of 60 mg of Fe/day, based on (transient) gastrointestinal side effects in humans, was determined for risk assessment (Frykman et al., 1994).

2.4.3 Chronic exposure

The adverse health outcomes from chronic exposure to oral iron have been studied mainly in individuals with iron metabolism disorders. However, it is argued that chronic overexposure may be toxic for iron-replete, apparently healthy individuals. As such, the association between iron and chronic toxicity including hepatotoxicity, cardiovascular toxicity, hormonal toxicity, neurotoxicity and cancer has gained considerable attention in recent research.

Hepatotoxicity: The evidence to date, which is based mainly on expert consensus, does not support an association between chronic overexposure to nonheme iron and liver toxicity in iron-replete healthy individuals. According to the available data, chronic exposure to nonheme iron rarely results in liver damage in such individuals (EFSA, 2004; Chang and Rangan, 2011; Kew, 2014; Bloomer and Brown, 2019).

Cardiovascular toxicity (coronary heart diseases): Consistent with the conclusions from previous assessments (IOM, 2001; EFSA, 2004), the current evidence does not support an association between nonheme iron and cardiovascular toxicity in iron-replete healthy individuals although this type of iron might be a risk factor for coronary heart disease (CHD). However, the epidemiological data on the relationship between CHD risk and various variables related to iron intake and status have been inconsistent. Positive associations were found between CHD and measures of iron exposure in some studies (Salonen et al., 1992; Salonen et al., 1998; de Valk and Marx, 1999; van der A et al., 2005; Alissa et al., 2007; Hunnicutt et al., 2014), while others reported either negative associations (van der A et al., 2006; Mørkedal et al., 2011; Hunnicutt et al., 2014; Das De et al., 2015; Gill et al., 2017) or no association (Danesh and Appleby, 1999; van der A et al., 2005; Kaluza et al., 2014; Reyes et al., 2020).

Type 2 diabetes mellitus: The current evidence does not support an association between nonheme iron and type 2 diabetes in iron-replete healthy individuals. Significantly positive associations were reported between this health outcome and ferritin in systematic reviews and meta-analyses of prospective (Bao et al., 2012; Kunutsor et al., 2013) and observational (Zhao et al., 2012; Orban et al., 2014; Kataria et al., 2018) studies. Positive associations were also found between type 2 diabetes and iron body stores in large prospective nested case-control studies (Salonen et al., 1998; Jiang et al., 2004b). However, these findings were not supported by other prospective studies, which found no significant association with more closely related exposure variables such as dietary total iron, nonheme iron, and supplemental iron intakes (Jiang et al., 2004a; Bao et al., 2012; Kataria et al., 2018).

Neurotoxicity: There is no convincing evidence of the neurotoxicity of excess oral nonheme iron in apparently healthy individuals. However, the evidence from mechanistic studies and some magnetic resonance imaging (MRI) findings (for example, iron accumulation in the substantia nigra of patients with pantothenate kinase-associated neurodegeneration, Parkinson's disease and Alzheimer's disease) is still inconclusive. MRI can easily detect brain iron accumulation in some cases of neurodegeneration with brain iron accumulation despite the absence of a clear etiological relationship to the disease, which calls into question the role of excess iron as the primary cause of these multifactorial diseases (Hayflick and Hogarth, 2011; Chen et al., 2019). Overall, the evidence for iron chronic neurotoxicity in humans, which comes mainly from a few studies in infants and children, has been inconsistent (Agrawal et al., 2017). While no association was found between iron and cognitive development in infants fed iron-fortified milk formula (Iglesias et al., 2018), positive associations were reported for child neurodevelopment (including mental, psychomotor and behavioural aspects) and behaviour later in life, along with worse neuropsychological (that is, cognitive and visual-motor) scores (Lozoff et al., 2012; Iglesias et al., 2018; Gahagan et al., 2019).

Cancer: Despite the evidence of iron carcinogenicity based on mechanistic data (Chan et al., 2005; Torti and Torti, 2013; Fonseca-Nunes et al., 2014; Manz et al., 2016) and data on HH and African dietary overload patients (Fonseca-Nunes et al., 2014; Kew, 2014; Chua et al., 2016), the association between oral nonheme iron and human cancer is still inconclusive. Consistent with the conclusions from earlier assessments (IOM, 2001; EFSA, 2004), the epidemiological evidence is still equivocal and does not support a causal relationship between oral nonheme iron and cancer in the general population (Chan et al., 2005; Shyu et al., 2013; Fonseca-Nunes et al., 2014; Chua et al., 2016).

Mixed results have been reported for an association between dietary iron, supplemental iron and iron biomarkers and either colorectal, breast, gastric or esophageal cancer (Tseng et al., 1997; Fonseca-Nunes et al., 2014; Ashmore et al., 2016). No association was found between higher levels of dietary, supplemental or total iron intake and several biomarkers of iron status and either colorectal (Chan et al., 2005), gastric (Cook et al., 2012), breast (Kabat et al., 2007; Kabat et al., 2010; Chang et al., 2019) or endometrial (Kabat et al., 2008) cancer or all cancer sites combined (Hercberg et al., 2005).

Inverse associations (that is, a reduced risk) have been reported between (i) intake of dietary iron or iron supplements and cancer of the upper digestive tract (EFSA, 2004; Dawsey et al., 2014); (ii) intake of iron supplements and oesophageal cancer; and (iii) biomarkers of iron stores (mostly serum ferritin) and cancer in general (Fonseca-Nunes et al., 2014).

Finally, some studies have pointed to positive associations between dietary and supplemental iron and iron biomarkers and either colorectal (EFSA, 2004; Bastide et al., 2011; Meng et al., 2019), gastric (Dawsey et al., 2014) or breast (Moore et al., 2009; Chua et al., 2016; Diallo et al., 2016; Chang et al., 2019) cancer or cancer in general (Beguin et al., 2014; Chua et al., 2016).

2.5 Health effects in experimental animals

Studies have shown that the acute toxicity of oral nonheme iron ranges from low to moderate, and that this iron is not a reproductive or developmental toxicant or a carcinogen.

2.5.1 Acute toxicity

Studies have shown that nonheme iron has a low to moderate acute oral toxicity, with ferrous salts generally being more toxic than ferric salts. Toxicity varies with animal species and sex, as well as with iron formulation, compound or complex. Carbonyl iron is the least toxic and ferrous sulphate is among the most toxic. Also, males are less sensitive than females (Kawabata et al., 1989; Whittaker et al., 2002; WHO, 2003; Wan et al., 2015; Zhu et al., 2016). Depending on the compound, oral median lethal dose (LD50) values of 246 to 600 mg Fe/kg bw per day have been reported in mice (Sato et al., 1992; WHO, 2003; ECHA, 2020) and values of 220 to > 2 000 mg Fe/kg bw per day in rats (Sato et al., 1992; Whittaker et al., 2002; WHO, 2003; Wan et al., 2015; Zhu et al., 2016; ECHA, 2020). Also depending on the compound, acute oral exposure to iron may cause adverse effects with varying degrees of severity in the GI tract, liver, and potentially the kidney and spleen (Whittaker et al., 2002; Fang et al., 2018). GI tract effects are usually reversible upon the cessation of exposure (Wan et al., 2015).

2.5.2 Subchronic toxicity

Subchronic exposure to nonheme iron has consistently resulted in damage to several organs, including the liver, spleen, kidneys and GI tract, in several experimental animal models. Systemic toxicity is generally dose related and observed following iron overload at very high doses (Appel et al., 2001; Dongiovanni et al., 2013; Toyoda et al., 2014; Wan et al., 2015; Zhu et al., 2016; Fang et al., 2018) (Table 4). Hepatotoxicity is the most reported adverse outcome (Bacon et al., 1985; Kawabata et al., 1989; Myers et al., 1991; Toyoda et al., 2014; Zhu et al., 2016; Ding et al., 2021).

Table 4. Selected data on the repeated dose subchronic toxicity of oral iron

| NOAEL (mg Fe/kg per day) | Species, sex, number | Exposure duration | Compound and dose(s) | Critical effect(s) | Ref. |
|---|---|---|---|---|---|
| NOAEL > 196 mg/kg/d | Male Wistar rat (10/group) | 90 days | 100, 200 mg/kg as carbonyl iron. Gavage. | No toxicologically relevant effect. | Zhu et al. (2016) |
| NOAEL = 95 (males); 117.4 (females) | F344 rats, males and females (10/sex) | 90 days | Ferric chloride hexahydrate: 80, 154, 277, 550, 1 231 mg/kg/d in males; 88, 176, 314, 571, 1 034 mg/kg/d in females. Drinking water. | Reduced body weight gain. | Sato et al. (1992) |
| NOAEL = 55 (males); 110.1 (females) | Rat (125 males/250 females) | Males: 0–42 days. Females: 0–4 days post-partum | Anhydrous iron (di)chloride: 125 (male), 250 (female), 500 mg/kg/d (500 ~ 220.5 mg Fe/kg/d). Gavage. Conducted following OECD TG 422 and GLP. | Males: changes in organ weight. Females: changes in organ weight and histologic findings. | Beom (2004) Footnote * |
| NOAEL = 20.1 | Rat (OECD 422) | See OECD 422 | FeSO4.7H2O: 1, 30, 100, 300, 1 000 mg/kg/d (1 000 ~ 200 mg Fe/kg/d). Gavage. Conducted following OECD TG 422 and GLP. | Males: extramedullary hematopoiesis of the spleen. Females: increased levels of inorganic phosphate. | Furuhashi (2002) Footnote * |
| NOAEL = 8 mg/kg bw | Male Sprague Dawley rat (10/group): 100–105 g each | 30 days | Solution of FeSO4: 0, 8, 16, 24 mg Fe in vehicle (1 mL of 0.01 mol/L HCl). Gavage. | Reduced body weight. Liver and intestinal damage. | Fang et al. (2018) |
| NOAEL = 137.5 mg/kg bw | Male and female F344 rats | 13 weeks | Ferric citrate: 0, 143.9, 595.9, 2 834.7 mg Fe/kg bw/d in males; 0, 147.7, 601.4, 2 845.6 mg Fe/kg bw/d in females. | Significant reduction of body weight in both sexes at the highest dose. | Toyoda et al. (2014) |
| NOAEL > 77.9 mg/kg/d | Sprague Dawley rat (10/sex/group) | 28 days (GLP study) | Ferrous N-carbamylglycinate: 0, 150, 300, 600 mg/kg bw/d. Diet. | No effect. | Wan et al. (2015) |
| NOAEL > 11.5 mg/kg/d | Rat, males, 40/group | 61 days | Iron sulphate: 2.84, 5.69, 11.54 mg/kg/d. Sodium iron EDTA: 2.81, 5.67, 11.19 mg/kg/d. Diet. | No toxicologically significant effects. | Appel et al. (2001) |

EDTA – ethylenediaminetetraacetic acid; GLP – Good Laboratory Practice; NOAEL – no-observed-adverse-effect level; OECD TG 422 – Organisation for Economic Cooperation and Development Test Guideline 422 - Combined Repeated Dose Toxicity Study with the Reproduction/Developmental Toxicity Screening Test.

Footnote *: As reported in ECHA (2020).
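The approximate conversions quoted in the table (for example, 500 mg/kg/d of anhydrous iron dichloride ~ 220.5 mg Fe/kg/d, and 1 000 mg/kg/d of FeSO4.7H2O ~ 200 mg Fe/kg/d) are consistent with scaling the compound dose by the mass fraction of iron in the compound. The sketch below illustrates that calculation under two assumptions that are not stated in the source: that the cited studies used this conversion, and that rounded standard atomic masses are adequate. The function and constant names are hypothetical.

```python
from typing import Mapping

# Rounded standard atomic masses (g/mol); assumed adequate for illustration.
ATOMIC_MASS = {"Fe": 55.845, "Cl": 35.453, "S": 32.06, "O": 15.999, "H": 1.008}

# Element counts per formula unit.
FECL2 = {"Fe": 1, "Cl": 2}                     # anhydrous iron dichloride
FESO4_7H2O = {"Fe": 1, "S": 1, "O": 11, "H": 14}  # ferrous sulphate heptahydrate


def molar_mass(formula: Mapping[str, int]) -> float:
    """Molar mass (g/mol) of a compound given its element counts."""
    return sum(ATOMIC_MASS[element] * count for element, count in formula.items())


def iron_mass_fraction(formula: Mapping[str, int]) -> float:
    """Mass fraction of iron in the compound."""
    return ATOMIC_MASS["Fe"] * formula.get("Fe", 0) / molar_mass(formula)


def elemental_iron_dose(compound_dose_mg_per_kg: float, formula: Mapping[str, int]) -> float:
    """Convert a compound dose (mg/kg/d) to an elemental iron dose (mg Fe/kg/d)."""
    return compound_dose_mg_per_kg * iron_mass_fraction(formula)


if __name__ == "__main__":
    for dose in (125, 250, 500):
        print(f"FeCl2 {dose} mg/kg/d -> {elemental_iron_dose(dose, FECL2):.1f} mg Fe/kg/d")
    for dose in (100, 1000):
        print(f"FeSO4.7H2O {dose} mg/kg/d -> "
              f"{elemental_iron_dose(dose, FESO4_7H2O):.1f} mg Fe/kg/d")
```

With these inputs the computed values fall within about 0.5% of the table entries (55, 110.1 and 220.5 mg Fe/kg/d for iron dichloride; 20.1 and about 200 mg Fe/kg/d for ferrous sulphate heptahydrate); the small differences presumably reflect rounding in the source.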

2.5.3 Chronic toxicity

Based on the limited data available, hepatotoxicity is the main adverse outcome from chronic exposure to oral nonheme iron, although it typically occurs following iron overload and at very high doses (Plummer et al., 1997; Tjalkens et al., 1998; Stål et al., 1999; Asare et al., 2006; Bloomer and Brown, 2019). Hepatotoxicity, as evidenced by increased serum levels of aminotransferases (alanine aminotransferase and aspartate aminotransferase) and hepatic markers of oxidative stress and preneoplastic nodules or liver foci, was observed after rats or mice were fed a diet high in iron compounds (Ramm et al., 1995; Tjalkens et al., 1998; Stål et al., 1999; Asare et al., 2006). The limited data also suggest that the severity of the effects depends on the dose of elemental iron (Sato et al., 1992; Inai et al., 1994; Plummer et al., 1997; Asare et al., 2006).

2.5.4 Carcinogenicity

There is no evidence of nonheme iron carcinogenicity by the oral route (Stål et al., 1997; ECHA, 2020). Lifetime oral administration of iron salts to mice and rats had no significant effect on tumour incidence or on the distribution of different types of tumours in either females or males (Sato et al., 1992; Inai et al., 1994; Stål et al., 1999; Asare et al., 2006).

2.5.5 Reproductive and developmental toxicity

There is no evidence that iron is toxic to reproduction and development. Data from experimental animal studies indicate that iron compounds are neither reprotoxic nor embryotoxic nor teratogenic (WHO, 2003; U.S. EPA, 2006; ECHA, 2020).

2.6 Iron and infection

Iron is essential for many intestinal pathogenic enterobacteria (for example, E. coli, Escherichia/Shigella, and Clostridium species) and oral exposure to excess nonheme iron may promote their growth, abundance and virulence (Yilmaz and Li, 2018). This may impair the GI tract microbiome and barrier functions (EFSA, 2004; Dostal et al., 2014; Gies et al., 2018; Brabin et al., 2019). In turn, this may increase the risk of GI tract (and respiratory) inflammation and infections as evidenced by data on iron-replete young children (Sazawal et al., 2006; Zimmermann et al., 2010; Soofi et al., 2013; Jaeggi et al., 2015; Paganini et al., 2017) and experimental animals (Constante et al., 2017; Fang et al., 2018; Hoffmann et al., 2021).

2.7 Genotoxicity

The currently available data indicate no evidence of genotoxicity for iron (WHO, 2003; U.S. EPA, 2006; NHMRC and NRMMC, 2011; Wan et al., 2015; ECHA, 2020). Many inorganic iron salts produced negative results in both in vivo (for example, ferric chloride, ferrous chloride, ferrous sulphate) and in vitro (for example, ferric chloride, ferric diphosphate, ferric orthophosphate, ferrous chloride, ferrous sulphate) genotoxicity tests (Stål et al., 1997; WHO, 2003; Toyoda et al., 2014; Wan et al., 2015; ECHA, 2020). For example, negative results were obtained for ferric chloride, ferrous chloride and ferrous sulphate in the in vivo mammalian chromosome aberration test. These salts all gave negative results in vitro in the bacterial gene mutation test, the mammalian gene mutation test, and the mammalian chromosomal aberration test (ECHA, 2020). In most genotoxicity tests, negative results were also obtained for iron carbonyl and numerous iron complexes including ferric citrate, ferrous lactate, ferrous gluconate, iron dextran, and ferrous N-carbamylglycinate (WHO, 2003; EFSA, 2004; Toyoda et al., 2014).

2.8 Mode of action

The mode of action by which excess oral nonheme iron induces gastrointestinal injury, a local effect, has yet to be fully characterized. To date, the most supported hypothesis for mode of action is direct and local injury (for example, simple irritation, erosions, or more severe inflammation) to the GI tract mucosa (EFSA, 2004; U.S. EPA, 2006; Onorati et al., 2020). Some studies have also mentioned changes to the composition or metabolism of the bowel microbiota, but these effects have not been fully explored to date (Tolkien et al., 2015; Qi et al., 2020).

Compelling evidence from observations of iron deposits in the GI tract in cases involving therapeutic iron ingestion or in patients with a history of oral iron supplementation supports the finding that nonheme iron ingestion induces and exacerbates erosive or corrosive mucosal injury (Sunkara et al., 2017). Erosions (often with non-bleeding ulcers), erythema and yellowish-brown discolouration of the GI tract mucosa are the main (macroscopic) features of oral nonheme iron-related GI tract injury. This is evidenced by GI tract endoscopy and esophagogastroscopy results from several cases of iron poisoning, including patients with iron pill-induced gastritis (Cimino-Mathews et al., 2010; Liabeuf et al., 2014; Morais et al., 2017; Sunkara et al., 2017; Onorati et al., 2020).

Histological findings from GI tract biopsies have confirmed crystalline iron deposits, sometimes heavy, usually brown-black, in the GI tract mucosa of patients with erosive gastritis and ulcers related to oral iron (for example, tablets, pills) (Haig and Driman, 2006; Cimino-Mathews et al., 2010; Sunkara et al., 2017; Ching et al., 2019; Onorati et al., 2020). Such iron deposits can be extracellular or intracellular and occur in macrophages, glandular epithelium and blood vessels (Morais et al., 2017; Onorati et al., 2020).

Two key events are identified for oral nonheme iron-induced corrosive mucosal injury: (i) formation of hydrated ferric oxide (a corrosive species, also known as rust) and reactive oxygen species (ROS), and (ii) direct damage to the GI tract mucosa (Tenenbein et al., 1998; IOM, 2001; Jiang et al., 2004b; Collard, 2009; Cimino-Mathews et al., 2010; Manz et al., 2016; Anderson et al., 2017; Sunkara et al., 2017; Fang et al., 2018; Bloomer and Brown, 2019; Chen et al., 2019; Ginzburg et al., 2019; Zhang et al., 2019; Onorati et al., 2020). Most evidence supporting these key events is based on expert consensus.

In cases of oral exposure to excess nonheme iron, most excess iron is left unabsorbed in the GI tract lumen and mucosa due to homeostasis. In the first key event (formation of hydrated ferric oxide), the pro-oxidative properties of iron and the characteristics of the GI tract environment (for example, high level of cellular metabolic activity, pH) lead any unabsorbed free iron to participate in and catalyze a series of reactions. These include the Haber-Weiss reaction, which will generate ROS (for example, hydroxyl radicals), and Fenton reactions, which will generate hydrated ferric oxide (Tenenbein, 1998; Qi et al., 2020). In vitro studies have shown a correlation between intracellular free iron and oxidative stress (EFSA, 2004). Reactive oxygen species have also been linked to mucosal alterations in gastric or intestinal injuries (Liabeuf et al., 2014).
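For orientation, the two reactions named above are commonly written in the following textbook forms. These equations are general chemistry background and are not taken from the cited sources; the stoichiometry shown is the simplified net form, and the conditions in the GI tract may differ.

$$\mathrm{Fe^{2+} + H_2O_2 \rightarrow Fe^{3+} + OH^{-} + {}^{\bullet}OH} \quad \text{(Fenton reaction)}$$

$$\mathrm{O_2^{\bullet-} + H_2O_2 \rightarrow O_2 + OH^{-} + {}^{\bullet}OH} \quad \text{(net Haber-Weiss reaction, iron-catalyzed)}$$

In this simplified picture, the hydroxyl radical is the ROS of concern, and the ferric iron produced in the first reaction is the species that can go on to form hydrated ferric oxide.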

The second key event results from the action of the newly formed chemically reactive species. When ROS production exceeds the capacity of the endogenous antioxidant systems, the reactive species start accumulating in the lumen or mucosal cells of the GI tract. Due to prolonged stasis there, they may directly injure the GI tract mucosa via corrosion (in the case of hydrated ferric oxide) or oxidative damage (in the case of ROS). This will lead to irritation (that is, erosive injury similar to chemical burns) and focal inflammation and ulceration, respectively (EFSA, 2004; Jiang et al., 2004b; Hashash et al., 2013; Liabeuf et al., 2014; Anderson and Frazer, 2017; Zhang et al., 2019; Onorati et al., 2020; Qi et al., 2020).

Overall, the resulting injury will depend on local concentrations of free iron in the GI tract and time of contact (IOM, 2001; EFSA, 2004; Hashash et al., 2013; Onorati et al., 2020).

2.9 Selected key study

The pharmacokinetics of iron differs between humans and other animal species. According to pharmacokinetic data for humans, infants and pregnant people are not more sensitive to iron than the general population. Even though older people might experience severe GI symptoms due to changes in dietary habits (among other factors), this is usually in the context of iron therapy supervised by their physicians. In a literature search, the lowest point of departure for GI toxicity was identified in a short-term clinical study (Frykman et al., 1994).

The selection of the placebo-controlled, double-blind study by Frykman et al. (1994) as the key study for the risk assessment of iron in drinking water is consistent with the approach used in other assessments (IOM, 2001). Despite some limitations (for example, its short duration), this study provides strong evidence for GI distress in iron-replete individuals. The population in this study includes healthy men and non-pregnant women, and the test chemical is relevant for drinking water applications. The findings from this study are supported by other clinical studies involving similar subjects (Radtke et al., 2004; Ulvik et al., 2013; Pereira et al., 2014; Bialkowski et al., 2015; Tolkien et al., 2015; Low et al., 2016).

In the study, 97 Swedish adult men and women who were regular blood donors were given either 60 mg total Fe/day (as nonheme iron only), 18 mg total Fe/day (as a mixture of nonheme and heme iron) or a placebo for one month to evaluate the side effects of these iron supplements. At the end of the supplementation period, the only major difference among the study groups was in the side effects reported. The group of individuals who received nonheme iron alone presented with a significantly higher frequency of constipation (p < 0.05) and total incidence of all side effects (p < 0.01) than the other groups. A LOAEL of 60 mg total Fe/day (as nonheme iron only) was identified in this study for daily iron supplementation based on gastrointestinal distress (Frykman et al., 1994). To that LOAEL, the IOM (2001) added the estimated European mean daily intake of 11 mg total dietary iron, to estimate a LOAEL of 70 mg/day (rounded) for a total intake of iron and gastrointestinal effects. Considering the mild nature of the gastrointestinal effects observed, the IOM (2001) applied an uncertainty factor of 1.5 to estimate a NOAEL of 45 mg/day for total intake of iron (rounded).
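The arithmetic behind the IOM (2001) values can be laid out as a short worked example. The unrounded intermediate results (71 and about 46.7 mg/day) are computed here for illustration only; the rounded values of 70 and 45 mg/day are those reported in the text.

$$\text{LOAEL}_{\text{total}} = 60\ \text{mg/day} + 11\ \text{mg/day} = 71\ \text{mg/day} \approx 70\ \text{mg/day (rounded)}$$

$$\text{NOAEL}_{\text{total}} = \frac{70\ \text{mg/day}}{1.5} \approx 46.7\ \text{mg/day} \approx 45\ \text{mg/day (rounded)}$$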

3.0 Derivation of a Health-Based Value (HBV)

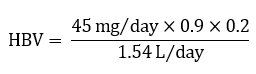

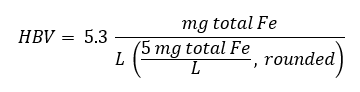

A tolerable upper intake level (UL) of 45 mg/day for total intake of iron, based on gastrointestinal toxicity in humans, has been established for apparently healthy adults (IOM, 2001; Health Canada 2010). Using this UL, an HBV for total iron (both Fe(II) and Fe(III)) in drinking water is calculated as follows:

Where:

Figure 1 - Text description

The HBV is calculated by multiplying 45 milligrams per day by 0.9, then by 0.2. This product is then divided by 1.54 litres per day.

Figure 2 - Text description

The HBV is equal to 5.3 milligrams total iron per litre.

- 45 mg/day (for total intake of iron) is the UL (IOM, 2001; Health Canada, 2010).

- 0.9 is the fraction of total iron intake contributed by nonheme iron.

- 0.2 is the default allocation factor value as outlined in Krishnan and Carrier (2013) although data suggest that the drinking water contribution could be less (see section 1.3).

- 1.53 L/day is the drinking water daily intake for a Canadian adult (Health Canada, 2021). Due to its physicochemical properties, exposure to iron from showering or bathing is not expected to be significant. As such, a multi-route exposure assessment, as outlined by Krishnan and Carrier (2008), was not performed.
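The HBV calculation described in this section can be reproduced with a few lines of code. The sketch below is illustrative only: the function name and parameter names are hypothetical, and the default values are the inputs listed above (UL of 45 mg/day, nonheme fraction of 0.9, allocation factor of 0.2, and adult drinking water intake of 1.53 L/day).

```python
def health_based_value(ul_mg_per_day=45.0,
                       nonheme_fraction=0.9,
                       allocation_factor=0.2,
                       water_intake_l_per_day=1.53):
    """Return the health-based value (HBV) for total iron in mg/L.

    HBV = (UL x nonheme fraction x allocation factor) / daily water intake.
    Parameter names are illustrative; defaults are the values listed in the text.
    """
    if water_intake_l_per_day <= 0:
        raise ValueError("water intake must be positive")
    return (ul_mg_per_day * nonheme_fraction * allocation_factor
            / water_intake_l_per_day)


hbv = health_based_value()
print(f"HBV = {hbv:.1f} mg/L")  # prints: HBV = 5.3 mg/L
```

Because the allocation factor is a default and the text notes that the drinking water contribution could be lower, passing a different `allocation_factor` shows how the HBV would scale with that assumption.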

4.0 Analytical and Treatment Considerations

4.1 Analytical methods to detect iron

4.1.1 Standardized methods

The standardized methods available for the analysis of total iron in drinking water and their respective MDLs are summarized in Table 5. MDLs are dependent on the sample matrix, instrumentation and selected operating conditions and will vary between individual laboratories. Analyses for iron should be carried out as directed by the responsible drinking water authority. Water utilities should confirm that the method reporting limits are low enough to ensure accurate quantitation at concentrations below the proposed AO.

| Method (Reference) | Methodology | MDL (µg/L) | Interferences/Comments |
|---|---|---|---|
| U.S. EPA Methods | | | |
| EPA 200.5 Rev 4.2 (U.S. EPA, 2003) | Axially viewed inductively coupled plasma atomic emission spectrometry (AVICP-AES) | 3.3 | Matrix interferences: calcium, magnesium and sodium > 125 mg/L and silica > 250 mg/L |
| EPA 200.7 Rev 4.4 (U.S. EPA, 1994a) | Inductively coupled plasma-atomic emission spectrometry (ICP-AES) | 30 | Matrix interferences: total dissolved solids > 0.2% weight per volume (w/v) |
| EPA 200.9 Rev 2.2 (U.S. EPA, 1994b) | Graphite furnace atomic absorption (GFAA) | NA | NA |
| APHA Standard Methods | | | |
| SM 3111 B (APHA et al., 2017) | Direct Air-Acetylene Flame Method | 20 | NA |
| SM 3113 B (APHA et al., 2017) | Electrothermal atomic absorption spectrometric method (AAS) | 1 | NA |
| SM 3120 B (APHA et al., 2017) | Inductively coupled plasma mass spectrometry (ICP-MS) | 30 | Matrix interference: total dissolved solids > 1 500 mg/L |
| 3500-Fe B (APHA et al., 2017) | Phenanthroline method | 10 | Interfering substances: strong oxidizing agents, that is, cyanide, nitrate, phosphate, chromium and zinc at concentrations 10 times greater than the iron concentration. |

APHA – American Public Health Association; ICP-MS – inductively coupled plasma mass spectrometry; MDL – method detection limit; NA – not available.
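As a rough illustration of how a utility might screen the methods above against the proposed AO of 100 µg/L, the sketch below computes the ratio of the AO to each listed MDL. This is a simplification under stated assumptions: an MDL is not the same as a laboratory's method reporting limit, the minimum ratio of 5 used in the example is a hypothetical screening value rather than a requirement from this document, and the function names are illustrative.

```python
PROPOSED_AO_UG_L = 100.0  # proposed aesthetic objective for total iron

# MDLs (µg/L) as listed in the table above; None marks "NA".
METHOD_MDLS_UG_L = {
    "EPA 200.5 Rev 4.2": 3.3,
    "EPA 200.7 Rev 4.4": 30.0,
    "EPA 200.9 Rev 2.2": None,
    "SM 3111 B": 20.0,
    "SM 3113 B": 1.0,
    "SM 3120 B": 30.0,
    "3500-Fe B": 10.0,
}


def ao_to_mdl_ratio(mdl_ug_l, ao_ug_l=PROPOSED_AO_UG_L):
    """Return AO / MDL, or None when no MDL is available."""
    if mdl_ug_l is None:
        return None
    return ao_ug_l / mdl_ug_l


def methods_meeting_ratio(min_ratio, mdls=METHOD_MDLS_UG_L,
                          ao_ug_l=PROPOSED_AO_UG_L):
    """List methods whose MDL is at least `min_ratio` times below the AO.

    `min_ratio` is a hypothetical screening criterion, not a regulatory one.
    """
    return sorted(
        name for name, mdl in mdls.items()
        if mdl is not None and ao_ug_l / mdl >= min_ratio
    )


for name, mdl in METHOD_MDLS_UG_L.items():
    ratio = ao_to_mdl_ratio(mdl)
    label = "NA" if ratio is None else f"{ratio:.1f}"
    print(f"{name}: AO/MDL = {label}")
```

In practice, the comparison should use the reporting limit achieved by the laboratory in the relevant sample matrix, since MDLs vary between laboratories.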

4.1.2 Sample preparation

Total iron includes both the dissolved and particulate (suspended) fractions of iron in a water sample and is analyzed using methods for total recoverable iron. Analysis of total iron is needed for comparison to the proposed AO.

Accurate quantification of dissolved, particulate and total iron in samples is dependent on the proper sample preservation and processing steps. It is very difficult to prevent the oxidation of Fe(II) during the transport of a water sample that contains Fe(II) from a field site to a laboratory for analysis because Fe(II) is not stable and changes to the Fe(III) form in solution in contact with air. For this reason, determination of ferrous iron should be done in the field at the time of sampling, and great care must be taken to minimize exposure of the sample to the atmosphere.

Standard method SM 3030B provides guidance on filtration and preservation (acidification) procedures for the determination of dissolved or particulate metals (APHA et al., 2017). Importantly, to determine dissolved iron concentrations, samples should be filtered and the filtrate acidified to pH < 2 at the time of collection (not at the laboratory). The delineation between dissolved and particulate fractions in a sample is dependent on the filter type and pore size. Therefore, water utilities that may have smaller particles or colloids present in the water should consider whether the standard filter size (0.4–0.45 µm pore-diameter membrane) is suitable. Information on methods for fractionating constituents in water into dissolved, colloidal and particulate fractions is available elsewhere (Brandhuber et al., 2013).

Currently, United States Environmental Protection Agency (U.S. EPA) methods 200.5, 200.7 and 200.9 and SM 3113B do not require hot acid digestion for total recoverable metals unless the turbidity of the sample is greater than 1.0 nephelometric turbidity unit (NTU) (U.S. EPA, 2003, 1994a, b; APHA et al., 2017). Verification that adequate recovery of metals has occurred in different sample matrices by comparing digested and undigested samples is recommended (APHA et al., 2017).
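The turbidity criterion and the recommended comparison of digested and undigested samples can be expressed as a short sketch. The function names are hypothetical, and the sketch does not set an acceptance range for recovery because none is given here; it only computes the quantities described above.

```python
TURBIDITY_THRESHOLD_NTU = 1.0  # digestion is required above this turbidity


def requires_hot_acid_digestion(turbidity_ntu):
    """Return True when sample turbidity exceeds 1.0 NTU."""
    if turbidity_ntu < 0:
        raise ValueError("turbidity cannot be negative")
    return turbidity_ntu > TURBIDITY_THRESHOLD_NTU


def undigested_recovery_percent(undigested_ug_l, digested_ug_l):
    """Express the undigested result as a percentage of the digested result.

    Values well below 100% suggest that iron is being missed without
    digestion in this sample matrix.
    """
    if digested_ug_l <= 0:
        raise ValueError("digested result must be positive")
    return 100.0 * undigested_ug_l / digested_ug_l


# Example with made-up measurements (illustrative values only).
print(requires_hot_acid_digestion(0.4))
print(undigested_recovery_percent(90.0, 100.0))
```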

4.1.3 Online and portable colorimetric analyzers

Commercial online analyzers and portable test kits are available for quantifying dissolved and total iron in source and drinking water and are generally based on the colorimetric method. Online analyzers can be used to obtain a rapid or continuous (online units only) indication of changes in iron concentrations.