Evaluation of Distributed Computing Services


© Her Majesty the Queen in Right of Canada, as represented by the Minister responsible for Shared Services Canada, 2016

Cat. No. P118-12/2016E-PDF

ISBN 978-0-660-05578-7


Executive Summary

What we examined

The evaluation team examined Distributed Computing Services (DCS) provided by Shared Services Canada (SSC) to a group of client organizations on a cost-recovery basis. DCS encompass a range of services that support the provisioning and functionality of employee workstations and computing environments, such as desktop engineering, deployment and ongoing technical support. SSC’s work in consolidating government-wide procurement of software and hardware was excluded from the evaluation.

DCS are identified in Sub-Program 1.1.1 of SSC’s 2014–2015 Program Alignment Architecture and were included in the 2014–2017 Risk-based Audit and 2014–2019 Evaluation Plan. The objective of this evaluation, as per the Treasury Board Policy on Evaluation, was to determine the relevance and performance of DCS. The objective was further refined to focus on assessing performance and identifying factors that affect the achievement of service outcomes.

Why it is important

DCS provide employees, or end users, within an organization with a functioning workstation and computing environment that enables end-user productivity in the execution of their work. DCS thus serve as an enabler for achieving an organization’s mandate and business objectives. SSC’s DCS presently support over 19,000 workstations across five client organizations. The evaluation team assessed the extent to which DCS service objectives were achieved, with a view to improving service delivery and informing the development of the Workplace Technology Devices (WTD) strategy, which will transform and modernize the delivery of distributed computing in the Government of Canada.

What we found

The evaluation team found that SSC was making progress in the achievement of DCS outcomes, but identified a number of challenges, both intrinsic and external to DCS, that impacted service outcomes and client satisfaction.

Overall, employees in client organizations have access to functioning devices, applications and services, and to technical support. DCS are largely meeting commitments to provide a reliable and stable desktop environment and to meet central agency requirements for upgrades.

Client satisfaction with the services received and with ongoing technical support was mixed, with larger client organizations reporting higher levels of satisfaction and smaller client organizations raising concerns over SSC’s ability to resolve DCS issues in a timely manner. Client management acknowledged that DCS technical staff were professional and dedicated, but identified staffing as an issue. Other factors contributing to diminished client satisfaction were the lengthy timelines for executing client requests, extensive administrative work, communication gaps, and the high cost of services for small client organizations.

SSC has made some progress in sharing lessons learned across client organizations, implementing a number of new technologies and solutions, and reducing costs for larger client organizations. Additional opportunities for standardization, automation and innovation exist within SSC’s DCS, as well as within government-wide distributed computing.

We also identified several factors that affected DCS service delivery. These included funding and resourcing challenges, a lack of standard SSC processes and tools for demand management and client communication, and limitations in the management of service agreements. Also lacking were a liaison function, which is fundamental to understanding client needs and environments and to driving DCS solutions, as well as a service catalogue, a costing methodology and costs per service line. Some of these limitations are expected to be addressed by SSC’s organizational restructuring, which took effect in April 2015.

The future of SSC’s DCS will be aligned with a multi-year WTD strategy, which will transform government-wide DCS. We found that a phased approach to transformation would work best in the government context, as would a hybrid service delivery model. Strategic out-tasking, in which well-defined and routine components of DCS are contracted out to the private sector, has the potential to avoid the risks associated with a single mode of service delivery and to focus in-house expertise on higher-value activities, such as innovation or customer experience.

Yves Genest

Chief Audit and Evaluation Executive

Introduction

- This report presents the results of the evaluation of Shared Services Canada’s (SSC) Distributed Computing Services (DCS). The evaluation forms part of SSC’s 2014–2017 Risk-based Audit and 2014–2019 Evaluation Plan and was conducted in accordance with the Treasury Board (TB) Policy on Evaluation and the Standard on Evaluation for the Government of Canada.

- DCS are part of the Information Technology (IT) Infrastructure Services Program in the 2014–2015 SSC Program Alignment Architecture. DCS encompass a range of services that support the provisioning and functionality of employee/end-user workstations and computing environments. These include desktop engineering for applications and operating systems, end-user support through a service desk and deskside technical support, file and print services, and remote access, among others.Footnote 1 SSC provides these DCS to the Department and a small group of client organizations on a cost-recovery basis.Footnote 2

- There has been no previous evaluation of DCS. The current evaluation examines the relevance and performance of cost-recoverable DCS for the period from 2011–2012 to 2014–2015. SSC efforts in leading the development of the Workplace Technology Devices (WTD) strategy to transform and modernize the delivery of DCS in the Government of Canada are excluded from the present assessment.

Program Profile

Background

- SSC was created on August 4, 2011, to transform how the Government of Canada manages its IT infrastructure. Following the creation of SSC, Public Works and Government Services Canada (PWGSC), which provided some optional IT infrastructure services to federal organizations, transferred to SSC the control and supervision of operational domains related to email, data centres, network services, telecommunications, IT security and DCS, along with the associated funding and positions responsible for service delivery.

- Prior to the transfer, PWGSC managed its own DCS and signed agreements with the Canada School of Public Service (CSPS), Infrastructure Canada and the Canadian Human Rights Tribunal (CHRT) for the provision of DCS on a cost-recovery basis. All these organizations are now served by SSC, which also provides DCS to the Department’s employees on the SSC network.Footnote 3 SSC and client organizations sign Service Level Agreements (SLAs) to ensure that the elements and commitments needed to maintain the delivery of DCS and associated service support by SSC are in place. Services included in SLAs are tailored to each client organization and cover the configuration, deployment, installation and end-to-end lifecycle management of the workspace environment.

- Other federal departments and agencies continue to manage their DCS until the implementation of a multi-year WTD strategy for the Government of Canada.

Roles and Responsibilities

- The provision of DCS is a shared responsibility between the client (PWGSC, SSC, CSPS, Infrastructure Canada, and CHRT) and the service provider (SSC). Service-specific roles and responsibilities are outlined in each individual SLA in accordance with the agreed-upon service support model.

- In general, client organizations are responsible for:

- providing and reviewing the scope of DCS and service volume allocations (consumption metrics) covered by the SLA;

- identifying and providing timely and accurate information and requirements for adding, changing or removing end-user accounts, applications, shared drive access and workstations;

- procuring and storing a minimum pool of devices for deployment (in-stock assets);

- managing software licences;

- testing functionality of client business applications;

- supplying the list of names for executive support (VIP);

- submitting written service requests for new services to SSC; and,

- paying all charges associated with the SLA and cost-recovery agreements negotiated for new service requests.

- Within SSC, the delivery of DCS has been overseen by two functions: a team of client relationship managers and a team of technical experts responsible for the day-to-day administration of DCS. Client relationship managers focus on building an effective and sustained business relationship with clients, negotiating business arrangements (SLAs and cost-recovery agreements), managing the intake of business requests and client monitoring. The DCS technical experts are responsible for delivering DCS according to the consumption metrics and service level targets in signed agreements, providing technical advice to clients on business requirements, conducting a semi-annual review of the consumption metrics, certifying and testing infrastructure changes, communicating planned outages and/or service changes, and providing estimates for new services and projects.

- In April 2015, SSC realigned a number of business functions, including those for demand management, client relationship management, service design and delivery. Data collection and analysis for the evaluation were undertaken prior to the realignment.

Resources

- As of March 31, 2015, the full DCS resource complement on the service delivery side consisted of 248 full-time equivalent positionsFootnote 4 (including one Senior Director and two Directors). An additional 80 call centre agents, engaged through a contractual arrangement, were assigned to end-user technical support in the National Capital Region. Every client organization also had access to a client relationship manager, who dedicated a portion of their time to DCS along with other SSC IT services provided to the client.

- DCS expenditures in 2013–2014 were $25.6 million,Footnote 5 representing 1.3% of the departmental gross expenditures ($2,004 million) for that year.

- According to a revenue consolidation exercise undertaken by DCS management, DCS accounted for a total of $26.4 million in revenues in 2014–2015,Footnote 6 including revenues from SLAs and cost-recovery agreements for new services.

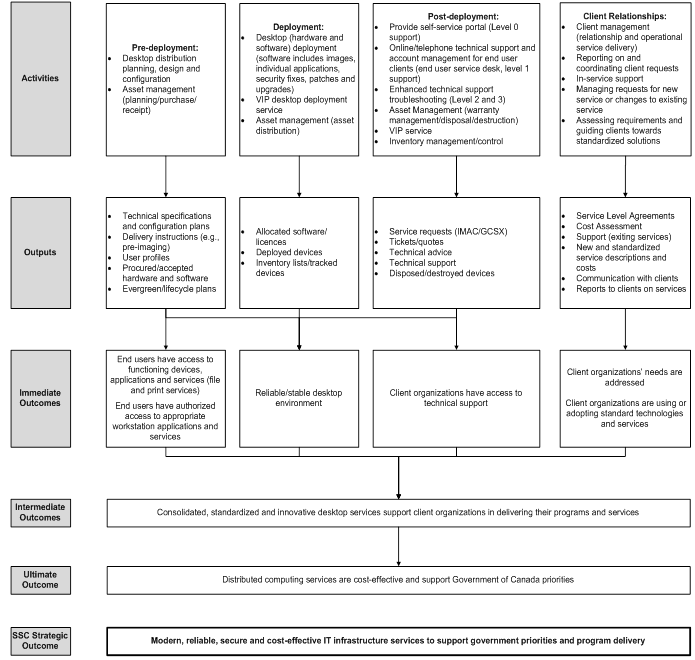

Activities

- DCS activities consist of pre-deployment, deployment and post-deployment activities that are supported by client relationship management. Pre-deployment activities involve desktop distribution planning, design and configuration that lead to the development of technical specifications and configuration plans, delivery instructions and plans for deployment, and definition of user profiles. Pre-deployment activities also include planning, purchasing and receipt of hardware and software and the creation of lifecycle and evergreen plans.

- Deployment is concerned with providing the end user with access to functioning devices, applications and services. This involves engineering, maintenance and delivery of the operating system and applications to workstations; monitoring security advisories and ensuring that all security fixes, patches and upgrades are tested and installed in a timely manner; hardware and software distribution; and asset tracking activities.

- Post-deployment activities focus on providing the end user with ongoing technical support and user account management. These services are delivered through a self-service web portal, online and telephone requests to the Service Desk and enhanced deskside support and troubleshooting by technical teams. On the asset management side, post-deployment activities involve warranty management, inventory control and asset disposal.

- Client relationship activities are client-facing and support the delivery of DCS. They include managing agreements and client expectations, coordinating and triaging requests for new services or changes to existing ones, assessing client requirements and guiding clients towards appropriate solutions. They also involve reporting on performance and service utilization against commitments, and serving as the point of contact for issue escalation.

Logic Model

- The activities described above are graphically presented in the logic model developed for SSC’s DCS (Annex A). The logic model identifies service activities, outputs and outcomes and shows the relationships/linkages among these components to illustrate the logic of how DCS are expected to achieve immediate, intermediate and ultimate outcomes. The logic model was developed based on a document review, interviews with DCS stakeholders and a workshop with DCS management.

Focus of the Evaluation

- The overall objective of the evaluation was to determine the relevance and performance of DCS as per the TB Policy on Evaluation. Following consultations with SSC stakeholders involved in the delivery and transformation of distributed computing, two specific objectives were identified for the evaluation of cost-recoverable DCS:

- To assess the performance of services by examining issues pertaining to service effectiveness, efficiency and economy; and

- To identify factors enhancing or inhibiting the achievement of expected outcomes.

- We did not address the issue of relevance as SSC’s DCS will be transformed in accordance with the WTD strategy being developed for the Government of Canada. Analyses and business cases prepared for the transformation of government-wide DCS confirmed the ongoing relevance of DCS in a broader government context and the alignment of services with government priorities and federal roles and responsibilities.

- An evaluation matrix was developed during the planning phase to guide the evaluation. It identified a set of evaluation questions, indicators, research methods and data sources. Multiple lines of evidence gathered through quantitative and qualitative methods were used to assess DCS. These included a document and literature review; interviews with management representatives from five client organizations, DCS management and client relationship managers; case studies examining the delivery of DCS in other large government organizations; and analyses of DCS performance and financial data. In addition, secondary data were used to inform the evaluation. The secondary data sources comprised the environmental scan and the current state assessment conducted for the WTD initiative, DCS reports to client organizations on key performance indicators, DCS activity-based costing data and Service Desk client satisfaction results.

- Annex B presents additional information on the approach and methodologies used to conduct this evaluation, as well as evaluation limitations and mitigation strategies.

Findings and Conclusions

- The findings and conclusions below are based on multiple lines of evidence collected during the evaluation. They are organized in the report according to the evaluation objectives and include a section on alternatives to DCS service delivery.

Performance

- The evaluation examined the performance of DCS, that is, the degree to which services achieved their expected immediate, intermediate and ultimate outcomes and demonstrated efficiency and economy. The outcome achievement section is followed by an assessment of the factors that enhance or inhibit the achievement of service outcomes and of alternatives to service delivery.

Outcome Achievement

Immediate outcome: End users have access to functioning devices, applications and services (file and print services); End users have authorized access to appropriate workstation applications and services.

- Based on the documents reviewed, data reports from SSC databases and interviews with management representatives from client organizations, we found that employees/end users in client organizations had access to functioning devices, applications and services. The level of satisfaction with the achievement of this outcome was higher for DCS clients with a larger number of employees and lower for DCS clients with a smaller number of employees. The DCS team established processes around end-user authentication, access control, software authorization and distribution that ensured proper access to workstation applications and services. The transition to the new operating system has further brought enhanced security through hard drive encryption and efficiency through automated distribution of applications while maintaining end-user productivity.

- In 2014–2015, SSC’s DCS served over 19,000 workstationsFootnote 7 across five client organizations (Table 1). Some clients also received asset management services for their DCS assets (desktops, laptops, monitors and notebooks). Please refer to the table below for details.

| Client | # of workstations* | # of assets deployed** (including monitors) | # of assets in in-stock inventory** (including monitors) |
|---|---|---|---|
| SSC | 3,613 | 7,866 | 2,435 |
| PWGSC | 13,921 | 29,058 | 2,870 |
| CSPS | 1,150 | 2,739 | 1,077 |
| Infrastructure Canada | 501 | no agreement | no agreement |
| CHRT | 30 | no agreement | no agreement |

Data Source: * as reported in the activity-based costing provided by DCS management. ** extracted from the Asset Management database by SSC’s IT asset management team.
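As an illustrative consistency check (not an SSC tool), the short Python sketch below sums the workstation counts transcribed by hand from Table 1 to confirm the "over 19,000 workstations" figure cited in the text, and shows how concentrated the workstation base is in the two large clients. The dictionary and variable names are hypothetical and exist only for this example.

```python
# Hypothetical transcription of the workstation counts in Table 1 (2014-2015).
workstations = {
    "SSC": 3_613,
    "PWGSC": 13_921,
    "CSPS": 1_150,
    "Infrastructure Canada": 501,
    "CHRT": 30,
}

# Total workstations served across the five client organizations.
total = sum(workstations.values())
print(f"Total workstations: {total:,}")

# Share of all workstations belonging to the two large clients (PWGSC and SSC).
large_share = (workstations["PWGSC"] + workstations["SSC"]) / total
print(f"PWGSC + SSC share: {large_share:.0%}")
```

Under these transcribed figures the total is 19,215 workstations, of which roughly nine in ten belong to PWGSC and SSC, which helps explain why the overall service metrics discussed below are dominated by the two large clients.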

- The DCS team migrated clients’ desktop operating systems to Windows 7 within the timeframe allocated to comply with the Treasury Board of Canada Secretariat’s (TBS) requirement to upgrade Government of Canada computers. The new operating system brought about notable benefits, such as enhanced security through BitLocker hard drive encryption, simplified login that authenticates users to their workstations, the Internet and email services,Footnote 8 less intrusive software updates that run in the background, and Wi-Fi access capability. DCS employed a new system configuration manager to support and simplify the distribution of approved applications, which can be installed for selected end users or computers, thus restricting online downloads by non-administrators.

- Management representatives from all five client organizations interviewed agreed that their employees had access to functioning workstations and that this DCS outcome was achieved. They commented that end users within their organizations were generally satisfied with the functionality of the devices provided, but also noted that there had been some security vulnerabilities in the past and that patch management and testing could be improved, as these affected the functioning of some applications. Client representatives from three organizations emphasized the benefits of locked workstations (restricted administrative rights), which protected government assets and the network from unauthorized downloads and vulnerabilities. Representatives from large government departments interviewed for the case study similarly identified this as a priority for implementation. Lengthy timelines for actioning client requests and a lack of engineering resources were highlighted as further areas for improvement, with pre-deployment viewed as a weakness by all client representatives interviewed. For the client organization with the smallest number of employees, some solutions (e.g. automatic updates) were not available due to the high cost of their implementation. As a result, some work was performed manually by a technician on site. Representatives from the three smaller client organizations also noted that they were often at the tail end of deployments and could benefit from participating in earlier roll-outs.

- DCS management interviewed highlighted the improvements made to DCS to enable the achievement of this outcome. These included the new multi-level governance structure, which allowed lessons learned from work with different client organizations to be shared, the integration of DCS engineering teams, and the implementation of a new system configuration manager and virtualization technologies. They also underscored that greater emphasis should be placed on managing assets (providing space for assets, insurance funding, asset replacement/evergreening). Another area identified as supporting the achievement of this immediate outcome was the documentation of DCS processes. While SSC interviewees placed less emphasis on this, interviewees from the large government departments selected for the case study underscored that well-documented processes, with clear service standards and service times and reflecting new services, policy requirements and new systems, were fundamental to ensuring the functionality of end-user workstations.

Immediate outcome: Reliable and stable desktop environment.

- We found that the DCS provided by SSC to the five client organizations were generally reliable and stable. This finding is supported by the resolution rate of critical business impact incidents affecting DCS and by interviews with management representatives from client organizations.

- Data on the performance of deskside support services in resolving incidents within committed resolution times show that, for the period from June 2013 to June 2014, SSC met or exceeded the operational-level resolution rate target of 80%. All incidents classified as critical or high priority that could disrupt the end-user desktop experience were addressed within established target times.

- Client relationship managers, who act as contacts for resolving DCS issues with client organizations, reported that their clients had not raised issues with them related to DCS technical solutions or the reliability and stability of DCS. Indeed, management representatives from all five client organizations acknowledged the reliability, stability and security of DCS. Client interviewees commented that the services received were stable and compared favourably in terms of reliability to other IT services provided by SSC. They perceived the management of incidents and vulnerabilities affecting DCS to be adequate, but suggested improvements in patch (update) management and pre-release testing. The most commonly cited patch management issue involved Java updates and upgrades, which required clients to update their business applications to remain compatible with new Java versions. As for pre-release testing, most client organizations indicated that they undertake some level of internal testing before a DCS application is released and that they at times find coding errors or other defects (bugs), suggesting that SSC could improve its pre-release testing.

Immediate outcome: Client organizations have access to technical support.

- We observed that DCS service support models differed across the five client organizations. Technical support options available to end users in client organizations are outlined in the respective SLAs, including the type of support provided, contact mechanisms and hours of operation. All client organizations have access to the DCS Service Desk (Level 1 technical support), which serves as the central point of contact for the delivery of service operations to end users. Service Desk agents are accessible by telephone from 7:00 to 17:00 local time to troubleshoot and resolve faults, provide “how-to” instructions and manage requests for installations, moves and changes. They log all service requests and issues in a ticketing system. Two clients (PWGSC and SSC) have access to web-based portals, available 24/7, that facilitate the submission of approved requests for installations, moves and changes to the Service Desk. These clients also have access to self-serve service depots, established in major centres across the country, that enable the distribution and exchange of devices. At the time of the evaluation, PWGSC was the only client with a self-serve web portal for password resets. Should client issues not be resolved at the first point of contact, Service Desk agents record them in the ticketing system and dispatch them to another level of support, such as Level 2 (on-site) or Level 3 (specialized) technical support. SSC uses the IT Infrastructure Library (ITIL®) as a framework to ensure consistent and repeatable processes for managing IT service and support.

- Based on data reports from SSC databases and interviews with management representatives from client organizations, we found that technical support provided by DCS was, in general, sufficient for the majority of end users, but was not always timely. End users in client organizations experienced long wait times in accessing the Service Desk, and smaller client organizations reported difficulties in receiving timely resolutions.

- As the first line of technical support, the DCS Service Desk aims to respond to end-user inquiries quickly and to resolve the majority of issues at the first point of contact. To measure Service Desk responsiveness to incoming calls, DCS uses the percentage of calls answered within 120 secondsFootnote 9 (target: 70% or greater) and the percentage of calls abandoned by the end user after a 60-second wait without reaching a live agent (target: 7.5% or lower). The achievement of these targets fluctuated across months and client organizations in 2014–2015 (Table 2). Overall, 49% of calls were answered within 120 seconds and 10% were abandoned after 60 seconds, both of which point to longer wait times.

| Client | # of calls answered | # of calls answered within 120 seconds | # of calls abandoned after 60 seconds | Calls answered within 120 seconds (%) | Calls abandoned after 60 seconds (%) |
|---|---|---|---|---|---|
| SSC | 34,084 | 20,860 | 2,452 | 61% | 6% |
| PWGSC | 80,719 | 34,865 | 12,075 | 43% | 12% |
| CSPS | 7,443 | 4,247 | 635 | 57% | 7% |
| Infrastructure Canada | 2,291 | 1,609 | 198 | 70% | 7% |
| CHRT | 110 | 80 | 11 | 73% | 9% |

Data Source: Data reported in the Government Operations Portfolio IT Operations Metrics for the period from April 2014 to March 2015.
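The overall 49% figure is easier to interpret once it is clear how it is aggregated. The Python sketch below, offered only as an illustration and assuming the call counts transcribed by hand from Table 2, recomputes each client's answer rate against the 70% target and then the overall rate from pooled call totals. The names used are hypothetical. The abandonment percentages are not recomputed because the report does not state the denominator used for them.

```python
# Hypothetical transcription of Table 2 (April 2014 to March 2015).
# Each tuple: (calls answered, calls answered within 120 seconds).
calls = {
    "SSC": (34_084, 20_860),
    "PWGSC": (80_719, 34_865),
    "CSPS": (7_443, 4_247),
    "Infrastructure Canada": (2_291, 1_609),
    "CHRT": (110, 80),
}

TARGET = 0.70  # answer at least 70% of calls within 120 seconds

# Per-client rate compared with the target.
for client, (answered, within_120) in calls.items():
    rate = within_120 / answered
    status = "meets" if rate >= TARGET else "below"
    print(f"{client:<22} {rate:.0%} ({status} target)")

# Overall rate from pooled totals, not the average of client percentages.
total_answered = sum(answered for answered, _ in calls.values())
total_within = sum(within for _, within in calls.values())
overall = total_within / total_answered
print(f"Overall: {overall:.0%}")
```

Pooling the totals reproduces the 49% overall rate reported in the text, whereas a simple average of the five client percentages would be close to 61%. The difference arises because PWGSC, with the lowest answer rate, accounts for roughly two thirds of all calls answered.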

- According to DCS management, longer wait times were, in part, caused by the roll-outs of several major government initiatives, which affected end users’ operating systems and applications (e.g. transition to Windows 7 and Office 2010, introduction of the Public Service Performance Measurement Application, launch of the new email systemFootnote 10) and resulted in increased call volumes. Stakeholders interviewed identified better coordination, communication and testing of new releases and use of flexibility built into the Service Desk contractFootnote 11 as opportunities to improve Service Desk responsiveness to end users.

- To guide the resolution of client issues, Service Desk agents are trained in the use of established protocols and procedures, including step-by-step call scripts and general etiquette practices. DCS management confirmed that Service Desk agents have access to a document repository containing over 1,750 individual procedures, which are updated periodically. Data collected by Service Desk management in 2013–2014 through a post-call satisfaction survey show that end users were satisfied with the resolution of their issues over the phone.Footnote 12

- SSC’s targets are to resolve 75% of end-user requests for DCS at the first point of contact with a live agent and to dispatch no more than 18% of requests to other levels of technical support. In 2014–2015, DCS met these incident resolution targets overall, with a resolution rate of 83% and a dispatch rate of 17%. These results were driven by the two large client organizations (PWGSC and SSC), which account for the majority of problems reported by end users to the Service Desk (Table 3). For the three smaller clients, incident resolution rates were below the established targets for most months in 2014–2015, resulting in high dispatch rates. DCS and client management interviewees perceived that the higher dispatch rate for smaller client organizations may be caused by Service Desk agents’ limited access to client-specific applications, as well as by SSC work prioritization.

| Client | # of tickets logged | # of tickets resolved | Resolution rate (%) | Dispatch rate (%) |
|---|---|---|---|---|
| SSC | 27,593 | 23,386 | 85% | 15% |
| PWGSC | 40,874 | 35,153 | 86% | 14% |
| CSPS | 7,293 | 5,291 | 73% | 27% |
| Infrastructure Canada | 2,355 | 1,309 | 56% | 44% |
| CHRT | 112 | 67 | 60% | 40% |

Data Source: Data reported in the Government Operations Portfolio IT Operations Metrics for the period from April 2014 to March 2015.
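To illustrate how the overall results in Table 3 relate to the per-client figures, the Python sketch below recomputes resolution and dispatch rates from ticket counts transcribed by hand from the table and checks each against the 75% and 18% targets. It assumes, consistent with the rows of Table 3, that every ticket not resolved at the first point of contact is dispatched, so the dispatch rate is one minus the resolution rate. The function and variable names are hypothetical.

```python
# Hypothetical transcription of Table 3 (April 2014 to March 2015).
# Each tuple: (tickets logged, tickets resolved at first point of contact).
tickets = {
    "SSC": (27_593, 23_386),
    "PWGSC": (40_874, 35_153),
    "CSPS": (7_293, 5_291),
    "Infrastructure Canada": (2_355, 1_309),
    "CHRT": (112, 67),
}

RESOLUTION_TARGET = 0.75  # resolve at least 75% at first point of contact
DISPATCH_TARGET = 0.18    # dispatch no more than 18% to other support levels


def rates(logged, resolved):
    """Return (resolution rate, dispatch rate).

    Assumes every unresolved ticket is dispatched, as the rows of Table 3 imply.
    """
    resolution = resolved / logged
    return resolution, 1 - resolution


# Per-client rates and whether both targets are met.
for client, (logged, resolved) in tickets.items():
    res, disp = rates(logged, resolved)
    ok = res >= RESOLUTION_TARGET and disp <= DISPATCH_TARGET
    print(f"{client:<22} resolved {res:.0%}, dispatched {disp:.0%}, targets met: {ok}")

# Overall rates from pooled ticket totals.
total_logged = sum(logged for logged, _ in tickets.values())
total_resolved = sum(resolved for _, resolved in tickets.values())
overall_res, overall_disp = rates(total_logged, total_resolved)
print(f"Overall: resolved {overall_res:.0%}, dispatched {overall_disp:.0%}")
```

Pooling the ticket counts reproduces the overall 83% resolution and 17% dispatch rates cited in the text. Only SSC and PWGSC meet both targets individually, which is consistent with the observation that the overall result is driven by the two large clients.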

- Management representatives from client organizations reported mixed satisfaction with the technical support provided by DCS. All client interviewees acknowledged that DCS staff were professional, hard-working and dedicated, but noted that DCS resources appeared to be stretched across many priorities and projects and that DCS lacked staff in general. Larger clients considered DCS support to be adequate, timely and accessible for employees in their organizations, as well as cost-efficient, as it was provided only during core business hours. They also reported satisfaction with the efficiency of the new self-serve service depot model and the cost savings associated with reduced deskside support (as demonstrated in DCS cost-per-workstation calculations for these clients for 2013–2014 and 2014–2015). Smaller client organizations, on the other hand, expressed concern over Service Desk agents’ limited understanding of their technical environments, their ability to resolve issues in a timely manner, and their ability to document resolutions and work-arounds for future use. Most client representatives identified having a dedicated person on the floor who understands the client environment as a key determinant of success. Other areas requiring attention from the clients’ perspective were diminished regional support due to staff shortages in the regions, and the ticketing system, in particular allowing clients to access it to enable timely follow-up and reduce duplication in managing tickets.

- DCS managers and client relationship managers interviewed also considered technically competent and dedicated staff to be a core strength of DCS. However, half of the DCS managers interviewed noted a number of difficulties in managing their resources, such as staffing, change management and workload balance, due to funding restrictions and uncertainty about the future of the services. As of March 31, 2015, 79 of the 248 positions (32%) within DCS were vacant and another 13 (5%) were filled on a temporary basis. The vast majority of these vacancies were at the CS-1 (Support Technician) and CS-2 (Support Analyst) levels. DCS managers interviewed named several challenges in staffing these positions.

- The literature review and case studies conducted for the evaluation highlighted best practices in the provision of end-user technical support. These include:

  - an integrated Service Desk, accessible through one telephone number across all sites, with all types of end-user requests triaged to virtual teams for execution;

  - reliance on self-serve web portals for simple and straightforward requests to reduce the volume of calls to the Service Desk;

  - use of remote assistance technology, desktop virtualization and self-serve service depots for device distribution to reduce deskside support;

  - provision of a VIP support service for executives;

  - management of an IT knowledge base for IT specialists and end users to support troubleshooting;

  - rationalization and standardization of devices, operating systems and applications to increase efficiency and consistency;

  - use of a single ticketing system; and

  - periodic performance monitoring and reporting.

- While many of these practices are already in place for DCS (such as an IT knowledge base for IT specialists and a single telephone number for the Service Desk), opportunities remain to improve technical support by advancing automation, standardization and reporting.

Immediate outcome: Client organizations’ needs are addressed; client organizations are using or adopting standard technologies and services.

- We found that management from client organizations held varied perceptions of the extent to which DCS addressed their needs. Perceptions were generally more favourable for existing services and less favourable for the management of new service requests and client projects. The time taken to resolve client issues and administer new requests was viewed as too long and as affecting client operations. Both client management and DCS management viewed as lacking the liaison function that is fundamental to understanding client organizations’ needs and environment and to driving DCS solutions. To compensate, management in some client organizations reported dedicating internal resources to managing DCS issues and identifying solutions, and called for a dedicated on-site SSC specialist embedded within their organization.

- Client management, DCS management and client relationship managers interviewed acknowledged that SSC met client organizations’ needs with respect to existing services, whereas requests for new services or changes were not administered in a timely fashion and often required lengthy negotiation of agreements before the DCS technical team could proceed with implementation. All interviewees commended the DCS team for successfully migrating client organizations to Windows 7 and Office 2010 within the timeframe set by TBS for the transition. Some client interviewees provided additional examples of successful and timely delivery of their DCS projects by SSC. Management representatives from all client organizations interviewed noted that day-to-day delivery of services was adequate and addressed their organizations’ needs. However, they also underscored notable time lags and routine delays in the processing and execution of service requests and client projects. Many gave examples of simple requests that took SSC several months to action, or of miscommunication about implementation timelines. They stressed that these delays affected the client organizations’ work and budgeting, as IT technologies quickly become obsolete.

- From the client management’s perspective, delays in servicing their requests occurred for two reasons: first, client organizations relied on the same, overcommitted DCS resources; second, communication channels between client relationship managers, who scoped the services and signed agreements, and DCS service delivery managers, who implemented them, were not optimal. Some client interviewees gave examples of receiving differing advice from SSC employees and, in the end, relying on personal networks and connections to get answers and advance projects. Representatives from smaller client organizations suggested that DCS did not serve them well because they were often last in line for the deployment of new technologies and services. Representatives from four client organizations pointed to a lack of planning and communication with clients, for example, when SSC staff were unable to describe the solutions being worked on or to provide timelines or costs for delivery. This made budgeting and committing funds difficult for client organizations. Client management acknowledged DCS’ technical capacity, but underscored the need for better coordination, planning and communication within SSC.

- Client interviewees offered several solutions for enhancing the achievement of this outcome. One is to ensure that the service provider understands the client environment, preferably by having a dedicated on-site IT specialist or manager who would oversee the implementation of agreements, understand both SSC and the client organization, and support planning, monitoring and reporting. The other is to develop more flexible processes and mechanisms for handling client requests, since much time and effort is spent on paperwork and approvals, and for developing out-of-the-box solutions or finding creative ways to address issues.

- Client relationship managers interviewed similarly acknowledged that their clients were dissatisfied with the timeliness of services, with delays and with SSC not providing certain services. They commented that these delays were caused in part by a lack of flexibility in processes and agreement mechanisms (e.g. no alternative mechanism to bill a client for small requests).

- DCS management echoed the challenges observed by client relationship managers and clients, but also highlighted difficulties experienced by the technical team due to client requirements that were unclear or poorly articulated. In their view, management in client organizations tends to focus on technical requirements rather than business requirements, which may hamper the development of solutions. There were also challenges in changing client behaviour under the new service delivery model, with some clients continuing to seek one-on-one support. Management of the DCS technical team commented on the need to find a balance between client requirements and preferences and the efficiencies obtained through standardization. They viewed their role as advocating for and advancing the use and adoption of standard technologies and services across client organizations (discussed in the next section).

Intermediate outcome: Consolidated, standardized and innovative desktop services support client organizations in delivering their programs and services.

- We found that DCS, supported by TB policy direction and by client organizations’ focus on driving efficiency, have made progress toward this outcome. Many of the practices implemented by DCS, such as self-serve service depots, application virtualization and remote assistance, are aligned with industry direction and have been shared, to some extent, across the five client organizations. Additional opportunities exist to add value through greater standardization and consolidation, both within SSC’s DCS and across government-wide distributed computing. Other opportunities for improvement involve developing stronger collaboration with industry and dedicating resources to research and development.

- The Chief Information Officer Branch within TBS provides strategic direction and leadership for government-wide IT through policy development, monitoring, oversight and capacity building. The Branch required that Government of Canada computers be upgraded from the Windows XP operating system to Windows 7, and to the Microsoft Office 2010 productivity suite, by March 31, 2014, ahead of the end of Microsoft’s support for the previous versions. DCS successfully rolled out the new desktop environment (Windows 7 and Office 2010) to its client organizations ahead of the deadline. The transition to a standardized operating system and office suite facilitates procurement, technical support and security management.

- Management representatives from all five client organizations confirmed that they had successfully completed the migration to Windows 7 and Office 2010 and acknowledged the benefits of the new desktop environment. The two clients that, with DCS’ assistance, have transformed their deskside support through self-serve service depots reported a high level of satisfaction with the new support model, as well as savings in productivity time and support costs. Another client expressed interest in adopting the same support model.

- DCS management interviewees viewed the consolidation, standardization and innovation of desktop services as their priorities. The majority of managers commented that technical solutions, novel ideas and lessons learned across clients were discussed at weekly management meetings, and that DCS had recently implemented a number of new tools, technologies and solutions. For example, the DCS technical team has implemented new virtualization technologies that simplified application management and reduced interdependencies between software and hardware; upgraded clients’ operating systems and reduced levels of customization, which supported the creation of self-serve service centres (exchange depots) and reduced IT labour costs; and simplified end-user access to the Service Desk. Approximately half of interviewees noted that work on standardizing and consolidating technologies was ongoing, was needed across all Government of Canada DCS, and would require additional investment. Other government departments interviewed as part of the evaluation reported that they focused on centralizing the management of their DCS, reducing regional sites and clarifying their governance.

- DCS management identified well-structured, multi-level governance, which brings together directors and managers from across DCS functions on strategic and operational issues, as a strength that directly supports standardization and innovation within DCS. Apart from governance, some managers thought that DCS lacked the mandate and resources to innovate and evolve services. Many saw opportunities to enhance service delivery by establishing links with industry stakeholders to keep abreast of best practices and to participate in piloting new technologies (to practise implementation and understand the underlying technology). In managers’ view, dedicating a portion of DCS resources to research and development would help establish a technology vision for DCS.

- Additional opportunities for standardization and innovation may involve increasing the level of automated support and capitalizing on the Service Desk as a central call-tracking and service hub for all employee issues and inquiries. Gartner (Footnote 13) estimates that 40% of most Service Desks’ contact volume could be resolved through IT self-serve, with password resets accounting for up to 30% of end-user requests. In the government context, where employees use multiple applications and must change passwords periodically, this proportion may be higher. At the time of the evaluation, self-serve functionality was not available to most DCS client organizations. The literature reports that using a single service tool to manage all employee requests, including those related to human resources, procurement and other functions, simplifies access for end users and reduces service desk software and maintenance costs.

Ultimate Outcome: DCS are cost-effective and support Government of Canada priorities.

- We found that DCS costs varied greatly across client organizations because of differing DCS support models and differing opportunities to leverage economies of scale within each client organization. DCS costs have gradually decreased for some client organizations as a result of self-serve service depots, reduced deskside support and device rationalization initiatives undertaken by clients. DCS management reported limited opportunities to reduce costs for client organizations with few employees, whose DCS costs have remained high.

- According to the 2014–2015 revenue consolidation, SSC received $26.4M in revenue for providing DCS to five client organizations, ranging from approximately $100,000 for the smallest client to $18.6M for the largest. These revenues cover services provided under SLAs and under cost-recovery agreements for new requests. DCS management undertakes an annual activity-based costing exercise to account for its expenditures and to arrive at a cost per workstation for each client. The 2014–2015 cost per workstation calculations show that DCS costs varied significantly across clients due to client size and capacity for economies of scale (Table 4). The average cost per workstation across all five client organizations, weighted by number of workstations, was $1,120. Representatives from all five client organizations interviewed identified reducing DCS costs as a priority, with several clients reporting progress in this area as a result of their internal efforts and their collaboration with the DCS technical team on desktop transformation initiatives.

Table 4: DCS cost per workstation by client organization, 2014–2015

| Client | Cost per workstation | Number of workstations |
|---|---|---|
| SSC | $1,179 | 3,613 |
| PWGSC | $1,052 | 13,921 |
| CSPS | $1,469 | 1,150 |
| Infrastructure Canada | $1,663 | 501 |
| CHRT | $2,939 | 30 |

Data Source: 2014–2015 activity-based costing provided by DCS management.
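The report does not state how the $1,120 average was derived. As a check, the short Python sketch below, which uses only the figures in Table 4, suggests that it corresponds to a workstation-weighted average (total estimated cost divided by total workstations) rather than a simple mean of the five per-client figures. It assumes each client's total cost equals its cost per workstation multiplied by its number of workstations; the function names are illustrative only and are not part of any DCS costing tool.

```python
# Figures from Table 4 (2014-2015 activity-based costing):
# client -> (cost per workstation in dollars, number of workstations)
clients = {
    "SSC": (1179, 3613),
    "PWGSC": (1052, 13921),
    "CSPS": (1469, 1150),
    "Infrastructure Canada": (1663, 501),
    "CHRT": (2939, 30),
}


def weighted_average_cost(data):
    """Total estimated cost (cost x workstations, summed) divided by total workstations."""
    total_cost = sum(cost * n for cost, n in data.values())
    total_workstations = sum(n for _, n in data.values())
    return total_cost / total_workstations


def simple_average_cost(data):
    """Unweighted mean of the per-client cost-per-workstation figures."""
    return sum(cost for cost, _ in data.values()) / len(data)


total_ws = sum(n for _, n in clients.values())
weighted = weighted_average_cost(clients)
simple = simple_average_cost(clients)

print(f"Total workstations: {total_ws:,}")
print(f"Weighted average cost per workstation: ${weighted:,.0f}")
print(f"Simple average cost per workstation: ${simple:,.0f}")

# Each client's cost relative to the weighted average
for name, (cost, _) in clients.items():
    print(f"{name}: {cost / weighted:.2f}x the weighted average")
```

The weighted figure rounds to $1,120, matching the reported average, and the 19,215 workstations are consistent with the "over 19,000 workstations" cited in the Executive Summary. A simple mean would be about $1,660, because it gives CHRT's 30 workstations the same weight as PWGSC's 13,921. On the weighted basis, CHRT's cost is roughly 2.6 times the average.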

- For instance, over the past three fiscal years, DCS costs per workstation for some clients have been lowered by 5% to as much as 30%, and the cost for DCS’ two largest client organizations is now in line with the 2014 Gartner industry benchmark. Client and DCS management interviewees highlighted cost reductions achieved through self-serve service depots, decreased deskside support costs, clients’ focus on optimizing device-to-employee ratios, the transition from desktop to laptop workstations and the removal of certain services from the SLAs. This evaluation did not examine the impact of these reductions on the end-user experience; however, the reductions are consistent with the Government of Canada’s cost-containment initiatives and focus on reducing administrative expenses.

- The cost per workstation for smaller clients was 1.5 to three times higher than the industry benchmark. Management interviewees from small client organizations and from SSC perceived that DCS were not well positioned to support small clients cost-effectively, since technical solutions were commonly built on the assumption of a large scope and had to be replicated on each client’s network regardless of the number of employees. In their view, lowering costs for these clients will require innovative approaches, such as no longer treating clients as silos or implementing a common network. The evaluation team was not able to establish the extent to which SSC would support the implementation of these approaches for smaller DCS client organizations.

- Participating in a benchmarking exercise would offer a further opportunity to review the service costs and cost drivers of SSC’s DCS. Interviewees in two of the three government departments selected for the case studies reported engaging an external benchmarking organization to review their DCS and costs against industry standards, with a view to right-sizing their resources and practices.

Factors Influencing Outcome Achievement

- We identified several factors that contributed to or, conversely, hindered DCS delivery and service excellence. Some of these factors were internal to DCS, while others related to SSC or the broader government context. The factors discussed below were acknowledged by most interviewees as requiring attention.

Service agreements and reporting

- We found that although DCS’ SLAs contained all the essential elements required by the TBS Guideline on Service Agreements, such as the nature and scope of services, roles and responsibilities, service standards, performance metrics and financial arrangements, they had not been used as a tool to manage client relationships and service performance. Of note, most SLAs were signed well after service delivery had started. Some of the initial SLAs were signed between the client organizations and PWGSC, and SSC had not subsequently analysed service targets and levels. Agreements were not standardized across client organizations in terms of duration, service description and costs, and some agreements were signed for recurring one-year periods, increasing administrative effort.

- The vast majority of client management, DCS management and client relationship managers interviewed commented that SLAs had not been used as performance monitoring and accountability arrangements, but simply as financial documents to transfer funds from the client to SSC. All client interviewees and some SSC interviewees suggested that shortcomings in managing SLAs limited both the service provider’s accountability for results and the client’s accountability for payment. Several clients expressed concern that the SLAs and DCS performance reports on the achievement of SLA targets did not provide a basis for recourse should DCS fail to meet established targets. The evaluation team found no evidence that SSC used performance reporting to manage client expectations or DCS staff workload through peaks and valleys in demand.

Recommendation 1

The Assistant Deputy Minister, Networks and End User Branch, in collaboration with the Senior Assistant Deputy Minister, Service Management and Data Centres, should review the use of Service Level Agreements as a tool to monitor performance and assure accountability for Distributed Computing Services, including ensuring that agreement terms and conditions are agreed upon before services begin.

- With respect to cost-recovery agreements for new services, both client organizations and SSC teams experienced similar issues: agreements were not signed on time and required many approvals, as well as refinement to determine in-scope and out-of-scope services and negotiation of costs. Client management and client relationship managers interviewed highlighted the absence of a service catalogue with standard service descriptions, a costing methodology and a cost per service line (e.g. addition of a unit of storage space) as inhibiting the management of client relationships and contributing to inconsistency in service management. Most client organizations interviewed commented that this might be expected of a young organization, but that for SSC, in its fourth year of operation, a service catalogue and a consistent approach to costing were both expected and fundamental. This is discussed further below.

Client management

- For the planning and delivery of DCS, client organizations’ primary link to SSC was a client relationship manager. While client relationship managers were intended to align clients and SSC as strategic collaborators and to drive value realization by strategically enabling IT for programs and priorities, interviews with DCS stakeholders suggested that their role had focused predominantly on administrative matters. Some interviewees commented on the diminished role and value-added of client relationship managers. For most management representatives from client organizations, this was the least satisfactory aspect of their work with SSC on DCS. There was also some confusion, among client organizations and among SSC staff, about how responsibilities were divided between client relationship managers and the service delivery managers housed within DCS (Footnote 14).

- As mentioned earlier in this report, clients advocated for an on-site manager who is embedded in the client organization and familiar with the client environment. In the absence of this role, some clients reported relying more heavily on DCS service delivery managers for advice and guidance, escalating their requests and/or taking the lead in managing their projects with SSC. Three-way communication and meetings between managers from the client organization, relationship management and the DCS service delivery/technical team were reported to work best.

- Aside from better defining the client relationship function, DCS stakeholders interviewed noted a lack of processes and tools within SSC to support the work of client relationship managers. They suggested that this resulted in sub-optimal business intake and client communication. First, a lack of organizational charts and contacts across SSC functions affected the ability of client relationship managers to respond in a timely manner and led to greater reliance on personal networks and relationships. Second, the processes for demand management were neither well documented nor optimized at SSC. Most stakeholders pointed out that SSC processes were unnecessarily labour-intensive, which could be addressed by implementing an automated web portal for simple service requests or a service catalogue with established pricing. Finally, tools to support the client relationship function were not developed or standardized, and SSC managers regarded the service request and revenue tracking system as inadequate.

- Similar observations were made in a recent SSC audit of Demand and Relationship Management, which found that a standardized methodology, a centralized decision-making point for business intake and centralized tracking of requests through a single service window had not yet been established. It confirmed that, prior to the April 2015 realignment, communication with SSC clients was inconsistent and some client relationship managers did not have a good understanding of the client environment and priorities. Another audit, of SLAs for the House of Commons, noted that SSC had no process to track and monitor client issues.

Recommendation 2

The Assistant Deputy Minister, Networks and End User Branch, in collaboration with the Senior Assistant Deputy Minister (SADM), Service Management and Data Centres, and the SADM, Strategy, should ensure that an efficient and standardized approach to demand management and communication with Distributed Computing Services client organizations is established and implemented.

DCS funding

- Optional services in the Government of Canada are commonly funded through full cost recovery, using a revolving fund or net-voting authority. Rates charged for these services are set to recover full costs, so that the overall operation breaks even over a reasonable period of time.

- Revenue and expenditure data specific to DCS in SSC’s financial systems were inconsistent, as some client requests and revenue had been assigned inappropriately. The DCS team has begun an exercise to validate revenue forecasts in 2014–2015 to ensure that the proper revenues are allocated to DCS. DCS management also undertakes an annual activity-based costing exercise to account for its costs for each client organization.

- DCS management interviewees identified the alignment between revenues received and DCS expenditures as an area for improvement. They noted that the difficulties they experienced in accessing funds affected their ability to deliver service excellence. Funding restrictions within SSC created difficulties with planning, staffing and staff retention, contract management, and research and development activities. Day-to-day management was also affected by the elevated level of delegated financial authority and by travel restrictions, both of which led to delays. Funding to invest in new technologies or equipment was described as insufficient.

- Management representatives from most client organizations commented on insufficient funding and understaffing for DCS within SSC, particularly in the regions. All clients also pointed out that workload management and staff turnover within DCS appeared to be a challenge for SSC. Some client interviewees perceived that their payments for agreed-upon DCS were channelled to DCS late, or not at all. While client interviewees recognized the high technical expertise and work ethic of DCS’ technical staff, their observations about SSC’s management of DCS resources diminished their satisfaction.

Recommendation 3

The Assistant Deputy Minister, Networks and End User Branch, in collaboration with the Senior Assistant Deputy Minister (SADM), Corporate Services and Chief Financial Officer, and the SADM, Service Management and Data Centres, should improve financial reporting on, and alignment of, Distributed Computing Services (DCS) revenues and expenditures to enable optimal planning, investment and management of DCS resources.

DCS mandate and vision

- While the Financial Administration Act authorizes departments to provide internal support services to other departments, DCS are not explicitly referenced in SSC’s mandate, except for the acquisition and provision of hardware and software for end-user devices, which was granted to SSC in 2013. The current SLAs for DCS with five client organizations were inherited by SSC from PWGSC and have continued to be delivered under the status quo. SSC has not pursued any new agreements with other clients (Footnote 15), which some DCS managers interviewed viewed as a limitation of current services, since other government organizations could benefit from SSC’s expertise in DCS and its unique alignment with other IT infrastructure services. To highlight DCS’ expertise internally, DCS management took some steps to position its services strategically (for example, by supporting the distribution of BlackBerry devices).

- In 2013, SSC was mandated to finalize the business case for the WTD initiative that would outline a multi-year strategy to transform the delivery of distributed computing across government, including efforts already underway to standardize and consolidate the procurement of distributed computing software and hardware. Through the WTD initiative, the Government of Canada seeks to consolidate and modernize distributed computing to reduce costs and increase security for 95 federal organizations. While providing DCS presently remains the responsibility of individual departments and agencies, SSC is also exploring how these services could be modernized, with a particular focus on reducing costs and improving the user experience while maintaining data and network security.

- The WTD initiative is being developed and managed separately from the cost-recoverable DCS within SSC. SSC’s DCS will be transformed in accordance with the government-wide strategy. DCS management within SSC and in other government departments interviewed indicated that they were operating in a “waiting mode” until the new strategy is announced, and that the pending strategy raises questions about possible outsourcing options. In a climate of uncertainty and budget reductions, DCS within SSC and other government departments operate with a short-term vision in addressing client requests, with some initiatives temporarily put on hold.

Alternative Delivery

- Potential alternative service delivery options are examined in accordance with the TB Policy on Evaluation as a means of achieving better service outcomes and/or reducing costs. The options discussed below involve greater engagement of the private sector and optimization of internal efficiency.

- The delivery of DCS in the Government of Canada is presently decentralized. A survey to gather information on the delivery of DCS across federal departments and agencies was conducted to inform the development of the business case for the WTD initiative. The survey revealed that the majority of federal organizations delivered DCS internally or under an agreement with another organization. Some employed a hybrid model, in which specific components of DCS were out-tasked to the private sector. The most commonly out-tasked services are the Service Desk and Managed Print Services, both of which are mature service offerings entering mainstream adoption, according to 2014 Gartner research. Another IT service entering mainstream adoption is desktop outsourcing, which federal organizations have not widely used to date. Gartner identifies desktop outsourcing as a cost-effective service, but indicates that security, information protection and access control to workstations continue to be concerns.

- DCS management, client management representatives and case study interviewees pointed out that complete outsourcing or in-house delivery of DCS could limit organizational flexibility and control. Most advocated for a hybrid approach, wherein an organization retains some degree of technical capacity and expertise and farms out well-defined DCS components that are easy and cost-effective to out-task (e.g. Service Desk, computer imaging and distribution). SSC’s DCS have contracted out the Service Desk in the National Capital Region (Footnote 16) to the private sector.

- The literature review conducted for the evaluation similarly revealed that strategic out-tasking has emerged as a viable alternative to full outsourcing, as it allows organizations to out-task routine transactions and to focus in-house expertise and management on higher-value transactions related to customer experience and innovation. It also helps avoid many of the outsourcing risks documented in the literature (e.g. vendor failure, reduced business flexibility, loss of control over cost).

- Apart from increased private sector engagement, service delivery could be optimized internally by leveraging technology, standardizing and rationalizing the number and type of business applications and tools used, and understanding the full cost of services. The benefits of internal optimization, such as simplifying support functions, eliminating non-essential activities, or reengineering business processes through streamlined activities and greater automation, are well documented in the literature and can result in significant cost savings.

- Lessons learned from other government transformation initiatives (such as pension and pay transformation) point to the success of a phased approach, whereby services are centralized and modernized, and their full cost is established, before outsourcing is analysed. DCS client and management interviewees agreed that any alternative service delivery for DCS would require a phased approach, given the vast complexity and variability of DCS across government organizations.

Conclusion

- Overall, we found that SSC was making progress in the achievement of DCS outcomes. DCS are largely meeting their technical commitments to provide stable and reliable end-user services to client organizations, whose employees have access to a functioning workspace environment and technical support. The DCS technical team has demonstrated awareness of industry best practices and has implemented a number of initiatives aimed at increasing service efficiency and lowering cost per workstation. Additional opportunities to advance innovation, standardization, automation and service for small client organizations were identified.

- We observed a number of challenges, both intrinsic and external to DCS delivery, that affected service outcomes and client satisfaction. SSC provides DCS to client organizations under SLAs on a cost-recovery basis. However, SLAs were not used to manage business relationships and performance, and there was a gap in the SSC processes and tools used for client communication and demand management. Finally, delays in accessing DCS funds and resourcing issues presented a risk to the achievement of service objectives.

Management Response and Action Plans

Overall Management Response

- As of the reorganization of SSC in April 2015, the delivery of DCS is now led by the Workplace Technology Services (WTS) organization within the Workplace Technology Directorate of the Networks and End User Branch (NEUB). WTS leverages the services of other organizations within SSC (e.g. server hosting, storage, and remote access) to deliver DCS. DCS also leverages horizontal services within the Department, for example IT service management, demand management and financial management.

- The management and operations personnel within the WTS organization were directly responsible for the delivery of DCS at the time of the Evaluation in the Fall of 2014. These personnel were involved with data collection and validation, stakeholder interviews and a review of the analysis and recommendations in the final Evaluation Report. WTS agrees with the Evaluation information and recommendations as presented.

- The three recommendations in the report relate to processes and procedures that apply to all customer-facing services, not just DCS. As such, the owner of each horizontal process led the management response to the corresponding recommendation. WTS, like the service owners of all other customer-facing services, will follow the implementation of the management responses.

- The evaluation report also provides information (but no specific recommendations) applicable only to DCS: “Client satisfaction with the provision of received services and ongoing technical support was mixed, with larger client organizations reporting higher levels of satisfaction and smaller client organizations raising concerns over DCS’ ability to resolve issues in a timely manner. Client management acknowledged that DCS technical staff were professional and dedicated, but identified staffing as an issue”. WTS agrees that there are situations where a shared resource may be prioritized to address a customer need that affects organizations with a larger number of users. In 2015–2016, WTS plans to make minor, incremental improvements to its support technologies and processes (e.g. support tool version upgrades) to reduce the frequency and impact of these situations. However, larger investments in automation and resourcing will not be made to address this situation, because SSC is leading the planning for a multi-year WTD strategy that will transform government-wide DCS. WTS will therefore wait for the WTD strategy to be defined and implemented before making significant investments.

Recommendation 1

The Assistant Deputy Minister, Networks and End User Branch (NEUB), in collaboration with the Senior Assistant Deputy Minister (SADM), Service Management and Data Centres (SMDC) and the SADM, Strategy, should ensure that an efficient and standardized approach to demand management and communication with Distributed Computing Services (DCS) client organizations is established and implemented.

| MANAGEMENT RESPONSE | | |
|---|---|---|
| The Service Delivery Management (SDM) Directorate of the SMDC Branch is the organization responsible for the horizontal activities related to SLAs. SMDC works with the Service Leads and the Account Executive organization within the Strategy Branch to implement SLAs for each partner and client that receives services from SSC. It should be noted that SLAs will be used to define the service level targets for customized services specific to a particular partner or client. Service levels for enterprise services that are shared by partners and clients will be specified in the standard SSC Service Catalog. SMDC agrees with this recommendation. SSC’s enterprise demand management function, which was created April 1, 2015, will evolve to a centralized model with a standardized methodology and a centralized decision-making function. This will be achieved through the development of standard triage and prioritization criteria and the implementation of a Triage Review Committee. Under its Terms of Reference (ToR), this committee would be responsible for reviewing the use of SLAs as a tool to monitor performance and assure accountability for the delivery of SSC services. Terms and conditions of the applicable SLAs for a specific service (including DCS) for a particular partner or client will be reviewed and agreed upon by key stakeholders prior to the initiation of the service. | | |
| MANAGEMENT ACTION PLAN | POSITION RESPONSIBLE | COMPLETION DATE |
| | Director General, SDM, SMDC | March 31, 2015 |
| | Senior Director, WTS, NEUB | On-going |

Recommendation 2

The Assistant Deputy Minister, Networks and End User Branch (NEUB), in collaboration with the Senior Assistant Deputy Minister (SADM), Service Management and Data Centres (SMDC) and the SADM, Strategy, should review the use of Service Level Agreements (SLA) as a tool to monitor performance and assure accountability for Distributed Computing Services (DCS), including ensuring that agreement terms and conditions are agreed upon prior to the initiation of services.

| MANAGEMENT RESPONSE | | |
|---|---|---|
| The Service Delivery Management (SDM) Directorate of the SMDC Branch is the responsible organization for the horizontal activities related to demand management. SMDC is closely supported by the Account Executive organization within the Strategy Branch and the Service Leads in the overall management of the demand for all SSC services. SMDC agrees with this recommendation. SSC’s enterprise demand management function, which was created April 1, 2015, will evolve to a centralized model with a standardized methodology and a centralized decision-making function. This will be achieved through the development of standard triage and prioritization criteria and the implementation of a Triage Review Committee. The achievement of the management action plan tasks below will result in a standardized demand management process that will be consistent across all SSC services, including DCS. | | |
| MANAGEMENT ACTION PLAN | POSITION RESPONSIBLE | COMPLETION DATE |
| | Director General, SDM, SMDC | March 31, 2015 |
| | Senior Director, WTS, NEUB | On-going |

Recommendation 3

The Assistant Deputy Minister, Networks and End User Branch (NEUB), in collaboration with the Senior Assistant Deputy Minister (SADM), Corporate Services (CS) and Chief Financial Officer (CFO), and the SADM, Service Management and Data Centres (SMDC), should improve financial reporting on, and alignment of, Distributed Computing Services (DCS) revenues and expenditures to enable optimal planning, investment and management of DCS resources.

| MANAGEMENT RESPONSE | | |
|---|---|---|
| The Finance Directorate of the CS Branch is the organization responsible for the horizontal activities related to financial reporting. Finance is closely supported by the Service Delivery Management (SDM) Directorate of the SMDC Branch and the Service Leads in creating this financial reporting. CS agrees with this recommendation. Improved financial reporting relies on the proper use of financial coding. Finance is committed to refining existing monitoring processes in order to improve financial coding (e.g. assignment of revenues and costs to the appropriate financial account), reporting, alignment of expenditures to revenues, and planning, investment and management of resources across the department. | | |
| MANAGEMENT ACTION PLAN | POSITION RESPONSIBLE | COMPLETION DATE |
| Through the work performed by Financial Management Advisors (FMA) as part of the monthly Financial Situation Report exercise, financial coding is monitored and recommended adjustments are communicated to delegated managers. These ongoing actions, combined with coaching on the proper use of financial coding, will allow for improved financial reporting. | CFO / Deputy Chief Financial Officer (DCFO), CS | On-going |
| As part of the monthly financial review, the Resource Management organization provides FMAs with timely reports on revenues. These reports include detailed information on agreements by partner/client and on the services provided, as well as the key information required to accurately align expenditures to revenues. | CFO / DCFO, CS | On-going |
| A comprehensive revenue allocation process is being developed and will include instructions for Branches regarding the use of proper coding. | CFO / DCFO, CS | March 31, 2016 |

Annex A: Distributed Computing Services

Annex B: About the Evaluation

Scope and Objectives

This evaluation was carried out in accordance with SSC’s 2014–2017 Risk-based Audit and 2014–2019 Evaluation Plan approved by the President of SSC upon recommendation by the Departmental Audit and Evaluation Committee. It was conducted by the evaluation team of the Office of Audit and Evaluation, under the overall direction of the Chief Audit and Evaluation Executive.

The evaluation team examined DCS delivered by SSC on a cost-recovery basis to a small group of federal organizations, namely SSC, PWGSC, CSPS, Infrastructure Canada and the CHRT.

The evaluation’s objectives were as follows:

- To assess the performance of DCS by examining issues pertaining to service effectiveness, efficiency and economy; and

- To identify factors enhancing or inhibiting the achievement of expected outcomes.

The evaluation did not address the issue of relevance as the Government of Canada has embarked on the government-wide transformation of distributed computing. DCS managed by SSC will be transformed in accordance with the government-wide strategy.

Approach and Methodology

The evaluation was conducted in four phases: planning, examination, reporting, and tabling and approval. A DCS logic model and an evaluation matrix were developed during the planning phase and shared with SSC management for feedback and approval. The logic model outcome statements, together with the evaluation questions and indicators in the evaluation matrix, guided the evaluation. Multiple lines of evidence, drawing on both qualitative and quantitative methods, were used in support of the evaluation objectives. Evidence gathered through primary and secondary research was analyzed to arrive at findings and conclusions. Findings were corroborated by triangulating data across multiple data sources and research methods, described below:

Primary data collection

Document/Literature Review: A document/literature review was conducted to gain an understanding of DCS and their context to assist in the planning phase of the evaluation. A more comprehensive review was subsequently conducted to collect and assess information about these services. Documents reviewed included legislative and policy documents; DCS service agreements and contracts; departmental documents, such as annual Reports on Plans and Priorities and Departmental Performance Reports; and all relevant program documents, such as manuals and procedures, performance reports to clients and others. Research and grey literature, as well as benchmarking studies, were retrieved to provide comparative data and identify best practices. Documents were retrieved from internal, academic and research databases, including Gartner.

Stakeholder Interviews: Interviews were conducted with three key stakeholder groups for DCS: management representatives from client organizations (11 individuals from 5 client organizations); DCS management (N = 10) and client relationship managers (N = 4). Interviews provided information on client satisfaction, achievement of DCS outcomes and areas for improvement.

Data Analysis: Relevant performance and financial data were extracted from SSC databases. Data were analyzed to assess the extent to which DCS were meeting their expected results and were delivered in an efficient and economic manner. Where appropriate, the evaluation team highlighted any limitations associated with extracted data.

Case Studies: A review of the service delivery models used by other federal organizations to deliver DCS was undertaken to provide comparative qualitative and quantitative evidence. Three large federal organizations agreed to participate in the case studies and provide the required information. In total, 12 individuals were interviewed. Case study results were used to confirm best practices found in the literature and to make judgments about ways to maximize effectiveness, efficiency and economy in the delivery of these types of services.

Secondary data collection

The evaluation team leveraged existing data and reports to facilitate data collection and reduce duplication. These included DCS reporting, such as Service Desk post-survey client satisfaction results, Service Desk and IT performance reports to clients, SSC IT Operations Metrics Dashboard, as well as activity-based costing data calculated for each client. Additionally, the evaluation team used data from the Current State Assessment Questionnaire, which was distributed to federal organizations to gather data on their DCS device distribution, support service volumetrics, resource levels and managed service contracts. The data from the questionnaire were used as input to the updated business case for the Workplace Technology Devices initiative.

Limitations and Mitigation Strategies

As this was the first evaluation conducted at SSC, there was limited organizational awareness of evaluation requirements and processes. To accommodate this, the evaluation team built in extra time for consultations and undertook broad engagement with all SSC Branches.

While the methodology used for this evaluation had a number of strengths in terms of the breadth of collected evidence and quality assurance measures, it was subject to several limitations. These limitations, and the mitigation strategies put in place to ensure confidence in the evaluation findings and conclusions, are presented in the table below.

| Limitation | Mitigation Approach |
|---|---|
| DCS are connected with other IT infrastructure services provided by SSC, which made it difficult, at times, to attribute any successes or issues to DCS exclusively. | The evaluation team sought to distinguish DCS-specific issues by providing interviewees with clear definitions and examples of DCS and, when required, following up and requesting clarification of interviewees’ general references to SSC’s work. |
| The variability of DCS clients in terms of their size, maturity of IT management, organizational needs and history of their relationships with SSC made generalization of findings more difficult. | The evaluation team engaged all five client organizations directly to understand and document unique client context and profiles. Data were stratified by client. If specific issues affected a certain group of clients (e.g. large clients or clients with a small number of employees), this was referenced in the report. |