Evaluation of the Management Accountability Framework

The evaluation of the Management Accountability Framework (MAF) program is part of the Treasury Board of Canada Secretariat’s (TBS) five‑year evaluation plan. The Internal Audit and Evaluation Bureau carried out the evaluation between and , with the assistance of Goss Gilroy Inc. The evaluation assessed the relevance and performance of three MAF cycles from fiscal year 2014 to 2015 to fiscal year 2016 to 2017. The new MAF assessment approach (MAF 2.0) was implemented in 2013.

On this page

- Results at a glance

- Program background

- Evaluation context and scope

- Limitations of the evaluation

- Relevance

- Performance

- Design and delivery

- Efficiency

- Recommendations

- Management Response and Action Plan

- Appendix A: Management Accountability Framework (MAF) logic model

- Appendix B: Management Accountability Framework (MAF) evaluation methodology

- Appendix C: Jurisdictional review

Results at a glance

Relevance: The evaluation found that TBS should continue the annual MAF assessment of policy compliance and management performance of selected federal organizations. The government-wide information obtained through MAF is important for helping TBS understand strengths and gaps in compliance with Treasury Board policy instruments. However, the perceived relevance and usefulness of MAF vary by audience, with some organizations and deputy heads finding limitations in the degree to which MAF can inform organizational management practices. As well, some respondents raised questions about the role of MAF in relation to the many other federal oversight instruments.

Achievement of outcomes: The evaluation found that MAF is achieving its immediate outcomes by providing information to TBS and organizations about policy implementation through a process that involves consultation and discussion. Although the MAF process itself is transparent and results are available to federal employees, the context of open government suggests a need to increase public access to government‑wide results. The annual assessment of deputy head management performance continues to use MAF results as one input, but its influence is perceived to be declining. There is some evidence of management improvements in the elements assessed by MAF over the three‑year cycle, indicating that MAF does stimulate actions to address identified weaknesses or gaps. However, organizations and deputy heads view MAF as useful primarily for raising the profile of management functions, issues and potential risks facing the organization. Management excellence and decision-making depend on many factors, including the relevance of the organizational risks raised by MAF and the availability of resources to address them.

Design and delivery: The changes instituted under MAF 2.0 are well regarded, and no need for an overhaul of the approach has been identified. New features such as the use of comparators and notable practices have the potential to provide good value for organizations. Although a complete assessment of the validity and reliability of the MAF area of management (AoM) methodologies was beyond the scope of the evaluation, the feedback suggests that there is room for improvement in the rigour and strategic value of the MAF methodology questions. As well, the functionality of the MAF portal, the limited reporting capabilities and the time lags between the MAF reporting period and the publication of results are key challenges. From the perspective of departments and agencies, organizational context requires greater consideration in framing results.

Efficiency: Although some organizations have noted a reduction in the reporting burden associated with MAF 2.0, the impact on efficiency has been limited for TBS.

Program background


MAF was introduced in 2003 to clarify management expectations and strengthen deputy head accountabilities. The original purposes of MAF were to:

- clarify management expectations for deputy heads to support them in managing their own organizations

- develop a comprehensive and integrated perspective on management issues and challenges and guide TBS engagement with organizations

- determine enterprise-wide trends and systemic issues to set priorities and focus efforts to address them.

In early cycles of MAF, organizations were assessed in 41 AoMs. In later cycles, that number was reduced to 21. The assessment used a mix of qualitative and quantitative evidence and responses with associated rating criteria and definitions to derive an overall rating by AoM on a four‑point scale (strong, acceptable, opportunity for improvement, or attention required).

In fiscal year 2013 to 2014, in response to the 2009 evaluation and the 2013 review of MAF, the framework was updated substantially (MAF 2.0) to improve its usefulness for decision-making and its value in relation to cost. The renewed MAF was designed to be more data driven and to focus more on performance. Rather than using ratings, MAF 2.0 presents results within a comparative context, which allows benchmarking across federal organizations. The renewed MAF aims to reduce the reporting burden and to increase the predictability and stability of the process within a three‑year cycle by reducing the number of questions and by using central systems for some data points (see Figure 1).

Management Accountability Framework (MAF) 2.0 - Text version

- Leadership and Strategic Direction

- Articulates and embodies the vision, mandate and strategic priorities that guide the organization while supporting Ministers and Parliament in serving the public interest.

- Results and Accountability

- Uses performance results to ensure accountability and drive ongoing improvements and efficiencies to policies, programs, and services to Canadians.

- Public Sector Values

- Exemplifies the core values of the public sector by having respect for people and democracy, serving with integrity and demonstrating stewardship and excellence.

- Continuous Learning and Innovation

- Manages through continuous innovation and transformation, to promote organizational learning and improve performance.

- Governance and Strategic Management

- Maintains effective governance that integrates and aligns priorities, plans, accountabilities and risk management to ensure that internal management functions support and enable high performing policies, programs and services.

- People Management

- Optimizes the workforce and the work environment to enable high productivity and performance, effective use of human resources and increased employee engagement.

- Financial and Asset Management

- Provides an effective and sustainable financial management function founded on sound internal controls, timely and reliable reporting, and fairness and transparency in the management of assets and acquired services.

- Information Management

- Safeguards and manages information and systems as a public trust and a strategic asset that supports effective decision-making and efficient operations to maximize value in the service of Canadians.

- Management of Policy and Programs

- Designs and manages policies and programs to ensure value for money in achieving results.

- Management of Service Delivery

- Delivers client-centred services while optimizing partnerships and technology to meet the needs of stakeholders.

The objectives of the annual MAF 2.0 assessment are to:

- obtain an organizational and government‑wide view of the state of management practices and performance

- communicate and track progress on government‑wide management priorities

- continuously improve management capabilities, effectiveness and efficiency

- provide input to deputy head performance assessments

Under MAF 2.0, seven AoMs are assessed according to three themes (see Table 1), with core AoMs being assessed for most Government of Canada organizations and department-specific AoMs being assessed for a subset of organizations.

| AoM type | Theme | AoM |
|---|---|---|
| Core AoMs | Management practices: based on policy expectations (for example, existence of a governance structure, plan, framework, reporting against a plan) | Financial management |
| | | People management |
| | | Information management and information technology (IM/IT) |
| | Management performance: outcome and output measures; service delivery measures | Management of integrated risk, planning and performance |
| Department-specific AoMs | Management milestones: progress against expectations (for example, phases for implementation of the Common Human Resources Business Process) | Management of acquired services and assets |
| | | Service management |
| | | Security management |

In total, 37 large departments and agencies are assessed annually on the four core AoMs and on a subset of department‑specific AoMs; 23 small departments and agencies are assessed on a subset of indicators for the core AoMs.

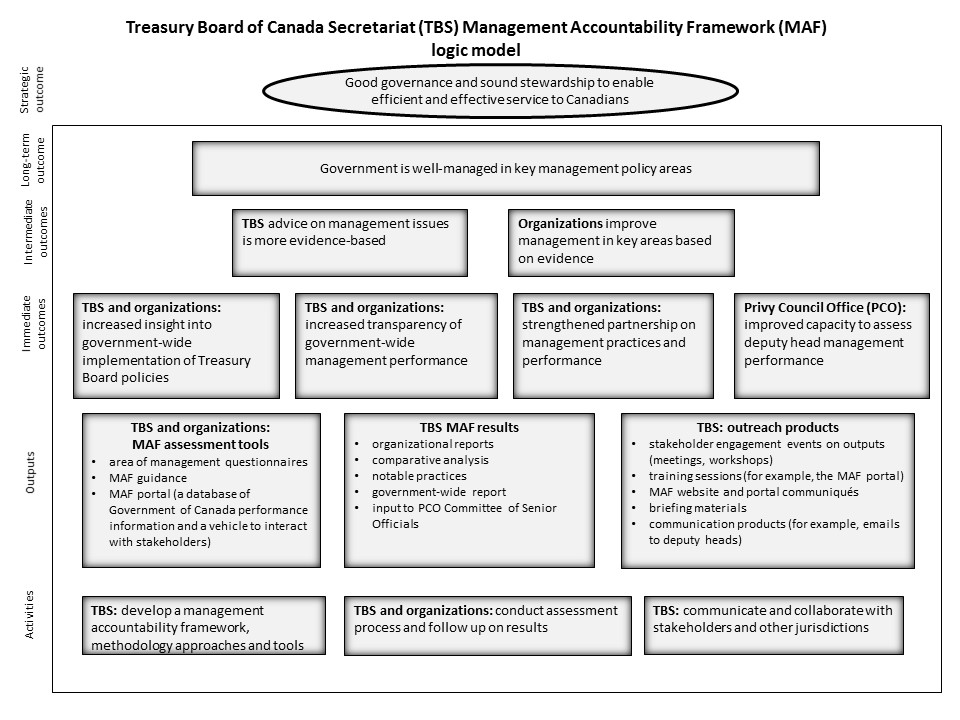

Expected outcomes

The expected outcomes of the MAF program, as outlined in its logic model (see Appendix A), are as follows.

Immediate outcomes

- TBS and organizations have increased insight into government-wide implementation of Treasury Board policies

- There is increased transparency of government‑wide management performance for TBS and organizations

- TBS and organizations have a strengthened partnership on management practices and performance

- The Privy Council Office (PCO) has improved capacity to assess deputy head management performance

Intermediate outcomes

- TBS advice on management issues is more evidence‑based

- Organizations improve management in key areas based on evidence

Long-term outcomes

- Government is well-managed in key management policy areas

Evaluation context and scope

The evaluation approach is consistent with the 2016 Treasury Board Policy on Results. The evaluation looked at three primary areas: relevance, performance (effectiveness and efficiency), and design and delivery.

The evaluation was based on five lines of evidence:

- a literature review

- a document review

- key informant interviews

- an online survey of representatives of departments

- case studies

See Appendix B for a more detailed description of the MAF evaluation methodology and an outline of the approach to the analysis of qualitative data.

The assessment of relevance determined the extent to which MAF meets an ongoing need for information on policy compliance and on management performance and practices. The assessment of performance examined the extent to which the program outcomes were achieved, as well as the efficiency of MAF 2.0.

The evaluation of design and delivery included an assessment of the overall MAF from the user perspective and was based on a survey of representatives from functional communities in departments and agencies as well as interviews with TBS stakeholders (see Appendix B). The evaluation did not include an analysis of individual AoM performance measures. It also did not examine MAF processes internal to departments and agencies.

Limitations of the evaluation

Although much of the literature looks at particular performance systems, initiatives or issues, comparative studies were not sufficiently granular or systematic to provide a detailed comparison with MAF. The literature review nonetheless contributed significantly to the evaluation by providing a broader perspective from which to view MAF.

A second limitation of the evaluation is that it obtained input from only nine deputy heads. Although this input provided valuable insight, it should not necessarily be considered representative of all deputy heads.

Relevance


Conclusion

The evaluation found that there continues to be a need for MAF, although its perceived relevance and usefulness vary among users of MAF results and are affected by the presence of other oversight instruments, such as internal audits and evaluations.

Continued need for MAF

Literature and experts confirm the importance of management performance exercises such as MAF to support public sector accountability and organizational management. The literature review found that many jurisdictions undertake regular assessments of management performance, although the specific approaches to these assessments are diverse. See Appendix C for a description of some approaches used in other jurisdictions. In comparative reviews of performance assessments, MAF has been found to be unique internationally, and Canada is generally seen as a strong performer in terms of commitment to measuring and managing performance.

Like many countries, Canada has a multi‑faceted performance system. MAF and other tools such as audits and evaluations form a broader suite of oversight and reporting instruments that meet the needs of different audiences. The extent to which these tools are integrated and whether they are duplicative or work at cross purposes are important questions. As observed by respondents, unlike audits and evaluations, MAF gathers information on performance in AoMs at the organizational level through standardized, government‑wide measures that are assessed annually.

However, according to representatives from some departments and agencies, in some cases MAF merely confirms information that users already know through other sources, which indicates that there may be overlap. Because of the new measures relating to internal services in the Treasury Board policy instruments on results, a few respondents raised the ongoing need for MAF to specifically assess these measures.

The relevance of MAF varies depending on the user of the results.

Departments and agencies: The evaluation found that the relevance of MAF for deputy heads was not clear, especially for small organizations. Consistently, however, these senior managers were reluctant to say that MAF should be abandoned. According to deputy heads, MAF functions as a “health check” by drawing attention to back‑office systems that are fundamental but that often have a low profile (for example, financial or IM/IT systems). For functional communities, MAF can raise the profile of issues, particularly when compared with government-wide benchmarks. MAF is just one source of organization-level information among many competing sources of information and other drivers of organizational priorities. Weaknesses identified by MAF may result from an under-resourced function, but identifying weaknesses does not necessarily trigger additional resources to address them.

TBS: MAF is highly relevant among policy centres because it is their main source of information related to policy implementation and compliance for AoMs.

Although program sectors contribute to the analysis of MAF results by outlining each department’s contextual environment, they rarely use MAF results to inform their work.

PCO: MAF is one of a number of inputs into Committee of Senior Officials (COSO) deliberations on deputy head management performance, and there is no alternative source of similar data at the organization level. However, according to key informant interviews, MAF’s influence on COSO discussions (Footnote 1) appears to be declining.

Performance

Achievement of immediate outcomes

Conclusions

The evaluation found that MAF is achieving its immediate outcomes. It provides both TBS and organizations with information about policy implementation. In addition, the MAF process stimulates consultation and discussion among TBS and organizations.

MAF results are available to federal employees; however, the evaluation did not examine the extent to which employees know this. MAF results remain an input for the assessment of deputy head management performance, but the influence of the results may be declining.

Immediate outcome 1: TBS and organizations have increased insight into government‑wide implementation of Treasury Board policies

The evidence shows that both TBS and organizations have increased insight into the implementation of policy requirements that are assessed by MAF, even though MAF 2.0 moved away from a compliance focus. Overall, 22% of MAF questions for fiscal year 2015 to 2016 examined policy compliance (ranging from 6% for the service AoM to 40% for the acquired services and assets AoM). Representatives from TBS policy centres indicated that MAF is their primary source of information about the implementation of Treasury Board policies in organizations, including information about gaps or challenges relating to policy requirements.

A limitation of MAF as a measure of policy compliance and performance is that the meaningfulness of the results depends on the accuracy and comprehensiveness of the organizational context provided. Organizational capacity and resources, as well as events outside the organization’s control (for example, extreme weather or international crises that require a federal response), can place additional or unexpected demands that affect performance.

For organizations, MAF appears to provide some insight into their own policy compliance, although senior managers are often already aware of issues identified. Moreover, the level of risk associated with weak compliance with some policy requirements is not necessarily considered to be high in light of the departmental context (business priorities, resources).

Functional communities rate the validity and reliability of the MAF assessment of policy compliance more positively than the assessment of performance and overall health of their AoM. These results are discussed in more detail in the design and delivery section. The case studies also found that MAF helps many organizations understand their compliance with Treasury Board policies and the state of implementation of those policies and confirms known areas of strength or weakness in their organization.

Immediate outcome 2: There is increased transparency of government-wide management performance for TBS and organizations

From the perspective of departments and agencies, the MAF process is transparent: 66% of functional community representatives surveyed agreed that MAF assesses the management performance of their organization transparently. Transparency is supported through open and frequent communications between TBS and departments about MAF methodology, as well as feedback on draft departmental results.

Organization-level MAF results are not posted publicly, but department- and government-wide MAF results are available through the MAF portal to any public servant who has a myKey (access credentials). Public servants’ awareness of this access has not been evaluated.

Although MAF results are appropriately transparent to individuals in government, most of the individuals consulted for the evaluation felt that sharing the government‑wide MAF report with the general public would be appropriate and consistent with the spirit of open government (Footnote 2).

There was less consensus on the sharing of departmental results publicly, because the complexity of contextual factors surrounding the data makes it difficult to communicate the data clearly. In addition, there is greater potential for blaming and shaming, which is counterproductive and which could undermine learning and lead to more opaque reporting and gaming of the data supplied.

Immediate outcome 3: TBS and organizations have a strengthened partnership on management practices and performance

Key informant interviews and case studies indicate that the level of consultation between TBS and organizations varies by AoM, but that consultation typically occurs at many stages during the MAF process. These consultations include:

- methodology development

- clarification of questions

- indicators and supporting evidence

- draft results

- organizational context

Departments and agencies interviewed for the case studies described their interactions with TBS as collaborative and responsive.

The level of consultation varies by AoM and may not be sufficiently inclusive of departmental and agency representatives. For instance, 47% of survey respondents from functional communities agreed that the collaboration between their functional community and TBS was strengthened through the MAF process; 36% neither agreed nor disagreed; and 17% disagreed.

Therefore, despite some favourable findings from qualitative lines of evidence, there are nevertheless opportunities for improvement (for example, the degree of consultation on and support for certain AoMs).

Immediate outcome 4: The PCO has improved capacity to assess deputy head management performance

According to documentation and interviews, MAF continues to play a role in annual COSO deliberations on deputy head management performance. MAF is one of several inputs, including:

- 360‑degree reviews

- interviews with ministers

- self‑evaluations

- information from the Canadian Human Rights Commission and the Office of the Commissioner of Official Languages

- the achievement of mandate commitments

The evaluation found a general perception from case studies and interviews that the influence of MAF on deputy head management performance may be declining. There are several reasons for this, including:

- benchmarking does not differentiate between strong and weak performers as clearly as the previous iteration of MAF

- other inputs are available and are used to assess deputy head performance

- management strength has increased overall

Achievement of intermediate outcomes

Conclusions

The evaluation found that TBS advice on management practices is more evidence-based, although the usefulness of that advice depends on a comprehensive understanding of the organizational context, which was an area of concern for departments and agencies.

The evaluation also found that, to some extent, organizations are improving management in key areas based on evidence, which TBS respondents also observed. However, most deputy heads interviewed do not see MAF as a tool for influencing management excellence. Both experts and deputy heads who were interviewed believe that MAF is primarily useful for raising the profile of management issues and potential risks facing their organizations.

Intermediate outcome 1: TBS advice on management issues is more evidence-based

According to respondents in TBS policy centres, MAF results are used to identify AoM risk areas and notable practices. Several respondents provided concrete examples of how MAF results informed revisions to their policy guidance, as well as improvements to their policy advice role (for example, implementing improved tracking mechanisms, setting reporting standards).

Most respondents to the functional community survey agreed that the MAF assessment leads to actionable results (64%) and that the government‑wide report helps their community benchmark its practices (59%).

Organizations included in the case studies indicated that the MAF results and associated advice from TBS would be more meaningful if the MAF evidence were interpreted more clearly through the specific departmental or agency context (in other words, using contextual information to explain the meaning and relevance of MAF results to the organization). As well, a few organizations suggested that they would appreciate more direction from TBS on which notable practices would apply to them and offer the greatest impact. This finding is supported by the survey of functional communities, in which only 56% of respondents agreed that they use MAF notable practices.

Intermediate outcome 2: Organizations improve management in key areas based on evidence

Long-term outcome: Government is well-managed in key management policy areas (Footnote 3)

A challenge for the evaluation was determining the impact of MAF on management practices, given the complexity of management adaptations and improvements and the multiple influences on them, including:

- MAF and similar mechanisms

- ministerial mandate letters

- input from evaluations and audits

- the ongoing development of senior leaders’ managerial skills

As the literature suggests, a realistic expectation is that the impact of such information will often be indirect rather than tied to specific decisions. Similarly, most of the experts interviewed for the evaluation did not expect that MAF would, on its own, lead to management excellence. Rather, most saw MAF as a tool for raising the profile of management risks.

Nevertheless, MAF results provide some evidence of improvements in policy compliance and management performance. For example, analysis of the first two years of government-wide results under MAF 2.0 indicates improvements in all AoMs except integrated risk, planning and performance, for which indicators have not been tracked over time because of the introduction of the 2016 Treasury Board Policy on Results.

Examples of improvements:

- implementation of a risk-based, ongoing monitoring program (financial management)

- improvements in meeting the requirements of the Directive on Performance Management (people management)

- maturity of recordkeeping in compliance with the Directive on Recordkeeping (IM/IT)

Evidence of management improvements as a result of MAF was also reported at the level of functional communities (through the survey and through case studies).

According to the survey of functional communities, 79% of respondents said their organization takes MAF results seriously, and the same proportion said they implement an action plan in response to MAF results. Of those with a formal action plan, 83% of respondents said they formally monitor progress. These practices were confirmed in the case studies; departments and agencies often prepare action plans or dashboards based on the MAF results, although there were fewer examples where resources were attached to those action plans. Functional community representatives noted a number of examples of MAF’s impact:

- increased awareness of Treasury Board policy requirements and management issues

- accelerated plans and prioritized actions to address weaknesses

- produced or updated management frameworks and plans

- implemented capacity‑building initiatives for staff or resourcing

- adopted new management practices

- increased diligence or accuracy in reporting

Although MAF was viewed as helping identify areas of risk or areas where management could be improved, most deputy heads did not see it as the primary driver for management excellence or decision-making on their mandate priorities (see Figure 2).

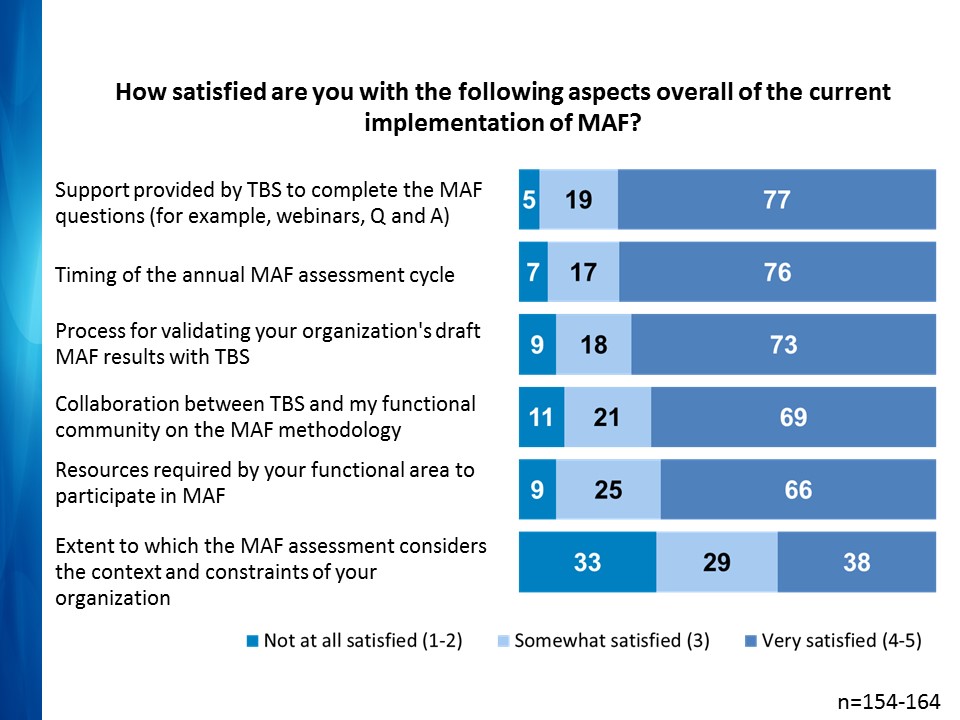

Figure 2 - Text version

The figure is a bar graph of the responses given by functional communities to a survey about six aspects of MAF. The main question is “How satisfied are you with the following aspects overall of the current implementation of the MAF?”

| Aspect | Not at all satisfied | Somewhat satisfied | Very satisfied |
|---|---|---|---|
| Support provided by TBS to complete the MAF questions (for example, webinars, Q and A) | 5% | 19% | 77% |
| Timing of the annual MAF assessment cycle | 7% | 17% | 76% |
| Process for validating your organization’s draft MAF results with TBS | 9% | 18% | 73% |
| Collaboration between TBS and my functional community on the MAF methodology | 11% | 21% | 69% |
| Resources required by your functional area to participate in MAF | 9% | 25% | 66% |
| Extent to which the MAF assessment considers the context and constraints of your organization | 33% | 29% | 38% |

The number of respondents for each of the six questions varies between 154 and 164.

Source: Evaluation of MAF, Survey of functional communities, 2017

Some case study respondents and a few deputy heads mentioned that their organizations lack the resources to address some of the gaps identified by MAF. As well, a few deputy heads mentioned that the issues raised by MAF may not directly contribute to the achievement of their organization’s business objectives. In fact, a few deputy heads were concerned that MAF has the potential to stifle innovation or to overload the organization with multiple priorities, diverting management attention away from departmental objectives that are not measured by the annual MAF assessment.

Design and delivery

Conclusion

The MAF process has improved since the implementation of MAF 2.0, with well-regarded new features such as the comparative tables and notable practices. However, key challenges include:

- the functionality of the MAF portal

- the time lags between the MAF reporting period and the publication of results

From the perspective of departments and agencies, organizational context requires greater consideration in framing results.

Although MAF is moving in the right direction, evidence gathered in the evaluation suggests that the validity and reliability of the MAF AoM methodologies require attention to address weaknesses and to better meet the needs of MAF audiences for strategic information.

Extent to which MAF 2.0 operational aspects are meeting needs

Overall, stakeholders are satisfied with the implementation of MAF. Roles and responsibilities relating to MAF are clear, although some improvements were suggested for communications (for example, directing communications to relevant target audiences).

A benefit of MAF 2.0 has been the introduction of comparators. Departments and agencies find benefit in assessing their performance over time and in comparing themselves to similar organizations. They would like the comparators to include more homogeneous clusters of organizations so that the results are more comparable. With respect to notable practices, departments and agencies indicated that they would like to:

- be more aware of these notable practices

- have more access to them

- have more frequent exchanges about them

The literature suggests that a direction taken by mature systems to assess management performance includes increased reliance on dashboards, visual display, and cascading data for different users.

Three challenges were commonly noted with MAF:

- The functionality of the MAF portal has limitations (for example, technical issues, poor reliability, responsiveness, reporting options). These limitations have introduced inefficiencies in TBS work and constrained options for organizing and presenting MAF results.

- The timing of the MAF cycle is driven by the timing of COSO deliberations and is not optimal for TBS. Departments and agencies also pointed out that, for some AoMs, MAF results are reported a year after the reporting period, which constrains their ability to institute and record improvements until the following MAF cycle.

- There is insufficient integration of departmental context into MAF. Across a variety of aspects of the implementation of MAF, surveyed representatives of functional communities rated recognition of departmental context as the weakest aspect of MAF. This was also noted in interviews and case studies, during which participants indicated that the MAF portal and the subsequent interpretation of results and advice do not sufficiently consider departmental context.

Validity and reliability of MAF methodology

Fundamental to any performance assessment is a determination of whether the right indicators were chosen and whether they provide a balanced and meaningful view of performance. Overall, representatives from TBS and from departments and agencies see the MAF 2.0 approach and methodology as a step in the right direction (compared with the previous iteration of MAF). No significant issues were identified with the current AoMs, although some suggested that there would be value in reintroducing questions related to integrated risk management into the integrated risk, planning and performance AoM. Interest was also expressed in moving department-specific AoMs to core AoMs, most frequently with respect to security management.

Policy centres at TBS develop the methodology for their AoM. The process for developing the indicators is not systematic across policy centres, but it is quite robust for some AoMs (for example, financial management) because it includes internal brainstorming and structured, inclusive consultations with representatives of their functional communities. The methodology development process is hampered somewhat by a lack of opportunity to test some measures with departments and agencies before the cycle begins, as well as by restrictions in the MAF portal on the way in which questions may be posed.

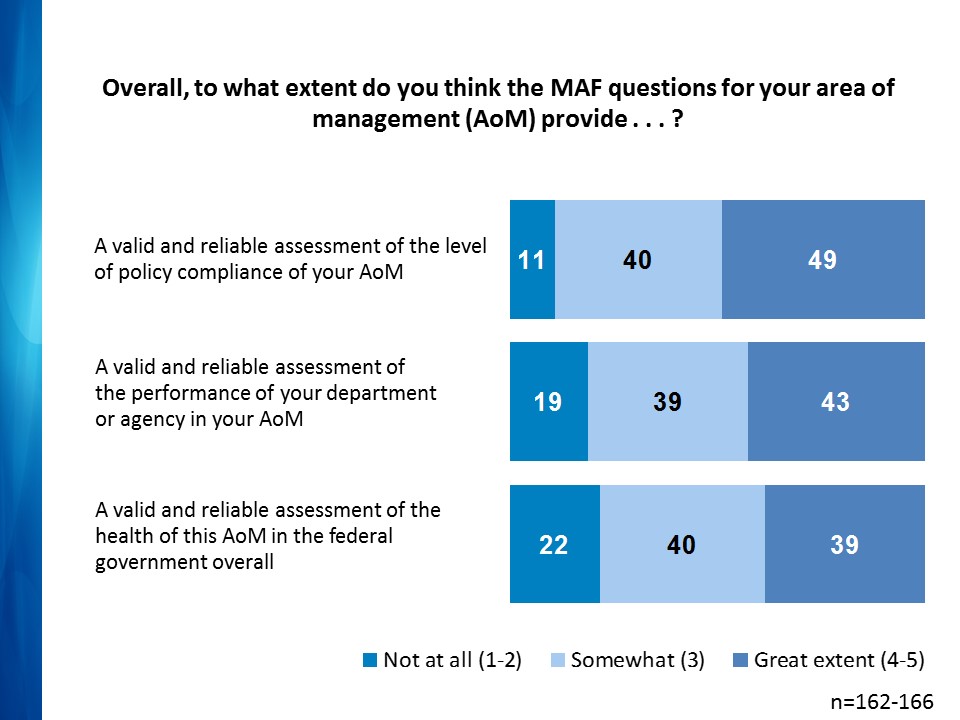

Although an assessment of the detailed performance measures for each AoM was beyond the scope of the evaluation, feedback from various stakeholders suggests that the MAF AoM methodologies are stronger in measuring policy compliance than in measuring performance (see Figure 3).

Figure 3: Results for the question about the validity and reliability of MAF questions

Figure 3 - Text version

The figure is a bar graph showing how functional communities rated 3 statements about MAF questions.

The main question is “Overall, to what extent do you think the MAF questions for your area of management (AoM) provide…?”

| Statement | Not at all | Somewhat | A great extent |
|---|---|---|---|
| “A valid and reliable assessment of the level of policy compliance of your AoM.” | 11 per cent | 40 per cent | 49 per cent |
| “A valid and reliable assessment of the performance of your department or agency in your AoM.” | 19 per cent | 39 per cent | 43 per cent |
| “A valid and reliable assessment of the health of this AoM in the federal government overall.” | 22 per cent | 40 per cent | 39 per cent |

The number of respondents for each of the three questions varies between 162 and 166.

Source: Evaluation of MAF, Survey of functional communities, 2017

A common theme among respondents was a perceived disconnect between the MAF AoM methodology questions and an understanding of the quality or health of the function. This disconnect was evidenced by occasional false positives, in which MAF results contradict other sources of evidence that highlight problems in a particular AoM. As Figure 3 shows, fewer than half of respondents felt that MAF questions provide, to a great extent, a valid and reliable assessment of their particular AoM.

Other specific feedback from functional communities suggests a variety of limitations and gaps in some of the AoM methodologies. Common themes included:

- lack of clarity in some questions (definitions, terminology), with 39% of functional community representatives surveyed disagreeing that the MAF questions for their AoM “are likely to be interpreted the same way by functional areas across government”

- lack of stability in the methodology for some AoMs, which compromises the ability to track changes over time

- binary responses, which do not allow departments and agencies to document improvements

- horizontal accountability areas not measured well

- absence of clear, realistic benchmarks or thresholds for some questions

Efficiency

Conclusion

The perceived effect of MAF 2.0 on efficiency is mixed for departments and agencies and has not been significant for TBS stakeholders. There is a continued desire for a lighter process to increase MAF’s return on investment.

Efficiency of the implementation of MAF 2.0

A key objective of MAF 2.0 was to reduce the burden on departments and agencies associated with the preparation of responses to MAF questions. This reduction was accomplished through a data-driven approach that used central sources where feasible and through an overall reduction in the number of questions. In fiscal year 2009 to 2010, MAF contained 685 questions; in the 2015 to 2016 cycle, it contained 199 questions, 40% of which could be answered through central sources.

Despite the reduction in the number of questions, the perceived effect on efficiency for departments and agencies is mixed. The efficiency impact of MAF 2.0 for TBS (MAF Division, policy centres, program sectors) has not been significant. Almost half of the functional community representatives surveyed indicated that MAF 2.0 had no impact on the amount of resources required to complete the questionnaire compared with the previous MAF, while 40% indicated that fewer resources are now required.

The modest impact on efficiency appears to stem from a number of factors, such as:

- increasingly complex calculations required for MAF 2.0

- adjustments or new questions from year to year

- continued requirement for leadership time for MAF briefings and approvals

It was not within the scope of the evaluation to examine the internal MAF processes of departments and agencies, but those processes may also affect the reporting burden.

Across the various lines of evidence, there are mixed views as to whether MAF is a worthwhile investment, despite the conclusion that there continues to be a need for it. Most functional community representatives indicated that MAF is a worthwhile investment to at least some degree. This view was echoed by many, but not all, case study departments and agencies. The evaluation evidence suggests that although MAF is useful, it has limitations. When weighed against the resource requirements, these limitations undermine the return on investment. Many involved in MAF, including deputy heads, would like to see a more efficient process, for example through streamlined AoM methodologies, to increase the overall return on investment.

Recommendations

Recommendation 1

It is recommended that TBS increase MAF’s relevance and usefulness by better understanding its audience and by targeting MAF to meet the diverse needs of TBS and organizations.

Recommendation 2

It is recommended that TBS work with functional communities and subject matter experts to identify and implement processes that strengthen the validity and reliability of AoM methodologies, while continuing efforts to streamline them.

Recommendation 3

It is recommended that TBS increase the efficiency and effectiveness of the MAF process by:

- improving the MAF portal and its reporting capabilities

- engaging in discussions with appropriate stakeholders to shorten the turnaround time between reporting and distribution of results

Recommendation 4

It is recommended that TBS better reflect departmental and agency contexts in MAF results and in its advice to increase the value and meaning of the results to organizations.

Management Response and Action Plan

The Management Accountability Framework Division (MAFD) has reviewed the evaluation and agrees with the recommendations. Proposed actions to address the recommendations of the report are outlined below.

Note: Given the timing of the evaluation results, some actions will be taken during the upcoming cycle (2017 to 2018) while others will be finalized in the 2018 to 2019 cycle.

Recommendation 1: It is recommended that the Treasury Board of Canada Secretariat (TBS) increase MAF’s relevance and usefulness by better understanding its audience and by targeting MAF to meet the diverse needs of TBS and organizations.

| Proposed action | Start date | Targeted completion date | Office of primary interest |
|---|---|---|---|
| | | | MAFD |
| | 2017 to 2018 MAF cycle | 2017 to 2018 MAF cycle | Policy centres |
| | 2017 to 2018 MAF cycle | 2017 to 2018 MAF cycle | Policy centres |

Recommendation 2: It is recommended that TBS work with functional communities and subject matter experts to identify and implement processes that strengthen the validity and reliability of area of management (AoM) methodologies, while continuing efforts to streamline them.

| Proposed action | Start date | Targeted completion date | Office of primary interest |
|---|---|---|---|
| | | | MAFD, Policy centres |
| | | | MAFD, Policy centres |
| | | 7 | MAFD |

Recommendation 3: It is recommended that TBS increase the efficiency and effectiveness of the MAF process by:

- improving the MAF portal and its reporting capabilities

- engaging in discussions with appropriate stakeholders to shorten the turnaround time between reporting and distribution of results

| Proposed action | Start date | Targeted completion date | Office of primary interest |
|---|---|---|---|
| | | | MAFD, IMTD |
| | | | MAFD, Policy centres |
| | | | MAFD |

Recommendation 4: It is recommended that TBS better reflect departmental and agency contexts in MAF results and in its advice to increase the value and meaning of results to organizations.

| Proposed action | Start date | Targeted completion date | Office of primary interest |
|---|---|---|---|
| | | | MAFD, Policy centres, Program sectors |

Appendix A: Management Accountability Framework (MAF) logic model

Figure 4 - Text version

The main components of the MAF logic model are:

- activities

- outputs

- immediate outcomes

- intermediate outcomes

- long-term outcome

- strategic outcome

The activities are:

- TBS develops a management accountability framework, methodology approaches and tools

- TBS and organizations conduct an assessment process and follow up on results

- TBS communicates and collaborates with stakeholders and other jurisdictions

The outputs are:

- MAF assessment tools for TBS and organizations, such as:
  - area of management questionnaires
  - MAF guidance
  - MAF portal, which is a database of Government of Canada performance information and a vehicle to interact with stakeholders

- TBS MAF results, which include:
  - organizational reports
  - comparative analysis
  - notable practices
  - a government-wide report
  - input to the Privy Council Office Committee of Senior Officials

- TBS outreach products, which include:
  - stakeholder engagement events on outputs (for example, meetings and workshops)
  - training sessions (for example, on the MAF portal)
  - MAF website and portal communiqués
  - briefing materials
  - communication products (for example, emails to deputy heads)

The immediate outcomes for TBS and organizations are:

- increased insight into government-wide implementation of Treasury Board policies

- increased transparency of government-wide management performance

- strengthened partnership on management practices and performance

The immediate outcome for the Privy Council Office is:

- improved capacity to assess deputy head management performance

The intermediate outcomes are:

- TBS advice on management issues is more evidence-based

- organizations improve management in key areas based on evidence

The long-term outcome is that the government is well-managed in key management policy areas.

The strategic outcome is good governance and sound stewardship to enable efficient and effective service to Canadians.

Appendix B: Management Accountability Framework (MAF) evaluation methodology

The evaluation was based on the following 5 lines of evidence and looked at 3 primary issues: relevance, performance (effectiveness and efficiency), and design and delivery.

1. Literature review

A review of Canadian and international literature on performance measurement systems for management purposes was commissioned from Dr. Evert Lindquist. The purpose of the literature review was to:

- identify the best practices in accountability for government sector management in other jurisdictions

- assess, if possible, which countries have been the most effective with their systems

- assess whether the MAF is useful for Canada’s federal system of government

2. Document review

The following documents were reviewed:

- descriptive program information

- MAF AoM methodologies and assessments completed during the evaluation period

- other internal strategic, operational planning and evaluation documents

3. Key informant interviews

In total, 35 individual or group informant interviews were conducted to address relevance and performance issues. All relevant key stakeholder perspectives were considered in the key informant interview analysis to obtain a variety of views on program performance.

Distribution of interviews by respondent category:

- deputy heads (n=9)

- experts (n=6)

- PCO (n=1)

- TBS, including the MAF Division, policy centres, program sectors (n=19)

Within each respondent group, responses were analyzed qualitatively, and the following qualifiers were assigned to convey the prevalence of interviewees’ views:

- “a few” for <25% of respondents

- “some” for 25% to 45%

- “about half” for 45% to 55%

- “many” for 55% to 75%

- “most” for >75%
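The qualifier scale above amounts to a simple lookup from the share of respondents in a group to a word. The sketch below is only an illustration, not the tool used by the evaluation team. The function names are hypothetical. Because the published ranges meet at 45% and 55%, the sketch assumes that a value exactly on either of those boundaries belongs to the higher band. The 25% and 75% boundaries follow the < and > signs in the list.

```python
def prevalence_qualifier(share: float) -> str:
    """Return the report's qualifier for the percentage of a group holding a view.

    Assumption (not stated in the report): a share of exactly 45% or 55%
    is placed in the higher band; 25% and 75% follow the list's < and > signs.
    """
    if not 0 <= share <= 100:
        raise ValueError("share must be a percentage between 0 and 100")
    if share < 25:
        return "a few"
    if share < 45:
        return "some"
    if share < 55:
        return "about half"
    if share <= 75:  # "most" applies only above 75%
        return "many"
    return "most"


def qualifier_for_count(count: int, group_size: int) -> str:
    """Convert a raw count within a respondent group into a qualifier."""
    if group_size <= 0:
        raise ValueError("group_size must be positive")
    return prevalence_qualifier(100 * count / group_size)


if __name__ == "__main__":
    # Hypothetical counts out of the nine deputy heads interviewed.
    for count in (2, 4, 5, 7):
        print(count, "of 9 ->", qualifier_for_count(count, 9))
```

In a group of nine, such as the deputy heads, no count falls in the "about half" band. Four of nine is about 44% ("some") and five of nine is about 56% ("many"), so the small group sizes limit how finely the qualifiers can distinguish views.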

4. Online survey of functional communities

An online survey of representatives of AoM functional communities was conducted. The survey gathered respondents’ perspectives on the MAF, AoM methodologies and the implementation and impact of MAF in their department or agency. In total, 273 individuals were contacted by email to participate. Of these, 168 individuals completed the survey, resulting in a response rate of 62%.

5. Case studies

Case studies were conducted with 12 departments and agencies to understand the implementation of MAF for each AoM in terms of appropriateness, clarity and usefulness. These departments and agencies were selected to include a mix of strong and weak performers, sizes and sectors. Each case study focused on one AoM and included:

- a review of MAF documentation (for example, results, action plans)

- interviews with either or both of the following:
  - the departmental MAF coordinator
  - functional community representatives of the relevant AoM

A cross-case analysis was conducted to identify themes and trends, using a similar approach to the analysis of the qualitative interview data.

Appendix C: Jurisdictional review

This appendix provides an overview of performance measurement and management systems in other countries.

Australia

Australia’s performance management system functions within a decentralized but strongly centred governance environment. With some similarities to the Canadian MAF, the country’s State of Service Reports complement the performance reports regularly submitted to Parliament by departments and agencies. Notably, the State of Service Reports serve monitoring and accountability functions. They use granular data accrued through departmental and staff surveys, as well as through other means, to highlight performance issues. Most recently it has been observed that the quality of the service reports has declined. Australia has continued to follow in the United Kingdom’s steps and has implemented capability reviews, which assess the capability to handle future challenges, as opposed to assessing or auditing past performance.

Finland

Reforms introduced over the last several years were meant to shift the performance management system from one characterized by a lack of coherence and consistency among and between departments to a more cohesive system implemented across departments. This move toward performance governance would allow individual departments to report on their own identified indicators, while also ensuring that common performance targets, as determined by governmental priorities, are identified and reported against. Key anticipated features of the performance management system include performance management agreements in a web-based information system and a common framework with ministerial action plans, which are publicly available. Among the overall goals is the development of horizontal information linked to strategic needs and priorities. The progress of these initiatives is unknown.

South Korea

The South Korean government adopted a framework in 2006 that was intended to promote performance management system integration and align with wider governmental reforms aimed at increasing efficiency, effectiveness and accountability. The country has a performance management system that has well-defined features and oversight by a central authority, which includes civilian participation on its committees. Nonetheless, issues include:

- persistent top-down approaches to performance management

- challenges related to the performance management practices of officials

- an ensuing lack of horizontality and collaboration

United Kingdom

Although the United Kingdom is held up as a model of performance measurement, its performance management system has experienced shifts in recent years. Among its most innovative approaches and processes was the creation of the Prime Minister’s Delivery Unit, which monitored performance across the public service by using the indicators set out in public service agreements. In addition, capability reviews were implemented. These reviews sought to assess the capacity of departments to realize strategic priorities. The global financial crisis in 2008 shifted the focus from performance management, as well as the evaluation of outputs and outcomes, to meeting both deficit reduction and wider budgetary targets. Consequently, spending reviews replaced performance accountability frameworks and, more generally, the responsibility for performance reporting fell to individual departments, which currently use the single department plans that replaced public service agreements.

United States

The United States government implemented the Government Performance and Results Act (GPRA) in the early 1990s, which introduced government‑wide performance measurement and which was complemented in 2010 by the GPRA Modernization Act. The latter sought to enhance the profile of evaluation by looking across specific programs when measuring performance. The GPRA Modernization Act requires that departments post their reports and related data on a national website that is accessible to the public. The use of web portals is also found at the state level, and increasingly there is a trend toward determining how to best use real‑time information to meet and improve on performance-related targets.

© Her Majesty the Queen in Right of Canada, represented by the President of the Treasury Board, 2017,

ISBN: 978-0-660-24223-1