Artificial Intelligence at your fingertips – Do you know the risks of AI in Defence?

May 26, 2025 - Defence Stories

Artificial Intelligence (AI) is a powerful tool that can enhance productivity; however, it's crucial to establish boundaries to ensure it is used safely. Before entering any information into a generative AI tool, consider the potential risks. Even seemingly insignificant details may be stored and become accessible online.

In the context of the Department of National Defence and the Canadian Armed Forces (DND/CAF), the stakes are even higher: malicious actors could connect the dots and combine information in ways that threaten national security.

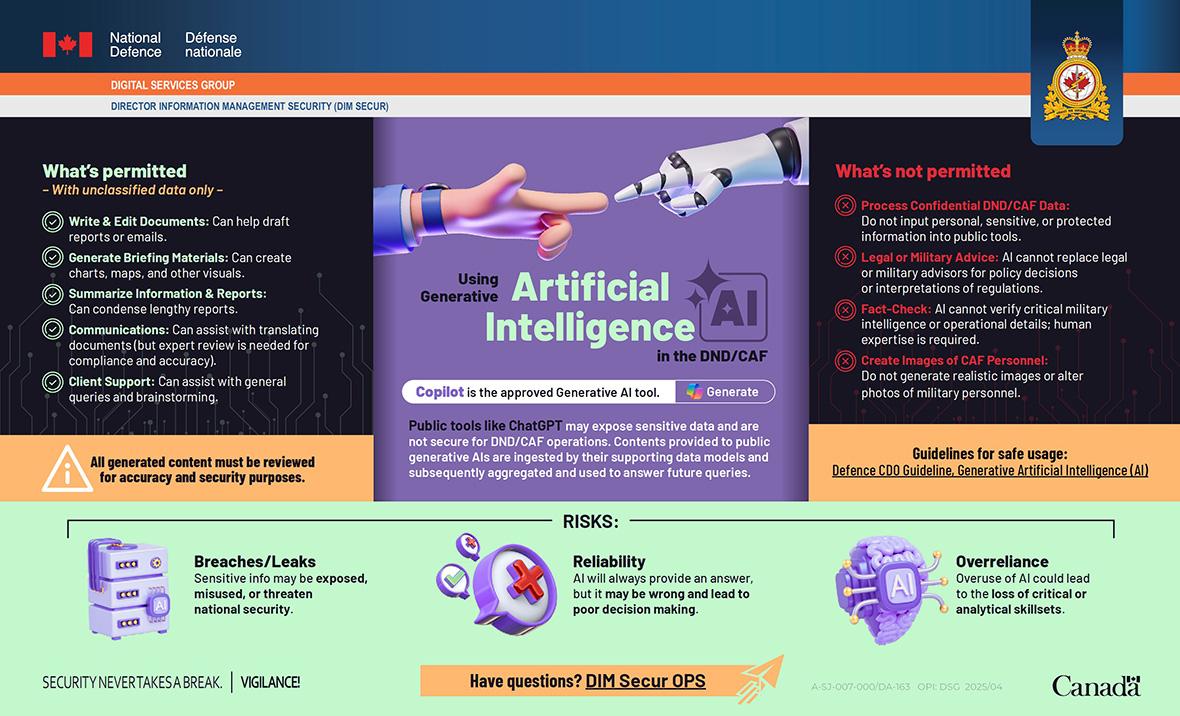

The infographic below outlines what is and is not permitted, while the motion graphic video illustrates the risks associated with AI use in the DND/CAF.

For more information on security topics, please visit the Security Awareness Toolkit, available through the Director General Defence Security (DGDS) intranet page.


Video / May 26, 2025

Transcript

Using Generative Artificial Intelligence in the DND/CAF

A Simple Edit Can Lead to a Big Mistake

Capt. Smith needed to polish a sensitive briefing note on troop deployments. Short on time, she pasted a draft into a public AI tool for grammar suggestions.

The AI refined it perfectly, but her input was now stored.

Weeks later, a journalist published an online article containing strikingly similar details, suggesting that sensitive information had been leaked.

Capt. Smith never meant for the information to become public, but the AI tool made it available.

Public AI tools may seem harmless, but they are NOT secure for DND/CAF use.

Some shortcuts aren’t worth the risk.

Copilot is the approved AI tool, but it must be used responsibly and with unclassified data only.

Infographic – Text version

Using Generative Artificial Intelligence in the DND/CAF

Authorized Tools:

- Copilot is the officially approved generative AI tool.

- Public tools like ChatGPT may expose sensitive data and are not secure for DND/CAF operations. Content provided to public generative AI tools is ingested by their underlying data models, then aggregated and used to answer future queries.

What’s permitted:

With unclassified data only:

- Write & Edit Documents: Can help draft reports or emails.

- Generate Briefing Materials: Can create charts, maps, and other visuals.

- Summarize Information & Reports: Can condense lengthy reports.

- Communications: Can assist with translating documents (but expert review is needed for compliance and accuracy).

- Client Support: Can assist with general queries and brainstorming.

What’s not permitted:

- Process Confidential DND/CAF Data: Do not input personal, sensitive, or protected information into public tools.

- Legal or Military Advice: AI cannot replace legal or military advisors for policy decisions or interpretations of regulations.

- Fact-Check: AI cannot verify critical military intelligence or operational details; human expertise is required.

- Create Images of CAF Personnel: Do not generate realistic images or alter photos of military personnel.

Risks:

- Breaches/Leaks: Sensitive information may be exposed or misused in ways that threaten national security.

- Reliability: AI will always provide an answer, but that answer may be wrong and lead to poor decision-making.

- Overreliance: Overuse of AI could lead to the loss of critical or analytical skillsets.

Guidelines for safe usage:

Have questions? dirimsecurops@forces.gc.ca