Escherichia coli in Drinking Water

Organization: Health Canada

Date published: 2019-06-14

Document for public consultation

Consultation period ends

August 16, 2019

Table of Contents

- Purpose of consultation

- Part I. Overview and Application

- 1.0 Proposed guideline

- 2.0 Executive summary

- 3.0 Application of the guideline

- Part II. Science and Technical Considerations

- 4.0 Significance of E. coli in drinking water

- 5.0 Analytical methods

- 6.0 Sampling for E. coli

- 7.0 Treatment technology and distribution system considerations

- 8.0 Risk assessment

- 9.0 Rationale

- 10.0 References

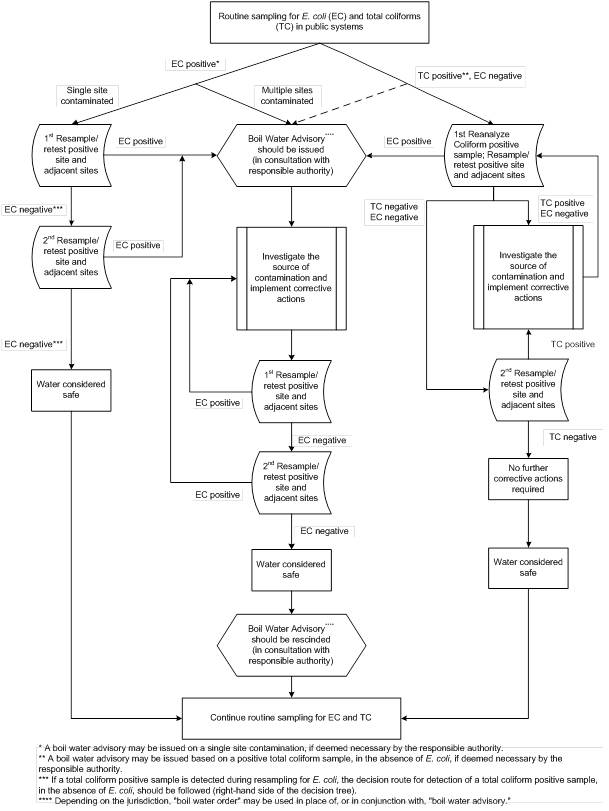

- Appendix A: Decision tree for routine microbiological testing of municipal-scale systems

- Appendix B: Decision tree for routine microbiological testing of residential-scale systems

- Appendix C: List of acronyms

Purpose of consultation

The available information on E. coli has been assessed with the intent of updating the current drinking water guideline and the guideline technical document. The existing guideline on E. coli, last updated in 2013, established a maximum acceptable concentration (MAC) of none detectable per 100 mL, recognizing that E. coli is an indicator of fecal contamination. This updated document takes into consideration new scientific studies and provides information on the significance, sampling and treatment considerations for the use of E. coli as a bacteriological indicator in a risk management approach to drinking water systems. The document proposes to reaffirm a MAC for E. coli of none detectable per 100 mL in drinking water.

The document is being made available for a 60-day public consultation period. The purpose of this consultation is to solicit comments on the proposed guideline, on the approach used for its development and on the potential economic costs of implementing it, as well as to determine the availability of additional exposure data. Comments are appreciated, with accompanying rationale, where required. Comments can be sent to Health Canada via email at HC.water-eau.SC@canada.ca. If this is not feasible, comments may be sent by mail to the Water and Air Quality Bureau, Health Canada, 269 Laurier Avenue West, A.L. 4903D, Ottawa, Ontario K1A 0K9. All comments must be received before August 16, 2019.

Comments received as part of this consultation will be shared with members of the Federal-Provincial-Territorial Committee on Drinking Water (CDW), along with the name and affiliation of their author. Authors who do not want their name and affiliation to be shared with CDW should provide a statement to this effect along with their comments.

It should be noted that this guideline technical document on E. coli in drinking water will be revised following evaluation of comments received. This document should be considered as a draft for comment only.

Part I. Overview and Application

1.0 Proposed guideline

A maximum acceptable concentration (MAC) of none detectable per 100 mL is proposed for Escherichia coli in drinking water.

2.0 Executive summary

This guideline technical document was prepared in collaboration with the Federal-Provincial-Territorial Committee on Drinking Water and assesses all available information on Escherichia coli.

Escherichia coli (E. coli) is a species of bacteria that is naturally found in the intestines of humans and warm-blooded animals. It is present in feces in high numbers and can be easily measured in water, which makes it a useful indicator of fecal contamination for drinking water providers. E. coli is the most widely used indicator for detecting fecal contamination in drinking water supplies worldwide. In drinking water monitoring programs, E. coli testing is used to provide information on the quality of the source water, the adequacy of treatment and the safety of the drinking water distributed to the consumer.

2.1 Significance of E. coli in drinking water systems and their sources

E. coli monitoring should be used, in conjunction with other indicators, as part of a multi-barrier approach to producing drinking water of an acceptable quality. Drinking water sources are commonly impacted by fecal contamination from either human or animal sources and, as a result, may contain E. coli. Its presence in a water sample is considered a good indicator of recent fecal contamination. The ability to detect fecal contamination in drinking water is a necessity, as pathogenic microorganisms from human and animal feces in drinking water pose the greatest danger to public health.

Under a risk management approach to drinking water systems, such as a multi-barrier or water safety plan approach, monitoring for E. coli is used as part of the water quality verification process to show that the natural and treatment barriers in place are providing the necessary level of control. The detection of E. coli in drinking water indicates fecal contamination and therefore that fecal pathogens, which can pose a health risk to consumers, may be present. In a groundwater source, the presence of E. coli indicates that the groundwater has been affected by fecal contamination, while in treated drinking water the presence of E. coli can signal that treatment is inadequate or that the treated water has become contaminated during distribution. If testing confirms the presence of E. coli in drinking water, actions that can be taken include notifying the responsible authorities, using a boil water advisory and implementing corrective actions.

Using multiple parameters in drinking water verification monitoring as indicators of general microbiological water quality (such as total coliforms, heterotrophic plate counts) or additional indicators of fecal contamination (enterococci) is a good way for water utilities to enhance the potential to identify issues and thus trigger responses.

2.2 Treatment

In drinking water systems that are properly designed and operated, water that is treated to meet the guidelines for enteric viruses (minimum 4 log removal of viruses) or enteric protozoa (minimum 3 log removal of protozoa) will be capable of achieving the proposed MAC of none detectable per 100 mL for E. coli. Detecting E. coli in drinking water indicates that there is a potential health risk from consuming the water; however, E. coli testing on its own is not able to confirm the presence or absence of drinking water pathogens.

For municipal-scale systems, it is important to apply a monitoring approach which includes the use of multiple operational and water quality verification parameters (e.g., turbidity, disinfection measurements, E. coli), in order to verify that the water has been adequately treated and is therefore of an acceptable microbiological quality. For residential-scale systems, regular E. coli testing combined with monitoring of critical processes, regular physical inspections and a source water assessment can be used to confirm the quality of the drinking water supply.

2.3 International considerations

The proposed MAC for E. coli is consistent with drinking water guidelines established by other countries and international organizations. The World Health Organization (WHO), the European Union, the United States Environmental Protection Agency (U.S. EPA) and the Australian National Health and Medical Research Council have all established a limit of zero E. coli per 100 mL.

3.0 Application of the guideline

Note: Specific guidance related to the implementation of drinking water guidelines should be obtained from the responsible drinking water authority in the affected jurisdiction.

E. coli is the most widely used fecal indicator organism in drinking water risk management worldwide. For municipal-scale and residential-scale systems (see Footnote 1), its primary role is as an indicator of fecal contamination during routine monitoring to verify the quality of the drinking water supply. The presence of E. coli indicates fecal contamination of the drinking water and, as a result, there is an increased risk that enteric pathogens may be present. For treated, distributed drinking water, the detection of E. coli is a signal of inadequate control or of an operational failure in the drinking water treatment or distribution system. Consequently, the detection of E. coli in any drinking water system is unacceptable.

Fecal contamination is often intermittent and may not be revealed by the examination of a single sample. Therefore, if a vulnerability assessment or inspection of a drinking water system shows that an untreated supply or treated water (e.g., during distribution and storage) is subject to fecal contamination, or that treatment is inadequate, the water should be considered unsafe, irrespective of the results of E. coli analysis. Implementing a risk management approach to drinking water systems, such as the source-to-tap or water safety plan approach, is the best method to reduce waterborne pathogens in drinking water. These approaches require a system assessment that involves: characterizing the water source; describing the treatment barriers that prevent or reduce contamination; highlighting the conditions that can result in contamination; and implementing control measures to mitigate those risks through the treatment and distribution systems to the consumer.

E. coli concentrations of none detectable per 100 mL of water leaving the treatment plant should be achieved for all treated water supplies. Treatment of surface water sources or groundwater under the direct influence of surface waters (GUDI) should include adequate filtration (or technologies providing an equivalent log reduction credit) and disinfection. Treatment of groundwater sources should include a minimum 4 log (99.99%) removal and/or inactivation of enteric viruses. A jurisdiction may choose to allow a groundwater source to have less than the recommended minimum 4 log reduction if the assessment of the drinking water system has confirmed that the risk of enteric virus presence is minimal. Water that is treated to meet the guidelines for enteric viruses (minimum 4 log or 99.99% removal and/or inactivation) or enteric protozoa (minimum 3 log or 99.9% removal and/or inactivation) should provide adequate removal and/or inactivation for E. coli. For many source waters, log reductions greater than these may be necessary.
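The relationship between a log reduction and the percentages quoted above (3 log = 99.9%, 4 log = 99.99%) can be illustrated with a short calculation. The following is a minimal sketch only; the function names are hypothetical and are not part of the guideline.

```python
import math


def log_reduction_to_percent(log_reduction: float) -> float:
    """Convert a log10 reduction into percent removal and/or inactivation."""
    # A reduction of n log leaves a surviving fraction of 10**(-n).
    return (1.0 - 10.0 ** (-log_reduction)) * 100.0


def log_reduction_achieved(influent: float, effluent: float) -> float:
    """Log10 reduction between influent and effluent concentrations.

    Both concentrations must be positive and in the same units
    (illustrative inputs; not a prescribed monitoring calculation).
    """
    return math.log10(influent / effluent)
```

For example, `log_reduction_to_percent(4)` evaluates to approximately 99.99, and a drop from 10,000 to 1 organisms per unit volume corresponds to a 4 log reduction.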

The appropriate type and level of treatment should take into account the potential fluctuations in water quality, including short-term water quality degradation, and variability in treatment performance. Pilot testing or optimization processes may be useful for determining treatment variability. In systems with a distribution system, a disinfectant residual should be maintained throughout the system at all times. The existence of an adequate disinfectant residual is an important measure for controlling microbial growth during drinking water distribution. Under some conditions (e.g., the intrusion of viruses or protozoa from outside of the distribution system), the disinfectant residual may not be sufficient to ensure effective pathogen inactivation. More information on how source water assessments, treatment technologies and distribution system operations are used to manage risks from pathogens in drinking water can be found in Health Canada's guideline technical documents on enteric protozoa and on enteric viruses. When verifying the quality of treated drinking water, the results of E. coli tests should be considered together with information on treatment and distribution system performance to show that the water has been adequately treated and is therefore of acceptable microbiological quality.

3.1 Municipal-scale drinking water supply systems

3.1.1 Monitoring E. coli in water leaving the treatment plant

E. coli should be monitored at least weekly in water leaving a treatment plant. If E. coli is detected, this indicates a serious breach in treatment and is therefore unacceptable. E. coli tests should be used in conjunction with other operational indicators, such as residual disinfectant and turbidity monitoring as part of a source-to-tap or water safety plan approach.

The required frequency for all testing at the treatment plant is specified by the responsible drinking water authority. Best practice commonly involves a testing frequency beyond these minimum recommendations based upon the size of system, the number of consumers served, the history of the system, and other site-specific considerations. Events that lead to changes in source water conditions (e.g., spring runoff, storms or wastewater spills) are associated with an increased risk of fecal contamination. Water utilities may wish to consider additional sampling during these events.

3.1.2 Monitoring E. coli within water distribution and storage systems

In municipal-scale distribution and storage systems, the number of samples collected for E. coli testing should reflect the size of the population being served, with a minimum of four samples per month. The frequency and sampling points for E. coli testing within distribution and storage systems will be specified and/or approved by the responsible drinking water authority.

Changes to system conditions that result in an interruption of supply or cause low and negative transient pressures can be associated with an increased risk of fecal contamination. These changes can occur during routine distribution system operation/maintenance (e.g., pump start/stops, valve opening and closing) or unplanned events such as power outages or water main breaks. Operational indicators (e.g., disinfectant residual, pressure monitoring) should be used in conjunction with E. coli tests as part of a source-to-tap or water safety plan approach.

3.1.3 Notification

If E. coli is detected in a municipal-scale drinking water system, the system owner/operator and the laboratory processing the samples should immediately notify the responsible authorities. The system owner/operator should resample and test the E. coli-positive site(s) and adjacent sites. If resampling and testing confirm the presence of E. coli in drinking water, the system owner/operator should immediately issue a boil water advisory (see Footnote 2) in consultation with the responsible authorities, carry out the appropriate corrective actions (Section 3.1.4) and cooperate with the responsible authorities in any surveillance for possible waterborne disease outbreaks. In addition, where E. coli contamination is detected in the first sampling (for example, E. coli-positive sample results from a single site, or from more than one location in the distribution system), the owner/operator or the responsible authority may decide to notify consumers immediately to boil their drinking water or use an alternative supply known to be safe, and to initiate corrective actions without waiting for confirmation. A decision tree is provided in Appendix A to assist system owners/operators.
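The sequence described in this section can be sketched as simple decision logic. This is an illustrative simplification only and does not reproduce the decision tree in Appendix A; the `Action` names, the function and its parameters are hypothetical, and actual requirements are set by the responsible drinking water authority.

```python
from enum import Enum
from typing import List, Optional


class Action(Enum):
    NOTIFY_AUTHORITIES = "notify the responsible authorities"
    RESAMPLE = "resample the positive site(s) and adjacent sites"
    BOIL_WATER_ADVISORY = "issue a boil water advisory"
    CORRECTIVE_ACTIONS = "carry out corrective actions (Section 3.1.4)"
    COOPERATE_WITH_SURVEILLANCE = "cooperate in outbreak surveillance"


def municipal_response(
    initial_positive: bool,
    resample_positive: Optional[bool] = None,
    act_without_confirmation: bool = False,
) -> List[Action]:
    """Return the actions suggested by Section 3.1.3 for a given test outcome.

    resample_positive is None while confirmation results are pending.
    act_without_confirmation models the option to advise consumers and
    start corrective actions on the first positive result.
    """
    if not initial_positive:
        return []  # no detection: routine monitoring continues

    actions = [Action.NOTIFY_AUTHORITIES, Action.RESAMPLE]
    confirmed = resample_positive is True
    if confirmed or act_without_confirmation:
        actions += [Action.BOIL_WATER_ADVISORY, Action.CORRECTIVE_ACTIONS]
    if confirmed:
        actions.append(Action.COOPERATE_WITH_SURVEILLANCE)
    return actions
```

In this sketch, a first positive result with confirmation still pending yields only notification and resampling, unless the owner/operator or the responsible authority chooses to act without waiting.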

3.1.4 Corrective actions

If the presence of E. coli in drinking water is confirmed, the owner/operator of the waterworks system should carry out appropriate corrective actions, which could include the following measures:

- Verify the integrity and the optimal operation of the treatment process.

- Verify the integrity of the distribution system.

- Verify that the required disinfectant residual is present throughout the distribution system.

- Increase disinfectant dosage, flush water mains, clean treated-water storage tanks (municipal reservoirs and domestic cisterns), and check for the presence of cross-connections and pressure losses. The responsible authority should be consulted regarding the correct procedure for dechlorinating water being discharged into fish-bearing waters.

- Sample and test the E. coli-positive site(s) and locations adjacent to the E. coli-positive site(s). At a minimum, one sample upstream and one downstream from the original sample site(s) plus the treated water from the treatment plant as it enters the distribution system should be tested. Other follow-up samples should be collected and tested according to an appropriate sampling plan for the distribution system. Tests performed should include those for E. coli, total coliforms (as a general indicator of microbiological quality and inadequate treatment) and operational monitoring parameters such as disinfectant residual and turbidity. Testing for enterococci as an additional fecal verification indicator may also be performed.

- Conduct an investigation to identify the problem and prevent its recurrence; this would include measuring raw water quality (e.g., bacteriology, turbidity, colour, natural organic matter (NOM), and conductivity) and variability.

- Continue selected sampling and testing (e.g., bacteriology, disinfectant residual, turbidity) of all identified sites during the investigative phase to confirm the extent of the problem and to verify the success of the corrective actions.

3.1.5 Rescinding a boil water advisory

Once the appropriate corrective actions have been taken and only after a minimum of two consecutive sets of bacteriological samples, collected 24 hours apart, produce negative results, an E. coli-related boil water advisory may be rescinded. Additional samples showing negative results may be required by the responsible drinking water authority. Further information on boil water advisories can be found in Health Canada's Guidance for Issuing and Rescinding Boil Water Advisories in Canadian Drinking Water Supplies. Over the long term, only a history of bacteriological and operational monitoring data together with validation of the system's design, operation and maintenance can be used to confirm the quality of a drinking water supply.
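The minimum condition for rescinding an advisory can be expressed as a small check. The sketch below makes two assumptions that are not stated in the text: "24 hours apart" is read as "at least 24 hours apart", and only the two most recent sample sets are examined. The `SampleSet` type and `may_rescind` function are hypothetical names, and the responsible drinking water authority may require additional negative samples.

```python
from datetime import datetime, timedelta
from typing import NamedTuple, Sequence


class SampleSet(NamedTuple):
    collected_at: datetime
    all_negative: bool  # True if every sample in the set was E. coli-negative


def may_rescind(
    corrective_actions_done: bool,
    sample_sets: Sequence[SampleSet],
    min_gap: timedelta = timedelta(hours=24),
) -> bool:
    """Check the minimum condition for rescinding an E. coli-related advisory."""
    if not corrective_actions_done or len(sample_sets) < 2:
        return False
    # Examine the two most recent sets in chronological order.
    ordered = sorted(sample_sets, key=lambda s: s.collected_at)
    previous, latest = ordered[-2], ordered[-1]
    far_enough_apart = (latest.collected_at - previous.collected_at) >= min_gap
    return previous.all_negative and latest.all_negative and far_enough_apart
```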

3.2 Residential-scale drinking water systems

3.2.1 Monitoring E. coli in water from disinfected and undisinfected supplies

Testing frequencies for residential-scale systems are determined by the responsible drinking water authority in the affected jurisdiction, and should include times when the risk of contamination of the drinking water source is the greatest, for example, in early spring after the thaw, after an extended dry spell, or following heavy rains. Homeowners with private wells should regularly test (at a minimum two times per year) their well for E. coli, ideally during these same at-risk times. New or rehabilitated wells should also be tested before their first use to confirm microbiological safety. The responsible drinking water authority in the affected jurisdiction should be consulted regarding their specific requirements for well construction and maintenance.

3.2.2 Notification

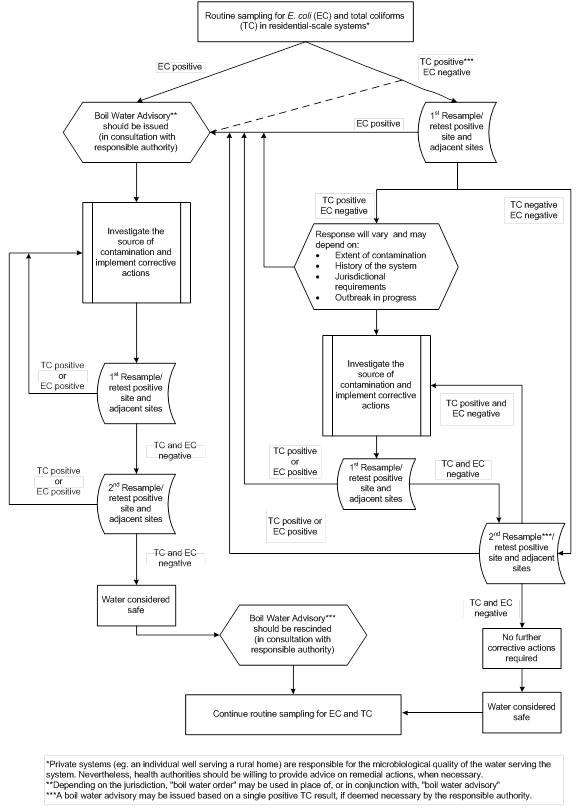

Residential-scale systems that serve the public may be subject to regulatory or legislative requirements and should follow any actions specified by the responsible drinking water authority. If E. coli is detected in a residential-scale drinking water system that serves the public, the system owner/operator and the laboratory processing the samples should immediately notify the responsible authorities. The system owner/operator should resample and test the drinking water to confirm the presence of E. coli. The responsible authority should advise the owner/operator to boil the drinking water or to use an alternative supply that is known to be safe in the interim. Homeowners should also be advised to follow these same instructions if E. coli is detected in their private well. If resampling confirms that the source is contaminated with E. coli, the system owner/operator should immediately carry out the appropriate corrective actions (see Section 3.2.3), and cooperate with the responsible authorities in any surveillance for possible waterborne disease outbreaks. As a precautionary measure, some jurisdictions may recommend immediate corrective actions without waiting for confirmatory results. A decision tree is provided in Appendix B to assist system owners/operators.

3.2.3 Corrective actions for disinfected supplies

The first step, if it has not already been taken, is to evaluate the physical condition of the drinking water system as applicable, including water intake, well, well head, pump, treatment system (including chemical feed equipment, if present), plumbing, and surrounding area.

Any identified faults should be corrected. If the physical conditions are acceptable, some or all of the following corrective actions may be necessary:

- In a chlorinated system, verify that a disinfectant residual is present throughout the system.

- Increase the disinfectant dosage, flush the system thoroughly and clean treated water storage tanks and domestic cisterns. The responsible authority should be consulted regarding the correct procedure for dechlorinating water that may be discharged into fish-bearing waters.

- For systems where the disinfection technology does not leave a disinfectant residual, such as UV, it may be necessary to shock chlorinate the well and plumbing system.

- Ensure that the disinfection system is working properly and maintained according to manufacturer's instructions.

After the necessary corrective actions have been taken, samples should be collected and tested for E. coli to confirm that the problem has been corrected. If the problem cannot be corrected, additional treatment or a new source of drinking water should be considered. In the interim, any initial precautionary measures should continue; for example, drinking water should continue to be boiled or an alternative supply of water known to be safe should continue to be used.

3.2.4 Corrective actions for undisinfected wells

The first step, if it has not already been taken, is to evaluate the condition of the well, well head, pump, plumbing, and surrounding area. Any identified faults should be corrected. If the physical conditions are acceptable, then the following corrective actions should be carried out:

- Shock-chlorinate the well and plumbing system.

- Flush the system thoroughly and retest to confirm the absence of E. coli. Confirmatory tests should be delayed until either 48 hours after tests indicate the absence of a chlorine residual or five days have elapsed since the well was treated. For residential-scale systems that serve the public, the responsible drinking water authority may determine acceptable practice. The responsible authority should also be consulted regarding the correct procedure for dechlorinating water that may be discharged to fish-bearing waters.
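The timing rule for confirmatory tests after shock chlorination can be illustrated as follows. This sketch assumes that either condition alone is sufficient, so the earlier of the two dates applies; that reading, along with the function and parameter names, is an assumption, and the responsible drinking water authority may determine a different practice.

```python
from datetime import datetime, timedelta
from typing import Optional


def earliest_confirmatory_test(
    treated_at: datetime,
    residual_absent_at: Optional[datetime] = None,
) -> datetime:
    """Earliest time a confirmatory E. coli test could be taken.

    treated_at: when the well was shock-chlorinated.
    residual_absent_at: when a test first showed no chlorine residual,
        or None if that has not yet been observed.
    """
    five_days_after_treatment = treated_at + timedelta(days=5)
    if residual_absent_at is None:
        return five_days_after_treatment
    # Either condition is assumed sufficient, so take the earlier date.
    return min(five_days_after_treatment, residual_absent_at + timedelta(hours=48))
```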

If the water remains contaminated after shock-chlorination, further investigation into the factors likely contributing to the contamination should be carried out. If these factors cannot be identified or corrected, either an appropriate disinfection device or well reconstruction or replacement should be considered. Drinking water should be boiled or an alternative supply of water known to be safe should continue to be used in the interim.

3.2.5 Rescinding a boil water advisory

Once the appropriate corrective actions have been taken, and only after a minimum of two consecutive sets of samples, collected 24 hours apart, produce negative results, an E. coli-related boil water advisory may be rescinded. Further information on boil water advisories can be found in Health Canada's Guidance for Issuing and Rescinding Boil Water Advisories in Canadian Drinking Water Supplies. Additional tests should be taken after three to four months to ensure that the contamination has not recurred. Over the long term, only a history of bacteriological and operational monitoring data in conjunction with regular physical inspections and a source water assessment can be used to confirm the quality of a drinking water supply.

Footnotes

- Footnote 1: For the purposes of this document, a residential-scale water supply system is defined as a system with a minimal or no distribution system that provides water to the public from a facility not connected to a municipal supply. Examples of such facilities include private drinking water supplies, schools, personal care homes, day care centres, hospitals, community wells, hotels, and restaurants. The definition of a residential-scale supply may vary between jurisdictions.

- Footnote 2: For the purpose of this document, the use of the term "boil water advisory" is taken to mean advice given to the public by the responsible authority in the affected jurisdiction to boil their water, regardless of whether this advice is precautionary or in response to an outbreak. Depending on the jurisdiction, the use of this term may vary. As well, the term "boil water order" may be used in place of, or in conjunction with, a "boil water advisory."

Part II. Science and Technical Considerations

4.0 Significance of E. coli in drinking water

4.1 Description

Escherichia coli is a member of the coliform group of bacteria, part of the family Enterobacteriaceae, and described as a facultative anaerobic, Gram-negative, non-spore-forming, rod-shaped bacterium. The vast majority of waterborne E. coli isolates have been found to be capable of producing the enzyme β-D-glucuronidase (Martins et al., 1993; Fricker et al., 2008, 2010), and it is this characteristic that facilitates their detection and identification. Further information on the coliform group of organisms can be found in the guideline technical document on total coliforms (Health Canada, 2018f).

The complexity of the E. coli species has become better understood with the use of advanced molecular characterization methods and the accumulation of whole genome sequence data (Lukjancenko et al., 2010; Chaudhuri and Henderson, 2012; Gordon, 2013). Presently it is recognized that E. coli strains can be categorized into one of several phylogenetic groups (A, B1, B2, C, D, E, F) based on differences in their genotype. Strains in the different groups show some variation in their physical and biological properties (e.g., their ability to utilize different nutrients), the fecal and environmental habitats in which they have been encountered and their predisposition for causing disease (Clermont et al., 2000; Walk et al., 2007; Tenaillon et al., 2010; Chaudhuri and Henderson, 2012; Gordon, 2013; Jang et al., 2017). More research is needed to better understand the practical impacts these differences have on drinking water microbiology and the implications for human health (Van Elsas et al., 2011; Gordon, 2013).

4.2 Sources

E. coli is naturally found in the intestines of humans and warm-blooded animals, comprising about 1% of the total biomass in the large intestine (Leclerc et al., 2001). Within human feces, E. coli is present at a concentration of 10⁷ to 10⁹ cells per gram (Edberg et al., 2000; Leclerc et al., 2001; Tenaillon et al., 2010; Ervin et al., 2013). Numbers in feces of domestic animals can vary considerably, but typically fall within the range from 10⁴ to 10⁹ cells per gram (Lefebvre et al., 2006; Duriez and Topp, 2007; Diarra et al., 2007; Tenaillon et al., 2010; Ervin et al., 2013). Although E. coli are part of the natural intestinal flora, some strains of this bacterium can cause gastrointestinal illness which can also result in more serious health complications (e.g., haemorrhagic colitis, haemolytic uremic syndrome, kidney failure). Some strains of E. coli can also cause urinary tract infections. Concentrations of non-pathogenic E. coli in human and animal feces exceed those of the pathogenic strains (Bach et al., 2002; Omisakin et al., 2003; Fegan et al., 2004; Degnan, 2006). Therefore, during a fecal contamination event, non-pathogenic E. coli will outnumber the pathogenic strains, even during outbreaks (Degnan, 2006; Soller et al., 2010).

Sources of fecal contamination that can impact surface water or ground water source supplies include point sources (e.g., sewage and industrial effluents, septic systems, leaking sanitary sewers) and non-point or diffuse sources (e.g., runoff from agricultural, urban and natural areas) (Gerba and Smith, 2005; Hynds et al., 2012, 2014; Wallender et al., 2014; Lalancette et al., 2014; Staley et al., 2016).

4.3 Survival

The survival time of E. coli in the environment is dependent on many factors including temperature, exposure to sunlight, presence and types of other microflora, availability of nutrients and the type of water involved (e.g., groundwater, surface water, treated distribution water) (Foppen and Schijven, 2006; Van Elsas et al., 2011; Blaustein et al., 2013). As a result, it is not easy to predict the fate of E. coli populations in complex natural environments (Van Elsas et al., 2011; Blaustein et al., 2013; Franz et al., 2014). In general, E. coli survives for less than 1-10 weeks in natural surface waters at a temperature of 14-20°C (Grabow, 1975; Filip et al., 1986; Flint, 1987; Lim and Flint, 1989; Bogosian, 1996; Sampson et al., 2006). Studies have shown that E. coli is capable of surviving in groundwater for 3-14 weeks at 10°C (Keswick et al., 1982; Filip et al., 1986).

Researchers investigating the survival of E. coli in water have observed comparable survival rates for non-pathogenic E. coli strains and E. coli O157:H7 (one of the most recognized pathogenic strains) in surface water and groundwater (Rice et al., 1992; Wang and Doyle, 1998; Rice and Johnson, 2000; Ogden et al., 2001; McGee et al., 2002; Artz and Killham, 2002; Easton et al., 2005; Avery et al., 2008).

Under the stresses of the water environment, E. coli can enter a viable but non-culturable (VBNC) state where they do not grow on laboratory media, but are otherwise alive and capable of resuscitation when conditions become favourable (Bjergbæk and Roslev, 2005). The VBNC state is a primary survival strategy for bacteria that has been observed with numerous species (Lee et al., 2007; van der Kooij and van der Wielen, 2014). A greater understanding of the VBNC state in bacteria relevant to drinking water is needed (van der Kooij and van der Wielen, 2014).

4.3.1 Environmentally-adapted E. coli

It is now well recognized by the scientific community that E. coli can survive long-term and grow in habitats outside of the lower intestinal tract of humans and animals provided that certain factors (e.g., temperature, nutrient and water availability, pH, solar radiation) are within their tolerance limits (Ishii et al., 2010; Byappanahalli et al., 2012b; Tymensen et al., 2015; Jang et al., 2017). It has also become evident that some strains of E. coli can adapt to live independently of fecal material and become naturalized members of the microbial community in environmental habitats (Ishii and Sadowsky, 2008; Ishii et al., 2010; Byappanahalli et al., 2012b). E. coli genotypes that are distinct from those found in human or animal feces have been discovered in sands, soils, sediments, aquatic vegetation, septic waste and raw sewage (Gordon et al., 2002; Byappanahalli et al., 2006; Ksoll et al., 2007; Ishii and Sadowsky, 2008; Ishii et al., 2010; Badgley et al., 2011; Zhi et al., 2016). Over time, research has shown that environmental habitats may serve as potential sources of most of the groups of bacteria that have been used for detecting fecal contamination of drinking water, including total coliforms, thermotolerant coliforms, E. coli and enterococci (Edberg et al., 2000; Whitman et al., 2003; Byappanahalli et al., 2012a). While these findings change the perception that E. coli is exclusively associated with fecal wastes, it is accepted that E. coli is predominantly of fecal origin and remains a valuable indicator of fecal contamination in drinking water (see Section 4.5). More research is needed to improve our understanding of the behaviour of E. coli in the environment.

4.4 Role of E. coli as an indicator of drinking water quality

Of the contaminants that can be found in drinking water, pathogenic microorganisms from human and animal feces pose the greatest danger to public health. Although modern microbiological techniques have made the detection of pathogenic bacteria, viruses and protozoa possible, it is not practical to attempt to routinely isolate these microbes from drinking water (Payment and Pintar, 2006; Allen et al., 2015). For this reason, indicator organisms are used to assess the microbiological safety of drinking water. These indicators are less difficult, less expensive, and less time consuming to monitor. This encourages testing of a higher number of samples which gives a better overall picture of the water quality and, therefore, better public health protection. Different indicator organisms can be used for specific purposes in drinking water risk management, in areas such as source water assessment, operational monitoring, validation of drinking water treatment processes and drinking water quality verification (WHO, 2005).

Worldwide, E. coli is the most widely used indicator of fecal contamination in drinking water supplies (Edberg et al., 2000; Payment et al., 2003). E. coli is predominantly associated with human and animal feces and does not usually multiply in drinking water (Edberg et al., 2000; Payment et al., 2003; Standridge et al., 2008; Lin and Ganesh, 2013). E. coli bacteria are excreted in feces in high numbers and can be rapidly, easily and affordably detected in water. These features in particular make E. coli highly useful for detecting fecal contamination even when the contamination is greatly diluted.

The primary role for E. coli is as an indicator of fecal contamination during monitoring to verify the microbiological quality of drinking water. Drinking water quality verification is a fundamental aspect of a source to tap or water safety plan approach to drinking water systems that includes monitoring to confirm that the system as a whole is operating as intended (WHO, 2005). E. coli can also be used as a parameter in source water assessments and during drinking water system investigations in response to corrective actions or surveillance.

E. coli is not intended to be a surrogate organism for pathogens in water (Health Canada, 2018d, 2018e). Numerous studies have documented that the presence of E. coli does not reliably predict the presence of specific enteric or non-enteric waterborne pathogens (Wu et al., 2011; Payment and Locas, 2011; Edge et al., 2013; Hynds et al., 2014; Lalancette et al., 2014; Ashbolt, 2015; Falkinham et al., 2015; Krkosek et al., 2016; Fout et al., 2017). The presence of E. coli in water indicates fecal contamination and thus, the strong potential for a health risk, regardless of whether specific pathogens are observed.

4.4.1 Role in groundwater sources

The presence of E. coli in a groundwater well indicates that the well has been affected by fecal contamination and serves as a trigger for further action. Studies of the groundwater quality of Canadian municipal wells have demonstrated the importance of historical E. coli data for raw groundwater when evaluating a well's potential susceptibility to fecal contamination (Payment and Locas, 2005; Locas et al., 2007, 2008). Recurrent detection of E. coli in a groundwater source indicates a degradation of the source water quality and a greater likelihood of pathogen occurrence (Payment and Locas, 2005, 2011; Locas et al., 2007, 2008; Fout et al., 2017).

Investigations of outbreaks of waterborne illness from small drinking water supplies have also demonstrated the usefulness of E. coli monitoring in verifying fecal contamination and/or the inadequate treatment of a groundwater source (Laursen et al., 1994; Fogarty et al., 1995; Engberg et al., 1998; Novello, 2000; Olsen et al., 2002; O'Connor, 2002a; Government Inquiry into Havelock North Drinking Water, 2017; Kauppinen et al., 2017).

Groundwater from private wells is generally perceived safe for drinking by consumers (Hynds et al., 2013; Murphy et al., 2017); however this is not always an accurate assumption. Studies have shown that private wells can test positive for E. coli more frequently than municipal-scale systems and residential-scale systems that provide drinking water to the public (Krolik et al., 2013; Invik et al., 2017; Saby et al., 2017). Further, researchers have estimated that the consumption of water from contaminated unregulated private wells may be responsible for a large proportion of the total burden of acute gastrointestinal illness associated with drinking water sources (DeFelice et al., 2016; Murphy et al., 2016b).

The above information emphasizes the importance of regular testing of untreated groundwater as well as treated groundwater to improve the ability of a monitoring program to detect wells affected by fecal contamination.

4.4.2 Role in surface water sources

Although the relationships seem to be site-specific, monitoring for E. coli in raw water can provide data on the impact and timing of sources of fecal pollution that affect the drinking water source. Similarly, it can provide information on the effects of source water protection or hazard control measures implemented in the watershed. Source water E. coli data can also be used to provide supplementary information in assessing microbiological risks and treatment requirements for surface water sources (U.S. EPA, 2006b; Hamouda et al., 2016).

Correlations between indicator organisms and pathogens can sometimes be observed in heavily polluted waters, but these quickly deteriorate due to dilution and the differences in the fate and transport of different microorganisms in various water environments (Payment and Locas, 2011). Lalancette et al. (2014) found that E. coli were potentially good indicators of Cryptosporidium concentrations at drinking water intakes when source waters were impacted by recent and nearby municipal sewage, but not at intakes where sources were dominated by agricultural or rural fecal pollution sources or more distant wastewater sources. Increased odds of detecting enteric pathogens (Campylobacter, Cryptosporidium, Salmonella and E. coli O157:H7) in surface water samples have been shown in some studies where densities of E. coli exceeded 100 CFU/100 mL (Van Dyke et al., 2012; Banihashemi et al., 2015; Stea et al., 2015).

4.4.3 Role in treatment monitoring

Detection of E. coli in water immediately after treatment or leaving the treatment plant signifies inadequate treatment and is unacceptable. Cretikos et al. (2010) examined the factors associated with E. coli detection at public drinking water systems in New South Wales, Australia. Undisinfected systems and small water supply systems serving fewer than 500 people were most strongly associated with E. coli detection. E. coli detections were also significantly associated with systems disinfected with only UV or with higher post-treatment turbidity.

Drinking water outbreaks have been linked to municipal supplies where water quality parameters (including E. coli) were below the acceptable limits recognized at the time (Hayes et al., 1989; Maguire et al., 1995; Goldstein et al., 1996; Jack et al., 2013). E. coli has different removal rates through physical processes and is more sensitive to drinking water disinfectants than enteric viruses and protozoa. While testing for E. coli is useful in assessing treatment efficacy, it is not sufficient on its own for assessing the impact of treatment on these pathogens (Payment et al., 2003). E. coli can be used as part of the water quality verification process in conjunction with information on treatment performance to show that the water has been adequately treated and is therefore of acceptable microbiological quality (Payment et al., 2003; Stanfield et al., 2003). However, under a source-to-tap or water safety plan approach to drinking water systems, validation of treatment and disinfection processes is also important to show that the system can operate as required and achieve the required levels of hazard reduction (WHO, 2005).

4.4.4 Role in distribution system monitoring

Microorganisms can enter the distribution system by passing through treatment and disinfection barriers during inadequate treatment, or through post-treatment contamination via intrusions, cross-connections or during construction or repairs.

The presence of E. coli in a distribution system sample can indicate that treatment of the source water has been inadequate, or that the treated water has become contaminated with fecal material during distribution. Post-treatment contamination (for example, through cross-connections, back siphonage, low or negative transient pressure events, contamination of storage reservoirs, and contamination of mains from repairs) has been identified as a cause of distribution system contamination linked to illness (Craun, 2002; Hunter et al., 2005).

The detection of E. coli is expected to be sporadic and rare in properly designed and well-operated treatment and distribution systems. Water quality reports provided by large municipal drinking water utilities in Canada have shown that the number of distribution system samples that test positive for E. coli is typically less than 1% annually (Health Canada, 2018h). Data demonstrating the quality of the drinking water in individual provinces and territories can be obtained from the responsible drinking water authority or the water utilities. The detection of E. coli in the distribution system can indicate an increased potential of exposure to enteric pathogens for consumers in affected areas. Miles et al. (2009) analyzed point-of-use (POU) filters found in drinking water vending machines in Arizona to evaluate the microbiological quality of large volumes of treated, distributed drinking water and observed that 60% (3/5) of the filters that tested positive for E. coli also tested positive for enteroviruses.

Results from studies of model, pilot-scale and full-scale systems have shown that E. coli can accumulate in low numbers in distribution system biofilms, predominantly in a viable-but-not-culturable (VBNC) state (Fass et al., 1996; Williams and Braun-Howland, 2003; Juhna et al., 2007; Lehtola et al., 2007; Abberton et al., 2016; Mezule and Juhna, 2016). However, once embedded within the biofilm matrix, E. coli concentrations are controlled by the natural microbial community through processes such as predation and competition for nutrients (Fass et al., 1996; Abberton et al., 2016; Mezule and Juhna, 2016). Consequently, the detection of E. coli in a water distribution system is a good indication of recent fecal contamination. The presence of E. coli in any distribution and/or storage system sample is unacceptable and should result in further action (see Section 3.1.4).

4.4.5 Role of E. coli in a decision to issue boil water advisories

Boil water advisories are public announcements advising consumers that they should boil their drinking water prior to consumption in order to eliminate any disease-causing microorganisms that are suspected or confirmed to be in the water. These announcements are used as part of drinking water oversight and public health protection across the country. Health Canada (2015) provides more information on issuing and rescinding drinking water advisories.

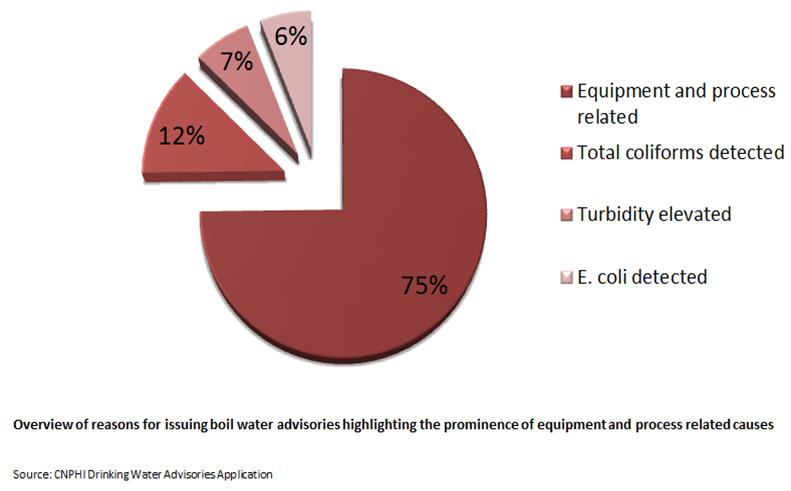

Drinking water data (primarily on boil water advisories) are collected on the Canadian Network for Public Health Intelligence (CNPHI) Drinking Water Advisories application (DWA), a secure, real-time web-based application, and by provincial and territorial regulators. Provincial, territorial and municipal drinking water data reside with and are provided by the responsible drinking water authority in the affected jurisdiction. Although the data in CNPHI do not provide a complete national picture, the trends within these data provide useful insight into the nature of boil water advisories and the challenges that exist in drinking water systems in Canada. A review of the available Canadian boil water advisory records (9,884 boil water advisory records issued between 1984 and the end of 2017) found that 594 (6%) of the boil water advisories noted "E. coli detected in drinking water system" as the reason for issuing the advisory (Health Canada, 2018g). The remaining boil water advisories were issued for other reasons, the most common of these being equipment and process-related (see Figure 1).

Figure 1 - Text Equivalent

Figure 1 is a pie chart showing the overall proportions of reasons for boil water advisories: 75% equipment and process related, 12% due to detection of total coliforms, 7% due to elevated turbidity and 6% due to detection of E. coli.

Data from 1984 to 2017 (n=9884)

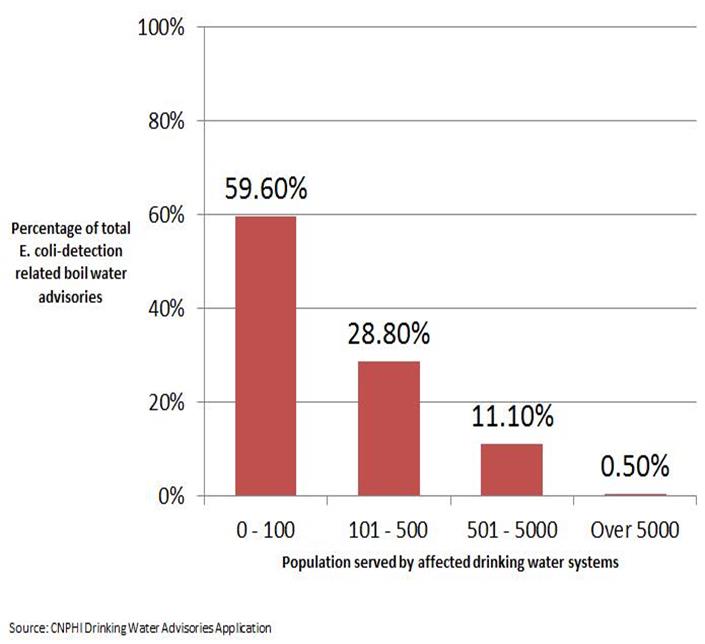

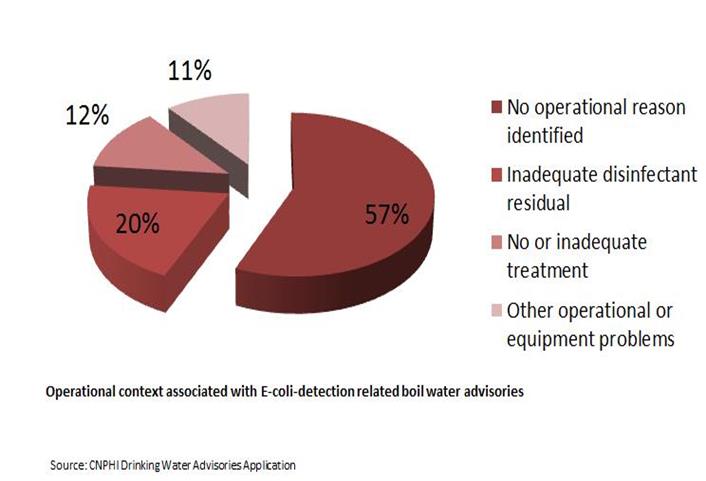

Over 99% of the 594 boil water advisories associated with the detection of E. coli occurred in small drinking water systems (see Figure 2), and were almost equally split between surface water and ground water sources (see Figure 3) (Health Canada, 2018g). More than half of these advisories were issued without any additional operational context recorded (see Figure 4), which may indicate that they were issued solely in response to a positive E. coli test during routine sampling. Overall, the data support the evidence that small drinking water systems face increased contamination risk. The data also highlight the importance of monitoring for operational parameters in addition to conducting regular E. coli testing when confirming the quality of the drinking water supply.

Figure 2 - Text Equivalent

Figure 2 is a bar chart that shows the distribution, by population served, of drinking water systems affected by boil water advisories (BWAs) issued due to the detection of E. coli. Of the affected drinking water systems, 59.6% serve 0-100 people, 28.8% serve 101-500 people, 11.1% serve 501-5000 people and 0.5% serve over 5000 people.

*Data from 1984 to 2017 (n=9884)

Figure 3 - Text Equivalent

Figure 3 is a pie chart showing the source water used by drinking water systems affected by E. coli detection-related boil water advisories. The proportions show 48% use surface water, 46% use ground water and 6% use other sources including blended (surface and ground water), hauled water and cisterns.

*Data from 1984 to 2017 (n=9884)

Figure 4 - Text Equivalent

Figure 4 is a pie chart showing the operational context associated with E. coli detection-related boil water advisories: 57% do not identify any operational reason, 20% note inadequate disinfectant residual, 12% note no or inadequate treatment and 11% note other operational or equipment-related problems.

*Data from 1984 to 2017 (n=9884)
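The proportions quoted in this section can be cross-checked with simple arithmetic. The following is a minimal sketch in Python, using only the figures reported above; the variable and function names are illustrative, and treating the three categories of 5000 people or fewer as "small systems" is an assumption made here to match the "over 99%" statement.

```python
# Figures quoted in the text above (Health Canada, 2018g).
TOTAL_ADVISORIES = 9884
E_COLI_ADVISORIES = 594

# Figure 2: share of E. coli-related advisories by population served (%).
SHARE_BY_POPULATION = {
    "0-100": 59.6,
    "101-500": 28.8,
    "501-5000": 11.1,
    ">5000": 0.5,
}


def percent(part, whole):
    """Return part as a percentage of whole."""
    return 100.0 * part / whole


# Share of all advisories that cite E. coli detection as the reason.
e_coli_share = percent(E_COLI_ADVISORIES, TOTAL_ADVISORIES)

# Assumption: "small systems" = the categories serving 5000 people or fewer.
small_system_share = sum(
    share for label, share in SHARE_BY_POPULATION.items() if label != ">5000"
)

print(f"E. coli share of all advisories: {e_coli_share:.1f}%")
print(f"Share in systems serving 5000 people or fewer: {small_system_share:.1f}%")
```

Under these assumptions the two values round to about 6% and 99.5%, consistent with the statements in the text.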

5.0 Analytical methods

All analyses for E. coli should be carried out as directed by the responsible drinking water authority. In many cases, this authority will recommend or require the use of accredited laboratories. In some cases, it may be necessary to use other means to analyze samples in a timely manner, such as on-site testing using commercial test kits by trained operators. It is important to use validated or standardized methods to make correct and timely public health decisions. When purchasing laboratory services or selecting analytical methods for analysis to be performed in-house, water utilities should consult with the analytical laboratory or manufacturer on issues of method sensitivity, specificity and turnaround time. To ensure reliable results, a quality assurance (QA) program, which incorporates quality control (QC) practices, should be in place. Analyses conducted using test kits should be performed according to the manufacturer's instructions.

5.1 Culture-based methods

Standardized methods available for the detection of E. coli in drinking water are summarized in Table 1. Methods that target E. coli are based on the presence of the β-D-glucuronidase enzyme. This is a distinguishing enzyme that is found in the vast majority of E. coli isolates. The uidA gene, which encodes the β-D-glucuronidase enzyme, is present in > 97% of E. coli isolates (Feng et al., 1991; Martins et al., 1993; Maheux et al., 2009). The gene may also be found in a low proportion of Shigella and Salmonella strains and in some strains of other bacterial species, but is rarely present in other coliforms (Feng et al., 1991; Fricker et al., 2008, 2010; Maheux et al., 2008, 2017). Although E. coli serotype O157:H7 and some Shigella strains do carry nucleotide sequences for the uidA gene, most isolates do not exhibit enzyme activity (Feng and Lampel, 1994; Maheux et al., 2011). Detection methods also take advantage of biochemical characteristics specific to E. coli and use media additives and incubation temperatures to inhibit the growth of background microorganisms. All of the methods listed in Table 1 are capable of detecting total coliforms and simultaneously differentiating E. coli.

When confirmation is required, there are numerous ways to differentiate E. coli from other coliforms and other bacterial species. Biochemical tests for differentiating members of the family Enterobacteriaceae, including E. coli, and commercial media and identification kits for verifying E. coli are available (APHA et al., 2017). E. coli confirmation can also be done by subjecting coliform-positive samples to media that test for the β-D-glucuronidase enzyme (APHA et al., 2017). The use of multiple biochemical tests for confirmation will improve the accuracy of the identification (Maheux et al., 2008).

Table 1. Standardized methods for the detection of E. coli in drinking water

| Organization - Method | Media | Results format | Total coliforms detected (Y/N) | Turnaround time |
|---|---|---|---|---|
| Membrane filtration | | | | |
| SM 9222 J [a]; U.S. EPA - N/A [b, c] | m-ColiBlue24® broth | P-A, C | Y | 24 h |
| SM 9222 K [a]; U.S. EPA 1604 [b, c] | MI agar or broth | P-A, C | Y | 24 h |
| ISO 9308-1:2014 [d]; U.S. EPA - N/A [b, c] | Chromocult® Coliform Agar | P-A, C | Y | 21-24 h |
| Enzyme substrate | | | | |
| SM 9223 B [a]; ISO 9308-2:2012 [d]; ISO 9308-3:1998 [d]; U.S. EPA - N/A [b, c] | Colilert® medium; Colilert-18® medium; Colisure® medium | P-A, C | Y | 18-24 h |
| U.S. EPA - N/A [b, c] | E*Colite® medium | P-A | Y | 28-48 h |
| U.S. EPA - N/A [b, c] | Readycult® Coliforms 100 broth | P-A | Y | 24 h |
| U.S. EPA - N/A [b, c] | Modified Colitag™ medium | P-A | Y | 16-22 h |
| U.S. EPA - N/A [b, c] | Tecta™ EC/TC medium | P-A | Y | 18 h |

The results of E. coli test methods are presented as either presence-absence (P-A) or counts (C) of bacteria. P-A testing does not provide any information on the concentration of organisms in the sample. The quantitation of organisms is sometimes used to assess the extent of the contamination, and as such is considered a benefit of the more quantitative methods. For decision-making, the focus is the positive detection of E. coli, regardless of quantity; as the guideline for E. coli in drinking water is none per 100 mL, qualitative results are sufficient for protecting public health.

5.1.1 Accuracy of detection methods

There are limitations in the sensitivity of culture-based methods which rely upon the expression of the β-D-glucuronidase enzyme for a positive identification of E. coli (Maheux et al., 2008; Zhang et al., 2015). There is also variability in the performance of commercialized E. coli methods observed during laboratory testing of isolates from different settings (e.g., clinical, environmental), water types and geographic locations (Bernasconi et al., 2006; Olstadt et al., 2007; Maheux et al., 2008, 2017). Factors that can affect the ability of culture-based methods to detect E. coli include: the natural variability in the percentage of β-D-glucuronidase negative strains in the source population (Feng and Lampel, 1994; Maheux et al., 2008); the composition of the media (Hörman and Hänninen, 2006; Olstadt et al., 2007; Maheux et al., 2008, 2017; Fricker et al., 2010); the concentration of the organisms and their physiological state (Ciebin et al., 1995; Maheux et al., 2008; Zhang et al., 2015); and water quality characteristics (Olstadt et al., 2007).

Standardized methods have been validated against established reference methods to ensure that the method performs to an acceptable level (APHA et al., 2017). Nevertheless, there is a need to continually evaluate the efficacy of E. coli test methods, and to improve their sensitivity and specificity. The accuracy of future methods may be improved with advanced techniques combining biochemical characteristics with molecular tests (Maheux et al., 2008). Other useful strategies can include efforts by approval bodies to conduct regular reviews of screening criteria and method performances, and continued work by manufacturers towards optimizing their medium formulations (Zhang et al., 2015). Criteria for consideration when designing studies for the evaluation of microbiological methods are discussed in other publications (Boubetra et al., 2011; APHA et al., 2017; Duygu and Udoh, 2017).

5.2 Molecular methods

Given the limitations associated with culture-based methods for detecting E. coli (e.g., required time of analysis, lack of universality of the β-D-glucuronidase enzyme signal, their inability to detect viable-but-not-culturable [VBNC] organisms), molecular-based detection methods continue to be of interest (Martins et al., 1993; Heijnen and Medema, 2009; Mendes Silva and Domingues, 2015). No molecular methods for detecting E. coli in drinking water have been standardized or approved for drinking water compliance monitoring.

Polymerase chain reaction (PCR)-based detection methods are the most commonly described molecular methods for the detection of microorganisms in water (Maheux et al., 2011; Gensberger et al., 2014; Krapf et al., 2016). In recent years, the number of techniques available has increased considerably and the costs associated with their use have been significantly reduced (Mendes Silva and Domingues, 2015). However, the most significant challenge associated with PCR analysis of drinking water samples remains the need for method sensitivity at very low concentrations of the target organism. Descriptions of the different types of molecular methods explored for the detection of E. coli in water sources are available elsewhere (Botes et al., 2013; Mendes Silva and Domingues, 2015). At present, the limits of detection reported for the vast majority of methods encountered in the literature are higher than the sensitivity limit of 1 E. coli per 100 mL required for drinking water analysis (Heijnen and Medema, 2009; Maheux et al., 2011; Gensberger et al., 2014; Mendes Silva and Domingues, 2015; Krapf et al., 2016). Additional research is needed to further optimize the sensitivity of molecular detection methods for E. coli and to verify the acceptability of these procedures for routine assessment of drinking water quality. More work is needed to develop standardized molecular methods that can be used accurately, reliably, easily and affordably.

5.3 Rapid online monitoring methods

The need for more rapid and frequent monitoring of E. coli in drinking water distribution systems has led researchers to explore online water quality sensor technologies capable of detecting E. coli contamination in real time. Some of the sensors investigated have been based on measurements of electrical impedance (Kim et al., 2015), immunofluorescence (Golberg et al., 2014) or water quality parameters such as conductivity, particle counts, pH, turbidity, UV absorbance and total organic carbon, alone or in combination (Miles et al., 2011; Ikonen et al., 2017). The most significant challenge facing potential rapid online detection methods is the need for sensitivity at very low E. coli concentrations (Kim et al., 2015; Ikonen et al., 2017). Additional obstacles include requirements for equipment, user training and data interpretation (Golberg et al., 2014; Ikonen et al., 2017). As with the molecular methods of detection, more work is needed before rapid methods are suitable for widespread use.

6.0 Sampling for E. coli

6.1 Sample collection

Proper procedures for collecting samples must be observed to ensure that the samples are representative of the water being examined. Detailed instructions on the collection of samples for bacteriological analysis are given in APHA et al. (2017). Generally, samples for microbiological testing should be packed with ice packs but protected from direct contact with them to prevent freezing. Packing the sample with loose ice is not recommended as it may contaminate the sample. During transport, samples should be kept cool but unfrozen at temperatures between 4 and 10°C (Payment et al., 2003; APHA et al., 2017). Commercial devices are available for verifying that the proper transport temperatures are being achieved. During the summer and winter months, additional steps may be required to maintain the optimal temperature of samples while in transport. These steps may include adding additional ice packs, or communicating with couriers to ensure that the cooler will not be stored in areas where freezing or excessive heating could occur.

To avoid unpredictable changes in the bacterial numbers of the sample, E. coli samples should always be analyzed as soon as possible after collection. Where on-site facilities are available or when an accredited laboratory is within an acceptable travel distance, analysis of samples within 6-8 hours is suggested (Payment et al., 2003; APHA et al., 2017). Ideally, for E. coli analysis of drinking water samples, the holding time between the collection of the sample and the beginning of its examination should not exceed 30 h (APHA et al., 2017).

In remote areas, holding times of up to 48 hours may be unavoidable. Researchers studying the effects of sample holding time on total coliform concentrations in samples stored at 5°C have reported average declines as high as 14% in samples held for 24 hours compared to 6 hours (McDaniels et al., 1985; Ahammed, 2003). In other studies, increasing the holding time to 30 or 48 hours did not result in significant reductions in E. coli concentrations or result in fewer E. coli detections for the majority of samples analyzed (Pope et al., 2003; Bushon et al., 2015; Maier et al., 2015). In two of these studies (Pope et al., 2003; Maier et al., 2015), E. coli concentrations were greater than 10 CFU/100 mL in all samples, making it difficult to assess the effects of holding time on samples with lower concentrations. Studies by McDaniels et al. (1985) and Ferguson (1994) have indicated that holding times can be more critical for total coliforms and thermotolerant coliforms when concentrations are low.

The implications of an extended holding time should be discussed with the responsible drinking water authority in the affected jurisdiction. Specifically, it is important to consider the likelihood and impact of reporting a false negative result due to declines in the bacterial indicator count during extended storage. This should be weighed against the impact of samples being rejected or not being submitted at all if a water utility is unable to have them delivered to the laboratory within the required holding time (Maier et al., 2015).
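The holding time and transport temperature values discussed in this section can be expressed as a simple screening check. The following Python sketch is illustrative only: the function names and category labels are hypothetical, the thresholds (8, 30 and 48 hours; 4 to 10°C) are taken from the text above, and the sketch does not represent acceptance criteria, which are set by the responsible drinking water authority and the analytical laboratory.

```python
def classify_holding_time(hours):
    """Classify a sample holding time against the values cited in Section 6.1.

    The category labels are illustrative, not regulatory terms.
    """
    if hours < 0:
        raise ValueError("holding time cannot be negative")
    if hours <= 8:
        return "within the suggested 6-8 h window"
    if hours <= 30:
        return "within the 30 h ideal maximum"
    if hours <= 48:
        return "extended: discuss with the responsible authority"
    return "beyond the 48 h range discussed in this section"


def transport_temperature_ok(temp_c):
    """Return True if the transport temperature is between 4 and 10 degrees C."""
    return 4.0 <= temp_c <= 10.0


for hours in (6, 24, 40, 60):
    print(hours, "h:", classify_holding_time(hours))
print("7 degrees C acceptable:", transport_temperature_ok(7.0))
```

Recording the classification alongside the measured duration and temperature of storage keeps the information available when results are interpreted.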

When long holding times are anticipated, on-site testing with commercialized test methods (see Table 1) in combination with appropriate training and quality control procedures offers a reliable, standardized analytical option for verification and compliance monitoring. Water utilities should first consult with the responsible drinking water authority about the acceptability of this practice and any other requirements that may apply. The use of a delayed incubation procedure is another option for water utilities encountering challenges in shipping samples within the recommended time frame. A delayed incubation procedure for total coliforms has been described and verification methods can be used to confirm the presence of E. coli from positive samples (APHA et al., 2017).

Samples should be labelled according to the requirements specified by the responsible drinking water authority and the analytical laboratory. In most cases, much of the information and the sample bottle identification number are recorded on the accompanying submission forms and, in cases where samples are collected for legal purposes, chain-of-custody paperwork. When analysis will be delayed, it is particularly important to record the duration and temperature of storage, as this information should be taken into consideration when interpreting the results. Water utilities may wish to consult with the analytical laboratory for specific requirements regarding the submission of samples.

To obtain a reliable estimate of the number of E. coli in treated drinking water, a minimum volume of 100 mL of water should be analyzed. Smaller volumes or dilutions may be more appropriate for testing samples from waters that are high in particulates or where high numbers of bacteria might be expected. Analysis of larger drinking water volumes can increase both the sensitivity and the reliability of testing. Large volume (20 L) sample analysis using a capsule filter was useful in improving the detection of total coliforms (E. coli was not detected) in distribution system samples during field trials at three drinking water utilities (Hargy et al., 2010). More research in the area of large volume sample testing is needed to assess the added value of results and if applicable, to optimize methodologies for routine use by water utilities. Additional statistical and field work are needed that simultaneously consider the parameters of sample volume, monitoring frequency, detection method, false/true positives and negatives, and cost.
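The effect of sample volume on the chance of detection can be illustrated with a simple statistical sketch. The Python example below assumes that organisms are randomly and independently distributed in the water (a Poisson model) and that the method recovers every organism present; both are simplifying assumptions introduced here for illustration and are not drawn from the studies cited above. The function name and the example concentration are hypothetical.

```python
import math


def detection_probability(conc_per_100_ml, volume_ml):
    """Probability that a sample contains at least one organism.

    Assumes a Poisson distribution of organisms in the water and perfect
    recovery by the analytical method (both are simplifying assumptions).
    """
    if conc_per_100_ml < 0 or volume_ml < 0:
        raise ValueError("concentration and volume must be non-negative")
    expected_count = conc_per_100_ml * volume_ml / 100.0
    return 1.0 - math.exp(-expected_count)


# Hypothetical contamination level: 0.1 organisms per 100 mL (1 per litre).
for volume_ml in (100, 1000, 20000):
    p = detection_probability(0.1, volume_ml)
    print(f"{volume_ml} mL sample: P(detect) = {p:.3f}")
```

Under these assumptions, a larger sample volume raises the probability of capturing at least one organism at low concentrations. Clumping of cells, incomplete recovery and non-culturable organisms are not captured by this model.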

6.2 Sampling frequency considerations

When determining sampling frequency requirements for municipal-scale systems, the application of a universal sampling formula is not possible due to basic differences in factors such as source water quality, adequacy and capacity of treatment, and size and complexity of the distribution system (WHO, 2004). Instead, the sampling frequency should be determined by the responsible drinking water authority after due consideration of local conditions, such as variations in raw water quality and history of the treated water quality. As part of operational and verification monitoring in a drinking water quality management system using a source-to-tap or water safety plan approach, water leaving a treatment plant and within the distribution system should be tested at least weekly for E. coli and daily for disinfectant residual and turbidity.

A guide for the minimum number of E. coli samples required is provided in Table 2. In a distribution system, the number of samples for bacteriological testing should be increased in accordance with the size of the population served.

Table 2. Guide for the minimum number of E. coli samples required

| Population served | Minimum number of samples per month* |
|---|---|
| Up to 5000 | 4 |
| 5000-90 000 | 1 per 1000 persons |
| 90 000+ | 90 + (1 per 10 000 persons) |

* The samples should be taken at regular intervals throughout the month. For example, if four samples are required per month, samples should be taken on a weekly basis.
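The guide in Table 2 can be sketched as a short calculation. The Python example below is illustrative and rests on assumptions that are not stated in the table: a population of exactly 5000 is placed in the first category, fractional results are rounded up, and for populations above 90 000 the "1 per 10 000 persons" term is applied to the total population rather than to the portion above 90 000. The function name is hypothetical, and the responsible drinking water authority determines the actual requirement.

```python
def min_samples_per_month(population):
    """Estimate the minimum monthly number of E. coli samples from Table 2.

    Assumptions (not stated in the table): exactly 5000 falls in the first
    category, fractions round up, and the 90 000+ rule uses total population.
    """
    if population < 0:
        raise ValueError("population cannot be negative")
    if population <= 5000:
        return 4
    if population <= 90000:
        # 1 sample per 1000 persons, rounded up (integer ceiling division).
        return -(-population // 1000)
    # 90 samples plus 1 per 10 000 persons, rounded up.
    return 90 + -(-population // 10000)


for pop in (3000, 20000, 90000, 120000):
    print(pop, "persons:", min_samples_per_month(pop), "samples per month")
```

Under the alternative reading, in which the additional samples apply only to the population above 90 000, the last line of the function would use `population - 90000` instead.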

Sampling frequency in municipal and residential-scale systems may vary with jurisdiction but should include times when the risk of contamination of the source water is greatest, such as during spring thaw, heavy rains, or dry periods. Associations have been observed between climate factors (peak rainfall periods, warmer temperatures) and E. coli detections for small groundwater systems that are susceptible to fecal contamination (Valeo et al., 2016; Invik et al., 2017). Extreme weather events, such as intense rainfall, flash floods, hurricanes, droughts and wildfires can have significant water quality impacts and are expected to increase in frequency and severity with climate change (Thomas et al., 2006; Nichols et al., 2009; Wallender et al., 2014; Khan et al., 2015; Staben et al., 2015). Water utilities impacted by such events should consider conducting additional sampling during and/or following their occurrence.

New or rehabilitated wells should also be sampled before their first use to confirm acceptable bacteriological quality. In municipal systems, increased sampling may be considered when changes occur from the normal operations of the water treatment system.

It must be emphasized that the frequencies suggested in Table 2 are only general guides. In many systems, the water leaving the treatment plant and within the distribution system will be tested for E. coli well in excess of these minimum requirements. The general practice of basing sampling requirements on the population served recognizes that smaller water supply systems have a smaller population at risk. However, small water supplies have more facility deficiencies and are responsible for more disease outbreaks than are large ones (Schuster et al., 2005; Wallender et al., 2014; Murphy et al., 2016a, 2016b). Emphasis on regular physical inspections of the water supply system and monitoring of critical processes and activities is important for all small drinking water supplies and particularly for those where testing at the required frequency may be impractical (Robertson et al., 2003; WHO, 2005).

Supplies with a history of high-quality water may use greater process control and regular inspections as a means for reducing the number of samples taken for bacteriological analysis. Alternatively, supplies with variable water quality may be required to sample on a more frequent basis.

Even at the recommended sampling frequencies for E. coli, there are limitations that should be considered when interpreting the sampling results. Simulation studies have shown that it is very difficult to detect a contamination event in a distribution system unless the contamination occurs in a water main, a reservoir, at the treatment plant, or for a long duration at a high concentration (Speight et al., 2004; van Lieverloo et al., 2007). Some improvement in detection capability was seen when sampling programs were designed with the lowest standard deviation in time between sampling events (van Lieverloo et al., 2007), such as samples collected every 5 days regardless of weekends and holidays. This highlights the importance of operational monitoring of critical processes and use of multiple microbiological indicators in verification monitoring.
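To illustrate what "lowest standard deviation in time between sampling events" means in practice, the following minimal sketch compares two hypothetical schedules: one that samples every 5 days regardless of weekends, and one that starts from the same plan but moves any weekend sample to the following Monday. The start date, the number of samples and the helper names are assumptions made for illustration only; they are not taken from van Lieverloo et al. (2007).

```python
from datetime import date, timedelta
from statistics import pstdev


def interval_stdev(sample_dates):
    """Standard deviation (in days) of the gaps between consecutive samples."""
    ordered = sorted(sample_dates)
    gaps = [(later - earlier).days for earlier, later in zip(ordered, ordered[1:])]
    return pstdev(gaps)


def shift_off_weekend(day):
    """Move a Saturday or Sunday sample to the following Monday."""
    # date.weekday(): Monday is 0, Saturday is 5, Sunday is 6
    if day.weekday() == 5:
        return day + timedelta(days=2)
    if day.weekday() == 6:
        return day + timedelta(days=1)
    return day


start = date(2019, 1, 7)  # arbitrary start date (a Monday), for illustration

# Schedule A: one sample every 5 days, regardless of weekends and holidays.
every_five_days = [start + timedelta(days=5 * i) for i in range(12)]

# Schedule B: same plan, but weekend samples are pushed to Monday.
weekdays_only = [shift_off_weekend(day) for day in every_five_days]

print("every 5 days :", interval_stdev(every_five_days))
print("weekdays only:", round(interval_stdev(weekdays_only), 2))
```

Schedule A has evenly spaced samples, so its interval standard deviation is zero. Schedule B leaves gaps of uneven length, which is the kind of irregularity the simulation studies associate with poorer detection.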

Disinfectant residual tests should be conducted when bacteriological samples are taken. Daily sampling recommendations for disinfectant residual and turbidity testing may not apply to supplies served by groundwater sources in which disinfection is practised to increase the safety margin. Further information on monitoring for turbidity can be found in the guideline technical document for turbidity (Health Canada, 2012c). Other parameters can be used alongside E. coli as part of the water quality verification process. These include indicators of general microbiological water quality (total coliforms, heterotrophic plate counts) and additional indicators of fecal contamination (enterococci) (WHO, 2005, 2014). More information can be obtained from the corresponding Health Canada documents (Health Canada, 2012a, 2018b, 2018f).

6.3 Location of sampling points

In municipal-scale systems, the location of sampling points must be selected or approved by the responsible drinking water authority. The sampling locations selected may vary depending on the monitoring objectives. For example, fixed sampling points may be used to help establish a history of water quality within the distribution system, whereas sampling at different locations throughout the distribution system may provide more coverage of the system. A combination of both types of monitoring is common (Narasimhan et al., 2004). Speight et al. (2004) have published a methodology for developing customized distribution system sampling designs that incorporate rotating sample point locations.

Sample sites should include the point of entry into the distribution system and points in the distribution system that are representative of the quality of water supplied to the consumer. If the water supply is obtained from more than one source, the location of sampling sites should ensure that water from each source is periodically sampled. Distribution system drawings can provide an understanding of water flows and directions and can aid in the selection of appropriate sampling locations. Focus should be placed on potential problem areas, or areas where changes in operational conditions may be expected to occur. Areas with long water detention times (e.g., dead ends), areas of depressurization, reservoirs, locations downstream of storage tanks, areas farthest from the treatment plant, and areas with a poor previous record are suggested sampling sites. Rotating among sampling sites throughout the distribution system may also improve the probability of detecting water quality issues (WHO, 2014).

In residential-scale systems that provide drinking water to the public, samples are generally collected from the locations recommended by the responsible drinking water authority.

7.0 Treatment technology and distribution system considerations

The primary goal of treatment is to reduce the presence of disease-causing organisms and associated health risks to an acceptable or safe level. This can be achieved through one or more treatment barriers involving physical removal and/or inactivation. A source-to-tap approach, including watershed or wellhead protection, optimized treatment barriers and a well-maintained distribution system, is a universally accepted approach to reduce waterborne pathogens in drinking water (O'Connor, 2002b; CCME, 2004; WHO, 2012). Monitoring for E. coli as part of the verification of the quality of the treated and distributed water is an important part of this approach.

7.1 Municipal-scale

An array of options is available for treating source waters to provide high-quality drinking water. The type and the quality of the source water will dictate the degree of treatment necessary. In general, minimum treatment of supplies derived from surface water sources or groundwater under the direct influence of surface waters (GUDI) should include adequate filtration (or technologies providing an equivalent log reduction credit) and disinfection. As most surface waters and GUDI supplies are subject to fecal contamination, treatment technologies should be in place to achieve a minimum 3-log (99.9%) removal and/or inactivation of Giardia and Cryptosporidium, and a minimum 4-log (99.99%) removal and/or inactivation of enteric viruses. Subsurface sources should be evaluated to determine whether the supply is susceptible to contamination by enteric viruses and protozoa. Those sources determined to be susceptible to viruses should achieve a minimum 4-log removal and/or inactivation of viruses. A jurisdiction may consider it acceptable for a groundwater source not to be disinfected if the assessment of the drinking water system has confirmed that the risk of enteric virus presence is minimal (Health Canada, 2018e).
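The log-reduction figures quoted above map directly to percentages: an n-log reduction leaves a fraction 10^(-n) of the organisms, so 3 log corresponds to 99.9% and 4 log to 99.99%. The short sketch below shows the conversion in both directions; the function names are illustrative and do not come from the guideline.

```python
import math


def percent_from_log(log_reduction):
    """Percent removal and/or inactivation for a given log reduction."""
    surviving_fraction = 10 ** (-log_reduction)
    return (1 - surviving_fraction) * 100


def log_from_percent(percent_reduction):
    """Log reduction for a given percent removal and/or inactivation (< 100)."""
    surviving_fraction = 1 - percent_reduction / 100
    return -math.log10(surviving_fraction)


for n in (2, 3, 4):
    print(f"{n}-log reduction = {percent_from_log(n):.2f}%")
```

The same conversion applies to the 99% (2-log) inactivation used as the basis for the CT values later in this section.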

In systems with a distribution system, a disinfectant residual should be maintained at all times. It is essential that the removal and inactivation targets are achieved before drinking water reaches the first consumer in the distribution system. Adequate process control measures and operator training are also required to ensure the effective operation of treatment barriers at all times (Smeets et al., 2009; AWWA, 2011).

Overall, the evidence shows that enteric bacterial pathogens are much more sensitive to chlorination than Giardia, Cryptosporidium, and numerous enteric viruses, and more sensitive to UV inactivation than numerous enteric viruses (Health Canada, 2018d, 2018e). Therefore, water that is treated to meet the guidelines for enteric viruses and enteric protozoa should have an acceptable bacteriological quality, including achieving E. coli concentrations of none detectable per 100 mL of water leaving the treatment plant.

7.1.1 Physical removal

Physical removal of indicator organisms (E. coli, total coliforms, enterococci) can be achieved using various technologies, including chemically-assisted, slow sand, diatomaceous earth and membrane filtration or an alternative proven filtration technology. Physical log removals for these indicator organisms reported for several filtration technologies are outlined in Table 3. Reverse osmosis (RO) membranes are expected to be as effective as ultrafiltration based on their molecular weight cut-off (LeChevallier and Au, 2004; Smeets et al., 2006). However, there is currently no method to validate the log removal for RO units (Alspach, 2018).

| Technology (footnote a) | Minimum log removal | Mean log removal | Median log removal | Maximum log removal |
|---|---|---|---|---|
| Conventional filtration | 1.0 | 2.1 | 2.1 | 3.4 |
| Direct filtration | 0.8 | 1.4 | 1.5 | 3.3 |
| Slow sand filtration | 1.2 | 2.7 | 2.4 | 4.8 |
| Microfiltration | Not given | Not given | Not given | 4.3 |
| Ultrafiltration | Not given | >7 | Not given | Not given |

7.1.2 Disinfection

Primary disinfection is required to protect public health by killing or inactivating harmful protozoa, bacteria and viruses, whereas secondary disinfection is used to introduce and maintain a residual in the distribution system. A residual in the distribution system helps control bacterial regrowth and provides an indication of system integrity (Health Canada, 2009). Primary disinfection is typically applied after treatment processes that remove particles and organic matter. This strategy helps to ensure efficient inactivation of pathogens and minimizes the formation of disinfection by-products. It is important to note that when describing microbial disinfection of drinking water, the term "inactivation" is used to indicate that the pathogen is non-infectious and unable to replicate in a suitable host, although it may still be present.

The five disinfectants commonly used in drinking water treatment are free chlorine, monochloramine (chloramine), ozone, chlorine dioxide and UV light. Free chlorine is the most common chemical disinfectant used for primary disinfection because it is widely available, is relatively inexpensive and provides a residual that can also be used for secondary disinfection. Chloramine is much less reactive than free chlorine, has lower disinfection efficiency and is generally restricted to use in secondary disinfection. Ozone and chlorine dioxide are effective primary disinfectants against bacteria, viruses and protozoa, although they are typically more expensive and complicated to implement, particularly for small systems. Ozone decays rapidly after being applied and therefore cannot be used for secondary disinfection. Chlorine dioxide is also not recommended for secondary disinfection because of its relatively rapid decay (Health Canada, 2008a). Through a physical process, UV light provides effective inactivation of bacteria, protozoa and most enteric viruses with the exception of adenovirus, which requires a high dose for inactivation. Similar to ozone and chlorine dioxide, UV light is highly effective for primary disinfection, but an additional disinfectant (usually chlorine or chloramine) needs to be added for secondary disinfection.

7.1.2.1 Chemical disinfection

The efficacy of chemical disinfectants can be predicted based on knowledge of the residual concentration of a specific disinfectant and factors that influence its performance, mainly temperature, pH, contact time and the level of disinfection required (AWWA, 2011). This relationship is commonly referred to as the CT concept, where CT is the product of "C" (the residual concentration of disinfectant, measured in mg/L) and "T" (the disinfectant contact time, measured in minutes) for a specific microorganism under defined conditions (e.g., temperature and pH). To account for disinfectant decay, the residual concentration is usually determined at the exit of the contact chamber rather than using the applied dose or initial concentration. Also, the contact time T is often calculated using a T10 value, which is defined as the detention time at which 90% of the water meets or exceeds the required contact time. The T10 value can be estimated by multiplying the theoretical hydraulic detention time (i.e., tank volume divided by flow rate) by the baffling factor of the contact chamber. The U.S. Environmental Protection Agency (U.S. EPA, 1991) provides baffling factors for sample contact chambers.

Alternatively, a hydraulic tracer test can be conducted to determine the actual contact time under plant flow conditions. Because the T value depends on the hydraulics related to the construction of the treatment installation, improving the hydraulics (i.e., increasing the baffling factor) is a more effective way to achieve CT requirements than increasing the disinfectant dose.
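The CT calculation described above can be sketched as follows: T10 is estimated as the theoretical hydraulic detention time (tank volume divided by flow rate) multiplied by the baffling factor, and CT is the residual concentration at the exit of the contact chamber multiplied by T10. The tank volume, flow rate, residual and baffling factors used here are hypothetical values chosen only to make the arithmetic visible; they are not taken from the guideline or from U.S. EPA (1991).

```python
def t10_minutes(tank_volume_m3, flow_rate_m3_per_min, baffling_factor):
    """Estimate T10 (min) from the theoretical detention time and baffling factor."""
    theoretical_detention_min = tank_volume_m3 / flow_rate_m3_per_min
    return theoretical_detention_min * baffling_factor


def ct_achieved(residual_mg_per_l, t10_min):
    """CT (mg·min/L): residual at the contact chamber exit times T10."""
    return residual_mg_per_l * t10_min


# Hypothetical contact chamber: 500 m3 tank, 10 m3/min flow, 0.5 mg/L residual.
VOLUME_M3 = 500.0
FLOW_M3_PER_MIN = 10.0
RESIDUAL_MG_PER_L = 0.5

# Assumed baffling factors for a poorly and a better baffled chamber.
ct_poor = ct_achieved(RESIDUAL_MG_PER_L, t10_minutes(VOLUME_M3, FLOW_M3_PER_MIN, 0.3))
ct_better = ct_achieved(RESIDUAL_MG_PER_L, t10_minutes(VOLUME_M3, FLOW_M3_PER_MIN, 0.6))

print(f"CT with baffling factor 0.3: {ct_poor:.1f} mg·min/L")
print(f"CT with baffling factor 0.6: {ct_better:.1f} mg·min/L")
```

In this example, raising the baffling factor increases the CT achieved at the same residual concentration, that is, without adding more disinfectant.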

CT values for 99% (2 log) inactivation of E. coli using chlorine, chlorine dioxide, chloramine, and ozone are provided in Table 4. For comparison, CT values for Giardia lamblia and for viruses have also been included. The CT values illustrate the fact that compared with most protozoans and viruses, E. coli are easier to inactivate using the common chemical disinfectants. Table 4 also highlights that chloramine is a much weaker disinfectant than free chlorine, chlorine dioxide or ozone, since much higher concentrations and/or contact times are required to achieve the same degree of inactivation. Consequently, chloramine is not recommended as a primary disinfectant.

In a well-operated treatment system, the CT provided for Giardia or viruses will result in a much greater inactivation than 99% for bacteria. The literature indicates that the enteric bacterial pathogens Salmonella, Campylobacter and E. coli O157:H7 are comparable to non-pathogenic E. coli in terms of their sensitivity to chemical disinfection (Lund, 1996; Rice et al., 1999; Wojcicka et al., 2007; Chauret et al., 2008; Rasheed et al., 2016; Jamil et al., 2017). Published CT values for these pathogens have been limited. Laboratory studies have demonstrated that a 2- to 4-log inactivation of E. coli O157:H7 can be achieved with CT values of <0.3 mg·min/L for free chlorine and <30 mg·min/L for monochloramine (Wojcicka et al., 2007; Chauret et al., 2008). More information on the considerations when selecting a chemical disinfectant can be found in the guideline technical documents for enteric protozoa and enteric viruses (Health Canada, 2018d, 2018e).

| Disinfectant agent | pH | E. coli (footnote a) (mg·min/L) [5°C] | Giardia lamblia (footnote b) (mg·min/L) [5°C] | Viruses (footnote c) (mg·min/L) [5-15°C] |