Enteric Protozoa: Giardia and Cryptosporidium

Organization: Health Canada

Type: Guideline

Published: 2019-04-12

Health Canada, Ottawa, Ontario

April, 2019

Table of Contents

- Part I. Overview and Application

- 1.0 Guideline

- 2.0 Executive summary

- 3.0 Application of the guideline

- Part II. Science and Technical Considerations

- 4.0 Description

- 5.0 Sources and exposure

- 6.0 Analytical methods

- 6.1 Sample collection

- 6.2 Sample filtration and elution

- 6.3 Sample concentration and separation

- 6.4 (Oo)cyst identification

- 6.5 Recovery efficiencies

- 6.6 Assessing viability and infectivity

- 7.0 Treatment technology

- 7.1 Municipal scale

- 7.2 Residential scale

- 8.0 Health effects

- 9.0 Risk assessment

- 10.0 Rationale

- 11.0 References

- Appendix A: Other enteric waterborne protozoa of interest: Toxoplasma gondii, Cyclospora cayetanensis, Entamoeba histolytica, and Blastocystis hominis

- Appendix B: Selected outbreaks related to Giardia and Cryptosporidium

- Appendix C: List of acronyms

Part I. Overview and Application

1.0 Guideline

Where treatment is required for enteric protozoa, the guideline for Giardia and Cryptosporidium in drinking water is a health-based treatment goal of a minimum 3 log removal and/or inactivation of cysts and oocysts. Depending on the source water quality, a greater log removal and/or inactivation may be required. Treatment technologies and source water protection measures known to reduce the risk of waterborne illness should be implemented and maintained if source water is subject to faecal contamination or if Giardia or Cryptosporidium have been responsible for past waterborne outbreaks.

2.0 Executive summary

Protozoa are a diverse group of microorganisms. Most are free-living organisms that can reside in fresh water and pose no risk to human health. Some enteric protozoa are pathogenic and have been associated with drinking water-related outbreaks. The main protozoa of concern in Canada are Giardia and Cryptosporidium. They may be found in water following direct or indirect contamination by the faeces of humans or other animals. Person-to-person transmission is a major route of exposure for both Giardia and Cryptosporidium.

Health Canada recently completed its review of the health risks associated with enteric protozoa in drinking water. This guideline technical document reviews and assesses identified health risks associated with enteric protozoa in drinking water. It evaluates new studies and approaches and takes into consideration the methodological limitations for the detection of protozoa in drinking water. Based on this review, the drinking water guideline is a health-based treatment goal of a minimum 3 log reduction of enteric protozoa.

2.1 Health effects

The health effects associated with exposure to Giardia and Cryptosporidium (oo)cysts, like those of other pathogens, depend upon features of the host, pathogen and environment. The immune status of the host, virulence of the strain, infectivity and viability of the cyst or oocyst, and the degree of exposure are all key determinants of infection and illness. Infection with Giardia or Cryptosporidium can result in both acute and chronic health effects.

Theoretically, a single cyst of Giardia would be sufficient to cause infection. However, studies have only demonstrated infection being caused by more than a single cyst, and the number of cysts needed is dependent on the virulence of the particular strain. Typically, Giardia is non-invasive and results in asymptomatic infections. Symptomatic giardiasis can result in nausea, diarrhea (usually sudden and explosive), anorexia, an uneasiness in the upper intestine, malaise and occasionally low-grade fever or chills. The acute phase of the infection commonly resolves spontaneously, and organisms generally disappear from the faeces. Some patients (e.g., children) suffer recurring bouts of the disease, which may persist for months or years.

As is the case for Giardia and other pathogens, a single organism of Cryptosporidium can potentially cause infection, although studies have only demonstrated infection being caused by more than one organism. Individuals infected with Cryptosporidium are more likely to develop symptomatic illness than those infected with Giardia. Symptoms include watery diarrhea, cramping, nausea, vomiting (particularly in children), low-grade fever, anorexia and dehydration. The duration of infection depends on the condition of the immune system. Immunocompetent individuals usually carry the infection for a maximum of 30 days. In immunocompromised individuals, infection can be life-threatening and can persist throughout the immunosuppression period.

2.2 Exposure

Giardia cysts and Cryptosporidium oocysts can survive in the environment for extended periods of time, depending on the characteristics of the water. They have been shown to withstand a variety of environmental stresses, including freezing and exposure to seawater. (Oo)cysts are commonly found in Canadian surface waters. The sudden and rapid influx of these microorganisms into surface waters, for which available treatment may not be adequate, is likely responsible for the increased risk of exposure through drinking water. (Oo)cysts have also been detected at low concentrations in groundwater supplies; contamination usually results from the proximity of the well to contamination sources, inadequate filtration in some geological formations, or improper design and/or maintenance of the well.

Giardia and Cryptosporidium are common causes of waterborne disease outbreaks and have been linked to both inadequately treated surface water and untreated well water. Giardia is the most commonly reported intestinal protozoan in Canada, North America and the world.

2.3 Analysis and treatment

A risk management approach, such as the source-to-tap approach or a water safety plan approach, is the best method to reduce enteric protozoa and other waterborne pathogens in drinking water. This type of approach requires a system assessment to characterize the source water, describe the treatment barriers that are in place, identify the conditions that can result in contamination, and implement the control measures needed to mitigate risks. One aspect of source water characterization is routine and targeted monitoring for Giardia and Cryptosporidium. Monitoring of source water for protozoa can be targeted by using information about sources of faecal contamination, together with historical data on rainfall, snowmelt, river flow and turbidity, to help identify the conditions that are likely to lead to peak concentrations of (oo)cysts. A validated method that allows for the simultaneous detection of these protozoa is available. Where monitoring for Giardia and Cryptosporidium is not feasible (e.g., small community water supplies), identifying the conditions that can result in contamination can provide guidance on necessary risk management measures to implement (e.g., source water protection, adequate treatment, operational monitoring, standard operating procedures and contingency plans).

Once the source has been characterized, pathogen reduction targets should be established and effective treatment barriers should be in place to achieve safe levels in the treated drinking water. In general, all water supplies derived from surface water sources or groundwater under the direct influence of surface waters (GUDI) should include adequate filtration (or equivalent technologies) and disinfection. The combination of physical removal (e.g., filtration) and inactivation barriers (e.g., ultraviolet light disinfection) is the most effective way to reduce protozoa in drinking water, because of their resistance to commonly used chlorine-based disinfectants.

The absence of indicator bacteria (e.g., Escherichia coli, total coliforms) does not necessarily indicate the absence of enteric protozoa. The application and control of a source-to-tap or water safety plan approach, including process and compliance monitoring (e.g., turbidity, disinfection conditions, E. coli), is important to verify that the water has been adequately treated and is therefore of an acceptable microbiological quality. In the case of untreated groundwater, testing for indicator bacteria is useful in assessing the potential for faecal contamination, which may include enteric protozoa.

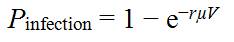

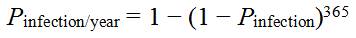

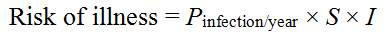

2.4 Quantitative microbial risk assessment

Quantitative microbial risk assessment (QMRA) is a tool that uses source water quality data, treatment barrier information and pathogen-specific characteristics to estimate the burden of disease associated with exposure to pathogenic microorganisms in drinking water. QMRA is generally used for two purposes. It can be used to set pathogen reduction targets during the development of drinking water quality guidelines, such as is done in this document. It can also be used to prioritize risks on a site-specific basis as part of a source-to-tap or water safety plan approach.
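
The chain of inputs described above (source water quality, treatment barriers, pathogen-specific characteristics) can be illustrated with a minimal sketch. It is not the model used in this document: it assumes an exponential dose-response form, which is one common choice, and every function name and numerical value (source concentration, log reduction, daily consumption, the dose-response parameter `r`) is a hypothetical placeholder chosen for illustration. The further step from infection risk to burden of disease is omitted.

```python
import math


def daily_dose(source_conc_per_l: float, log_reduction: float, litres_per_day: float) -> float:
    """Mean number of organisms ingested per day after treatment."""
    return source_conc_per_l * 10.0 ** (-log_reduction) * litres_per_day


def daily_infection_probability(dose: float, r: float) -> float:
    """Assumed exponential dose-response model: P = 1 - exp(-r * dose)."""
    # expm1 keeps precision when r * dose is very small.
    return -math.expm1(-r * dose)


def annual_infection_probability(p_daily: float, days: int = 365) -> float:
    """Probability of at least one infection over `days` independent daily exposures."""
    # Equivalent to 1 - (1 - p_daily) ** days, written to stay accurate for tiny p_daily.
    return -math.expm1(days * math.log1p(-p_daily))


if __name__ == "__main__":
    # All values below are hypothetical placeholders, not guideline values.
    source_conc = 0.1      # organisms per litre in source water
    treatment_logs = 3.0   # log reduction provided by treatment
    consumption = 1.0      # litres of unboiled tap water per day
    r = 0.02               # dose-response parameter (placeholder)

    dose = daily_dose(source_conc, treatment_logs, consumption)
    p_day = daily_infection_probability(dose, r)
    p_year = annual_infection_probability(p_day)
    print(f"daily dose = {dose:.2e} organisms")
    print(f"daily infection probability = {p_day:.2e}")
    print(f"annual infection probability = {p_year:.2e}")
```

Changing `treatment_logs` in the sketch shows how a pathogen reduction target can be explored: each additional log of reduction lowers the estimated dose tenfold.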

Specific enteric protozoa whose characteristics make them a good representative of all similar pathogenic protozoa are reference protozoan pathogens. It is assumed that controlling the reference protozoan would ensure control of all other similar protozoa of concern. Giardia lamblia and Cryptosporidium parvum have been selected as the reference protozoa for this risk assessment because of their high prevalence rates, potential to cause widespread disease, resistance to chlorine disinfection and the availability of dose-response models.

2.5 International considerations

Drinking water guidelines, standards and/or guidance from other national and international organizations may vary due to the age of the assessments as well as differing policies and approaches.

Various organizations have established guidelines or standards for enteric protozoa in drinking water. The U.S. EPA generally requires drinking water systems to achieve a 3 log removal or inactivation of Giardia, and a minimum 2 log removal or inactivation of Cryptosporidium. More treatment may be required for Cryptosporidium depending on the results of Cryptosporidium monitoring in the water source. The World Health Organization recommends providing QMRA-based performance targets as requirements for the reduction of enteric protozoa. Neither the European Union nor the Australian National Health and Medical Research Council has established a guideline value or standard for enteric protozoa in drinking water.

3.0 Application of the guideline

Note: Specific guidance related to the implementation of drinking water guidelines should be obtained from the appropriate drinking water authority in the affected jurisdiction.

Exposure to Giardia and Cryptosporidium should be reduced by implementing a risk management approach to drinking water systems, such as the source-to-tap or a water safety plan approach. These approaches require a system assessment that involves: characterizing the water source; describing the treatment barriers that prevent or reduce contamination; highlighting the conditions that can result in contamination; and identifying control measures to mitigate those risks through the treatment and distribution systems to the consumer.

3.1 Source water assessments

Source water assessments should be part of routine system assessments. They should include: the identification of potential sources of human and animal faecal contamination in the watershed/aquifer; potential pathways and/or events (low to high risk) by which protozoa can make their way into the source water and affect water quality; and conditions likely to lead to peak concentrations. Ideally, they should also include routine monitoring for Giardia and Cryptosporidium in order to establish a baseline, followed by long-term targeted monitoring to identify peak concentrations during specific events (e.g., rainfall, snowmelt, low flow conditions for rivers receiving discharges from wastewater treatment plants). Where monitoring for Giardia and Cryptosporidium is not feasible (e.g., small community water supplies), other approaches, such as implementing a source-to-tap or water safety plan approach, can provide guidance on identifying and implementing necessary risk management measures (e.g., source water protection, adequate treatment, operational monitoring, standard operating procedures and contingency plans).

Where monitoring is possible, sampling sites and frequencies can be targeted by using information about sources of faecal contamination, together with historical data on rainfall, snowmelt, river flow and turbidity. Source water assessments should also consider the "worst-case" scenario for that source water. It is important to understand the potential faecal inputs to the system to determine "worst-case" scenarios, as they will be site-specific. For example, there may be a short period of poor source water quality following a storm. This short-term degradation in water quality may in fact embody most of the risk in a drinking water system. Collecting and analyzing source water samples for Giardia and Cryptosporidium can provide important information for determining the level of treatment and mitigation (risk management) measures that should be in place to reduce the concentration of (oo)cysts to an acceptable level.

Subsurface sources should be evaluated to determine if the supply is vulnerable to contamination by enteric protozoa (i.e., GUDI) and other enteric pathogens. These assessments should include, at a minimum, a hydrogeological assessment, an evaluation of well integrity and a survey of activities and physical features in the area. Chlorophyll-containing algae are considered unequivocal evidence of surface water. Jurisdictions that require microscopic particulate analysis (MPA) as part of their GUDI assessment should ensure chlorophyll-containing algae are a principal component of any MPA test. Subsurface sources determined to be GUDI should achieve a minimum 3 log removal and/or inactivation of enteric protozoa. Subsurface sources that have been assessed as not vulnerable to contamination by enteric protozoa, if properly classified, should not have protozoa present. However, all groundwater sources will have a degree of vulnerability and should be periodically reassessed. It is important that subsurface sources be properly classified as numerous outbreaks have been linked to the consumption of untreated well water contaminated with enteric protozoa and/or other enteric pathogens.

3.2 Appropriate treatment barriers

As most surface waters and GUDI supplies are subject to faecal contamination, treatment technologies should be in place to achieve a minimum 3 log (99.9%) removal and/or inactivation of Giardia and Cryptosporidium. In many surface water sources, a greater log reduction may be necessary.
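
The relationship between a log reduction and the corresponding percentage (3 log = 99.9%) can be illustrated with a short calculation. The following is a minimal sketch, not part of the guideline; the function names and the example source concentration are assumptions chosen for illustration.

```python
import math


def log_reduction_to_percent(log_reduction: float) -> float:
    """Convert a log10 reduction into the percentage of (oo)cysts removed or inactivated."""
    return (1.0 - 10.0 ** (-log_reduction)) * 100.0


def percent_to_log_reduction(percent: float) -> float:
    """Convert a percentage reduction (0 <= percent < 100) into a log10 reduction."""
    if not 0.0 <= percent < 100.0:
        raise ValueError("percent must be in the range [0, 100)")
    return -math.log10(1.0 - percent / 100.0)


def treated_concentration(source_conc: float, log_reduction: float) -> float:
    """Concentration remaining after treatment, in the same units as source_conc."""
    return source_conc * 10.0 ** (-log_reduction)


if __name__ == "__main__":
    # Hypothetical source water concentration, for illustration only.
    source = 200.0  # cysts/100 L
    for logs in (3.0, 4.0, 5.0):
        print(
            f"{logs:.0f} log = {log_reduction_to_percent(logs):.3f}% reduction; "
            f"{source:g} cysts/100 L -> {treated_concentration(source, logs):g} cysts/100 L"
        )
```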

Log reductions can be achieved through physical removal processes, such as filtration, and/or inactivation processes, such as UV light disinfection. Generally, minimum treatment of supplies derived from surface water or GUDI sources should include adequate filtration (or technologies providing an equivalent log reduction credit) and disinfection. The appropriate type and level of treatment should take into account potential fluctuations in water quality, including short-term water quality degradation, and variability in treatment performance. Pilot testing or other optimization processes may be useful for determining treatment variability.
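
Because log reductions are expressed on a logarithmic scale, the reductions from barriers operating in series are generally added together. The sketch below illustrates that bookkeeping under the simplifying assumption that the barriers act independently. The function names, barrier names and credit values are hypothetical placeholders, not credits assigned by this guideline or by any jurisdiction.

```python
from typing import Mapping


def total_log_reduction(barrier_credits: Mapping[str, float]) -> float:
    """Sum the log10 reduction credits of treatment barriers applied in series."""
    for name, credit in barrier_credits.items():
        if credit < 0:
            raise ValueError(f"log credit for {name!r} must be non-negative")
    return sum(barrier_credits.values())


def meets_target(barrier_credits: Mapping[str, float], target_log: float = 3.0) -> bool:
    """Check a treatment train against a log reduction target (default: minimum 3 log)."""
    return total_log_reduction(barrier_credits) >= target_log


# Hypothetical treatment train; the credit values are illustrative only.
example_train = {
    "filtration (physical removal)": 2.5,
    "UV disinfection (inactivation)": 1.0,
}

if __name__ == "__main__":
    print("total log reduction:", total_log_reduction(example_train))
    print("meets 3 log target:", meets_target(example_train))
```

A site whose source water quality calls for a greater reduction could pass a higher `target_log` to the same check.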

Private well owners (i.e., semi-public supply or individual household) should assess the vulnerability of their well to faecal contamination to determine if the water should be treated. Well owners should have an understanding of the well construction, type of aquifer material surrounding the well and location of the well in relation to sources of faecal contamination (e.g., septic systems, sanitary sewers, animal waste). General guidance on well construction, maintenance, protection and testing is typically available from provincial/territorial jurisdictions. If a private well owner is not able to determine if their well is vulnerable to faecal contamination, the responsible drinking water authority in the affected jurisdiction should be contacted to identify possible treatment options.

3.3 Appropriate maintenance and operation of distribution systems

Contamination of distribution systems with enteric protozoa has been responsible for waterborne illness. As a result, maintaining the physical/hydraulic integrity of the distribution system and minimizing negative- or low-pressure events are key components of a source-to-tap or water safety plan approach. Distribution system water quality should be regularly monitored (e.g., microbial indicators, disinfectant residual, turbidity, pH), operations/maintenance programs should be in place (e.g., water main cleaning, cross-connection control, asset management) and strict hygiene should be practiced during all water main construction (e.g., repair, maintenance, new installation) to ensure that drinking water is transported to the consumer with minimum loss of quality.

Part II. Science and Technical Considerations

4.0 Description

Protozoa are a diverse group of eukaryotic, typically unicellular, microorganisms. The majority of protozoa are free-living organisms that can reside in fresh water and pose no risk to human health. However, some protozoa are pathogenic to humans. These protozoa fall into two functional groups: enteric protozoa and free-living protozoa. Human infections caused by free-living protozoa (e.g., Naegleria, Acanthamoeba spp.) are generally the result of contact during recreational bathing (or domestic uses of water other than drinking); as such, this group of protozoa is addressed in the Guidelines for Canadian Recreational Water Quality (Health Canada, 2012a). Enteric protozoa, on the other hand, have been associated with several drinking water-related outbreaks, and drinking water serves as a significant route of transmission for these organisms. A discussion of enteric protozoa is therefore presented here.

Enteric protozoa are common parasites in the gut of humans and other mammals. They, like enteric bacteria and viruses, can be found in water following direct or indirect contamination by the faeces of humans and other animals. These microorganisms can be transmitted via drinking water and have been associated with several waterborne outbreaks in North America and elsewhere (Schuster et al., 2005; Karanis et al., 2007; Baldursson and Karanis, 2011; Efstratiou et al., 2017). The ability of this group of microorganisms to produce (oo)cysts that are extremely resistant to environmental stresses and commonly used chlorine-based disinfectants has facilitated their ability to spread and cause illness.

The enteric protozoa that are most often associated with waterborne disease in Canada are Giardia and Cryptosporidium. These protozoa are commonly found in surface waters: some strains are highly pathogenic, can survive for long periods of time in the environment and are highly resistant to chlorine-based disinfection. Thus, they are the focus of the following discussion. A brief description of other enteric protozoa of human health concern (i.e., Toxoplasma gondii, Cyclospora cayetanensis, Entamoeba histolytica, and Blastocystis hominis) is provided in Appendix A. It is important to note that throughout this document, the common names for enteric protozoa are used for clarity. Proper scientific nomenclature is used only when necessary to accurately present scientific findings.

4.1 Giardia

Giardia is a flagellated protozoan parasite (Phylum Metamonada, Subphylum Trichozoa, Superclass Eopharyngia, Class Trepomonadea, Subclass Diplozoa, Order Giardiida, Family Giardiidae) (Cavalier-Smith, 2003; Plutzer et al., 2010). It was first identified in human stool by Antonie van Leeuwenhoek in 1681 (Boreham et al., 1990). However, it was not recognized as a human pathogen until the 1960s, after community outbreaks and its identification in travellers (Craun, 1986; Farthing, 1992). Illness associated with this parasite is known as giardiasis.

4.1.1 Life cycle

Giardia inhabits the small intestines of humans and other animals. The trophozoite, or feeding stage, lives mainly in the duodenum but is often found in the jejunum and ileum of the small intestine. Trophozoites (9–21 µm long, 5–15 µm wide and 2–4 µm thick) have a pear-shaped body with a broadly rounded anterior end, two nuclei, two slender median rods, eight flagella in four pairs, a pair of darkly staining median bodies and a large ventral sucking disc (cytostome). Trophozoites are normally attached to the surface of the intestinal villi, where they are believed to feed primarily upon mucosal secretions. After detachment, the binucleate trophozoites form cysts (encyst) and divide within the original cyst, so that four nuclei become visible. Cysts are ovoid, 8–18 µm long by 5–15 µm wide (U.S. EPA, 2012), with two or four nuclei and visible remnants of organelles. Environmentally stable cysts are passed out in the faeces, often in large numbers. A complete life cycle description can be found elsewhere (Adam, 2001; Carranza and Lujan, 2010).

4.1.2 Species

The taxonomy of the genus Giardia continuously changes as data on the isolation and identification of new species and genotypes, strain phylogeny and host specificity become available. The current taxonomy of the genus Giardia is based on the species definition proposed by Filice (1952), who defined three species: G. duodenalis (syn. G. intestinalis, G. lamblia), G. muris and G. agilis, based on the shape of the median body, an organelle composed of microtubules that is most easily observed in the trophozoite. Other species have subsequently been described on the basis of cyst morphology and molecular analysis. Currently, six Giardia species are recognized (Table 1), although recent work suggests Giardia duodenalis (syn. G. intestinalis, G. lamblia) assemblages A and B may be distinct species and should be renamed (Prystajecky et al., 2015). Three synonyms (G. lamblia, G. intestinalis and G. duodenalis) have been and continue to be used interchangeably in the literature to describe the Giardia isolates from humans, although this species is capable of infecting a wide range of mammals. This species will be referred to as G. lamblia for this document. Molecular characterization of this species has demonstrated the existence of genetically distinct assemblages: assemblages A and B infect humans and other mammals, whereas the remaining assemblages (C, D, E, F and G) have not yet been isolated from humans and appear to have restricted host ranges (and likely represent different species or groupings) (Adam, 2001; Thompson, 2004; Thompson and Monis, 2004; Xiao et al., 2004; Smith et al., 2007; Plutzer et al., 2010). Due to the genetic diversity within assemblages A and B, these groupings have also been characterized into sub-assemblages (Cacciò and Ryan, 2008; Plutzer et al., 2010).

| Species (assemblage) | Major host(s) |
|---|---|
| G. agilis | Amphibians |
| G. ardeae | Birds |
| G. lamblia, syn. G. intestinalis, syn. G. duodenalis | |
| (A) | Humans, livestock, other mammals |
| (B) | Humans |
| (C) | Dogs |
| (D) | Dogs |
| (E) | Cattle, other hoofed livestock |
| (F) | Cats |
| (G) | Rats |
| G. microti | Muskrats, voles |
| G. muris | Rodents |
| G. psittaci | Birds |

In addition to genetic dissimilarities, the variants of G. lamblia also exhibit phenotypic differences, including differential growth rates and drug sensitivities (Homan and Mank, 2001; Read et al., 2002). The genetic differences have been exploited as a means of distinguishing human-infective Giardia from other strains or species (Amar et al., 2002; Cacciò et al., 2002, 2010; Read et al., 2004); however, the applicability of these methods to analysis of Giardia within water has been limited (see section 6.6). Thus, at present, it is necessary to consider that any Giardia cysts found in water are potentially infectious to humans.

4.2 Cryptosporidium

Cryptosporidium is a protozoan parasite (Phylum Apicomplexa, Class Gregarinomorphea, Subclass Cryptogregaria; Ryan et al., 2016). The genus Cryptosporidium is currently the sole member of the newly described Cryptogregaria subclass. The illness caused by this parasite is known as cryptosporidiosis. It was first recognized as a potential human pathogen in 1976 in a previously healthy three-year-old child (Nime et al., 1976). A second case of cryptosporidiosis occurred two months later in an individual who was immunosuppressed as a result of drug therapy (Meisel et al., 1976). The disease became best known in immunosuppressed individuals exhibiting the symptoms now referred to as acquired immunodeficiency syndrome, or AIDS (Hunter and Nichols, 2002).

4.2.1 Life cycle

The recognition of Cryptosporidium as a human pathogen led to increased research into the life cycle of the parasite and an investigation of the possible routes of transmission. Cryptosporidium has a multi-stage life cycle. The entire life cycle takes place in a single host and evolves in six major stages, including both sexual and asexual stages: 1) excystation, where sporozoites are released from an excysting oocyst; 2) schizogony (syn. merogony), where asexual reproduction takes place; 3) gametogony, the stage at which gametes are formed; 4) fertilization of the macrogametocyte by a microgamete to form a zygote; 5) oocyst wall formation; and 6) sporogony, where sporozoites form within the oocyst (Current, 1986). A complete life cycle description and diagram can be found elsewhere (Smith and Rose, 1990; Hijjawi et al., 2004; Fayer and Xiao, 2008). Syzygy, a sexual reproduction process that involves association of the pre-gametes end to end or laterally prior to the formation of gametes, was described in two species of Cryptosporidium, C. parvum and C. andersoni, providing new information regarding Cryptosporidium's biology (life cycle) and transmission (Hijjawi et al., 2002; Rosales et al., 2005).

As a waterborne pathogen, the most important stage in Cryptosporidium's life cycle is the round, thick-walled, environmentally stable oocyst, 4–6 µm in diameter. The nuclei of sporozoites can be stained with fluorogenic dyes such as 4′,6-diamidino-2-phenylindole (DAPI). Upon ingestion by humans, the parasite completes its life cycle in the digestive tract. Ingestion initiates excystation of the oocyst and releases four sporozoites, which adhere to and invade the enterocytes of the gastrointestinal tract (Spano et al., 1998; Pollok et al., 2003). The resulting intracellular parasitic vacuole contains a feeding organelle along with the parasite, which is protected by an outer membrane. The outer membrane is derived from the host cell. The sporozoite undergoes asexual reproduction (schizogony), releasing merozoites that spread the infection to neighbouring cells. Sexual multiplication (gametogony) then takes place, producing either microgametes ("male") or macrogametes ("female"). Microgametes are then released to fertilize macrogametes and form zygotes. A small proportion (20%) of zygotes fail to develop a cell wall and are termed "thin-walled" oocysts. These forms rupture after the development of the sporozoites, but prior to faecal passage, thus maintaining the infection within the host. The majority of the zygotes develop a thick, environmentally resistant cell wall and four sporozoites to become mature oocysts, which are then passed in the faeces.

4.2.2 Species

Our understanding of the taxonomy of the genus Cryptosporidium is continually being updated. Cryptosporidium was first described by Tyzzer (1907), when he isolated the organism, which he named Cryptosporidium muris, from the gastric glands of mice. Tyzzer (1912) found a second isolate, which he named C. parvum, in the intestine of the same species of mice. This species has since been renamed to C. tyzzeri (Ryan et al., 2014). At present, 29 valid species of Cryptosporidium have been recognized (Table 2) (Ryan et al., 2014; Zahedi et al., 2016). The main species of Cryptosporidium associated with illness in humans are C. hominis and C. parvum. They account for more than 90% of human cryptosporidiosis cases (Bouzid et al., 2013). The majority of remaining human cases are caused by C. meleagridis and C. cuniculus. A minority of cases have been attributed to C. ubiquitum, C. canis, C. felis, and C. viatorum. Other species have been found in rare instances. Although many species of Cryptosporidium have not yet been found to cause illness in humans, it should not be inferred that they are incapable of causing illness in humans, only that to date, they have not been implicated in sporadic cases or outbreaks of cryptosporidiosis. These findings have important implications for communities whose source water may be contaminated by faeces from animal sources (see Table 2). The epidemiological significance of these genotypes is still unclear, but findings suggest that certain genotypes are adapted to humans and transmitted (directly or indirectly) from person to person. Thus, at present, all Cryptosporidium oocysts found in water are usually considered potentially infectious to humans, although genotyping information may be used to further inform risk management decisions.

| Species (genotype) | Major host | Human health concern [footnote a] |
|---|---|---|
| C. andersoni | Cattle | + |
| C. baileyi | Poultry | - |
| C. bovis | Cattle | + |
| C. canis | Dogs | ++ |
| C. cuniculus | Rabbits | ++ |
| C. erinacei | Hedgehogs and horses | + |
| C. fayeri | Marsupials | + |
| C. felis | Cats | ++ |
| C. fragile | Toads | - |
| C. galli | Finches, chickens | - |
| C. hominis (genotype H, I or 1) | Humans, monkeys | +++ |
| C. huwi | Fish | - |
| C. macropodum | Marsupials | - |
| C. meleagridis | Turkeys, humans | ++ |
| C. molnari | Fish | - |
| C. muris | Rodents | + |
| C. parvum (genotype C, II or 2) | Cattle, other ruminants, humans | +++ |
| C. rubeyi | Squirrel | - |
| C. ryanae | Cattle | - |
| C. scophthalmi | Turbot | - |
| C. scrofarum | Pigs | + |
| C. serpentis | Reptiles | - |
| C. suis | Pigs | + |
| C. tyzzeri | Rodents | + |
| C. ubiquitum | Ruminants, rodents, primates | ++ |
| C. varanii | Lizards | - |
| C. viatorum | Humans | ++ |
| C. wrairi | Guinea pigs | - |
| C. xiaoi | Sheep, goats | + |

Table 2 footnotes

- Footnote a: Human health concern is based solely on the frequency of detection of the species from human cryptosporidiosis cases; the designation may change as new cases of cryptosporidiosis are identified.

- +++ Most frequently associated with human illness

- ++ Has caused human illness, but infrequently

- + Has caused human illness, but only a few very rare cases (very low risk)

- - Has never been isolated from humans

In addition to the 29 species of Cryptosporidium that have been identified, over 40 genotypes of Cryptosporidium, for which a strain designation has not been made, have also been proposed among various animal groups, including rodents, marsupials, reptiles, fish, wild birds and primates (Fayer, 2004; Xiao et al., 2004; Feng et al., 2007; Smith et al., 2007; Fayer et al., 2008; Xiao and Fayer, 2008; Ryan et al., 2014). Research suggests that these genotypes vary with respect to their development, drug sensitivity and disease presentation (Chalmers et al., 2002; Xiao and Lal, 2002; Thompson and Monis, 2004; Xiao et al., 2004).

5.0 Sources and exposure

5.1 Giardia

5.1.1 Sources

Human and other animal faeces are major sources of Giardia. Giardiasis has been shown to be endemic in humans and in over 40 other species of animals, with prevalence rates ranging from 1% to 5% in humans, 10% to 100% in cattle, and 1% to 20% in pigs (Olson et al., 2004; Pond et al., 2004; Thompson, 2004; Thompson and Monis, 2004). Giardia cysts are excreted in large numbers in the faeces of infected humans and other animals (both symptomatic and asymptomatic). Infected cattle, for example, have been shown to excrete up to one million (10⁶) cysts per gram of faeces (O'Handley et al., 1999; Ralston et al., 2003; O'Handley and Olson, 2006). Other mammals, such as beavers, dogs, cats, muskrats and horses, have also been shown to shed human-infective species of Giardia in their faeces (Davies and Hibler, 1979; Hewlett et al., 1982; Erlandsen and Bemrick, 1988; Erlandsen et al., 1988; Traub et al., 2004, 2005; Eligio-García et al., 2005). Giardia can also be found in bear, bird and other animal faeces, but it is unclear whether these strains are pathogenic to humans (refer to section 5.1.3). Cysts are easily disseminated in the environment and are transmissible via the faecal–oral route. This includes transmission through faecally contaminated water (directly, or indirectly through food products), as well as direct contact with infected humans or animals (Karanis et al., 2007; Plutzer et al., 2010).

Giardia cysts are commonly found in sewage and surface waters and occasionally in groundwater sources and treated water.

Surface water

Table 3 highlights a selection of studies that have investigated the occurrence of Giardia in surface waters in Canada. Typically, Giardia concentrations in surface waters range from 2 to 200 cysts/100 L (0.02 to 2 cysts/L). Concentrations as high as 8,700 cysts/100 L (87 cysts/L) have been reported and were associated with record spring runoff, highlighting the importance of event-based sampling (Gammie et al., 2000). Recent studies in Canada have also investigated the species of Giardia present in surface waters. G. lamblia assemblages A and B were the most common variants detected (Edge et al., 2013; Prystajecky et al., 2014). This has also been found internationally (Cacciò and Ryan, 2008; Alexander et al., 2014; Adamska, 2015).

| Province | Site/watershed | Frequency of positive samples | Unit of measure | Giardia concentration (cysts/100 L) (footnote b) | Reference |
|---|---|---|---|---|---|
| National survey | Various | 245/1,173 | Maximum | 230 | Wallis et al., 1996 |
| Alberta | Not available | 1/1 | Single sample | 494 | LeChevallier et al., 1991a |
| Alberta | North Saskatchewan River, Edmonton | N/A | Annual geometric mean | 8–193 | Gammie et al., 2000 |
| | | | Maximum | 2,500 (footnote c) | |
| Alberta | North Saskatchewan River, Edmonton | N/A | Annual geometric mean | 98 | EPCOR, 2005 |
| | | | Maximum | 8,700 | |
| British Columbia | Black Mountain Irrigation District | 24/27 | Geometric mean | 60.4 | Ong et al., 1996 |
| | Vernon Irrigation District | 68/70 | | 26 | |
| | Black Mountain Irrigation District | 24/27 | Range | 4.6–1,880 | |
| | Vernon Irrigation District | 68/70 | | 2–114 | |
| British Columbia | Seymour | 12/49 | Average (footnote d) | 3.2 | Metro Vancouver, 2009 |
| | Capilano | 24/49 | | 6.3 | |
| | Coquitlam | 13/49 | | 3.8 | |
| | Seymour | | Maximum | 8.0 | |
| | Capilano | | | 20.0 | |
| | Coquitlam | | | 12.0 | |
| British Columbia | Salmon River | 38/49 | Median | 32 | Prystajecky et al., 2014 |
| | Coghlan Creek | 59/65 | | 107 | |
| | Salmon River | | Maximum | 730 | |
| | Coghlan Creek | | | 3,800 | |
| Nova Scotia | Collins Park | 1/26 | Maximum | 130 | Nova Scotia Environment, 2013 |
| | East Hants | 2/12 | | 10 | |
| | Stewiacke | 3/12 | | 140 | |
| | Stellarton | 4/12 | | 200 | |
| | Tatamagouche | 0/12 | | <10 | |
| | Bridgewater | 0/12 | | <10 | |
| | Middle Musquodoboit | 4/25 | | 1,067 | |
| Ontario | Grand River | 14/14 | Median | 71 | Van Dyke et al., 2006 |
| | | | Maximum | 486 | |
| Ontario | Grand River watershed | 101/104 | Median | 80 | Van Dyke et al., 2012 |
| | | | Maximum | 5,401 | |
| Ontario | Ottawa River | N/A | Average | 16.8 | Douglas, 2009 |
| Ontario | Lake Ontario | | Maximum | | Edge et al., 2013 |
| | Water Treatment Plant Intakes | | | | |
| | WTP1 | 17/46 | | 70 | |
| | WTP2 | 4/35 | | 12 | |
| | WTP3 | 6/43 | | 18 | |
| | Humber River | 32/41 | | 540 | |
| | Credit River | 19/35 | | 90 | |
| Quebec | ROS Water Treatment Plant, Thousand Islands River, Montreal | 4/4 | Geometric mean | 1,376 | Payment and Franco, 1993 |
| | STE Water Treatment Plant, Thousand Islands River, Montreal | 8/8 | | 336 | |
| | REP Water Treatment Plant, Assomption River, Montreal | 4/5 | | 7.23 | |
| Quebec | Saint Lawrence River | N/A | Geometric mean | 200 | Payment et al., 2000 |
| Quebec | 15 surface water sites impacted by urban and agricultural runoff | 191/194 | Medians | 22–423 | MDDELCC, 2016 |
| | | | Maximums | 70–2,278 | |

Table 3 footnotes

- Table 3 footnote a: The studies were selected to show range of concentrations, not as a comprehensive list of all studies in Canada. The sampling and analysis methods employed in these studies varied; as a result, it may not be appropriate to compare cyst concentrations. The viability and infectivity of cysts were rarely assessed; little information is therefore available regarding the potential risk to human health associated with the presence of Giardia in these samples.

- Table 3 footnote b: Units were standardized to cysts/100 L.

- Table 3 footnote c: Associated with heavy spring runoff.

- Table 3 footnote d: Average results are from positive filters only.

The typical range for Giardia concentrations in Canadian surface waters is at the lower end of the range described in an international review (Dechesne and Soyeux, 2007). Dechesne and Soyeux (2007) found that Giardia concentrations in surface waters across North America and Europe ranged from 0.02 to 100 cysts/L, with the highest levels reported in the Netherlands. Source water quality monitoring data (surface and GUDI sources) were also gathered for nine European water sources (in France, Germany, the Netherlands, Sweden and the United Kingdom) and for one Australian source. Overall, Giardia was frequently detected at relatively low concentrations, and levels ranged from 0.01 to 40 cysts/L. An earlier survey by Medema et al. (2003) revealed that concentrations of cysts in raw and treated domestic wastewater (i.e., secondary effluent) typically ranged from 5,000 to 50,000 cysts/L and from 50 to 500 cysts/L, respectively.
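The Canadian values above are tabulated in cysts/100 L, while the international reviews report cysts/L, so comparing them requires a factor-of-100 conversion. The following is a minimal sketch of that arithmetic using figures quoted in the text; the function and variable names are illustrative assumptions and do not come from the guideline.

```python
def per_100l_to_per_l(conc_per_100l: float) -> float:
    """Convert a concentration in cysts/100 L to cysts/L."""
    return conc_per_100l / 100.0


def per_l_to_per_100l(conc_per_l: float) -> float:
    """Convert a concentration in cysts/L to cysts/100 L."""
    return conc_per_l * 100.0


# Typical Canadian surface water range quoted in the text (cysts/100 L).
typical_low = per_100l_to_per_l(2)
typical_high = per_100l_to_per_l(200)

# Highest reported Canadian concentration quoted in the text (cysts/100 L).
record_runoff = per_100l_to_per_l(8700)

# International range from Dechesne and Soyeux (2007), reported in cysts/L.
intl_low_per_100l = per_l_to_per_100l(0.02)
intl_high_per_100l = per_l_to_per_100l(100)

print(f"Typical Canadian range: {typical_low:g} to {typical_high:g} cysts/L")
print(f"Record runoff value: {record_runoff:g} cysts/L")
print(f"International range: {intl_low_per_100l:g} to {intl_high_per_100l:g} cysts/100 L")
```

Expressed in the same units, the typical Canadian range (2 to 200 cysts/100 L) sits at the lower end of the international range, consistent with the comparison made above.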

Groundwater

There is a limited amount of information on groundwater contamination with Giardia in Canada and elsewhere. The available studies discuss various types of sources that emanate from the subsurface. Most of these studies use the term "groundwater", although it is clear from the descriptions provided by the authors that the water sources would be classified as GUDI or surface water (e.g., infiltration wells, springs). However, for this document, the terminology used by the authors has been maintained.

A review and analysis of enteric pathogens in groundwaters in the United States and Canada (1990–2013), conducted by Hynds et al. (2014), identified 102 studies, of which only 10 investigated the presence of Giardia. Giardia was found in 3 of the 10 studies; none of the positive sites were in Canada. Three studies were conducted in Canada (out of the 10 studies identified). Two of the studies were conducted in Prince Edward Island and one was conducted in British Columbia. The PEI studies included a total of 40 well water samples on dairy and beef farms (Budu-Amoako et al., 2012a, 2012b). None of the samples tested positive for Giardia. The study conducted in BC also did not find any Giardia present in well water samples (Isaac-Renton et al., 1999).

Other published studies have reported the presence of cysts in groundwaters (Hancock et al., 1998; Gaut et al., 2008; Khaldi et al., 2011; Gallas-Lindemann et al., 2013; Sinreich, 2014; Pitkänen et al., 2015). As noted above, many of the sources described in these studies would be classified as GUDI or surface water. Hancock et al. (1998) found that 6% (12/199) of the sites tested were positive for Giardia. Of the positive sites, 83% (10/12) were composed of springs, infiltration galleries and horizontal wells, while the remaining positive sites (2/12) were vertical wells. The same study also reported that contamination was detected intermittently; many sites required multiple samples before Giardia was detected. However, sites that were negative for Giardia in the first sample were not always resampled. This may have led to an underestimation of contamination prevalence. Many of the other studies reported in the literature have focused on vulnerable areas, such as karst aquifers (Khaldi et al., 2011; Sinreich, 2014) or areas where human and animal faecal contamination are more likely to impact the groundwater (i.e., shallow groundwaters, or close proximity to surface water sources or agricultural or human sewage sources; Gaut et al., 2008; Pitkänen et al., 2015). These studies have reported Giardia prevalence rates ranging from none detected in any of the wells (Gaut et al., 2008) to 20% of the wells testing positive for Giardia (Pitkänen et al., 2015). Khaldi et al. (2011) also found that Giardia positive samples increased from 0% under non-pumping conditions to 11% under continuous pumping conditions. Hynds et al. (2014) reported that well design and integrity have a significant impact on the likelihood of detecting enteric protozoa.
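The point about intermittent detection can be illustrated with a simple probability sketch. It assumes, purely for illustration, that each sample from a contaminated site independently has the same chance of testing positive; the 20% per-sample value below is hypothetical and is not taken from Hancock et al. (1998) or any other cited study.

```python
def prob_detect_at_least_once(p_single: float, n_samples: int) -> float:
    """Probability of at least one positive result in n independent samples.

    p_single is the assumed (hypothetical) chance that any one sample from a
    contaminated site tests positive.
    """
    if not 0.0 <= p_single <= 1.0:
        raise ValueError("p_single must be between 0 and 1")
    if n_samples < 0:
        raise ValueError("n_samples must be non-negative")
    return 1.0 - (1.0 - p_single) ** n_samples


# Hypothetical per-sample detection probability for a contaminated site.
P_SINGLE = 0.2

for n in (1, 2, 5, 10):
    p = prob_detect_at_least_once(P_SINGLE, n)
    print(f"{n:2d} sample(s): {p:.0%} chance of at least one positive")
```

Under these assumptions, a single negative sample says little about whether a site is contaminated, which is why not resampling initially negative sites can lead to an underestimate of prevalence.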

The above studies highlight the importance of assessing the vulnerability of subsurface sources to contamination by enteric protozoa to ensure they are properly classified. Subsurface sources that have been assessed as not vulnerable to contamination by enteric protozoa, if properly classified, should not have protozoa present. However, all groundwater sources will have a degree of vulnerability and should be periodically reassessed.

Treated water

Treated water in Canada is rarely tested for the presence of Giardia. When testing has been conducted, cysts are typically not present or are present in very low numbers (Payment and Franco, 1993; Ong et al., 1996; Wallis et al., 1996, 1998; EPCOR, 2005; Douglas, 2009), with some exceptions. In 1997, a heavy spring runoff event in Edmonton, Alberta resulted in the presence of 34 cysts/1,000 L in treated water (Gammie et al., 2000). Cysts have also been detected in treated water derived from unfiltered surface water supplies (Payment and Franco, 1993; Wallis et al., 1996).

5.1.2 Survival

Giardia cysts can survive in the environment from weeks to months (or possibly longer), depending on a number of factors, including the characteristics specific to the strain and of the water, such as temperature. The effect of temperature on survival rates of Giardia has been well studied. In general, as the temperature increases, the survival time decreases. For example, Bingham et al. (1979) observed that Giardia cysts can survive up to 77 days in tap water at 8°C, compared with 4 days at 37°C. DeRegnier et al. (1989) reported a similar effect in river and lake water. This temperature effect is, in part, responsible for peak Giardia cyst prevalence reported in winter months (Isaac-Renton et al., 1996; Ong et al., 1996; Van Dyke et al., 2012). Other factors such as exposure to ultraviolet (UV) light (McGuigan et al., 2006; Heaselgrave and Kilvington, 2011) or predation (Revetta et al., 2005) can also shorten the survival time of Giardia.

The viability of Giardia cysts found in water does not seem to be high. Cysts found in surface waters often have permeable cell walls, as shown by propidium iodide (PI) staining (Wallis et al., 1995), indicating they are likely non-viable. Wallis et al. (1996) found that only approximately 25% of the drinking water samples that were positive for Giardia contained viable cysts using PI staining. Observations by LeChevallier et al. (1991b) also suggest that most of the cysts present in water are non-viable; 40 of 46 cysts isolated from drinking water exhibited "non-viable-type" morphologies (i.e., distorted or shrunken cytoplasm). More recent work in British Columbia also found that the vast majority of cysts detected from routine monitoring of two drinking water reservoirs displayed no internal structures using DAPI staining and differential interference contrast (DIC) microscopy, suggesting they are aged or damaged and, therefore, unlikely to be viable (Metro Vancouver, 2013). Studies have frequently revealed the presence of empty cysts ("ghosts"), particularly in sewage.

5.1.3 Exposure

Person-to-person transmission is by far the most common route of transmission of Giardia (Pond et al., 2004; Thompson, 2004). Persons become infected via the faecal–oral route, either directly (i.e., contact with faeces from a contaminated person, such as children in daycare facilities) or indirectly (i.e., ingestion of contaminated drinking water, recreational water and, to a lesser extent, food). Since the most important source of human infectious Giardia originates from infected people, source waters impacted by human sewage are an important potential route of exposure to Giardia.

Animals may also play an important role in the (zoonotic) transmission of Giardia, although it is not clear to what extent. Cattle have been found to harbour human-infective (assemblage A) Giardia, as have dogs and cats. Assemblage A Giardia genotypes have also been detected in wildlife, including beavers and deer (Plutzer et al., 2010). Although there is some evidence to support the zoonotic transmission of Giardia, most of this evidence is circumstantial or compromised by inadequate controls. Thus, it is not clear how frequently zoonotic transmission occurs or under what circumstances. Overall, these data suggest that, in most cases, animals are not the original source of human-infective Giardia. However, in some circumstances, it is possible that they could amplify zoonotic genotypes present in other sources (e.g., contaminated water). In cattle, for example, the livestock Giardia genotype (assemblage E) predominates (Lalancette et al., 2012); however, cattle are susceptible to infection with human-infective (zoonotic) genotypes of Giardia. Given that livestock, such as calves, infected with Giardia commonly shed between 10⁵ and 10⁶ cysts per gram of faeces, they could play an important role in the transmission of Giardia. Other livestock, such as sheep, are also susceptible to human-infective genotypes. It is possible that livestock could acquire zoonotic genotypes of Giardia from their handlers or from contaminated water sources, although there is also evidence to the contrary. In a study investigating the use of reclaimed wastewater for pasture irrigation, the human-infective genotypes present in the wastewater could not be isolated from the faeces of the grazing animals (Di Giovanni et al., 2006).

The role that wildlife plays in the zoonotic transmission of Giardia is also unclear. Although wildlife, including beavers, can become infected with human-source G. lamblia (Davies and Hibler, 1979; Hewlett et al., 1982; Erlandsen and Bemrick, 1988; Erlandsen et al., 1988; Traub et al., 2004, 2005; Eligio-García et al., 2005) and have been associated with waterborne outbreaks of giardiasis (Kirner et al., 1978; Lopez et al., 1980; Lippy, 1981; Isaac-Renton et al., 1993), the epidemiological and molecular data do not support zoonotic transmission via wildlife as a significant risk for human infections (Hoque et al., 2003; Stuart et al., 2003; Berrilli et al., 2004; Thompson, 2004; Hunter and Thompson, 2005; Ryan et al., 2005a). The data do, however, suggest that wildlife acquire human-infective genotypes of Giardia from sources contaminated by human sewage. As population pressures increase and as more human-related activity occurs in watersheds, the potential for faecal contamination of source waters becomes greater, and the possibility of contamination with human sewage must always be considered. Erlandsen and Bemrick (1988) concluded that Giardia cysts in water may be derived from multiple sources and that epidemiological studies that focus on beavers may be missing important sources of cyst contamination. Some waterborne outbreaks have been traced back to human sewage contamination (Wallis et al., 1998). Ongerth et al. (1995) showed that there is a statistically significant relationship between increased human use of water for domestic and recreational purposes and the prevalence of Giardia in animals and surface water. It is known that beaver and muskrat can be infected with human-source Giardia (Erlandsen et al., 1988), and these animals are frequently exposed to raw or partially treated sewage in Canada. The application of genotyping procedures has provided further proof of this linkage. Thus, it is likely that wildlife and other animals act as a reservoir of human-infective Giardia from sewage-contaminated water and, in turn, amplify concentrations of Giardia cysts in water. If infected animals live upstream from or in close proximity to drinking water treatment plant intakes, they could play an important role in the waterborne transmission of Giardia. Thus, watershed management to control both human and animal faecal inputs is important for disease prevention.

As is the case for livestock and wildlife animals, it is unclear what role domestic animals play in the zoonotic transmission of Giardia. Although dogs and cats are susceptible to infection with zoonotic genotypes of Giardia, few studies have provided direct evidence of transmission between them and humans (Eligio-García et al., 2005; Shukla et al., 2006; Thompson et al., 2008).

5.2 Cryptosporidium

5.2.1 Sources

Humans and other animals, especially cattle, are important reservoirs for Cryptosporidium. Human cryptosporidiosis has been reported in more than 90 countries over six continents (Fayer et al., 2000; Dillingham et al., 2002). Reported prevalence rates of human cryptosporidiosis range from 1 to 20%, with higher rates reported in developing countries (Caprioli et al., 1989; Zu et al., 1992; Mølbak et al., 1993; Nimri and Batchoun, 1994; Dillingham et al., 2002; Cacciò and Pozio, 2006). Livestock, especially cattle, are a significant source of C. parvum (Pond et al., 2004). In a survey of Canadian farm animals, Cryptosporidium was detected in faecal samples from cattle (20%), sheep (24%), hogs (11%) and horses (17%) (Olson et al., 1997). Overall, prevalence rates in cattle range from 1 to 100% and in pigs from 1 to 10% (Pond et al., 2004). Oocysts were more prevalent in calves than in adult animals; conversely, they were more prevalent in mature pigs and horses than in young animals. Infected calves can excrete up to 10⁷ oocysts per gram of faeces (Smith and Rose, 1990) and represent an important source of Cryptosporidium in surface waters (refer to section 5.2.2). Wild ungulates (hoofed animals) and rodents are not a significant source of human-infectious Cryptosporidium (Roach et al., 1993; Ong et al., 1996).

Oocysts are easily disseminated in the environment and are transmissible via the faecal–oral route. Major pathways of transmission for Cryptosporidium include person-to-person, contaminated drinking water, recreational water, food and contact with animals, especially livestock. A more detailed discussion of zoonotic transmission is provided in section 5.2.3.

Cryptosporidium oocysts are commonly found in sewage and surface waters and occasionally in groundwater sources and treated water.

Surface water

Table 4 highlights a selection of studies that have investigated the occurrence of Cryptosporidium in surface waters in Canada.

| Province | Site/watershed | Frequency of positive samples | Unit of measure | Cryptosporidium concentration (oocysts/100 L) (footnote b) | Reference |
|---|---|---|---|---|---|
| National survey | Various | 55/1,173 | Maximum (for most samples) | 0.5 | Wallis et al., 1996 |
| Alberta | Not available | 1/1 | Single sample | 34 | LeChevallier et al., 1991a |
| Alberta | North Saskatchewan River, Edmonton | N/A | Annual geometric mean | 6–83 | Gammie et al., 2000 |
| | | | Maximum | 10,300 (footnote c) | |
| Alberta | North Saskatchewan River, Edmonton | N/A | Annual geometric mean | 9 | EPCOR, 2005 |
| | | | Maximum | 69 | |
| British Columbia | Black Mountain Irrigation District | 14/27 | Geometric mean | 3.5 | Ong et al., 1996 |
| | Vernon Irrigation District | 5/19 | | 9.2 | |
| | Black Mountain Irrigation District | 14/27 | Range | 1.7–44.3 | |
| | Vernon Irrigation District | 5/19 | | 4.8–51.4 | |
| British Columbia | Seymour | 0/49 | Average (footnote d) | 0.0 | Metro Vancouver, 2009 |
| | Capilano | 5/49 | | 2.4 | |
| | Coquitlam | 1/49 | | 2.0 | |
| | Seymour | | Maximum | 0.0 | |
| | Capilano | | | 4.0 | |
| | Coquitlam | | | 2.0 | |
| British Columbia | Salmon River | 36/49 | Median | 11 | Prystajecky et al., 2014 |
| | Coghlan Creek | 36/65 | | 333 | |
| | Salmon River | | Maximum | 126 | |
| | Coghlan Creek | | | 20,600 | |
| Nova Scotia | Collins Park | 1/26 | Maximum | 130 | Nova Scotia Environment, 2013 |
| | East Hants | 0/12 | | <10 | |
| | Stewiacke | 0/12 | | <10 | |
| | Stellarton | 0/12 | | <10 | |
| | Tatamagouche | 0/12 | | <10 | |
| | Bridgewater | 0/12 | | <10 | |
| | Middle Musquodoboit | 0/25 | | <10 | |
| Ontario | Grand River | N/A | Maximum | 2,075 | Welker et al., 1994 |
| Ontario | Grand River | 33/98 | Average | 6.9 | LeChevallier et al., 2003 |
| | | | Maximum | 100 | |
| Ontario | Grand River | 13/14 | Median | 15 | Van Dyke et al., 2006 |
| | | | Maximum | 186 | |
| Ontario | Grand River watershed | 92/104 | Median | 12 | Van Dyke et al., 2012 |
| | | | Maximum | 900 | |
| Ontario | Ottawa River | N/A /53 | Average | 6.2 | Douglas, 2009 |
| Ontario | Lake Ontario | | Maximum | | Edge et al., 2013 |
| | Water Treatment Plant Intakes | | | | |
| | WTP1 | 5/46 | | 40 | |
| | WTP2 | 5/35 | | 3 | |
| | WTP3 | 3/43 | | 1 | |
| | Humber River | 18/41 | | 120 | |
| | Credit River | 21/35 | | 56 | |
| Ontario | South Nation (multiple sites) | 317/674 | Mean | 3.3–170 | Ruecker et al., 2012 |
| Quebec | ROS Water Treatment Plant, Thousand Islands River, Montreal | | Geometric mean | 742 | Payment and Franco, 1993 |
| | STE Water Treatment Plant, Thousand Islands River, Montreal | | | <2 | |
| | REP Water Treatment Plant, Assomption River, Montreal | | | <2 | |
| Quebec | Saint Lawrence River | | Geometric mean | 14 | Payment et al., 2000 |
| Quebec | 15 surface water sites impacted by urban and agricultural runoff | 99/194 | Medians | 2–31 | MDDELCC, 2016 |
| | | | Maximums | 7–150 | |

Table 4 footnotes

- Table 4 footnote a: The sampling and analysis methods employed in these studies varied; as a result, it may not be appropriate to compare oocyst concentrations. The viability and infectivity of oocysts were rarely assessed; little information is therefore available regarding the potential risk to human health associated with the presence of Cryptosporidium in these samples.

- Table 4 footnote b: Units were standardized to oocysts/100 L. However, the text cites concentrations/units as they were reported in the literature.

- Table 4 footnote c: Associated with heavy spring runoff.

- Table 4 footnote d: Average results are from positive filters only.

Typically, Cryptosporidium concentrations in Canadian surface waters range from 1 to 100 oocysts/100 L (0.01 to 1 oocyst/L), although high concentrations have been reported. Concentrations as high as 10,300 oocysts/100 L (103 oocysts/L) were associated with a record spring runoff (Gammie et al., 2000), and as high as 20,600 oocysts/100 L (206 oocysts/L) during a two-year biweekly monitoring program (Prystajecky et al., 2014). These results highlight the importance of both event-based sampling and routine monitoring to characterize a source water. Analysis of data collected in the United States showed that median oocyst densities ranged from 0.005/L to 0.5/L (Ongerth, 2013a).

Recent studies have also investigated the species of Cryptosporidium present in source waters. Two studies in Ontario watersheds reported the frequency of detection of C. parvum and C. hominis, the species most commonly associated with human impacts, at less than 2% of positive samples (Ruecker et al., 2012; Edge et al., 2013). In contrast, a study in British Columbia in a mixed urban-rural watershed reported that around 30% of the species detected were potentially human infective types (Prystajecky et al., 2014). Other studies in Canada report human infective genotypes somewhere between these two levels (Pintar et al., 2012; Van Dyke et al., 2012). These findings are not unique. In a recent research project that genotyped 220 slides previously confirmed to be positive by the immunofluorescence assay (IFA), 10% of the slides contained human genotypes (Di Giovanni et al., 2014).

An international review of source water quality data (surface and GUDI sources) demonstrated that concentrations of Cryptosporidium in source waters across North America and Europe ranged from 0.006 to 250 oocysts/L (Dechesne and Soyeux, 2007). Although this range is large, a closer look at nine European sites and one Australian site showed that, overall, Cryptosporidium was frequently detected at relatively low concentrations, and levels ranged from 0.05 to 4.6 oocysts/L. In an earlier survey of wastewater effluent, Medema et al. (2003) reported concentrations of oocysts in raw and treated domestic wastewater (i.e., secondary effluent) ranging from 1,000 to 10,000 oocysts/L and from 10 to 1,000 oocysts/L, respectively.

Groundwater

There is a limited amount of information on groundwater contamination with Cryptosporidium in Canada and elsewhere. The available studies discuss various types of sources that emanate from the subsurface. Most of these studies use the term "groundwater", although it is clear from the descriptions provided by the authors that the water sources would be classified as GUDI or surface water (e.g., infiltration wells, springs). However, for this document, the terminology used by the authors has been maintained.

Hynds et al. (2014) reviewed groundwater studies in Canada and the United States between 1990 and 2013. Nine studies looked for Cryptosporidium; of these nine studies, only three occurred in Canada. Two of the studies were conducted in Prince Edward Island (Budu-Amoako et al., 2012a, 2012b) and one study was conducted in BC (Isaac-Renton et al., 1999). The study in BC did not find any Cryptosporidium in the community well during the study period. The PEI studies tested 40 well water samples on beef and dairy farms, and 4 of the 40 samples tested positive for Cryptosporidium, at concentrations ranging from 0.1 to 7.2 oocysts/L in 100 L samples. To confirm their findings, the positive wells were retested and both Cryptosporidium and bacterial indicators were found.

Other published studies have reported the presence of oocysts in groundwaters (Welker et al., 1994; Hancock et al., 1998; Gaut et al., 2008; Khaldi et al., 2011; Füchslin et al., 2012; Gallas-Lindemann et al., 2013; Sinreich, 2014). As noted above, many of the sources described in these studies would be classified as GUDI or surface water. Welker et al. (1994) reported the presence of Cryptosporidium in low concentrations in induced infiltration wells located adjacent to the Grand River. Hancock et al. (1998) found that 11% (21/199) of the sites tested were positive for Cryptosporidium. Of the positive sites, 67% (14/21) were composed of springs, infiltration galleries and horizontal wells, and 33% (7/21) were from vertical wells. The same study also reported that contamination was detected intermittently; many sites required multiple samples before Cryptosporidium was detected. However, sites that were negative for Cryptosporidium in the first sample were not always resampled. This may have led to an underestimation of contamination prevalence. Many of the other studies reported in the literature have focused on vulnerable areas, such as karst aquifers (Khaldi et al., 2011; Sinreich, 2014) or areas where human and animal faecal contamination are more likely to impact the groundwater (i.e., shallow groundwaters, or close proximity to surface water sources or agricultural or human sewage sources; Gaut et al., 2008; Füchslin et al., 2012; Gallas-Lindemann et al., 2013). These studies have reported Cryptosporidium prevalence rates ranging from 8% to 15% of tested samples (Gaut et al., 2008; Gallas-Lindemann et al., 2013; Sinreich, 2014) to 100% of samples (from 3 wells) testing positive for Cryptosporidium in a small study in Switzerland (Füchslin et al., 2012). Khaldi et al. (2011) also found that Cryptosporidium positive samples increased from 44% under non-pumping conditions to 100% under pumping conditions. Hynds et al. (2014) reported that well design and integrity have a significant impact on the likelihood of detecting enteric protozoa.
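The percentages attributed to Hancock et al. (1998) in sections 5.1.1 and 5.2.1 follow directly from the site counts given in the text. A short sketch of that arithmetic is shown below; the helper name `percent` and the variable names are illustrative assumptions, and only the counts come from the text.

```python
def percent(part: int, total: int) -> int:
    """Return part/total as a percentage rounded to the nearest whole number."""
    return round(100 * part / total)


# Site counts reported in the text for Hancock et al. (1998).
SITES_TESTED = 199

# Giardia: 12 positive sites, 10 non-vertical sources and 2 vertical wells.
giardia_positive = percent(12, SITES_TESTED)
giardia_non_vertical = percent(10, 12)
giardia_vertical = percent(2, 12)

# Cryptosporidium: 21 positive sites, 14 non-vertical sources and 7 vertical wells.
crypto_positive = percent(21, SITES_TESTED)
crypto_non_vertical = percent(14, 21)
crypto_vertical = percent(7, 21)

print(f"Giardia: {giardia_positive}% of sites positive; "
      f"{giardia_non_vertical}% non-vertical, {giardia_vertical}% vertical wells")
print(f"Cryptosporidium: {crypto_positive}% of sites positive; "
      f"{crypto_non_vertical}% non-vertical, {crypto_vertical}% vertical wells")
```

In both cases most positive sites were springs, infiltration galleries or horizontal wells, the source types most likely to be classified as GUDI or surface water.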

The above studies highlight the importance of assessing the vulnerability of subsurface sources to contamination by enteric protozoa to ensure they are properly classified. Subsurface sources that have been assessed as not vulnerable to contamination by enteric protozoa, if properly classified, should not have protozoa present. However, all groundwater sources will have a degree of vulnerability and should be periodically reassessed.

Treated water

The presence of Cryptosporidium in treated water in Canada is rarely assessed. When testing has been conducted, oocysts are typically not present or are present in very low numbers (Payment and Franco, 1993; Welker et al., 1994; Ong et al., 1996; Wallis et al., 1996; EPCOR, 2005; Douglas, 2009), with some exceptions (Gammie et al., 2000). Oocysts have been detected in treated water derived from unfiltered surface water supplies (Wallis et al., 1996) and after extreme contamination events. For example, in 1997, a heavy spring runoff event in Edmonton, Alberta resulted in the presence of 80 oocysts/1,000 L in treated water (Gammie et al., 2000). During the cryptosporidiosis outbreak in Kitchener-Waterloo, Welker et al. (1994) reported that 2 of 17 filtered water samples contained 0.16 and 0.43 oocysts/1,000 L.

Treated waters have been extensively monitored in other countries. Daily monitoring of finished water in the United Kingdom (between 1999 and 2008) showed that the prevalence of positive samples was as high as 8% but, through improvements to the drinking water systems, this dropped to approximately 1% (Rochelle et al., 2012). The method used for daily monitoring did not provide information on the viability or infectivity of the oocysts. However, genotyping was conducted on a subset of positive samples and it was found that the species of Cryptosporidium most often detected were C. ubiquitum (12.5%), C. parvum (4.2%) and C. andersoni (4.0%) (Nichols et al., 2010).

5.2.2 Survival

Cryptosporidium oocysts have been shown to survive in cold waters (4°C) in the laboratory for up to 18 months (AWWA, 1988). In warmer waters (15°C), Cryptosporidium parvum has been shown to remain viable and infectious for up to seven months (Jenkins et al., 2003). In general, oocyst survival time decreases as temperature increases (Pokorny et al., 2002; King et al., 2005; Li et al., 2010). Robertson et al. (1992) reported that C. parvum oocysts could withstand a variety of environmental stresses, including freezing (viability greatly reduced) and exposure to seawater; however, Cryptosporidium oocysts are susceptible to desiccation. In a laboratory study of desiccation, it was shown that within two hours, only 3% of oocysts were still viable, and by six hours, all oocysts were dead (Robertson et al., 1992).

Despite the common assumption that the majority of oocysts in water are viable, Smith et al. (1993) found that oocyst viability in surface waters is often very low. A study by LeChevallier et al. (2003) reported that 37% of oocysts detected in natural waters were infectious. Additionally, a study by Swaffer et al. (2014) reported that only 3% of the Cryptosporidium detected was infectious. In fact, research has shown that even in freshly shed oocysts, only 5–22% of the oocysts were infectious (Rochelle et al., 2001, 2012; Sifuentes and Di Giovanni, 2007). Although the level of infectivity reported to date is low and is dependent on the infectivity method employed, recent advancements in the cell-culture methodology used for determining Cryptosporidium infectivity have shown significant increases in the level of infectious oocysts (43–74% infectious) (King et al., 2011; Rochelle et al., 2015).
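To illustrate why the infectious fraction matters when interpreting monitoring results, the sketch below scales a total oocyst concentration by the infectious fractions quoted above. It is a simplified illustration only: the function name is hypothetical, the 100 oocysts/100 L input is simply the upper end of the typical Canadian range cited in section 5.2.1, and the fractions came from different studies and infectivity methods, so they are not directly interchangeable.

```python
def infectious_concentration(total_per_100l: float, infectious_fraction: float) -> float:
    """Scale a total oocyst concentration by an assumed infectious fraction."""
    if total_per_100l < 0:
        raise ValueError("concentration must be non-negative")
    if not 0.0 <= infectious_fraction <= 1.0:
        raise ValueError("infectious_fraction must be between 0 and 1")
    return total_per_100l * infectious_fraction


# Upper end of the typical Canadian surface water range (oocysts/100 L).
TOTAL_PER_100L = 100.0

# Infectious fractions quoted in the text for natural waters.
fractions = {
    "LeChevallier et al. (2003)": 0.37,
    "Swaffer et al. (2014)": 0.03,
}

for study, fraction in fractions.items():
    estimate = infectious_concentration(TOTAL_PER_100L, fraction)
    print(f"{study}: about {estimate:.0f} infectious oocysts/100 L")
```

The roughly tenfold spread between the two estimates shows how strongly the assumed infectious fraction influences any interpretation of total oocyst counts.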

Low oocyst viability has also been reported in filtered water. A survey by LeChevallier et al. (1991b) found that, in filtered waters, 21 of 23 oocysts had "non-viable-type" morphology (i.e., absence of sporozoites and distorted or shrunken cytoplasm). In a more recent study of 14 drinking water treatment plants, no infectious oocysts were recovered in the approximately 350,000 L of treated drinking water that was filtered (Rochelle et al., 2012).

5.2.3 Exposure

Direct contact with livestock and indirect contact through faecally contaminated waters are major pathways for transmission of Cryptosporidium (Fayer et al., 2000; Robertson et al., 2002; Stantic-Pavlinic et al., 2003; Roy et al., 2004; Hunter and Thompson, 2005). Cattle are a significant source of C. parvum in surface waters. For example, a weekly examination of creek samples upstream and downstream of a cattle ranch in the BC interior during a 10-month period revealed that the downstream location had significantly higher levels of Cryptosporidium oocysts (geometric mean 13.3 oocysts/100 L, range 1.4–300 oocysts/100 L) compared with the upstream location (geometric mean 5.6/100 L, range 0.5–34.4 oocysts/100 L) (Ong et al., 1996). A pronounced spike was observed in downstream samples following calving in late February. During a confirmed waterborne outbreak of cryptosporidiosis in British Columbia, oocysts were detected in 70% of the cattle faecal specimens collected in the watershed close to the reservoir intake (Ong et al., 1997). Humans can also be a significant source of Cryptosporidium in surface waters. A study in Australia showed that surface waters that allowed recreational activities had significantly more Cryptosporidium than those with no recreational activities (Loganthan et al., 2012).
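The geometric means quoted above are a common way to summarize skewed (oo)cyst concentration data. The following Python sketch shows how such a value is computed, as the exponential of the mean of the log-transformed concentrations. The function name and the sample values are hypothetical and are not taken from Ong et al. (1996); the sketch also assumes all values are strictly positive and does not address how non-detects were handled in that study.

```python
import math


def geometric_mean_concentration(values):
    """Return the geometric mean of strictly positive concentrations.

    Computed as exp(mean(ln(x))), which is equivalent to the nth root
    of the product of the n values.
    """
    if not values:
        raise ValueError("at least one concentration is required")
    if any(v <= 0 for v in values):
        # Assumption: zero or negative values (e.g., non-detects) must be
        # handled by the caller before calling this function.
        raise ValueError("all concentrations must be strictly positive")
    return math.exp(sum(math.log(v) for v in values) / len(values))


# Hypothetical weekly results in oocysts/100 L (illustrative only).
hypothetical_samples = [1.0, 10.0, 100.0]
print(geometric_mean_concentration(hypothetical_samples))
```

Because it works on a log scale, the geometric mean is less affected by an occasional very high count than the arithmetic mean, which is why it is often paired with the range, as in the study above.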

Waterfowl can also act as a source of Cryptosporidium. Graczyk et al. (1998) demonstrated that Cryptosporidium oocysts retain infectivity in mice following passage through ducks. However, histological examination of the avian respiratory and digestive systems at seven days post-inoculation revealed that the protozoa were unable to infect birds. In an earlier study (Graczyk et al., 1996), the authors found that faeces from migratory Canada geese collected from seven of nine sites on Chesapeake Bay contained Cryptosporidium oocysts. Oocysts from three of the sites were infectious to mice. Based on these and other studies (Graczyk et al., 2008; Quah et al., 2011), it appears that waterfowl carry infectious Cryptosporidium oocysts from their habitat to other locations, including drinking water supplies.

5.3 Waterborne illness

Giardia and Cryptosporidium are the most commonly reported intestinal protozoa in North America and the world (Farthing, 1989; Adam, 1991). Exposure to enteric protozoa through water can result in both an endemic rate of illness in the population and waterborne disease outbreaks. As noted in section 4.0, certain species and genotypes of Giardia and Cryptosporidium, respectively, are more commonly associated with human illness. Giardia lamblia assemblages A and B are responsible for all cases of human giardiasis. For cryptosporidiosis, Cryptosporidium parvum and C. hominis are the major species associated with illness, although C. hominis appears to be more prevalent in North and South America, Australia and Africa, whereas C. parvum is responsible for more infections in Europe (McLauchlin et al., 2000; Guyot et al., 2001; Lowery et al., 2001b; Yagita et al., 2001; Ryan et al., 2003; Learmonth et al., 2004).

5.3.1 Endemic illness

The estimated burden of endemic acute gastrointestinal illness (AGI) annually in Canada, from all sources (i.e., food, water, animals, person-to-person), is 20.5 million cases (0.63 cases/person-year; Thomas et al., 2013). Approximately 1.7% (334,966) of these cases, or 0.015 cases/person-year, are estimated to be associated with the consumption of tap water from municipal systems that serve >1000 people in Canada (Murphy et al., 2016a). Over 84% of the total Canadian population (approximately 29 million) rely on these systems: 73% of the population (approximately 25 million) are supplied by a surface water source, 1% (0.4 million) by a groundwater source under the direct influence of surface water (GUDI), and the remaining 10% (3.3 million) by a groundwater source (Statistics Canada, 2013a, 2013b). Murphy et al. (2016a) estimated that systems relying on surface water sources treated only with chlorine or chlorine dioxide, GUDI sources with no or minimal treatment, or groundwater sources with no treatment, accounted for the majority of the burden of AGI (0.047 cases/person-year). In contrast, an estimated 0.007 cases/person-year were associated with systems relying on lightly impacted source waters with multiple treatment barriers in place. The authors also estimated that over 35% of the 334,966 AGI cases were attributable to the distribution system.

In Canada, just over 3,800 confirmed cases of giardiasis were reported in 2012, which is a significant decline from the 9,543 cases that were reported in 1989. In fact, the total reported cases have decreased steadily since the early 1990s. The incidence rates have similarly declined over this period (from 34.98 to 11.12 cases per 100,000 persons; PHAC, 2015). On the other hand, since cryptosporidiosis became a reportable disease in 2000, the number of reported cases in Canada has been relatively constant, ranging from a low of 588 cases (in 2002) to a high of 875 cases (in 2007) (PHAC, 2015). The exception was 2001, when the number of cases reported was more than double (1,763 cases) due to a waterborne outbreak in North Battleford, Saskatchewan. For the Canadian population, this corresponds to an incidence rate between 1.82 and 2.69 cases per 100,000 persons per year, with the exception of 2001, when the incidence rate was 7.46 cases per 100,000 persons (PHAC, 2015). The reported incidence rates are considered to be only a fraction of the illnesses that are occurring in the population due to under-reporting and under-diagnosis, especially with respect to mild illnesses such as gastrointestinal upset. It is estimated that the number of illnesses acquired domestically from Giardia and Cryptosporidium is approximately 40 and 48 times greater, respectively, than the values reported nationally (Thomas et al., 2013). However, even though Giardia and Cryptosporidium are commonly reported intestinal protozoa, they still only accounted for approximately 16% and 4%, respectively, of all foodborne and waterborne related illnesses in 2013 (PHAC, 2015).
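As a rough illustration of what the under-reporting multipliers imply, the following Python sketch scales reported case counts by a multiplier and converts a case count to a rate per 100,000 persons. The function names are hypothetical. The multipliers (40 for Giardia, 48 for Cryptosporidium) are those attributed above to Thomas et al. (2013); because they were derived for domestically acquired illness, applying them to a single year's national count, as done here, gives only an indicative order of magnitude. The population used in the last line is a made-up round number, not a Canadian figure.

```python
def estimated_total_cases(reported_cases, multiplier):
    """Scale a reported case count by an under-reporting multiplier."""
    return reported_cases * multiplier


def incidence_per_100k(cases, population):
    """Convert a case count to a rate per 100,000 persons."""
    return cases / population * 100_000


# Reported counts quoted in the text ("just over 3,800" is rounded down here).
giardiasis_2012 = estimated_total_cases(3_800, 40)
crypto_low = estimated_total_cases(588, 48)    # 2002, lowest reported year
crypto_high = estimated_total_cases(875, 48)   # 2007, highest reported year

print(giardiasis_2012, crypto_low, crypto_high)

# Hypothetical example: 25 cases in a population of 1,000,000.
print(incidence_per_100k(25, 1_000_000))
```

Under these assumptions, the roughly 3,800 reported giardiasis cases would correspond to on the order of 150,000 illnesses, and the 588 to 875 reported cryptosporidiosis cases to roughly 28,000 to 42,000 illnesses per year.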

Similar to illness rates in Canada, giardiasis rates in the United States have been declining, and in 2012, the average rate of giardiasis was 5.8 cases per 100,000 people (Painter et al., 2015a). Although the rate of giardiasis reported in the United States is lower than in Canada, this difference can at least partially be explained by differences in disease surveillance. In the United States, giardiasis is not a reportable illness in all states, whereas it is a nationally notifiable disease in Canada. The incidence rate of cryptosporidiosis in the United States in 2012–2013 was 2.6 to 3 cases per 100,000 people (from all sources, including drinking water) (Painter et al., 2015b). This is similar to the incidence rate in Canada.

5.3.2 Outbreaks

Giardia and Cryptosporidium are common causes of waterborne infectious disease outbreaks in Canada and elsewhere (Fayer, 2004; Hrudey and Hrudey, 2004; Joachim, 2004; Smith et al., 2006). Between 1974 and 2001, Giardia and Cryptosporidium were the most and the third most commonly reported causative agents, respectively, associated with infectious disease outbreaks related to drinking water in Canada (Schuster et al., 2005). Giardia was linked to 51 of the 138 outbreaks for which causative agents were identified and Cryptosporidium was linked to 12 of the 138 outbreaks. The majority of Giardia (38/51; 75%) and Cryptosporidium (11/12; 92%) outbreaks were associated with public drinking water systems; a selection of these outbreaks can be found in Appendix B. From 2002 to 2016, only one outbreak of giardiasis has been reported in Canada with an association to a drinking water source (PHAC, 2009; Efstratiou et al., 2017; Moreira and Bondelind, 2017). No outbreaks of cryptosporidiosis related to drinking water have been reported in the same time period.

In the United States, drinking water related outbreaks have been reported for both Giardia and Cryptosporidium (Craun, 1979; Lin, 1985; Moore et al., 1993; Jakubowski, 1994; CDC, 2004; U.S. EPA, 2006a; Craun et al., 2010). Giardia was the most frequently identified etiological agent associated with waterborne outbreaks in the United States between 1971 and 2006, accounting for 16% of outbreaks (126/780); Cryptosporidium accounted for 2% (15/780). These outbreaks were associated with 28,127 cases of giardiasis and 421,301 cases of cryptosporidiosis (Craun et al., 2010). Most of the cryptosporidiosis cases (403,000) were associated with the Milwaukee outbreak in 1993 (U.S. EPA, 2006a).
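The proportions quoted above can be reproduced directly from the counts given in the text. The short Python sketch below does so, and also shows how heavily the cryptosporidiosis case total depends on the single Milwaukee outbreak. The helper name is hypothetical; all counts are those quoted above from Craun et al. (2010) and U.S. EPA (2006a).

```python
def share_percent(part, total):
    """Return part as a percentage of total."""
    return 100.0 * part / total


TOTAL_OUTBREAKS = 780  # U.S. waterborne outbreaks, 1971-2006, as quoted above

giardia_share = share_percent(126, TOTAL_OUTBREAKS)
crypto_share = share_percent(15, TOTAL_OUTBREAKS)

# Share of all reported cryptosporidiosis cases linked to Milwaukee (1993).
milwaukee_share = share_percent(403_000, 421_301)

print(f"Giardia: {giardia_share:.1f}% of outbreaks")
print(f"Cryptosporidium: {crypto_share:.1f}% of outbreaks")
print(f"Milwaukee: {milwaukee_share:.1f}% of cryptosporidiosis cases")
```

The contrast is the point of the calculation: Cryptosporidium was identified in far fewer outbreaks than Giardia, yet it accounts for many more cases, almost entirely because of one very large event.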

In a worldwide review of waterborne protozoan outbreaks, G. lamblia and Cryptosporidium accounted for 40.6% and 50.6%, respectively, of the 325 outbreaks reported between 1954 and 2003 from all water sources, including recreational water (Karanis et al., 2007). The largest reported Giardia drinking water related outbreak occurred in 2004, in Norway, with an estimated 2500 cases (Robertson et al., 2006; Baldursson and Karanis, 2011). Updates to this review were published in 2011 and 2017, capturing 199 protozoan outbreaks between 2004 and 2010 (Baldursson and Karanis, 2011) and a further 381 protozoan outbreaks between 2011 and 2016 (Efstratiou et al., 2017). Giardia still accounted for 35.2% and 37%, and Cryptosporidium still accounted for 60.3% and 63%, respectively, of the outbreaks from all water sources, including recreational water.

Several authors have investigated whether there are commonalities in the causes of the drinking water outbreaks related to enteric protozoa. For the outbreaks identified in Canada, contamination of source waters from human sewage and inadequate treatment (e.g., poor or no filtration, relying solely on chlorination) appear to have been major contributing factors (Schuster et al., 2005). An analysis by Risebro et al. (2007) of outbreaks occurring in the European Union (1990–2005) found that most outbreaks have more than one contributing factor. Similar to the findings of Schuster et al. (2005), contamination of the source waters with sewage or livestock faecal waste (usually following rainfall events) and treatment failures (related to problems with filtration) frequently occurred in enteric protozoa outbreaks. Risebro et al. (2007) also noted that long-term treatment deficiencies, stemming from a poor understanding of, or lack of action upon, previous test results, led to drinking water outbreaks. Although less common, distribution system issues were also reported to have been responsible for outbreaks, mainly related to cross-connection control problems (Risebro et al., 2007; Moreira and Bondelind, 2017).

A recent review, focusing on outbreaks occurring between 2000 and 2014 in North America and Europe, reported very similar problems still occurring (Moreira and Bondelind, 2017). The outbreaks caused by enteric protozoa were the result of source water contamination by sewage and animal faeces, usually following heavy rains, and by ineffective treatment barriers for the source water quality. Some of the source waters were described as untreated groundwater supplies; however, it is unknown if some would be classified as GUDI. Wallender et al. (2014) reported that of 248 outbreaks in the United States between 1971 and 2008 involving untreated groundwater, 14 (5.6%) were due to G. intestinalis, two (0.8%) were due to C. parvum and G. intestinalis, and five (2.0%) were due to multiple etiologies, some of which involved G. intestinalis. The same study also noted that 70% of these 248 outbreaks were related to semi-public and private drinking water supplies using untreated well water. Further information on outbreaks of enteric protozoa from both Canada and worldwide can be found in Appendix B.

5.4 Impact of environmental conditions

The concentrations of Giardia and Cryptosporidium in a watershed are influenced by numerous environmental conditions and processes, many of which are not well characterized and vary between watersheds. However, there are some consistent findings that seem to be applicable to a variety of source waters. Lal et al. (2013) provide a good review of global environmental changes (including land-use patterns, climate, and social and demographic determinants) that may impact giardiasis and cryptosporidiosis transmission.