Evaluation 101 Backgrounder

On this page

- Definition of evaluation in the Government of Canada

- Key evaluation requirements, as specified by the Policy on Results

- Overview of evaluation approaches

- Overview of key supporting evaluation products

- Overview of the evaluation process

- Annex A: Comparison of evaluation requirements stemming from the Policy on Evaluation (rescinded) and the Policy on Results

- Annex B: Additional evaluation types

- Annex C: Evaluation types and where they apply in an intervention’s life-cycle

- Annex D: Example of a generic logic model

- Annex E: Summary of an evaluation unit’s roles and responsibilities

The purpose of this note is to provide individuals at all levels and positions who are new to evaluation with a high-level overview of the key policy requirements, approaches, tools, methods and activities in Government of Canada evaluation.

Note: This document will be regularly updated as evaluation approaches, tools, methods and activities evolve.

Definition of evaluation in the Government of Canada

Evaluation is the systematic and neutral collection and analysis of evidence to judge merit, worth or value. Evaluations:

- inform decision making, improvements, innovation and accountability;

- focus on programs, policies and priorities, but, depending on user needs, can also examine other units, themes and issues, including alternatives to existing interventions;

- examine questions related to relevance, effectiveness and efficiency; and

- employ social science research methods.

Key evaluation requirements, as specified by the Policy on Results

The Policy on Results sets out the requirements for evaluation in the Government of Canada. Key highlights include:

- All organizational spending should be evaluated periodically; however, departments have the flexibility to plan their evaluations based on need, risk and priority.

- Programs of Grants and Contributions (Gs&Cs) with five-year average actual expenditures of $5 million or more per year must be evaluated at least once every five years (as per Section 42.1 of the Financial Administration Act [FAA]).

- Programs of Gs&Cs that fall below this threshold are exempted from the requirement, but should be considered for ‘periodic’ evaluation based on need, risk and priority.

- Evaluation planning should consider relevance, effectiveness and efficiency as primary evaluation issues, where relevant to the goals of the evaluation.

- Evaluations of programs of Gs&Cs at or above the $5 million expenditure threshold must always include an assessment of these issues.

- Large departments and agencies must consult with central agencies in the development of their annual Departmental Evaluation Plan (DEP).

- Departments must produce evaluation summaries in addition to full evaluation reports. Both summaries and full reports must also be posted on their departmental website within 120 days following approval by their Deputy Head.

For a comparison of how the evaluation requirements under the Policy on Results have changed from those that existed under the previous Policy on Evaluation (2009), see Annex A.
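The Gs&Cs threshold described above comes down to simple arithmetic. The following is a minimal sketch, assuming expenditures are supplied as five yearly actual amounts in dollars; the function name and the sample figures are hypothetical and are not drawn from the Policy on Results.

```python
from statistics import mean

# Threshold stated in the text above: $5 million or more per year, on average.
GSC_THRESHOLD = 5_000_000


def requires_five_year_evaluation(actual_expenditures):
    """Return True when the five-year average of actual expenditures meets the threshold.

    Assumption: the caller passes exactly five yearly actual amounts, in dollars.
    """
    if len(actual_expenditures) != 5:
        raise ValueError("expected exactly five years of actual expenditures")
    return mean(actual_expenditures) >= GSC_THRESHOLD


# Hypothetical program whose spending dips below $5M in two years
# but averages $5.1M over five years.
program_a = [4_200_000, 4_800_000, 5_500_000, 6_000_000, 5_000_000]

# Hypothetical program averaging $4.3M over five years.
program_b = [3_000_000, 4_000_000, 4_500_000, 4_900_000, 5_100_000]

print(requires_five_year_evaluation(program_a))
print(requires_five_year_evaluation(program_b))
```

In this sketch, a program below the threshold is simply not flagged; under the policy it would still be considered for periodic evaluation based on need, risk and priority.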

Overview of evaluation approaches

There are many types of evaluation. Two of the most commonly employed in the Government of Canada are:

- Delivery evaluation (also known as formative evaluation): focuses on implementation, output production and efficiency.

- Outcome evaluation (also known as summative evaluation): focuses on outcome achievement, the attribution and contribution of an intervention’s activities to its stated outcomes, and identifying alternative delivery methods and improvements.

An evaluation’s approach can be based on a single evaluation type or a combination of types (e.g., an evaluation could cover both delivery and outcome). For examples of additional evaluation types, see Annex B.

Evaluations can happen at any stage of an intervention’s life-cycle. Stages include:

- Ex-ante: evaluations that occur before an intervention is implemented. These typically focus on supporting design by testing an intervention’s assumptions and theory, including the feasibility (in terms of relevance and performance) of multiple scenarios.

- Mid-term: evaluations that occur during the implementation of an intervention. These focus on providing feedback to support early course correction.

- Ex-post: evaluations that occur after an intervention has been in operation for some time or at its conclusion. These typically focus on assessing performance, and identifying lessons learned and improvements.

For an illustration of evaluation types and where they apply in an intervention’s life-cycle, see Annex C.

Overview of key supporting evaluation products

An Evaluation Framework, developed during the planning phase of an evaluation, lays out the evaluation plan:

- It describes the initiative to be evaluated, identifies particular issues of interest or focus, and delineates the overall evaluation approach, including the questions to be answered, data to be analyzed, and methods and methodologies to be employed to produce the findings, conclusions and recommendations.

- It is recommended that evaluation frameworks be considered in the development of a Performance Information Profile’s (PIP) ‘Summary of Evaluation Needs’ and, once developed, be appended to the PIP.

There are two key components in an evaluation framework:

- A Logic Model and accompanying Theory of Change. These are tools that explain the mechanisms through which an intervention is expected to achieve its intended outcomes.

- A logic model is a graphical representation of an initiative’s inputs, activities, outputs, and outcomes, as well as the logical links between these different elements.

- The theory of change outlines the theory that supports the logic model. It illustrates the links between the different elements in the logic model by describing their assumptions, internal and external risks, and key mechanisms, articulating how each element will lead to the achievement of the desired outcomes of the initiative.

- An Evaluation Matrix. This is a table that summarizes the details of the evaluation approach used to assess an intervention. A matrix includes, among other things, the questions to be addressed by the evaluation, outputs and/or outcomes linked to each question, indicators linked to each output and/or outcome, the data collection method linked to each indicator, an identification of how data will be used and analyzed, and any additional information useful to the evaluation (e.g., data validity, limitations).

For an illustration of a logic model, see Annex D.
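The evaluation matrix described above can be pictured as rows in a table, each linking a question to an outcome, an indicator, a data collection method and an analysis approach. The following is a minimal sketch of that structure; the class name, field names and sample rows are hypothetical and describe an imaginary training program, not a prescribed template.

```python
from dataclasses import dataclass


@dataclass
class MatrixRow:
    """One row of an evaluation matrix (field names are illustrative)."""
    question: str                 # evaluation question to be addressed
    outcome: str                  # output and/or outcome linked to the question
    indicator: str                # indicator linked to that output/outcome
    data_collection_method: str   # method linked to the indicator
    analysis: str                 # how the data will be used and analyzed
    notes: str = ""               # e.g., data validity or limitations


# Hypothetical rows for an imaginary training program.
matrix = [
    MatrixRow(
        question="To what extent were immediate outcomes achieved?",
        outcome="Participants gain job-search skills",
        indicator="Share of participants reporting improved skills",
        data_collection_method="Participant survey",
        analysis="Descriptive statistics",
        notes="Self-reported data",
    ),
    MatrixRow(
        question="To what extent were immediate outcomes achieved?",
        outcome="Participants gain job-search skills",
        indicator="Number of participants completing training",
        data_collection_method="Administrative data review",
        analysis="Trend analysis",
    ),
    MatrixRow(
        question="Is the program delivered efficiently?",
        outcome="Training sessions delivered",
        indicator="Cost per session",
        data_collection_method="Financial data review",
        analysis="Cost comparison across years",
    ),
]


def methods_by_question(rows):
    """Group data collection methods under each evaluation question."""
    grouped = {}
    for row in rows:
        grouped.setdefault(row.question, []).append(row.data_collection_method)
    return grouped


for question, methods in methods_by_question(matrix).items():
    print(question, "->", ", ".join(methods))
```

Grouping by question in this way makes it easy to see whether each question draws on more than one line of evidence.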

Overview of the evaluation process

A typical evaluation process runs as follows:

- Planning Phase: This phase involves developing and/or finalizing the evaluation framework. It generally includes engaging those involved in the program (e.g., program representatives, responsible senior management) and, in some cases, the development of an advisory or steering committee chaired by the head of evaluation or a delegate.

- Conducting Phase: This phase involves data collection and analysis. It is often concluded by a presentation of preliminary evaluation findings to the program being evaluated.

- Reporting Phase: This phase involves the drafting of the evaluation report and evaluation summary, and includes the drafting of the evaluation recommendations by the evaluators as well as the drafting of the Management Response and Action Plan (MRAP) by the responsible program official or relevant manager(s). Evaluation reports and summaries are reviewed by the Performance Measurement and Evaluation Committee (PMEC), and, as applicable, are recommended for approval by the PMEC to the Deputy Head. Following approval, the evaluation report and summary are submitted to TBS.

- Dissemination Phase: This phase involves the posting of the evaluation report and evaluation summary online within 120 days of Deputy Head approval.

A summary of an evaluation unit’s roles and responsibilities, in addition to those related to the typical evaluation process, can be found in Annex E.
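The 120-day posting window in the Dissemination Phase can be sketched as a date calculation. The following is a minimal illustration, assuming the window is counted in calendar days from the Deputy Head approval date and that posting on day 120 still counts as on time; the function names and the sample dates are hypothetical.

```python
from datetime import date, timedelta

# Posting window stated in the text above.
POSTING_WINDOW_DAYS = 120


def posting_deadline(approval_date):
    """Return the last day for posting, assuming calendar days from approval."""
    return approval_date + timedelta(days=POSTING_WINDOW_DAYS)


def is_posted_on_time(approval_date, posting_date):
    """Return True when the posting date falls on or before the deadline."""
    return posting_date <= posting_deadline(approval_date)


# Hypothetical approval date.
approval = date(2024, 1, 15)

print(posting_deadline(approval))                      # 2024-05-14
print(is_posted_on_time(approval, date(2024, 4, 30)))  # True
print(is_posted_on_time(approval, date(2024, 6, 1)))   # False
```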

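Annex A: Comparison of evaluation requirements stemming from the Policy on Evaluation (rescinded) and the Policy on Results

| Area | Policy on Evaluation (2009) | Policy on Results (2016) |
|---|---|---|
| Coverage | | |
| Scope | | |
| Type | | Flexibility in scope creates space for the use of an expanded range of evaluation types: |
| Methods | | |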

Annex B: Additional evaluation types

- Economic evaluation: assesses the economic impacts of an initiative and/or issues such as cost-effectiveness, cost-benefit or cost-utility to support resource allocation decisions.

- Innovation evaluation: assesses pilot projects/initiatives to determine their efficacy and feasibility (i.e., for full-out implementation and/or replication).

- Theory-of-change evaluation: tests the logic of the results chain and associated mechanisms (including assumptions, internal and external risks, and other factors) that support the ongoing relevance of an intervention.

- Prevaluation: supports the planning and development of an intervention.

- Developmental evaluation: involves evaluation being embedded in, or working with, an intervention. Evaluators are involved throughout the intervention’s life-cycle, acting as advisors to provide near-real-time and/or ongoing feedback. This approach to evaluation may encompass any combination of the aforementioned evaluation types.

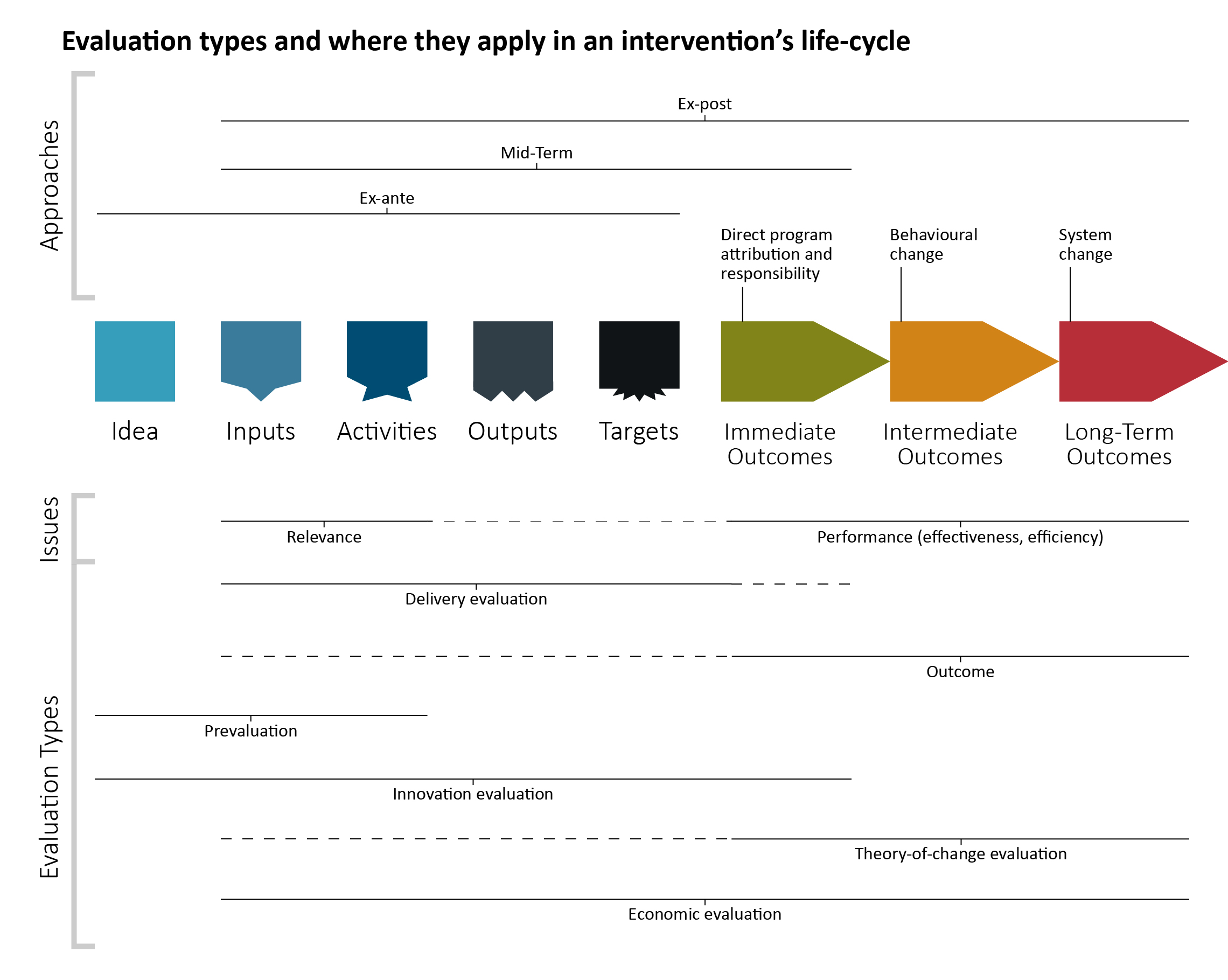

The figure above shows the evaluation approaches, the issues (relevance, effectiveness and efficiency) and the evaluation types to which those issues pertain. The ex-ante, mid-term and ex-post approaches are selected based on the intent of the evaluation. An ex-ante evaluation covers the inputs, activities and targets of the intervention. A mid-term evaluation adds the immediate outcomes, i.e., the initial observable outcomes. Lastly, an ex-post evaluation also looks at behavioural changes across the intermediate and long-term outcomes.

Ex-ante evaluations analyze the relevance of interventions, whereas ex-post evaluations, and to some extent mid-term evaluations, analyze the effectiveness and efficiency of interventions.

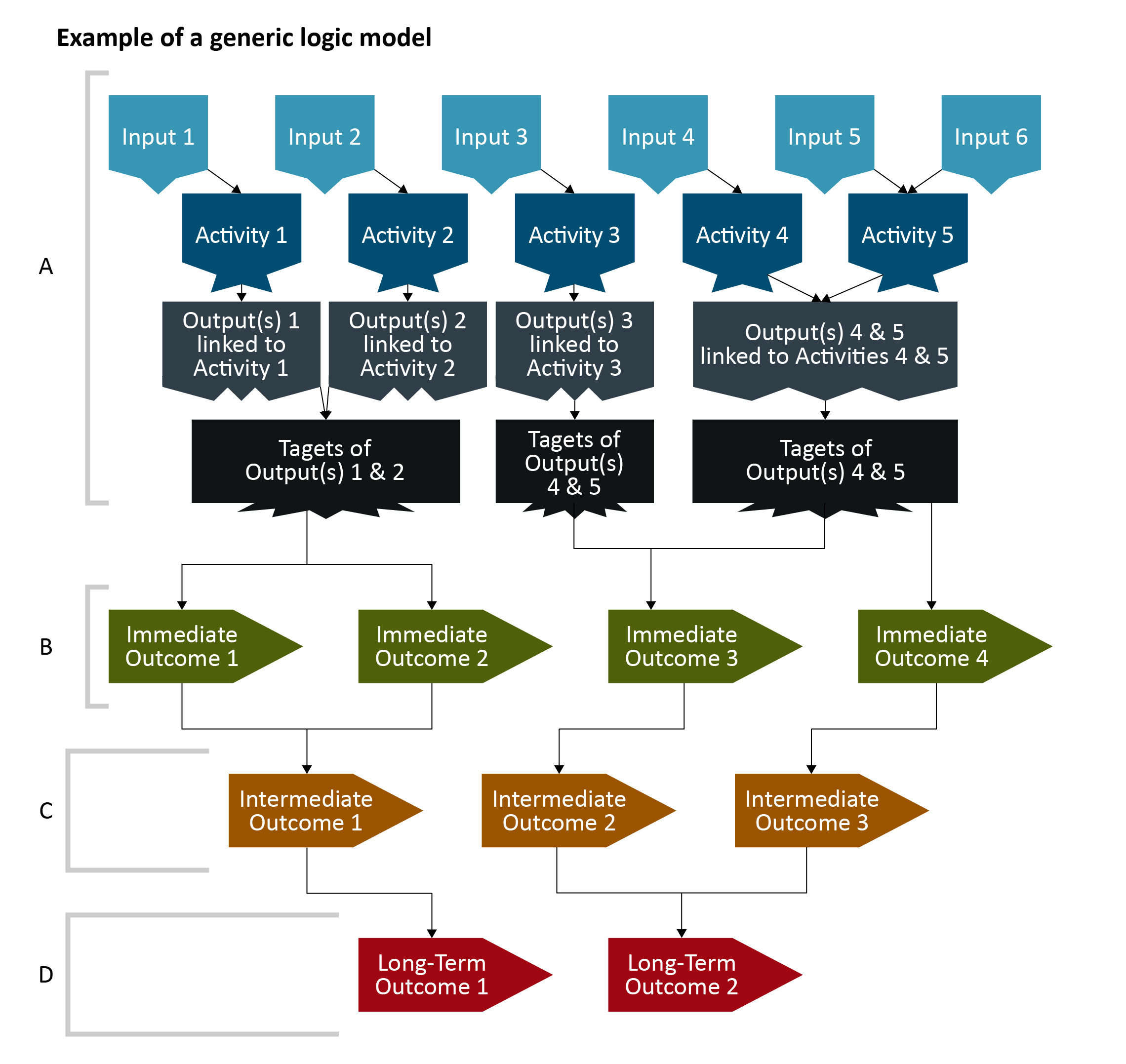

A logic model, like the one shown, illustrates the logic of an intervention: it describes the relationship between the resources or inputs (1, 2, 3, etc.), the activities (1, 2, 3, etc.), the outputs (1, 2, 3, etc.) and the beneficiaries (i, ii, iii, etc.). Immediate outcomes are the initial outcomes directly attributable to the intervention itself. The intermediate and long-term outcomes, which refer to behavioural and systemic changes respectively, are instead only partly attributable to the intervention. The downward arrow at the right of the image shows that the intervention has a declining influence at each subsequent stage of the logic model.

Annex E: Summary of an evaluation unit’s roles and responsibilities

- Developing a five-year, rolling Departmental Evaluation Plan (DEP) and updating it annually through:

- An annual exercise that assesses evaluation needs in consultation with departments (initiative leads and Assistant Deputy Ministers).

- Within the parameters established by the Policy on Results.

- Evaluations first scheduled to meet legislated and policy requirements. Additional evaluations scheduled based on a risk assessment of needs and context (e.g., audit schedule, needs due to program changes, etc.).

- For each evaluation, the approach and level of effort are based on a calibration exercise conducted by the evaluation function.

- Consulting with the Treasury Board Secretariat (TBS).

- Approval by the Performance Measurement and Evaluation Committee (PMEC) of the annual DEP update.

- Approval by Deputy Head of the annual DEP update.

- Delivery to TBS of the annual DEP update.

- Release of the annually updated, five-year evaluation coverage tables.

- Conducting evaluations:

- Developing evaluation reports, one-page summaries and infographics.

- Reviewing and supporting program officials in Management Response and Action Plan (MRAP) development.

- Delivering evaluation reports and one-page summaries to TBS.

- Publishing evaluation reports (including MRAPs), one-page summaries and infographics on the web within 120 days of approval by the Deputy Head.

- Monitoring and follow-up on MRAP implementation.

- Providing evaluation and performance measurement advice to program officials (e.g., on types of evaluation, methods, etc.).

- Reviewing and supporting program officials in Memorandum to Cabinet and Treasury Board Submission development with respect to plans for performance measurement and evaluations, as well as the accuracy and balance of information on past evaluations.

- Reviewing and supporting program officials in Departmental Results Framework (DRF) development.

- Reviewing and advising program officials on the availability, quality, validity and reliability of Performance Information Profile (PIP) indicators, including their utility for evaluation.

- Working with program officials to develop the PIP ‘Summary of Evaluation Needs’ section.

- Advising PMEC on the validity and reliability of DRF indicators, including their usefulness for supporting evaluations.

- Reporting to PMEC, at least once annually, on the implementation of MRAPs, the impacts of evaluations (lessons learned, corrective actions taken, influence on resource allocation decisions, etc.), DEP progress (evaluation delivery according to plan, timeliness for transmission to TBS, timeliness of public release), and availability, quality, utility and use of performance measurement to support evaluations.

- Conducting a neutral assessment of the evaluation function, at least once every five years.