Supporting Effective Evaluations: A Guide to Developing Performance Measurement Strategies

Notice to the reader

The Policy on Results came into effect on July 1, 2016, and it replaced the Policy on Evaluation and its instruments.

Since 2016, the Centre of Excellence for Evaluation has been replaced by the Results Division.

For more information on evaluations and related topics, please visit the Evaluation section of the Treasury Board of Canada Secretariat website.

This guide has been designed to support departments, and in particular program managers and heads of evaluation, in developing performance measurement strategies that effectively support evaluation, as required by the Policy on Evaluation and the related Directive on the Evaluation Function. The guide outlines the key content of performance measurement strategies, provides a recommended process for developing clear, concise strategies, and presents examples of tools and frameworks for that purpose. It also provides an overview of the roles of program managers and heads of evaluation in developing performance measurement strategies, and outlines key points to consider when developing them, including linkages to the Policy on Management, Resources and Results Structures and the Policy on Transfer Payments.

Table of Contents

- Introduction

- Overview of Performance Measurement Strategy

- Components of the Performance Measurement Strategy

- Program Profile

- Logic Model

- Performance Measurement Strategy Framework

- 6.1 Overview of the Performance Measurement Strategy Framework

- 6.2 Content of the Program Performance Measurement Strategy Framework

- 6.3 Performance Measurement Frameworks and the Performance Measurement Strategy Framework

- 6.4 Accountabilities and Reporting

- 6.5 Considerations when Developing the Performance Measurement Strategy Framework

- Evaluation Strategy

- Conclusion

- Appendix 1: Sample Logic Model

- Appendix 2: Review Template and Self-Assessment Tool

- Appendix 3: Glossary

- Appendix 4: References

1.0 Introduction

The purpose of this guide is to support program managers and heads of evaluation in meeting the requirements related to Performance Measurement (PM) Strategies as outlined in the Policy on Evaluation (2009), the Directive on the Evaluation Function (2009) and the Standard on Evaluation for the Government of Canada (2009). This guide also aims to support results-based management (RBM) practices across departments and agencies by promoting a streamlined and cohesive approach to performance monitoring and evaluation.

This guide is aligned with and complements the Policy on Transfer Payments (2008), the Directive on Transfer Payments (2008) and the Office of the Comptroller General's (OCG) Guidance on Performance Measurement Strategies under the Policy on Transfer Payments. While these policy instruments and OCG guidance establish the general requirements for a PM Strategy, this guide provides a more detailed step-by-step process for its development. The guide will also help ensure that the PM Strategies developed are aligned with the Policy on Management, Resources and Results Structures (2008).

In developing this guide, the Centre of Excellence for Evaluation (CEE) considered the lessons learned from nearly a decade of working with departments on the development, review and implementation of the Results-Based Management Accountability Frameworks that were required under the former Transfer Payment Policy. This guide was also informed by the Independent Blue Ribbon Panel on Grant and Contribution Programs' report From Red Tape to Clear Results (2006).

2.0 Overview of Performance Measurement Strategy

2.1 The Purpose of the Performance Measurement Strategy

The PM Strategy is a results-based management tool that is used to guide the selection, development and ongoing use of performance measures. Its purpose is to assist program managers and deputy heads to:

- continuously monitor and assess the results of programs as well as the economy and efficiency of their management;

- make informed decisions and take appropriate, timely action with respect to programs;

- provide effective and relevant departmental reporting on programs; and

- ensure that credible and reliable performance data are being collected to effectively support evaluation.

It is important to remember that performance monitoring and evaluation play complementary and mutually reinforcing roles. In the Government of Canada, evaluation is defined as "the systematic collection and analysis of evidence on the outcomes of programs to make judgments about their relevance, performance and alternative ways to deliver them or to achieve the same results" (Policy on Evaluation, Section 3.1). Implementing effective performance measurement, in addition to supporting ongoing program monitoring, can also support and facilitate effective evaluation. Evaluation can help establish whether observed results are attributable (in whole or in part) to the program intervention and provide an in-depth understanding of why program outcomes were (or were not) achieved.

2.2 Programs Requiring a Performance Measurement Strategy

2.3 Roles and Responsibilities

Program managers are responsible for developing, implementing and updating PM Strategies.

Heads of evaluation are responsible for reviewing and providing advice on all PM Strategies.

Heads of evaluation are to report annually to the Departmental Evaluation Committee on the status of performance measurement in support of evaluation.

Under the Directive on the Evaluation Function, program managers are responsible for developing and implementing PM Strategies for their programs (Section 6.2.1) and for consulting with the head of evaluation on these strategies (Section 6.2.3). Program managers should also update their PM Strategies to ensure they remain relevant and that credible and reliable performance data are being collected to effectively support evaluation (Directive on the Evaluation Function, Section 6.2.1).

Heads of evaluation are responsible for "reviewing and providing advice on the performance measurement strategies for all new and ongoing direct program spending, including all ongoing programs of grants and contributions, to ensure that they effectively support an evaluation of relevance and performance" and for "submitting to the Departmental Evaluation Committee an annual report on the state of performance measurement of programs in support of evaluation" (Directive on the Evaluation Function, Section 6.1.4).

2.4 When to Develop the Performance Measurement Strategy

For new programs, the PM Strategy should be developed at the program design stage when key decisions are being made about the programming model, delivery approaches, reporting requirements, including those of third parties, and evaluations. For ongoing programs for which no PM Strategy exists, one should be developed in a timely manner to ensure the availability of performance data for program monitoring and evaluation activities.

Developing the PM Strategy is only the first step in the performance measurement process. During its implementation, the PM Strategy should be reviewed periodically and revised (if required) to maintain its relevance in supporting effective program monitoring and evaluation activities.

2.5 Defining a Program within the Context of the Performance Measurement Strategy

In both the Policy on Evaluation and the Policy on Transfer Payments, a program is defined as "a group of related activities that are designed and managed to meet a specific public need and are often treated as a budgetary unit" (also in keeping with the definition provided in the Policy on Management, Resources and Results Structures). This may include, for example, a program established and funded through approval of a Treasury Board submission, a transfer payment program or other groupings of programmatic activities.

At the same time, it is also important to remember that, for evaluation purposes, the "units" that will be evaluated may not always correspond to a single program represented in the departmental Program Activity Architecture (PAA). Units of evaluation could be:

- a single program

- a grouping of multiple programs

- a sub-element of a single program

- a grouping of sub-elements of multiple programs

- a cross-cutting theme, function or policy embedded in multiple programs

- other configurations

Depending on the unit of evaluation, there may be efficiencies to be gained in developing common performance measures and systems across programming units. Therefore, it is important to consult the head of evaluation and the Departmental Evaluation Plan when developing a PM Strategy to support evaluation.

3.0 Components of the Performance Measurement Strategy

3.1 Key Components of a Performance Measurement Strategy

In Annex A of the Policy on Evaluation, a PM Strategy is defined as "the selection, development and ongoing use of performance measures for program management and decision making." To support the PM Strategy process and align it with future evaluations, the document outlining the PM Strategy should include the following components:

- a program profile

- a logic model

- a Performance Measurement Strategy Framework

- an Evaluation Strategy

These components represent the foundation of the PM Strategy and ensure that the performance measures selected and developed produce useful and comprehensive information for program monitoring and evaluation.

3.2 Determining the Scope and Complexity

The program profile, logic model, PM Strategy Framework and Evaluation Strategy should be succinct and focused. Their length and level of detail should reflect the program's scope and complexity, the challenges associated with producing quality data for monitoring and evaluation, and the risks associated with the program. Risks and issues to consider when establishing the scope and complexity of the PM Strategy include:

- risks to the health and safety of the public or the environment (including both the degree and magnitude of the consequences that could result from the policy, program or initiative's failure as well as the probability of risk materializing);

- risks associated with public confidence or political sensitivity (both current and potential), including media, parliamentary or ministerial interests;

- risks related to size of the population affected or targeted by the program;

- known problems, challenges or weaknesses in the program (ideally based on previous evaluative assessment);

- materiality of the program;

- complexity of the program (e.g. in terms of its components and delivery mechanisms);

- time available to prevent or mitigate risks; and

- other factors that are of significance to a particular program.

In the introductory remarks of the PM Strategy, explain how the program's scope and complexity, the challenges associated with producing quality data for monitoring and evaluation, and the risks associated with the program were considered when developing the PM Strategy.

4.0 Program Profile

4.1 Overview of the Program Profile

The Program Profile section of the PM Strategy document should be concise and focused and provide readers (e.g. heads of evaluation, program stakeholders) with key information required to understand the program. Its purpose is to:

- support the development of the logic model, the PM Strategy Framework and the Evaluation Strategy;

- serve as a reference for evaluators in upcoming evaluations; and

- facilitate communication about the program to program staff and other program stakeholders.

4.2 Program Profile Content

The program profile should include the elements shown in Table 1.

| Element | Requirements |
|---|---|
| 1.1 Need for the program | |
| 1.2 Alignment with government priorities | |
| 1.3 Target population(s) | |
| 1.4 Stakeholders | |
| 1.5 Governance | Note: More specific responsibilities associated with performance measurement and evaluation should be included in later sections of the PM Strategy. |
| 1.6 Resources | |

4.3 Considerations when Developing the Program Profile

Developing the program profile should not be an onerous process. Typically, most of the information required for the program profile should already have been compiled when the program's initial planning documents were developed. Sources of information for the program profile generally include the program's Treasury Board submission, terms and conditions, business plans and Memorandum of Understanding.

5.0 Logic Model

5.1 Overview of the Logic Model

The logic model serves as the program's road map. It outlines the intended results (i.e. outcomes) of the program, the activities the program will undertake and the outputs it intends to produce in achieving the expected outcomes. The purpose of the logic model is to:

- help program managers verify that the program theory is sound and that outcomes are realistic and reasonable;

- ensure that the PM Strategy Framework and the Evaluation Strategy are clearly linked to the logic of the program and will serve to produce information that is meaningful for program monitoring, evaluation and, ultimately, decision making;

- help program managers interpret the monitoring data collected on the program and identify implications for program design and/or operations on an ongoing basis;

- serve as a key reference point for evaluators in upcoming evaluations; and

- facilitate communication about the program to program staff and other program stakeholders.

5.2 Logic Model Content

There are many ways to present logic models (see Appendix 1 for a sample logic model). While each organization can use the format that best suits its audience, a standard series of components (sometimes referred to as the "results-chain") should be included in order for the logic model to effectively support an evaluation. These components, which are logically linked, are the program inputs, activities, outputs and outcomes. As shown in Figure 1, there are three types of outcomes: immediate, intermediate and ultimate. The key components of the logic model are defined in Table 2.

Figure 1 - Text version

Main components of a Logic Model:

- Inputs

- Activities

- Outputs

- Immediate Outcomes

- Intermediate Outcomes

- Ultimate Outcomes

An important design feature of logic models is that they are, ideally, contained on a single page. As the logic model is intended to be a visual depiction of the program, its level of detail should be comprehensive enough to adequately describe the program but concise enough to capture the key details on a single page.
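The results chain described above can also be kept in a simple structured form so that gaps are easy to spot before the one-page diagram is drawn. The following is a minimal sketch only: the `LogicModel` class, its `missing_components` method and the sample entries are hypothetical illustrations, not part of any prescribed template.

```python
from dataclasses import dataclass, field
from typing import List

# Results-chain components, in the order listed in Figure 1.
RESULTS_CHAIN = (
    "inputs",
    "activities",
    "outputs",
    "immediate_outcomes",
    "intermediate_outcomes",
    "ultimate_outcomes",
)


@dataclass
class LogicModel:
    """Hypothetical container for the elements of a program logic model."""
    inputs: List[str] = field(default_factory=list)
    activities: List[str] = field(default_factory=list)
    outputs: List[str] = field(default_factory=list)
    immediate_outcomes: List[str] = field(default_factory=list)
    intermediate_outcomes: List[str] = field(default_factory=list)
    ultimate_outcomes: List[str] = field(default_factory=list)

    def missing_components(self) -> List[str]:
        """Return the results-chain components that have no entries yet."""
        return [name for name in RESULTS_CHAIN if not getattr(self, name)]


# Illustrative entries only; a real logic model would use the program's own wording.
model = LogicModel(
    inputs=["Program funding"],
    activities=["Deliver training sessions"],
    outputs=["Training sessions delivered"],
    immediate_outcomes=["Participants gain new skills"],
)
print(model.missing_components())
```

In this sketch, the intermediate and ultimate outcomes have not yet been entered, so they are the two components reported as missing.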

5.3 Logic Model Narrative (Including Theories of Change)

Every program is based on a "theory of change" - a set of assumptions, risks and external factors that describes how and why the program is intended to work. This theory connects the program's activities with its goals. It is inherent in the program design and is often based on knowledge and experience of the program, research, evaluations, best practices and lessons learned.

A logic model is a visual expression of the rationale behind a program. However, on its own, the logic model does not provide enough detail on how the program activities will contribute to its intended outcomes and how lower-level outcomes will lead to higher-level outcomes. As such, the logic model should be accompanied by a narrative that outlines how certain activities or actions are intended to produce results. This is sometimes referred to as the "theory of change" or "program theory." A good narrative explains the linkages between activities, outputs and outcomes by describing the underlying assumptions of the program, risks and external factors that influence whether or not the outcomes will be achieved.

5.4 Considerations when Developing the Logic Model

There are many different approaches for developing the logic model. A participatory process is recommended, as it helps to improve the accuracy of the logic model and provides stakeholders with a common understanding of what the program is supposed to achieve and how it is supposed to achieve it. It is important to remember that the logic model is not static; it is an iterative tool. As the program changes, the logic model should be revised to reflect the changes, and these revisions should be documented.

The following key questions should be considered once the program logic model is completed:

- Are all activities, outputs and outcomes included?

- Does each outcome state an intended change?

- Is it reasonable to expect that the program's activities will lead to the program's outcomes?

- Are the causal linkages plausible and substantiated by the program theory?

- Are all the elements clearly stated?

- Are the outcomes measurable?

- Do the activities and outcomes address a demonstrated need?

- Is the final outcome at a lower level than the expected results of the departmental PAA?

6.0 Performance Measurement Strategy Framework

6.1 Overview of the Performance Measurement Strategy Framework

The PM Strategy Framework identifies the indicators required to monitor and gauge the performance of a program. Its purpose is to support program managers in:

- continuously monitoring and assessing the results of programs as well as the efficiency of their management;

- making informed decisions and taking appropriate, timely action with respect to programs;

- providing effective and relevant departmental reporting on programs; and

- ensuring that the information gathered will effectively support an evaluation.

Program managers should consult with heads of evaluation on the selection of indicators to help ensure that the indicators selected will effectively support an evaluation of the program. See Section 2.3 of this guide for more information on the roles and responsibilities of program managers and heads of evaluation.

6.2 Content of the Program Performance Measurement Strategy Framework

Table 3 summarizes the major components of the PM Strategy Framework. The framework should include the program's title as shown in the departmental PAA as well as the PAA elements that are directly linked to the program, i.e. the program activities, subactivities and/or sub-subactivities. It should also include the program's outputs, immediate and intermediate outcomes (as defined in the logic model), as well as one or more indicators for each output and outcome. For each indicator, provide:

- the data source(s)

- the frequency of data collection

- baseline data

- targets and timelines for when targets will be achieved

- the organization, unit and position responsible for data collection

- the data management system used
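The items listed above map naturally onto one record per indicator. The sketch below shows one possible way to hold such a record and to flag the items that still need to be filled in. The `IndicatorRecord` class, its field names and the sample values are assumptions made for illustration; they are not taken from Table 3.

```python
from dataclasses import dataclass, fields
from typing import List, Optional


@dataclass
class IndicatorRecord:
    """Hypothetical record for one indicator in a PM Strategy Framework."""
    indicator: str
    linked_result: str  # the output or outcome from the logic model
    data_sources: Optional[str] = None
    collection_frequency: Optional[str] = None
    baseline: Optional[str] = None
    target_and_timeline: Optional[str] = None
    responsible_for_collection: Optional[str] = None
    data_management_system: Optional[str] = None


def missing_fields(record: IndicatorRecord) -> List[str]:
    """List the fields of a record that are still empty."""
    return [f.name for f in fields(record) if not getattr(record, f.name)]


# Illustrative values only.
record = IndicatorRecord(
    indicator="Percentage of participants completing training",
    linked_result="Training sessions delivered",
    data_sources="Program administrative files",
    collection_frequency="Quarterly",
)
print(missing_fields(record))
```

A check of this kind could be run over every indicator before the framework is sent to the head of evaluation for review, so that empty baselines, targets or responsibilities are raised early.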

6.3 Performance Measurement Frameworks and the Performance Measurement Strategy Framework

The Policy on Management, Resources and Results Structures (MRRS) requires the development of a departmental Performance Measurement Framework (PMF), which sets out the expected results and the performance measures to be reported for programs identified in the PAA. The PMF is intended to communicate the overarching framework through which a department will collect and track performance information about the intended results of the department and its programs. The indicators in the departmental PMF are limited in number and focus on supporting departmental monitoring and reporting.

The PM Strategy Framework is used to identify and plan how performance information will be collected to support ongoing monitoring of the program and its evaluation. It is intended to more effectively support both day-to-day program monitoring and delivery and the eventual evaluation of that program. Accordingly, the PM Strategy Framework may include expected results, outputs and supporting performance indicators beyond the limits established for the expected results and performance indicators to be included in the MRRS PMF (see Figure 2 below). Unlike the MRRS PMF, the PM Strategy Framework has no imposed limit on the number of indicators that can be included; however, successful implementation of the PM Strategy is more likely if indicators are kept to a reasonable number.

As illustrated in Figure 2, the indicators in the PM Strategy Framework focus on supporting ongoing program monitoring and evaluation activities and therefore align with and complement the indicators included in the departmental PMF. In instances where the program is shown as a distinct program in the PAA and indicators have been identified in the departmental PMF, the PM Strategy Framework should include, at a minimum, the indicators reported in the departmental PMF. When a PM Strategy is developed for a new program that is not represented in the departmental PAA, the outcomes, outputs and related indicators developed for the PM Strategy Framework should be considered for inclusion in the departmental MRRS.

6.4 Accountabilities and Reporting

The PM Strategy Framework should be accompanied by a short text that describes:

- the reporting commitments and how they will be met, including who will analyze the data, who will prepare the reports, to whom they will be submitted, by when, what information will be included, the purpose of the reports and how they will be used to improve performance; and

- if relevant, the potential challenges associated with data collection and reporting, as well as mitigating strategies for addressing these challenges (e.g. there may not be a system that can be used for data management).

6.5 Considerations when Developing the Performance Measurement Strategy Framework

The chart below provides guidance on how to develop the PM Strategy Framework.

| Step | Description | Comments |

|---|---|---|

| 1. | Start with the MRRS PMF: Review the MRRS PMF that the department developed in accordance with the Policy on MRRS. Include, as appropriate, the performance indicators from the MRRS PMF in the PM Strategy Framework. |

The PM Strategy Framework should not be developed in isolation. In accordance with the Policy on MRRS, all programs represented in a department's PAA must contribute to its strategic outcome(s). As such, when developing the PM Strategy Framework, the performance indicators should complement those already established in the departmental PMF. |

| 2. | Identify performance indicators: Develop or select at least one performance indicator for each output and each outcome (immediate, intermediate and ultimate) that has been identified in the program logic model. Keep in mind that, in addition to day-to-day program monitoring, the performance indicators will also be used for evaluation purposes. As such, it is recommended that program managers also consider the core issues for evaluation (i.e. relevance and performance) and consult with heads of evaluation when developing the performance indicators. |

There are two types of indicators: quantitative and qualitative.

Keep the number of performance indicators to a manageable size. A small set of good performance indicators is more likely to be implemented than a long list of indicators. |

| 3. | Identify data sources: Identify the data sources and the system that will be used for data management. |

There are a number of possible data sources:

Use readily available information. Take advantage of any available sources of information at your disposal. |

| 4. | Define frequency and responsibility for data collection: Identify frequency for data collection and the person(s) or office responsible for the activity. |

Describe how often performance information will be gathered. Depending on the performance indicator, it may make sense to collect data on an ongoing, monthly, quarterly, annual or other basis. When planning the frequency and scheduling of data collection, an important factor to consider is management's need for timely information for decision making. In assigning responsibility, it is important to take into account not only which parties have the easiest access to the data or sources of data but also the capacities and system that will need to be put in place to facilitate data collection. |

| 5. | Establish baselines, targets and timelines. |

Performance data can only be used for monitoring and evaluation if there is something to which it can be compared. A baseline serves as the starting point for comparison. Performance targets are then required to assess the relative contribution of the program and ensure that appropriate information is being collected. Baseline data for each indicator should be provided by the program when the PM Strategy Framework is developed. Often, and particularly for indicators related to higher-level outcomes, this information will have already been collected by program managers as part of their initial needs assessment and to support the development of their business case. Targets are either qualitative or quantitative, depending on the indicator. Some sources and reference points for identifying targets include:

If a target cannot be established or if the program is unable to establish baseline data at the outset of the program, explicit timelines for when these will be established as well as who is responsible for establishing them should be stated. |

| 6. | Consult and verify: As a final step, program managers should consult with heads of evaluation and other specialists in performance measurement to validate the performance indicators and confirm that the resources required to collect data are budgeted for. Consultation with other relevant program stakeholders (e.g. information management personnel) is also encouraged.

|

Key questions to be considered during the consultation and verification stage include:

If the answer to any of the above questions is "no," adjustments need to be made to the indicators or to the resources allocated for implementation of the PM Strategy. |
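Two of the steps above lend themselves to a simple mechanical check: Step 2 (at least one indicator for each output and outcome) and Step 5 (comparison against a baseline and a target). The sketch below illustrates both. The function names and sample data are hypothetical, and the progress formula is an illustrative assumption that applies only to quantitative indicators; the guide itself does not prescribe a calculation.

```python
from typing import Dict, List, Optional


def results_without_indicators(results: List[str],
                               indicators: Dict[str, List[str]]) -> List[str]:
    """Return the outputs and outcomes that have no indicator yet (Step 2)."""
    return [r for r in results if not indicators.get(r)]


def progress_toward_target(baseline: float, target: float,
                           current: float) -> Optional[float]:
    """Share of the baseline-to-target distance covered so far (Step 5).

    Illustrative assumption, not a formula prescribed by the guide.
    Returns None when baseline equals target, since no distance is defined.
    """
    if target == baseline:
        return None
    return (current - baseline) / (target - baseline)


# Illustrative data only.
results = ["Output A", "Immediate outcome B", "Intermediate outcome C"]
indicators = {
    "Output A": ["Number of sessions delivered"],
    "Immediate outcome B": [],
}
print(results_without_indicators(results, indicators))
print(progress_toward_target(baseline=40.0, target=60.0, current=50.0))
```

Here two of the three results still lack an indicator, and the sample indicator has covered half of the distance between its baseline and its target.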

Implementation

The full benefits of the PM Strategy Framework can only be realized when it is implemented. As such, program managers should ensure that the necessary resources (financial and human) and infrastructure (e.g. data management systems) are in place for implementation. Program managers should begin working at the design stage of the program to create databases, reporting templates and other supporting tools required for effective implementation. Following the first year of program implementation, they should undertake a review of the PM Strategy to ensure that the appropriate information is being collected and captured to meet both program management and evaluation needs.

Keep in mind that, according to the Directive on the Evaluation Function, the head of evaluation is responsible for "submitting to the Departmental Evaluation Committee an annual report on the state of performance measurement of programs in support of evaluation" (Section 6.1.4, subsection d). Given that the PM Strategy Framework represents a key source of evidence for this annual report, its implementation is especially important.

7.0 Evaluation Strategy

7.1 Overview of the Evaluation Strategy

In the Government of Canada, evaluation is defined as "the systematic collection and analysis of evidence on the outcomes of programs to make judgments about their relevance, performance and alternative ways to deliver them or to achieve the same results" (Policy on Evaluation, Section 3.1). Evaluations can be conducted in a variety of ways to serve a variety of purposes and, consequently, can be designed to address a multiplicity of issues.

The Evaluation Strategy is a high-level overview of the evaluation plans for a program. The inclusion of an Evaluation Strategy in the PM Strategy is important because it allows program managers and heads of evaluation to:

- Ensure that the data generated through the PM Strategy Framework can effectively support evaluation and will be available in a timely manner. It also allows for the identification of additional data that may need to be collected to support the evaluation.

- Identify the rationale and need to undertake an evaluation well in advance of legislated or policy-driven evaluation deadlines (e.g. the FAA, Policy on Evaluation). This is done, in part, to inform the development of the Departmental Evaluation Plan, which deputy heads are required to approve annually in accordance with the Policy on Evaluation (Section 6.1.7).

- Engage in early planning for evaluations and develop rigorous cost-effective evaluation approaches and designs (which might not be possible if evaluation planning is left until later in the programming cycle).

Consultation between program managers and heads of evaluation on the Evaluation Strategy also supports heads of evaluation in developing the annual report on the state of performance measurement of programs in support of evaluation that is submitted to the Departmental Evaluation Committee (Directive on the Evaluation Function, Section 6.1.4).

7.2 Content of the Evaluation Strategy

It is recommended that the Evaluation Strategy include details on:

- the drivers and rationale for the evaluation (e.g. information needs of deputy heads, program renewal, support for expenditure management, FAA requirements, commitments expressed in Treasury Board submissions, or policy commitments under the Policy on Evaluation or Cabinet Directive on Streamlining Regulations, among others);

- resource commitments in support of evaluation, including resources as described in Treasury Board submissions, and other resource commitments;

- an initial evaluation framework or the timing and responsibilities for the development of the evaluation framework (along with a rationale for not including the evaluation framework in the PM Strategy); and

- other details related to the evaluation.

It is important to note that the program manager is responsible for developing and implementing the PM Strategy and for consulting with the head of evaluation in doing so (Directive on the Evaluation Function, Section 6.2.3), whereas the evaluation framework ultimately falls under the purview of the head of evaluation. While departments are strongly encouraged to develop an initial evaluation framework (even at a rudimentary level) at the outset of a program, there may be instances where the head of evaluation decides (in consultation with the program manager) that, based on the risks and other characteristics of the program, developing an initial evaluation framework is not feasible at the time the PM Strategy is created. For example, the head of evaluation may decide that in a given low-risk program, the data being proposed for collection by the program manager in implementing the PM Strategy is sufficient to support an evaluation that addresses the five core evaluation issues (see Table 5). In such cases, an initial evaluation framework does not need to be included in the Evaluation Strategy. Nevertheless, the rationale for the head of evaluation's decision to forgo the early development of a draft evaluation framework at the outset of a program should be documented in the Evaluation Strategy. Furthermore, the Evaluation Strategy should outline a clear plan to develop an initial evaluation framework at the earliest possible time.

7.3 Evaluation Frameworks

An important element to include in the Evaluation Strategy is the draft evaluation framework, as it outlines proposed evaluation questions and identifies the data required to address these questions (see Table 4). The development of the evaluation framework is, ultimately, the responsibility of the head of evaluation. The evaluation framework is an important preparatory tool in the evaluation process because it allows the head of evaluation to give advance consideration to the evaluation approach, to identify data requirements for the evaluation and to determine how these requirements will be met. Certain data requirements for addressing the questions in the evaluation framework will be fulfilled by the indicators identified in the PM Strategy Framework (see Section 6.0 of this guide). Additional data requirements identified in the evaluation framework may require adjustments or additions to the monitoring data being collected on the program. In some cases, the additional data required will be collected by evaluators as part of the evaluation process.

The draft evaluation framework should include:

- initial evaluation questions covering the five core evaluation issues and other issues as identified by the program manager and others (e.g. the deputy head);

- indicators;

- data sources and methods of data collection (note that in some cases the data source may be the monitoring data collected on the program as the PM Strategy is implemented);

- where applicable, information on what baseline data needs to be collected and timelines for data collection; and

- where required, a description of simple adjustments that can be made to administrative protocols and procedures (e.g. third-party reporting templates, financial record keeping) by the program area to ensure that the evaluation's data requirements are met.

The head of evaluation should work with the program manager to revisit the evaluation framework periodically (e.g. on an annual basis), revise it as required, and develop a final evaluation framework at the start of the evaluation process.

| Questions | Indicators | Data sources and methods | Baseline data needed | Timelines for data collection | Adjustments to administrative protocols and procedures needed |
|---|---|---|---|---|---|
| Relevance | | | | | |
| Question 1 | Indicators 1 to n | | | | |
| Question 2 | Indicators 1 to n | | | | |
| Question 3 | Indicators 1 to n | | | | |
| Question n | Indicators 1 to n | | | | |
| Performance (effectiveness, efficiency and economy)* | | | | | |
| Question 1 | Indicators 1 to n | | | | |
| Question 2 | Indicators 1 to n | | | | |
| Question 3 | Indicators 1 to n | | | | |
| Question n | Indicators 1 to n | | | | |

*Note: It is expected that in addressing core evaluation issues 4 and 5 (see Table 5 below), evaluation questions addressing specific program outcomes as well as more general questions about program performance will be developed.

8.0 Conclusion

This guide is intended to support the development of PM Strategies that will effectively support evaluation. To establish a sound foundation for the PM Strategy and align it with future evaluations, it is important that the document outlining the PM Strategy also include a program profile, a logic model, a Performance Measurement Strategy Framework and an Evaluation Strategy.

The guide will be updated periodically as required. Enquiries concerning this guide should be directed to:

Centre of Excellence for Evaluation

Results-Based Management Division

Treasury Board of Canada Secretariat

222 Nepean Street

Ottawa, Ontario

K1A 0R5

Email: evaluation@tbs-sct.gc.ca

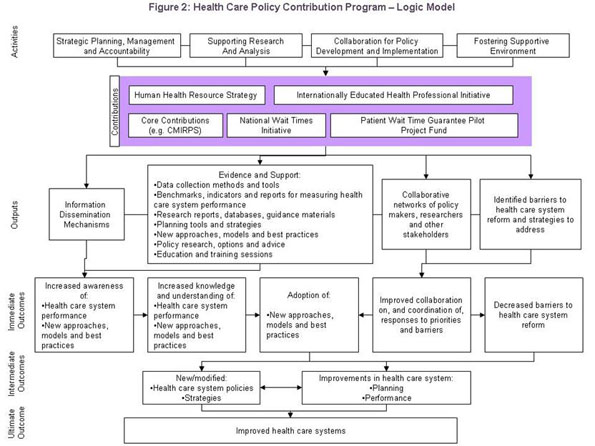

Appendix 1: Sample Logic Model

As stated in Section 5.2, there are many ways to present a logic model; this is simply one example.

Figure 3 - Text version

The example in Appendix 1 illustrates one of the many ways to present a logic model. As described in further detail under Section 5.2 Logic Model Content, a logic model is one of the four components of the PM Strategy. Its level of detail should be comprehensive enough to adequately describe the program but concise enough to capture the key details on a single page. Appendix 1 provides an illustration of a particular health care program's logic model, featuring its inputs, activities, outputs, immediate outcomes, intermediate outcomes and ultimate outcomes.

Appendix 2: Review Template and Self-Assessment Tool

This review template and self-assessment tool can be used by program managers, performance measurement specialists and evaluation specialists when developing and reviewing the PM Strategy.

Performance Measurement Strategy - Review Template

Program Manager:

Departmental Evaluation Official:

Program Title:

Program Status (new, renewal, other):

Department:

Overall Value and Period of Funding:

Reviewed by:

Date:

| Section | Reviewer Comments | Reviewer's Overall Assessment: Strong | Reviewer's Overall Assessment: Acceptable | Reviewer's Overall Assessment: To be improved |
|---|---|---|---|---|
| Introduction | | | | |
| 1. Program Profile | | | | |
| 1.1 Need for the program | | | | |
| 1.2 Alignment with government priorities | | | | |
| 1.3 Target population(s) | | | | |
| 1.4 Stakeholders | | | | |
| 1.5 Governance | | | | |
| 1.6 Resources | | | | |
| 2. Logic Model | | | | |
| 2.1 Logic model | | | | |
| 2.2 Narrative | | | | |
| 3. PM Strategy Framework | | | | |
| 3.1 PM Strategy Framework | | | | |
| 3.2 Accountabilities and reporting | | | | |
| 4. Evaluation Strategy | | | | |
| 4.1 Requirements, timelines and responsibilities | | | | |
| 4.2 Draft evaluation framework and data requirements | | | | |

| Introduction | Review Criteria | Comments |
|---|---|---|
| Introduction | | |

| 1. Program Profile | Review Criteria | Comments |
|---|---|---|
| 1.1 Need for the program | | |
| 1.2 Alignment with government priorities | | |
| 1.3 Target population(s) | | |
| 1.4 Stakeholders | | |
| 1.5 Governance | | |
| 1.6 Resources | | |

| 2. Logic Model | Review Criteria | Comments |
|---|---|---|
| 2.1 Logic model | | |
| 2.2 Narrative | | |

| 3. PM Strategy Framework | Review Criteria | Comments |
|---|---|---|
| 3.1 PM Strategy Framework | | |
| 3.2 Accountabilities and reporting | | |

| 4. Evaluation Strategy | Review Criteria | Comments |
|---|---|---|
| 4.1 Requirements, timelines and responsibilities | | |
| 4.2 Draft evaluation framework and data requirements (if applicable) | | |

Appendix 3: Glossary

- Activity

An operation or work process that is internal to an organization and uses inputs to produce outputs (e.g. training, research, construction, negotiation, investigation).

- Baseline

The starting point against which subsequent changes are compared and targets are set.

- Benchmarking

The action of identifying, comparing, understanding and adapting outstanding practices found either inside or outside an organization. Benchmarking is based mainly on common measures and the comparison of obtained results both internally and externally. Comparing results against those of a best practice organization will help the organization to know where it is in terms of performance and to take action to improve its performance.

- Cost

A resource expended to achieve an objective.

- Delivery partner

A public or private organization that assists the Government of Canada in delivering the program.

- Departmental Evaluation Plan

A clear and concise framework that establishes the evaluations a department will undertake over a five-year period, in accordance with the Policy on Evaluation and its supporting directive and standard.

- Departmental Evaluation Committee

A senior executive body chaired by the deputy head or senior-level designate. This committee serves as an advisory body to the deputy head with respect to the Departmental Evaluation Plan, resourcing and final evaluation reports and may also serve as the decision-making body on other evaluation and evaluation-related activities of the department. Refer to Annex B of the Policy on Evaluation for a full description of the roles and responsibilities of the committee.

- Economy

Minimizing the use of resources. Economy is achieved when the cost of resources used approximates the minimum amount of resources needed to achieve expected results.

- Effectiveness

The extent to which a program is achieving expected outcomes.

- Efficiency

The extent to which resources are used such that a greater level of output is produced with the same level of input or a lower level of input is used to produce the same level of output. The level of input and output could be increases in quantity or quality, decreases in quantity or quality, or both.

- Evaluation

The systematic collection and analysis of evidence on the outcomes of policies and programs to make judgments about their relevance, performance and alternative ways to deliver programs or to achieve the same results.

- Expected result

An outcome that a program, policy or initiative is designed to produce.

- Indicator

A qualitative or quantitative means of measuring an output or outcome with the intention of gauging the performance of a program.

- Input

The financial and non-financial resources used by organizations, policies, programs and initiatives to produce outputs and accomplish outcomes (e.g. funds, personnel, equipment, supplies).

- Logic model

A depiction of the causal or logical relationship between the inputs, activities, outputs and outcomes of a given policy, program or initiative.

- Management, Resources and Results Structures (MRRS)

Management, Resources and Results Structures provide a common, government-wide approach to the collection, management and public reporting of financial and non-financial information. The MRRS consists of a department's strategic outcome(s), Program Activity Architecture (PAA), supporting Performance Measurement Framework (PMF) and governance structure.

- Needs assessment

Policy tool used to shape a new program. A needs assessment involves primary data collection to identify the need for the program and includes a preliminary description of the program intervention required to address that need. It also assesses whether there are any existing services in the federal, provincial or municipal government spheres or in the private sector that are within the scope of the proposed program intervention.

- Objective

The high-level, enduring benefit toward which effort is directed.

- Outcome

An external consequence attributed, in part, to an organization, policy, program or initiative. Outcomes are not within the control of a single organization, policy, program or initiative; instead, they are within the area of the organization's influence. Usually, outcomes are further qualified as immediate, intermediate, ultimate (or final), expected, direct, etc. There are three types of outcomes related to the logic model, defined as follows:

- Immediate outcome: an outcome that is directly attributable to a policy, program or initiative's outputs. In terms of time frame and level of reach, these are short-term outcomes involving an increase in a target population's awareness.

- Intermediate outcome: an outcome that is expected to logically occur once one or more immediate outcomes have been achieved. In terms of time frame and level of reach, these are medium-term outcomes and often involve a change in the behaviour of the target population.

- Ultimate (or final) outcome: the highest-level outcome that can be reasonably and causally attributed to a policy, program or initiative and is the consequence of one or more intermediate outcomes having been achieved. These outcomes usually represent the raison d'être of a policy, program or initiative. They are long-term outcomes that represent a change in the target population's state. Ultimate outcomes of individual programs, policies or initiatives contribute to the higher-level departmental strategic outcome(s).

- Output

Direct products or services that stem from the activities of an organization, policy, program or initiative and are usually within the control of the organization itself (e.g. pamphlet, water treatment plant, training session).

- Performance

The extent to which economy, efficiency and effectiveness are achieved by a policy or program.

- Performance measure

See indicator.

- Performance Measurement Framework (PMF)

A requirement of the Policy on Management, Resources and Results Structures, a PMF sets out an objective basis for collecting information related to a department's programs. A PMF includes the department's strategic outcome(s), expected results of programs, performance indicators and associated targets, data sources and data collection frequency, and actual data collected for each indicator.

- Performance Measurement Strategy (PM Strategy)

A PM Strategy is the selection, development and ongoing use of performance measures to guide program or corporate decision making. In this guide, the recommended components of the PM Strategy are the program profile, logic model, Performance Measurement Strategy Framework and Evaluation Strategy.

- Program

A group of related activities that are designed and managed to meet a specific public need and are often treated as a budgetary unit.

- Program Activity Architecture (PAA)

A structured inventory of all programs of a department. Programs are depicted according to their logical relationship to each other and the strategic outcome(s) to which they contribute. The PAA includes a supporting Performance Measurement Framework (PMF).

- Program of grants and contributions / Transfer payment programs

As defined in section 42.1 of the Financial Administration Act, a program of grants or contributions made to one or more recipients that is administered so as to achieve a common objective and for which spending authority is provided in an appropriation Act.

- Program theory

An explicit theory of how a program causes the intended or observed outcomes, including assumptions about resources and activities and how these lead to realizing intended outcomes.

- Reach

The individuals and organizations targeted and directly affected by a policy, program or initiative.

- Relevance

The extent to which a policy or program addresses a demonstrable need, is appropriate to the federal government and is responsive to the needs of Canadians.

- Reliability

The degree to which the results obtained by a measurement procedure can be replicated.

- Result

See outcome.

- Risk-based approach to determining evaluation approach and level of effort

A method whereby risk is considered when determining the evaluation approach for individual evaluations. Departments should determine, as required, the specific risk criteria relevant to their context. Specific risk criteria may include the size of the population that could be affected by non-performance of the program, the probability of non-performance, the severity of the consequences that could result, the materiality of the program and its importance to Canadians. Additional criteria could include the quality of the last evaluation and/or other studies, their findings, when they were conducted and whether these findings remain relevant, and the extent of change experienced in the program's environment.

- Strategic outcome

A long-term and enduring benefit to Canadians that stems from a department's mandate, vision and efforts. It represents the difference a department wants to make for Canadians and should be a clear, measurable outcome that is within the department's sphere of influence.

- Target

A measurable performance or success level that an organization, program or initiative plans to achieve within a specified time period. Targets can be expressed quantitatively or qualitatively.

- Target population

The group of individuals that a program intends to influence and who will benefit from the program.

- Value for money

The extent to which a program demonstrates relevance and performance.

Appendix 4: References

Legislation

Treasury Board policy instruments

- Policy on Evaluation

- Directive on the Evaluation Function

- Standard on Evaluation for the Government of Canada

- Policy on Transfer Payments

- Directive on Transfer Payments

Guidance documents

- The Evaluation Guidebook for Small Agencies

- Guidance on Performance Measurement Strategies under the Policy on Transfer Payments

- Guide to Costing

- Preparing and Using Results-based Management and Accountability Frameworks

Other relevant publications

- From Red Tape to Clear Results – The Report of the Independent Blue Ribbon Panel on Grant and Contribution Programs

- Results-Based Management Lexicon