Artificial Intelligence Strategy: Immigration, Refugees and Citizenship Canada

Message from the Deputy Minister

Artificial intelligence (AI) has become an integral part of our daily lives. It enhances productivity and offers convenience in ways we sometimes take for granted. AI’s ability to analyze vast amounts of data at unprecedented speeds is enabling advancements in fields ranging from climate science and healthcare to finance, transportation, education and manufacturing.

Despite AI's immense power, a humanistic approach is essential to harness its potential responsibly. This is particularly true in fields like immigration that impact people's lives in ways that are deeply personal and often significant. While AI excels at data processing, it lacks nuanced understanding and ethical judgment. For AI to truly benefit society and avoid harm to vulnerable populations, human values like transparency, anti-racism and accountability must govern its use.

To meet this moment, Immigration, Refugees and Citizenship Canada has formalized its governance of AI in the inaugural IRCC AI Strategy, aligning our approach with the broader AI Strategy for the Federal Public Service 2025-2027. This alignment ensures that IRCC’s use of AI supports the Government of Canada’s commitment to responsible, transparent, and secure AI adoption across federal institutions. Our strategy also reinforces the Government of Canada’s focus on central AI capacity, policy and governance, workforce readiness, and public engagement, all of which are essential to maintaining public trust in AI-enabled government services.

Our strategy sets the guardrails for adapting to departmental needs, managing cybersecurity threats, and ensuring program integrity. Grounded in international best practices and Government of Canada guidance, it relies on employees and stakeholders to stay on track through continuous engagement and improvement. The IRCC AI Strategy is a team effort, born from years of careful experimentation and collaboration across the department. As we set out on the path forward, this vision will guide our work and support the ethical and inclusive use of AI at our department.

Dr. Harpreet S. Kochhar

Deputy Minister of Immigration, Refugees and Citizenship Canada

Message from the Chief Digital Officer

I am delighted to present IRCC’s Artificial Intelligence Strategy.

IRCC plays a crucial role in shaping Canada’s socio-economic landscape by facilitating immigration, promoting diversity and supporting newcomer integration.

As IRCC collects a vast amount of personal information to deliver its programs and services, our AI strategy affirms our ongoing commitment to protecting and securing the privacy of our clients.

As an organization, we continually explore emerging technologies to help us automate steps in our operations to improve efficiency and program integrity. Since 2018, the department has used advanced analytics and machine learning to help us gain deeper insights from program and applicant data. Now, as we carefully learn about the opportunities that newer AI technologies have to offer, our AI strategy builds on existing governance to chart the path for sound experimentation and the safe adoption of AI for a wider range of purposes.

We recognize the substantial benefits that AI brings and the pressing need to embrace it in today’s world. We are exploring, or continuing to explore, how AI can improve client service, drive efficiencies in our operations, improve program integrity, and protect the immigration system from fraud and cyber incidents. We are committed to being at the forefront of responsible technology adoption. To ensure we are on the right track, we will also review our AI initiatives, learn from best practices and integrate those insights into future iterations of our evergreen strategy.

While AI offers many positive, transformative capabilities, it comes with inherent risks, such as bias, privacy concerns and cybersecurity threats. This strategy isn’t just a document—it’s our commitment to implementing the right checks and balances to protect the Government of Canada, our clients and Canadians.

Our strategy is a flexible roadmap. It will evolve in response to emerging trends, policies and practices that shape the AI landscape, both domestically and internationally.

As we continue modernizing our immigration system, let us remember that innovation thrives at the intersection of ambition and responsibility. Our strategy sets the stage for a future where technology empowers our teams and helps us create a more welcoming experience for all.

Jason Choueiri

Chief Digital Officer and Senior Assistant Deputy Minister, Client Service, Innovation

Executive Summary

Immigration, Refugees and Citizenship Canada (IRCC) is at the forefront of integrating artificial intelligence (AI) into government operations. The IRCC AI Strategy outlines how we will use AI to boost efficiency, enhance service delivery and strengthen program integrity, all while adhering to the highest ethical standards. It also proposes key priority areas for its ongoing implementation.

The department has already been exploring AI’s potential, recognizing its capacity to streamline processes and inform decision-making. Our success in experimenting with early, low-risk AI technologies, together with new pressures and demands, underscores the need for a more deliberate framework for adopting AI.

The IRCC AI Strategy is aligned with the AI Strategy for the Federal Public Service 2025-2027, ensuring that our use of AI is consistent with government-wide priorities on responsible adoption, governance, talent development and transparency. As a leader in AI experimentation within the Government of Canada, IRCC will continue to contribute insights and best practices to the federal AI ecosystem.

Our AI strategy operationalizes a framework that is built on Government of Canada guidance and internationally recognized principles, ensuring that we use AI only in ways that benefit the public, protect privacy and program integrity, and promote equitable outcomes. IRCC is committed to being fully transparent and accountable in our use of AI. To reinforce privacy and program integrity, we will explore AI approaches that maintain Canadian data residency and support Canadian AI solutions and innovation.

IRCC’s AI Strategy is not just a plan, but a commitment to continuous engagement and refinement. As the AI landscape evolves, so too will the department’s measured approach, ensuring that IRCC remains at the leading edge of innovation while maintaining the trust and confidence of Canadians and our clients.

This strategy sets the stage for a future where AI is a tool to increase operational effectiveness and a force for positive change, helping the department meet the complex challenges of tomorrow.

Vision - Where we want to be

AI adoption framework - How we will use AI to achieve our objectives

| | Implementation | | Break new barriers |
|---|---|---|---|
| Usage | Everyday: AI to perform administrative tasks not part of the decision-making process | Program: AI to inform program operations | Experimental: no current ambition for adoption due to risk and complexity |
| Tasks | Employee productivity: performing administrative tasks | Program productivity: informing decision makers | Conducting responsible experiments with emerging AI technologies, such as using AI-powered and adjustable predictive analytics to model immigration flows and forecast impacts on Canada's economy |

AI Charter

Our approach will:

- Contribute to the public good

- Put people first

- Respect privacy

- Promote equity

- Offer transparency

- Produce reliable results

- Ensure accountability

- Remain secure

- Align with best practices

- Continuously improve

AI Principles

How we will do things right

- Human-centred and accountable

- Transparent and explainable

- Fair and equitable

- Secure and privacy-protecting

- Valid and reliable

Priorities (Key activities)

How we will do the right things

- Establish an AI Centre of Expertise

- Bolster our Governance Framework

- Build an AI-ready workforce

- Experiment with AI

- Develop an Engagement Strategy

Background

IRCC has a diverse mandate, befitting the diversity of our country and the crucial role immigration continues to play in shaping it. Since 1994, the department has facilitated the entry of temporary residents; managed the selection, settlement and integration of newcomers (Footnote 1); and granted Canadian citizenship to those eligible. In 2013, we took on the additional responsibility of issuing passports.

To deliver on our mandate, IRCC has been leveraging advances in technology to create efficiencies and better manage workloads. The most rapid advances are now in the field of artificial intelligence (AI), a catch-all term for technologies that enable computers and machines to simulate human intelligence and problem-solving capabilities, such as learning, reading, writing, talking, seeing, analyzing, and making predictions and recommendations.

Harnessing the power of AI

Around the world, researchers, businesses and governments are harnessing AI to help them make extraordinary leaps in areas such as automating farm management, detecting cancer and predicting where people will flee to after disasters.

Our department recognizes that people are increasingly finding value in using publicly available AI tools in both professional and personal settings. Technology suppliers are also adding more AI-powered applications into their products and services by the minute.

With the help of AI, employees can get through routine administrative tasks faster, allowing them to focus on more complex assessments of risk, fraud, admissibility and other considerations that require their judgment and expertise.

While AI has immense potential, it also poses risks. We have seen that AI systems can perpetuate bias and discrimination, erode trust, obscure accountability for decisions, and create problems with privacy and data protection. They can also be misused by bad actors. When these problems occur, they can harm individuals and groups, particularly the most vulnerable among us. That is why IRCC’s approach to automated decision-making is deliberately transparent and governed.

We must take into careful consideration the ethical, social and legal implications of using AI in order to ensure the integrity and fairness of our programs. We must also weigh its environmental impacts. And we must always protect the safety, rights, dignity and privacy of our clients and employees.

That necessarily means a slow and cautious approach to incorporating AI into our operations. Given the far-reaching implications of IRCC’s mandate and the life-altering consequences of some of our decisions, we cannot afford to move forward with new technologies and tools until they’ve been thoroughly tested and proven reliable, safe and secure.

How AI can help

As IRCC continues to process growing volumes of applications, we believe AI can help us navigate a variety of operational and administrative challenges, and unlock new business opportunities, such as:

- ✔ Improving service delivery amid complex security concerns and global humanitarian crises

- ✔ Monitoring program outcomes for integrity and equity

- ✔ Expediting our efforts to reduce backlogs and improve processing times

- ✔ Navigating growing resource constraints, policy intricacies and legal compliance

- ✔ Staying ahead of AI-enabled program integrity threats by developing more effective anti-fraud measures

Technological scope

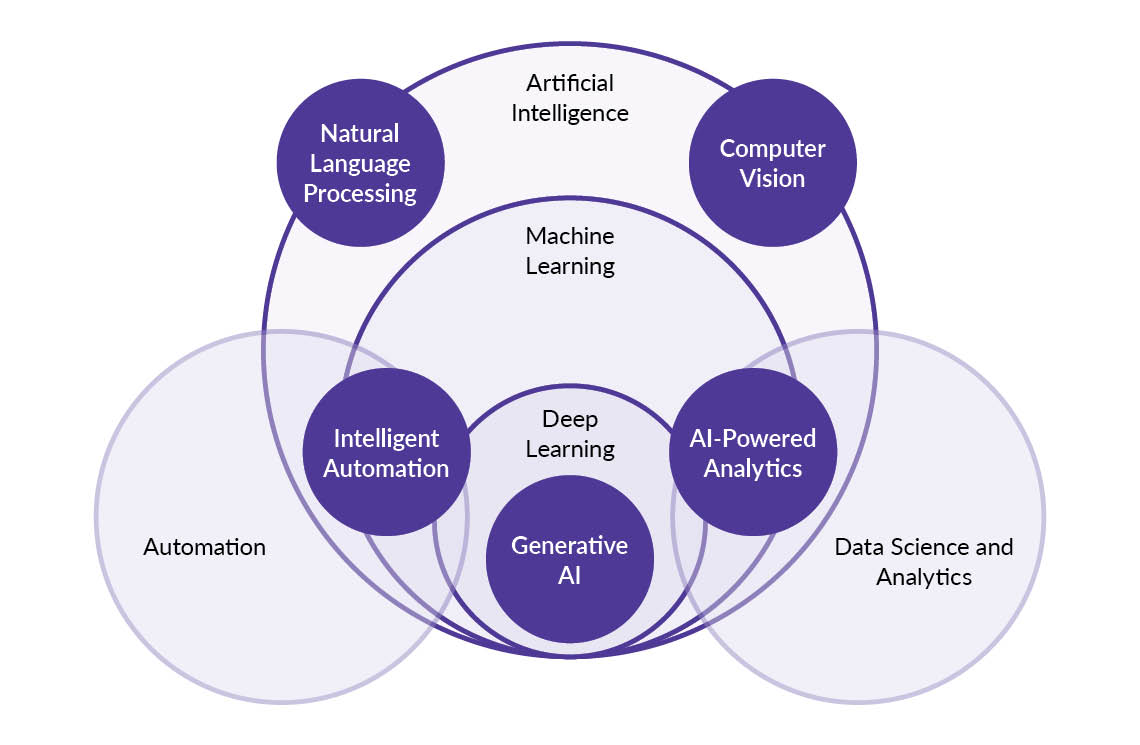

Building on our success in tapping into technological progress, we’re exploring AI and its related disciplines to find new opportunities we can leverage to better deliver on our mandate.

Here’s how we see the different types of AI and their relationships to each other, including how they intersect with automation.

AI relationships

Text version: AI relationships

Venn diagram illustrating AI technologies: natural language processing and computer vision sit under machine learning; intelligent automation, AI-powered analytics and generative AI sit at the intersection; and automation and data science overlap on the outer edges.

IRCC's AI journey

IRCC has been a federal government leader in incorporating advancements in automation and AI for more than a decade.

Did you know that IRCC has used AI since 2013?

The Advanced Analytics Solutions Centre in IRCC’s Service Delivery sector was founded in 2013. Early on, the centre experimented with AI and developed proofs of concept. In 2017, senior management approved a proposal to use AI-based models to generate “if-then” rules for automation to manage the rise in temporary resident visa volumes.

Since then, this approach has been used in other temporary and permanent resident programs to accelerate the processing of routine cases, and to strengthen program integrity by identifying fraud patterns. Based on internal data, more than 7 million applications have been assessed by this automation.

In 2020, the first email triage system was developed for clients requesting an exemption from pandemic-related travel restrictions. This cloud-based system is now in use in IRCC’s Client Support Centre and in more than 50 offices overseas. It triages about 4 million emails annually, using AI to identify what clients are enquiring about, so that their emails can be handled more efficiently.

Automation

IRCC has used simple rule-based automation for years to perform administrative tasks and free up employees to focus on more complex activities. For example, robotic process automation technology can copy information from one place and paste it in another, something employees used to have to do manually. Another example is IRCC’s electronic travel authorization system, which is built on automated “if-then” rules. Automation remains a valuable tool for increasing efficiency. Our approach to implementing it has helped us improve our internal processes as well as establish guardrails for the governance of technology.

Machine learning

Since 2018, IRCC has used predictive analytics enabled by machine learning to generate insights from decisions on past client applications. These insights aid in the design of automated systems to triage incoming client applications based on their complexity, and to optimize workload distribution across our global processing network. In some lines of business, these systems identify applications that are straightforward and low risk, and then use business rules automation to validate their eligibility to help inform the officer’s final decision. Decision support tools based on machine learning have increased our department’s productivity, reduced processing times and improved our ability to detect risks.
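To make the difference between routing a file and deciding on it more concrete, the Python sketch below shows how static “if-then” triage rules of the kind described above might be expressed. It is a hypothetical illustration, not IRCC’s system: the `Application` fields, the `triage` function and the routing criteria are all assumptions. What it is meant to show is structural: the rules can only mark a file as routine or send it to standard review, and there is no branch that refuses an application, so the final decision always rests with an officer.

```python
from dataclasses import dataclass, field
from enum import Enum


class Route(Enum):
    ROUTINE = "routine"    # straightforward, low risk: eligibility pre-checked, officer still decides
    STANDARD = "standard"  # full officer review


@dataclass
class Application:
    # Hypothetical, simplified fields chosen only for illustration.
    complexity_tier: int  # 1 = simplest; assumed to come from an ML-derived triage rule
    documents_complete: bool
    risk_flags: list[str] = field(default_factory=list)


def triage(app: Application) -> Route:
    """Apply static if-then rules that can route an application but never refuse it."""
    # Only the simplest, complete files with no risk flags are marked routine.
    if app.complexity_tier == 1 and app.documents_complete and not app.risk_flags:
        return Route.ROUTINE
    # Every other file goes to standard review; there is deliberately no "refuse" branch.
    return Route.STANDARD


if __name__ == "__main__":
    print(triage(Application(complexity_tier=1, documents_complete=True)).value)
    print(triage(Application(complexity_tier=1, documents_complete=True,
                             risk_flags=["document_anomaly"])).value)
```

Keeping refusals out of the automated path is a design choice: the rules shorten the path for simple files while leaving every adverse outcome to human judgment.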

Moving forward, machine learning can help IRCC improve program outcomes. For example, IRCC is experimenting with machine learning to provide recommendations on places in Canada where economic immigrants could settle. These recommendations are informed by an income prediction model that analyzes past earnings, a suite of statistical data focused on regional characteristics and clients’ locational preferences. The department is also experimenting with machine learning tools that detect anomalies and possible manipulation of documents such as academic records and bank statements. These tools have the potential to support us in detecting fraud in real time.

Intelligent automation

It is important to distinguish between the routine automation of repetitive tasks based on static rules, and what’s known as “intelligent automation,” which leverages AI to perform more complex, adaptive tasks that involve learning and decision-making. IRCC does not use any autonomous AI agents or intelligent automation systems that can refuse client applications. Systems that learn and adapt on their own are generally not suitable for use in an administrative decision-making context because their logic can be difficult to explain or reproduce.

Natural language processing

IRCC uses natural language processing technology to triage client enquiries into specific categories, enabling our officers around the world to spend more time supporting clients instead of sorting their questions. Natural language processing also supports conversational agents (or “chatbots”) we use to answer general enquiries with preprogrammed responses on social media.

Computer vision

Computer vision technology has several applications: it can help validate the identity of individuals and protect against fraud (e.g., photo morphing); it can automatically validate and crop passport photos according to our requirements (e.g., International Civil Aviation Organization (ICAO) standards) expediting the preprocessing of applications; and it can help protect the integrity of the online citizenship test against cheating.

Generative AI

Progress in AI has made it possible for systems to solve increasingly complex problems at a speed and scale not seen in the past. Operating under federal guidelines, employees are experimenting with publicly available generative AI tools to assist with advanced tasks such as brainstorming, conducting research, and summarizing or analyzing information. We are also developing in-house generative AI that can, for example, help our internal library employees generate annotated bibliographies for researchers.

Formalizing the department’s AI strategy

We are now formalizing our AI strategy to capture and transparently communicate how we are seizing the opportunities AI has to offer in a responsible way. Our AI strategy also positions IRCC to respond effectively to AI-related threats to program integrity.

The strategy’s foundations

IRCC is authorized to leverage electronic means, including artificial intelligence, to administer our immigration, refugee, citizenship and passport programs.

The department has devoted significant time and effort to AI governance, not only to support IRCC’s work, but to advance a whole-of-government approach. The department has dedicated multidisciplinary experts in law, technology, privacy, policy and operations who collaborate on issues related to ethical AI. There is also an oversight process and an executive-level committee to ensure that AI solutions are developed and deployed in a responsible manner.

IRCC has worked with the Treasury Board of Canada Secretariat on the development of its Directive on Automated Decision-Making and related tools, such as the Algorithmic Impact Assessment and the peer review process. IRCC has published more algorithmic impact assessments than any other federal department or agency. Additionally, IRCC has created internal guidelines for the use of generative AI in application processing.

Thanks to these efforts, IRCC is recognized as a Government of Canada leader in the responsible use of technology. We have a set of institutional ethics that has been shared with other federal, provincial and territorial departments, as well as with governments and academic institutions around the world.

Alignment with other guidance

IRCC has tailored our AI strategy to align with existing and emerging Government of Canada guidance, including the AI Strategy for the Federal Public Service 2025-2027 and other policies. Examples include Canada’s Digital Ambition to leverage modern technologies to improve service delivery and the Guide on the use of generative AI, which encourages cautious experimentation across the federal government.

The Directive on Automated Decision-Making remains central to our approach. It mandates that automated decision-making systems be used in a manner that reduces risks to clients, federal institutions and Canadian society, and leads to more efficient, accurate, consistent and interpretable decisions in compliance with Canadian law.

Other policies and directives that have shaped our approach to AI include the Access to information and privacy policy suite, Directive on Privacy Practices, Policy on Service and Digital, Directive on Service and Digital, Government of Canada digital standards playbook, Directive on Digital Talent, Greening Government Strategy, Gender-based Analysis Plus and other guidance on security, identity management, anti-racism, equity and inclusion, disaggregated data, official languages, and more.

IRCC is collaborating with our partners, including the Canada Border Services Agency, Statistics Canada and the Royal Canadian Mounted Police, to build consistency in our AI approaches and principles, enabling us to meet our shared objectives. To reinforce privacy and program integrity, IRCC will explore AI approaches that maintain Canadian data residency and support Canadian AI solutions and innovation.

Additional work is under way to regulate AI in Canada’s private and public sectors. IRCC will continue to assess our legal obligations regarding how we use AI.

The legislative and regulatory landscape is evolving as rapidly as the field of AI itself, as governments around the world take different approaches to dealing with the opportunities and challenges posed by AI. IRCC continues to monitor conversations around Canada’s Digital Charter Implementation Act and the EU Artificial Intelligence Act, among other developments. We will keep adjusting our approach to AI in conversation with federal and international partners as we learn from each other’s experiences.

New opportunities, new framework

Rather than prematurely committing to a single approach to AI that risks obsolescence, the AI strategy proposes a framework upon which the department can establish foundations and guardrails, build and test ideas, and implement projects as opportunities arise. Risks will be mitigated, successes celebrated and failures shared openly, helping to inform continuous improvement and learning.

The AI strategy is a living document. It will evolve in response to emerging trends, policies and practices that shape the AI landscape, both domestically and internationally.

The path forward: AI in action

The IRCC AI Strategy is a roadmap guiding our department’s use of AI for service delivery, as well as other external and internal functions. It is also a public pledge. It lays down the guardrails we’ll follow as we harness the power of AI to respond to evolving departmental needs and priorities, as well as to threats to cybersecurity and program integrity.

Vision statement

AI guiding principles

In line with widely acknowledged best practices as well as Government of Canada values and digital principles, IRCC has formalized a set of principles that will serve as a high-level framework guiding the responsible use of AI at all stages of its life cycle (Footnote 2). This approach will help us leverage AI’s benefits while mitigating its risks and protecting IRCC’s clients and stakeholders in the process.

IRCC will use AI in ways that are human-centred and accountable, transparent and explainable, fair and equitable, secure and privacy-protecting, and valid and reliable. These five principles form the foundation of IRCC’s AI Strategy and will guide its implementation, including future AI policies, processes and training.

Text version: IRCC AI principles

IRCC’s AI principles include being:

- human-centred and accountable

- transparent and explainable

- fair and equitable

- secure and privacy-protecting

- valid and reliable

Human-centred and accountable

- Engage in the responsible stewardship of AI systems to achieve beneficial outcomes for clients and Canadians. This includes using AI to help employees meet service expectations, advance inclusion of underrepresented populations, better meet labour market needs, improve the economic and social well-being of Canadians, and protect their safety and security.

- Use AI in ways that uphold fundamental rights and freedoms, aligning with human-centred values, such as democracy, equality, fairness, the rule of law, social justice, and the protection of data and privacy.

- Implement safeguards, including keeping a human in the loop, to ensure that AI systems are assisting people and that any risks arising from missed context or system errors are mitigated.

Transparent and explainable

- Provide meaningful information, appropriate to the context and consistent with best practices, to foster a general understanding of how IRCC uses AI and how these systems function. This may involve sharing details about the data inputs, the decisions made by various stakeholders throughout the AI life cycle, and the interactions between humans and the system.

- Avoid using “black box” AI models (where a system’s logic is opaque, unknowable or unexplainable) to make decisions on applications. Doing so would run counter to the administrative law principle that clients are entitled to a meaningful explanation of decisions and to a transparent appeals process.

Fair and equitable

- Use AI with explicit safeguards against bias in data and model design, recognizing heightened risks to protected or marginalized groups, and proactively prevent, detect, and correct unfair outcomes.

- Use methods to test for, identify, understand, measure and reduce unintended bias, recognizing that poorly designed AI systems can harm individuals, groups, communities, organizations and society as a whole.

Secure and privacy-protecting

- Keep AI systems secure and safe at every stage of their life cycle, making sure they function properly under both normal and challenging conditions. This includes preventing risks like unexpected control problems, data tampering, hacking, or unauthorized access to models, training data or personal information.

- Ensure protected, classified, personal or de-identified information is not entered in public AI tools, in line with guidance in Generative AI in your daily work.

- Apply and uphold standards and practices to protect privacy, including respecting people’s autonomy, identity and dignity. Privacy principles, a privacy-by-design approach, and values like anonymity, confidentiality and control will guide our decisions in procuring, developing and using AI systems.

Valid and reliable

- Use only AI systems that are trustworthy, consistent and capable of delivering meaningful outcomes.

- Design AI systems that are both valid and reliable by:

  - using diverse, high-quality and representative training data

  - continuously monitoring performance and adjusting models as needed

  - testing the system under varied scenarios to ensure both accuracy and consistency

Protecting privacy

Protecting privacy is top of mind at IRCC. A principal guardrail in our AI strategy is a privacy-by-design approach. We’ve put privacy safeguards in place at every stage of the process of acquiring, developing, experimenting with and using AI systems in our operations. That includes completing any mandatory privacy impact assessments, establishing any mandatory privacy protocols and implementing robust privacy controls for the protection of personal and de-identified information within these systems.

The initial stage involves making decisions about the types of information that can be entered into an AI system. In line with the IRCC privacy framework, AI systems must handle only the minimum personal information necessary for specific, justified purposes. Prior to implementing AI systems or tools, the department must complete a mandatory privacy needs assessment and implement appropriate mitigation measures.
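The data minimization requirement above can be pictured as a purpose-based allow-list applied before any record reaches an AI system. The Python sketch below is hypothetical: the purposes, the field names, the `ALLOWED_FIELDS` table and the `minimize` function are illustrative assumptions, and in practice the permitted fields would follow from the privacy needs assessment rather than a hard-coded table.

```python
from typing import Any

# Hypothetical allow-list mapping each justified purpose to the only fields it may use.
# In practice these would come from a privacy needs assessment, not a hard-coded table.
ALLOWED_FIELDS: dict[str, frozenset[str]] = {
    "enquiry_triage": frozenset({"enquiry_text", "preferred_language"}),
    "workload_forecast": frozenset({"program", "submission_month"}),
}


def minimize(record: dict[str, Any], purpose: str) -> dict[str, Any]:
    """Keep only the fields justified for `purpose`; refuse unregistered purposes."""
    if purpose not in ALLOWED_FIELDS:
        raise ValueError(f"no justified purpose registered: {purpose!r}")
    allowed = ALLOWED_FIELDS[purpose]
    return {key: value for key, value in record.items() if key in allowed}


if __name__ == "__main__":
    client_record = {
        "name": "A. Client",
        "date_of_birth": "1990-01-01",
        "enquiry_text": "What is the status of my application?",
        "preferred_language": "fr",
    }
    # Name and date of birth are dropped because enquiry triage does not need them.
    print(minimize(client_record, "enquiry_triage"))
```

Rejecting unregistered purposes outright, rather than passing data through by default, keeps the burden on justifying each use of personal information.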

As we develop AI systems for specific purposes, we will integrate additional privacy measures, including using anonymized or internally generated synthetic data wherever possible to minimize risks to privacy. Where this is not possible, we will make sure that any personal information entered into an AI system can be corrected and deleted from the training datasets and models, and that privacy controls are commensurate with the sensitivity of the personal information involved. For sensitive or protected information, IRCC will deploy AI capabilities within Government of Canada-controlled environments that enforce Canadian data residency, access controls and model governance to uphold privacy by design.

Once an AI system that uses personal information goes live, we will monitor it closely and make detailed reports available to meet stringent timelines for review. We will also be transparent by clearly identifying AI-generated output as coming from a specific AI system. For accuracy, all AI-generated information used in support of our administrative processes will be rigorously validated against reliable sources.

Finally, we are establishing a systematic approach to regular testing and auditing tailored to each type of AI technology. This will ensure that our AI systems comply with privacy laws, policies and standards while also validating that all privacy controls are operating effectively.

AI Charter

The AI Charter is a set of rules that spells out what our department will and won’t do with artificial intelligence. It’s designed to guide our teams in their work with AI and to foster public trust by providing a reference point to hold IRCC accountable.

We will use AI only in ways that:

- Contribute to the public good: The purpose of any AI system is to improve our services for the benefit of our country and clients. We regularly seek feedback from our stakeholders.

- Put people first: AI is there to empower employees and enhance their capabilities. Human oversight must be retained and human rights upheld every step of the way.

- Respect privacy: If the department must use personal information in an AI system, it will only be used in a reasonable and secure manner, and only the necessary elements of personal information will be used. We will not use vision technologies to profile, target or track individuals.

- Promote equity: AI is designed in ways that avoid or mitigate unintended bias and promote equitable outcomes for all.

- Offer transparency: AI systems are explainable, not opaque, so that outcomes related to their use are fair and people have clear information and processes to challenge them.

- Produce reliable results: AI systems are regularly audited, tested and updated to maintain the accuracy, reliability and authenticity of their outputs.

- Ensure accountability: AI systems never run autonomously. They are supervised to ensure they’re running as expected and comply with the relevant frameworks, guidelines and laws. The department is responsible for everything AI does.

- Remain secure: AI systems are designed with robust safeguards to make them resilient to attacks and to protect the data they hold from security threats.

- Align with best practices: AI is governed according to best practices and principles derived from ongoing monitoring of the global legislative and regulatory landscape.

- Continuously improve: AI systems are refined to address feedback, adapt to new challenges and evolve with emerging technologies and guidance, ensuring these systems remain effective and responsible.

Framework for AI adoption

An overview of the framework can be found in the executive summary.

We’ve developed a framework for adopting AI tools. This framework will guide us in prioritizing new AI initiatives based on their relative benefits and risks. Throughout an AI tool’s life cycle, we will ensure compliance with policies on IT security, architecture, privacy, information management and other essential domains.

Framework categories

The framework has three distinct categories.

1. Everyday: using AI to perform administrative tasks

We’re ready to accelerate our adoption of AI systems that can drive efficiencies in routine tasks that would otherwise take significant time and resources. These tasks are often simple, repetitive and predictable in their inputs and outputs. Using AI for these functions poses minimal risk to clients. While human decision makers remain central to program delivery, AI is set to enhance many of our internal processes. For example, in human resources, AI could boost administrative efficiency and accuracy, as well as employee productivity. An access to information and privacy (ATIP) analyst support tool could provide quick summaries of ATIP requests, including by identifying where personal information could be redacted under the Access to Information Act and the Privacy Act.

2. Program: using AI to inform program operations

We will use AI technologies to inform operational decisions in our programs on a case-by-case basis. We will carefully assess operational needs and select AI solutions that meet specific objectives rather than apply them broadly. Continuous monitoring will ensure these systems operate effectively and ethically, helping us mitigate biases or unintended consequences. By taking a thoughtful and targeted approach to adopting AI in specific program areas, we can maximize its benefits while minimizing risks.

3. Break new barriers: experimenting with AI

IRCC is not looking to adopt fully autonomous AI. Instead, we will continue to experiment with AI technologies to inform future operational and policy decisions related to automation. A proactive approach to understanding and preparing for future uses of AI helps us be more responsive and resilient in the face of unexpected challenges, ultimately supporting current priorities, such as more effective crisis management and decision-making.

Areas of interest

AI can be used in innovative ways to help meet our department’s needs and priorities. Through internal consultations, several areas of interest have been identified, with real use cases illustrating how business value can be achieved through the responsible use of AI as guided by IRCC’s AI principles and adoption framework.

Quaid

Quaid is a rules-based chatbot used to respond to web-based enquiries about IRCC’s programs and services. It was trained using questions from real clients, and it’s continuously updated based on data from new questions.

Quaid can answer approximately 80% of the questions it receives with pre-programmed responses—no human intervention required. The tool has helped improve the client experience while reducing the workload at our Client Support Centre.
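To make "rules-based" concrete, here is a minimal Python sketch of how a chatbot of this kind can match an enquiry against predefined if-then rules and hand anything it cannot match to a person. It is not Quaid's implementation: the `Rule` type, the keyword rules, the canned responses and the `ESCALATE_TO_AGENT` marker are all hypothetical, and simple keyword matching is a simplification chosen for illustration.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """A hypothetical if-then rule: if every keyword is present, reply with the canned response."""
    keywords: frozenset[str]
    response: str


# Illustrative rules only; these are not Quaid's actual rules or wording.
RULES = [
    Rule(frozenset({"processing", "time"}), "Current processing times are posted on the IRCC website."),
    Rule(frozenset({"change", "address"}), "You can update your address through your online account."),
]

# Hypothetical marker meaning "hand this enquiry to a person".
ESCALATE_TO_AGENT = "ESCALATE_TO_AGENT"


def answer(question: str) -> str:
    """Return the first matching pre-programmed response, or escalate to a human."""
    words = set(re.findall(r"[a-z']+", question.lower()))
    for rule in RULES:
        if rule.keywords <= words:  # all of the rule's keywords appear in the question
            return rule.response
    return ESCALATE_TO_AGENT


def automation_rate(questions: list[str]) -> float:
    """Share of questions answered without escalation to a human."""
    if not questions:
        return 0.0
    answered = sum(answer(q) != ESCALATE_TO_AGENT for q in questions)
    return answered / len(questions)
```

The value returned by `automation_rate` is one simple way to express a figure such as the share of questions answered with no human intervention; with these toy rules the number says nothing about Quaid's real performance.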

Provide more individualized, intuitive services to clients

Building on Quaid, AI presents an opportunity for us to “level up” the client service we provide. By integrating AI into IRCC’s Digital Platform Modernization, our portals and platforms can tailor interactions to individual needs and give clients comprehensive insights into their applications, while also improving the overall efficiency and transparency of the process for them. Every client interaction serves to build trust in Canada’s immigration system and strengthens our reputation as a top-tier destination country, enhancing our global competitive edge.

Access new insights

In line with IRCC’s Data Strategy, and aligned with the 2023‑2026 Data Strategy for the Federal Public Service, AI can analyze data from sources within the department and partner organizations to gain powerful insights into emerging trends and correlations in client behaviour, visualize immigration flows and model the potential impacts of different policies. IRCC’s Data Strategy also emphasizes the importance of high-quality, cleansed data for model training and bias mitigation, ensuring AI initiatives are supported by robust governance. This alignment enhances the accuracy and reliability of generative AI solutions while also strengthening the feedback loop that enables the department to measure the success of its efforts and guide program modernization.

Finding the best place for newcomers to call home

In partnership with Stanford University’s Immigration Policy Lab, we are experimenting with an algorithmic landing recommendation system for newcomers. Machine learning algorithms analyze an applicant’s data alongside socioeconomic indicators and historical outcomes to recommend locations where they are most likely to succeed based on their background. Clients are not obligated to follow the recommendation; it is offered as information for them to consider.

Advance equity

AI has the potential to analyze patterns that may indicate biases, helping to inform decision-making that is fair and equitable for all. Additionally, AI can provide personalized recommendations to clients, addressing inequalities and making services more accessible to marginalized communities. These applications of AI align with IRCC’s Anti-Racism Strategy 2.0 (2021-2024) and other Government of Canada initiatives designed to combat racism and discrimination in all its forms.

Strengthen program integrity

Some external actors are using AI to create false narratives, fabricate supporting evidence or circumvent IRCC’s program integrity measures. Fortunately, AI also provides new tools to help us detect misrepresentation and fraud, as well as protect more broadly against bad actors and cybersecurity threats.

Support employee productivity

AI can automate routine and administrative tasks, freeing employees to focus on higher-value work, such as making complex decisions, supporting clients and improving their own knowledge and skills. AI can also assist with customized learning opportunities to support employee growth. In line with IRCC’s accessibility plan, AI can empower people with disabilities, for example, through virtual assistants that enhance accessibility.

Library services

We’re testing large language models (LLMs) to enhance our library services with advanced research, analytical and summarization capabilities. If the tests are successful, library staff will have better ways of finding and sharing knowledge, leading to greater productivity and improved service for our internal clients.

Support partner organizations

An important part of IRCC’s work is the Settlement Program, which involves funding third-party organizations that provide settlement and resettlement services to newcomers. Some of these organizations are calling upon IRCC for guidance and/or additional funding to leverage AI in service delivery. The department will collaborate with these organizations to understand how they use or plan to use AI in ways that could impact a client’s settlement experience. We will also share best practices to help them implement AI solutions that align with the principles, frameworks and guidelines in this strategy.

Streamline operations

Building on our progress with automation and advanced analytics, the department is leveraging AI to reduce application backlogs and wait times for clients while driving greater efficiency and consistency in our operations. AI can assist with managing client enquiries, verifying the completeness and validity of applications and documents, assessing client eligibility, and routing straightforward, low-risk files for expedited officer review (i.e., files that meet defined, checklist-based criteria and were not flagged for risk or complexity), with all outcomes subject to officer verification. In the Settlement Program, AI can assist employees with project oversight, monitoring of agreements with settlement service providers and analysis of program effectiveness. In these and other ways, AI enables us to streamline routine decisions and support more complex decision-making.
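The routing step described above can be sketched as a checklist gate. This minimal Python example uses hypothetical field names (`complete`, `documents_valid`, `meets_eligibility_checklist`, `risk_flags`, `complexity_flags`) rather than any actual IRCC data model. The function only chooses a review queue; it never approves or refuses a file, which mirrors the requirement that every outcome is verified by an officer.

```python
from dataclasses import dataclass, field
from enum import Enum


class Queue(Enum):
    # Both queues end with an officer; the gate only changes the pace of review.
    EXPEDITED_OFFICER_REVIEW = "expedited officer review"
    STANDARD_OFFICER_REVIEW = "standard officer review"


@dataclass
class ApplicationFile:
    # Hypothetical fields for illustration; not an actual IRCC data model.
    file_id: str
    complete: bool
    documents_valid: bool
    meets_eligibility_checklist: bool
    risk_flags: list[str] = field(default_factory=list)
    complexity_flags: list[str] = field(default_factory=list)


def route(app: ApplicationFile) -> Queue:
    """Send a file to an officer queue; no decision on the application is made here."""
    checklist_met = app.complete and app.documents_valid and app.meets_eligibility_checklist
    unflagged = not app.risk_flags and not app.complexity_flags
    if checklist_met and unflagged:
        return Queue.EXPEDITED_OFFICER_REVIEW
    # Any failed check or any flag falls back to fuller human review.
    return Queue.STANDARD_OFFICER_REVIEW
```

Defaulting to standard review whenever a check fails or a flag is present keeps the gate conservative: a missing or uncertain signal sends the file to fuller human review rather than speeding it up.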

Prioritize AI initiatives

Alongside our AI Adoption Framework, we are implementing a strengthened governance process to help prioritize AI initiatives and ensure they are used transparently, fairly, proportionately and responsibly. This process assesses an initiative’s level of risk and potential return on investment while considering IRCC’s business and program priorities, available resources and funding, and stakeholder engagement, among other criteria.

This process will be iterative, allowing new AI initiatives to be assessed and ongoing ones re-prioritized based on insights we’ve gained in the course of research and development.

Next step: Implementation

IRCC’s AI Strategy outlines the department’s approach to using AI. We are now working on the next step: solidifying our plan to implement this strategy.

While integrating AI into our operations is a whole-of-department effort, the Chief Digital Officer is leading the implementation plan. IRCC is committed to continuously reporting on its use of AI, communicating its objectives clearly to stakeholders, sharing the results of experiments with AI-enabled tools and technologies, meeting stringent safety and privacy requirements, and governing AI systems effectively at every stage of their life cycle.

The implementation plan will be updated as needed to reflect ongoing work and the evolution of departmental AI priorities.

We have already begun work on five priority areas to support the implementation of IRCC’s AI Strategy.

1. Establish an AI Centre of Expertise

We are establishing an AI Centre of Expertise (AI CoE) under the leadership of the Chief Digital Officer. The AI CoE will oversee and coordinate AI initiatives, collaborate with internal and external experts, research AI trends, experiment with emerging AI technologies, and promote the responsible use of AI across the department.

2. Bolster our governance framework

We are applying a robust framework to govern the responsible use of AI and mitigate the risks outlined in this AI strategy.

Our framework will define roles and responsibilities across the AI life cycle, helping us prioritize and avoid duplication of efforts. It will also incorporate feedback from stakeholders, including experts in information management, information technology, data, risk management, privacy, legal services, policy and communications.

3. Build an AI-ready workforce

We are building an AI-ready workforce by equipping employees with the skills and mindset to harness AI responsibly. That starts with fostering workforce diversity to mitigate bias and broaden perspectives. We will leverage federal AI training programs, such as those offered by the Canada School of Public Service. In addition to mandatory basic training, specialized learning will cover areas such as AI-related risks and legal implications. The department will share best practices and pursue professional certifications to strengthen its AI capacity and leadership.

4. Experiment with AI

We are working to accelerate experimentation with emerging AI technologies across the department. Our experimentation approach will follow the AI principles outlined in this strategy, align with existing development and oversight methods, and incorporate new, emerging approaches as part of the AI life cycle. We will share our insights with other federal departments to advance collective knowledge and best practices.

Experiment with fraud detection for electronic documents

We are testing an AI-powered document fraud detection tool to assess whether it can help the department identify fraudulent submissions in real time.

An AI-powered anomaly detection system can scan datasets and identify potentially fraudulent activities throughout the client application process. It can point out irregular travel patterns, inconsistent information, unusual changes in application or biometric data, document forgery, identity theft or morphing, and visa overstays. Once an activity is flagged, human agents can investigate it further.
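The strategy does not say which detection technique the tool uses. As a stand-in, the Python sketch below applies one simple, well-known statistical method, the modified z-score based on the median absolute deviation, to a single numeric feature and returns the IDs a human should look at. The feature (a hypothetical count of trips per applicant) and the 3.5 cut-off, a commonly cited default for this score, are assumptions for illustration; a real system would likely combine many signals.

```python
from statistics import median


def flag_anomalies(values: dict[str, float], threshold: float = 3.5) -> list[str]:
    """Return IDs whose modified z-score exceeds the threshold, for human review only."""
    xs = list(values.values())
    med = median(xs)
    # Median absolute deviation: a spread measure that a few outliers cannot distort much.
    mad = median(abs(x - med) for x in xs)
    if mad == 0:
        # Most values are identical, so any value that differs from the median stands out.
        return [key for key, x in values.items() if x != med]
    flagged = []
    for key, x in values.items():
        score = 0.6745 * (x - med) / mad  # modified z-score
        if abs(score) > threshold:
            flagged.append(key)
    return flagged


# Hypothetical feature: trips per applicant in the past year.
trips = {"A": 2, "B": 3, "C": 2, "D": 4, "E": 3, "F": 25}
print(flag_anomalies(trips))  # → ['F']
```

Anything the function returns is only a referral for a person to investigate, not a finding of fraud.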

5. Develop an engagement strategy

In tandem with our AI strategy, we are developing an engagement strategy to promote the department’s responsible use of AI while strengthening our reputation for innovation and leadership in this field. The engagement strategy will reinforce these messages consistently through appropriate channels. It will also solicit ideas for addressing IRCC business challenges using AI, leveraging surveys and initiatives like the employee idea fund and innovation fairs. A key part of the strategy is frequent engagement with stakeholders, including employees, clients and vulnerable groups, to understand their needs and concerns around our use of AI. We will also engage with IRCC partners, such as settlement service providers who use AI in ways that impact the department or its clients. The input we gather will help us proactively manage relationships and inform our evolving approach to engagement, communications and change management.

Conclusion

Releasing IRCC’s AI Strategy is only the first step in an iterative, interactive process. Our implementation priorities will continue to be tailored to business needs and adjusted to ensure ongoing compliance with Government of Canada guidance.

We also know that the department’s use of AI can only mature at a pace that maintains the confidence of Canadians and our clients. We are committed to engaging with stakeholders, partners and the public on IRCC’s AI Strategy and taking their feedback into account as we move forward.

The future of AI is daunting and exciting. We are ready to explore it, together.

Glossary

| Term | Definition |
|---|---|
| Advanced analytics | The use of complex techniques to analyze data, extract insights and uncover patterns or trends to inform decision-making. |
| Algorithmic impact assessment (AIA) | A tool used to assess the potential effects of an AI system, particularly regarding fairness, accountability and transparency. |
| Artificial intelligence | Technology capable of performing tasks that typically require human intelligence, such as understanding spoken language, learning behaviours or solving problems. |
| Chatbot | An AI-powered software application designed to simulate human conversation, commonly used in customer service. |
| Computer vision | AI technology that captures and interprets visual information from digital images or videos, enabling systems to analyze and make sense of the visual world. |
| Generative artificial intelligence (GenAI) | AI technology that creates content, such as text, audio, code, videos and images, from simple prompts. Large language models (e.g., ChatGPT) are widely used to assist users in performing tasks like writing, summarizing and translating text. |
| Intelligent automation | A combination of AI and automation technologies used to perform tasks and processes more efficiently, often mimicking human decision-making to improve accuracy and speed. |
| Large language model (LLM) | An advanced AI system capable of understanding and generating human-like text by analyzing vast amounts of written data. Used for tasks such as conversation, translation and content creation. |
| Machine learning (ML) | A broad subset of AI that enables algorithms to learn from data and make predictions or decisions without being explicitly programmed to do so. |
| Models | Mathematical representations that learn from data to make predictions or decisions. They help the system understand patterns and perform tasks like recognizing images or generating text. |
| Morphing | The gradual transformation of one image, shape or piece of data into another, often used in image editing or animation to create smooth transitions and variations. |
| Natural language processing (NLP) | A technique that enables AI systems to understand, interpret and generate human language in a way that is contextually relevant. |
| Rules-based automation | The automation of repetitive tasks using predefined "if-then" rules and instructions to ensure consistency and accuracy. |
| Synthetic data | Artificially created data that mimic real data but exclude actual personal or sensitive information. Used for training models, testing algorithms and protecting privacy. |