Guidance on the Governance and Management of Evaluations of Horizontal Initiatives

Notice to the reader

The Policy on Results came into effect on July 1, 2016, replacing the Policy on Evaluation and its instruments.

Since 2016, the Centre of Excellence for Evaluation has been replaced by the Results Division.

For more information on evaluations and related topics, please visit the Evaluation section of the Treasury Board of Canada Secretariat website.

Centre of Excellence for Evaluation

Expenditure Management Sector

Treasury Board of Canada Secretariat

Acknowledgements

Guidance on the Governance and Management of Evaluations of Horizontal Initiatives was developed by the Centre of Excellence for Evaluation (CEE) and the Working Group on the Evaluation of Horizontal Initiatives (WGEHI), with the support of Government Consulting Services.

The WGEHI, composed of heads of evaluation and evaluators from a range of departments (see the list below) and a team from CEE, was established in 2009 to share ideas and develop guidance related to the evaluation of horizontal initiatives in accordance with the Treasury Board evaluation policy suite. The experience and efforts of the WGEHI and its subcommittee, the Governance Task Team, were instrumental in the development of this guidance document.

The CEE would like to thank the departmental and agency representatives who were interviewed during the development of the guidance document, as well as the members of the WGEHI and those who were actively involved in this work and provided comments on draft versions.

WGEHI membership:

- Atlantic Canada Opportunities Agency

- Economic Development Agency of Canada for the Regions of Quebec

- Environment Canada

- Fisheries and Oceans Canada

- Health Canada

- Human Resources and Skills Development Canada

- Industry Canada

- Infrastructure Canada

- Library and Archives Canada

- Public Health Agency of Canada

- Public Safety Canada

- Public Works and Government Services Canada

- Royal Canadian Mounted Police

- Western Economic Diversification Canada

- Treasury Board of Canada Secretariat

Table of Contents

- 1.0 Introduction

- 2.0 Governance of the Evaluation of Horizontal Initiatives: Concepts, Principles, Guidelines and Approaches

- 3.0 Conclusion

- Appendix A: Governance Toolkit

- Appendix B: Possible Questions for Inclusion in an Evaluation of a Horizontal Initiative

- Appendix C: References

- Appendix D: Departments and Agencies Interviewed in Preparation of Guidance

- Appendix E: Glossary

- Appendix F: List of Acronyms Used

1.0 Introduction

1.1 Evaluation in the Government of Canada

Results-based management is a life-cycle approach to management that integrates strategy, people, resources, processes and performance evidence to improve decision making, transparency and accountability for investments made by the Government of Canada on behalf of Canadians. In support of overall management excellence, the Government of Canada’s Expenditure Management System (EMS) is designed to help ensure aggregate fiscal discipline, effective allocation of government resources to areas of highest relevance, performance and priority, and efficient and effective program management. Across the Government of Canada, evaluation plays a key role in supporting these government initiatives and ensuring the value for money of federal government programs.

Evaluation: Why and for Whom?

Evaluation supports:

- Accountability, through public reporting on results

- Expenditure management, including strategic reviews of direct program spending

- Management for results

- Policy and program improvement

- Assessment of results attribution

Evaluation information is made available to:

- Canadians

- Parliamentarians

- Deputy heads

- Program managers

- Central agencies

Since its introduction as a formal tool for generating performance information in the early 1970s—and in particular after the Government of Canada adopted its first Evaluation Policy in 1977—evaluation has been a source of evidence that is used to inform expenditure management and program decision making. In the Government of Canada, evaluation is defined as the systematic collection and analysis of evidence on the outcomes of programs, policies or initiatives to make judgments about their relevance and performance, and to examine alternative ways to deliver programs or achieve the same results. Evaluation serves to establish whether a program contributed to observed results, and to what extent. It also provides an in-depth understanding of why program outcomes were, or were not, achieved.

1.2 Evaluating Horizontal Initiatives

Evaluations of horizontal initiatives are important because they enhance the accountability and transparency of expenditures made through multiple organizations. They provide a source of evidence to inform expenditure management and program decisions on initiatives involving multiple organizations. They also support the deputy heads of those organizations, in particular the deputy head of the lead organization, in fulfilling their responsibilities for reporting on horizontal initiatives through Reports on Plans and Priorities and Departmental Performance Reports.

1.3 Purpose

Guidance on the Governance and Management of Evaluations of Horizontal Initiatives has been developed to support departments and agencies1 in designing, managing and conducting evaluations of horizontal initiatives while meeting the requirements under the Policy on Evaluation (2009), the Directive on the Evaluation Function (2009) and the Standard on Evaluation for the Government of Canada (2009).

This guidance document presents governance concepts, principles and approaches that pertain to all phases of the evaluation of horizontal initiatives. It brings together current thinking and practices for addressing the governance challenges inherent in evaluating horizontal initiatives within the federal government. Given the complexity of evaluating horizontal initiatives, this guidance does not prescribe a single approach to governance; rather, it will assist departmental officials in implementing governance approaches that suit the complexity of the initiative being examined.

1.4 Intended Users

The primary audiences for this guidance document are heads of evaluation (HoEs), evaluation managers and evaluators who are responsible for undertaking evaluations.

The guidance document will also serve program managers who are responsible for performance measurement and those involved in the development of Memoranda to Cabinet (MCs) and Treasury Board submissions. It will assist them in understanding their role in supporting the governance and management of evaluations of horizontal initiatives.

The document will also be of use to senior executives who are members of a Departmental Evaluation Committee (DEC) and who provide support to their deputy head related to the departmental evaluation plan, resourcing and final evaluation reports. It will assist them in understanding the role of the evaluation function in the design, development and management of horizontal initiatives.

1.5 Organization

This guidance document presents concepts related to the evaluation of horizontal initiatives, recommends governance principles and guidelines for each key phase of the evaluation life cycle, and illustrates approaches to be considered when evaluating a horizontal initiative. It focuses primarily on the additional governance challenges that arise when evaluating horizontal initiatives, compared with evaluating programs within a single department.

The document also builds on the evaluation policy suite and other guidance on evaluation in the federal government, and discusses how these apply to the governance of the evaluation of horizontal initiatives.

The evaluation policy suite includes the following materials:

- Directive on the Evaluation Function

- Policy on Evaluation

- Standard on Evaluation for the Government of Canada

1.6 Suggestions and Enquiries

Guidance on the Governance and Management of Evaluations of Horizontal Initiatives will be updated periodically as required. Suggestions are welcome.

Enquiries concerning this guidance document should be directed to:

Centre of Excellence for Evaluation

Expenditure Management Sector

Treasury Board of Canada Secretariat

Email: evaluation@tbs-sct.gc.ca

2.0 Governance of the Evaluation of Horizontal Initiatives: Concepts, Principles, Guidelines and Approaches

2.1 Concepts

2.1.1 Definition of a horizontal initiative

The Treasury Board of Canada Secretariat (Secretariat) defines a horizontal initiative in the Results-Based Management Lexicon as “an initiative in which partners from two or more federal organizations have established a formal funding agreement [e.g., a Memorandum to Cabinet, a Treasury Board submission] to work toward the achievement of shared outcomes.” This guidance document focuses on initiatives that fit this definition, taking into consideration the accountabilities and responsibilities often entailed by these types of initiatives.

It is noted, however, that there are other types of collaborative arrangements that support the delivery of programs or initiatives by more than one organization.2 These collaborative arrangements might include federal-provincial partnerships, multi-jurisdictional collaborative arrangements involving non-federal partners, informal arrangements involving more than one organization, or whole-of-government thematic initiatives. These arrangements may have a level of complexity that requires governance considerations beyond those presented in this guidance document.

Although these other types of arrangements fall outside the current definition, suggestions provided in this document may be applicable during their evaluations.

2.1.2 Definition of governance

The Policy on Management, Resources and Results Structures defines governance as “the processes and structures through which decision-making authority is exercised.”

In a similar vein, the following is a definition of corporate governance based on that developed by the Organisation for Economic Co-operation and Development (OECD): “Corporate governance is the way in which organizations are directed and controlled. It defines the distribution of rights and responsibilities among stakeholders and participants in the organisation; determines the rules and procedures for making decisions on corporate affairs, including the process through which the organization’s objectives are set; and provides the means of attaining those objectives and monitoring performance.”3

Although the latter definition refers to organizations, for the purposes of this guidance document governance can be defined as the way in which participants from multiple departments organize themselves to collaboratively conduct an evaluation of a horizontal initiative, including the development and implementation of processes and control structures for decision making.

2.1.3 Policy requirements and considerations for the evaluation of horizontal initiatives

It is important to remember that the governance principles and practices used for the evaluation of horizontal initiatives must remain consistent with Treasury Board's current evaluation policy suite, which includes the Policy on Evaluation, the Directive on the Evaluation Function, and the Standard on Evaluation for the Government of Canada.

Key Policy Requirements to Consider in the Evaluation of a Horizontal Initiative

- Heads of evaluation (HoEs) are responsible for directing the evaluation;

- HoEs are responsible for issuing evaluation reports directly to deputy heads and the Departmental Evaluation Committee (DEC) in a timely manner;

- Program managers are responsible for developing and implementing ongoing performance measurement strategies and ensuring that credible and reliable performance data is being collected to effectively support evaluation;

- Program managers are responsible for developing and implementing management responses and action plans for the evaluation report;

- DECs are responsible for ensuring follow-up on action plans approved by deputy heads; and

- While the HoEs direct evaluation projects and the DEC provides advice to the deputy head on the evaluation, it is the deputy head's responsibility to approve the evaluation report, management responses and action plans.

Under the Policy on Evaluation, deputy heads are responsible for establishing a robust, neutral evaluation function in their departments.

Deputy heads are required to:

- Designate a head of evaluation (HoE) at an appropriate level as the lead for the evaluation function in the department;

- Approve evaluation reports, management responses and action plans;

- Ensure that a committee of senior officials (known as the Departmental Evaluation Committee (DEC)) is assigned the responsibility of advising the deputy head on all evaluation and evaluation-related activities of the department;

- Ensure that the DEC and the HoE have full access to information and documentation needed or requested to fulfill their responsibilities; and

- Ensure that complete, approved evaluation reports along with management responses and action plans are made easily available to Canadians in a timely manner, while ensuring that the sharing of reports respects the Access to Information Act, the Privacy Act, and the Policy on Government Security.

The DEC is a senior executive body chaired by the deputy head or senior-level designate. This committee serves as an advisory body to the deputy head on matters related to the departmental evaluation plan, resourcing, and final evaluation reports. It may also serve as the decision-making body for other evaluation and evaluation-related activities of the department.4

In accordance with the Directive on the Evaluation Function, HoEs are responsible for:

- Supporting a senior committee of departmental officials (the DEC) that is assigned the responsibility for guiding and overseeing the evaluation function;

- Issuing evaluation reports (and other evaluation products, as appropriate) directly to the deputy head and the DEC in a timely manner;

- Reviewing and providing advice on all Performance Measurement Strategies for all new and ongoing direct program spending;

- Reviewing and providing advice on the accountability and performance provisions to be included in Cabinet documents (e.g., MCs, Treasury Board submissions);

- Reviewing and providing advice on the Performance Measurement Framework embedded in the department’s Management, Resources and Results Structure;

- Submitting to the DEC an annual report on the state of the performance measurement of programs in support of evaluation;

- Making approved evaluation reports along with management responses and action plans available to the public; and

- Consulting appropriately with program managers, stakeholders, and peer review or advisory committees during evaluation project design and implementation.

In accordance with the Standard on Evaluation for the Government of Canada:

- HoEs are responsible for directing evaluation projects and for ensuring that the roles and responsibilities of project team members involved in specific evaluations are articulated in writing and agreed upon at the outset of the evaluation.

- Peer review, advisory, or steering committee groups are used where appropriate to provide input on evaluation planning and processes and the review of evaluation products in order to improve their quality. The HoE or the evaluation manager directs these committees.

In accordance with the Directive on the Evaluation Function, program managers are responsible for:

- Developing and implementing ongoing Performance Measurement Strategies for their programs and ensuring that credible and reliable performance data are being collected to effectively support evaluation;

- Consulting with the HoE on Performance Measurement Strategies for all new and ongoing direct program spending; and

- Developing and implementing a management response and action plan for all evaluation reports.

The above-noted distinction between the roles and responsibilities of evaluation personnel versus those of program personnel is maintained throughout this guidance document.

The guidance document is to be read in conjunction with Supporting Effective Evaluations: A Guide to Developing Performance Measurement Strategies, which outlines the content of Performance Measurement Strategies, a key element in supporting evaluations; provides a recommended process for developing clear, concise Performance Measurement Strategies; and presents examples of tools and a framework for that purpose. It also provides an overview of the roles of program managers and HoEs in developing Performance Measurement Strategies.

The following policies and Acts are also related to the subject of evaluation:

- Access to Information Act

- Management Accountability Framework

- Policy on Financial Management Governance

- Policy on Government Security

- Policy on Management, Resources and Results Structures

- Policy on Transfer Payments

- Privacy Act

2.2 Governance Principles and Guidelines for the Evaluation of Horizontal Initiatives

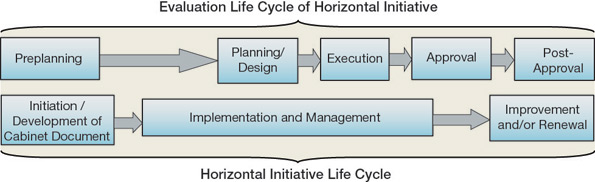

For the purpose of this section, the life cycle for the evaluation of the horizontal initiative is divided into five phases, as illustrated in Figure 1.

Figure 1 - Text version

This diagram illustrates the various stages in the life cycle of a horizontal initiative mapped against the evaluation life cycle. The top half of the illustration divides the evaluation life cycle of the horizontal initiative into five distinct stages in the following sequence: preplanning, planning and design, execution, approval, and post-approval. The bottom half of the illustration divides the life cycle of the horizontal initiative into three stages in the following sequence: implementation and development of planning documents, implementation and management, and improvement and/or renewal.

This section first presents overarching governance principles related to the evaluation of the horizontal initiative as a whole and then provides key governance-related tasks and associated guidelines for each of the life-cycle phases.

The considerations presented in this section are supported by generic illustrative examples in section 2.3, which demonstrate how the guidance can be applied in the evaluation of horizontal initiatives of varying complexity. In Appendix A, a toolkit consisting of graphic representations, crosswalks of roles and responsibilities, and sample templates elaborates on these principles and approaches. The contents of the toolkit are cited throughout this guidance document.

2.2.1 Overarching guiding principles

The following overarching principles apply to good governance in all phases of the evaluation of a horizontal initiative. They are drawn from previous departmental experience and have been identified as important determinants of success.

- A lead department is established to provide direction and is delegated appropriate decision-making authority. Defining who will lead the evaluation and what that role entails often helps participating departments understand their own roles. In most cases, the lead role is conferred in an MC, a Treasury Board submission or other senior-level directive or decision. If no lead is identified in the MC or Treasury Board submission, a lead department for the evaluation should be established and agreed to by partner departments. The evaluation function of the lead department should engage the evaluation function of all participating departments throughout all phases of the evaluation, including when evaluation strategy decisions are being made. Communication among evaluators in all partnering departments is very important to ensure awareness and understanding of different organizational cultures, clear understanding of accountabilities among the participating evaluation functions, and the smooth conduct of the evaluation.

- Buy-in and commitment from participants are obtained. Ensure that all those involved know their responsibilities with respect to the evaluation and have the capacity to discharge those responsibilities as required (e.g., authority, time, resources, knowledge, support, connections, experience, competencies). It is important that the commitment of each partnering department is articulated by those at an appropriate level so as to ensure that competing priorities do not impede evaluation activities. These commitments include responsibilities that are outside the evaluation function, such as implementing the Performance Measurement Strategy, drafting the management response and developing management action plans. It is important to ensure that senior management is engaged throughout the evaluation phases.

- The evaluation strategy consists of an agreed-upon purpose and approach that is clearly articulated and well understood. Ensure that sufficient time and resources exist for the necessary collective work that will define, and later communicate, the objectives, scope, approach and plan for the evaluation. This collaboration should involve senior management and program and evaluation personnel from each participating department.

- Conditions for effective decision making are present. To foster effective decision making, ensure decision-making bodies are of a manageable size, have clear and agreed-upon processes, and receive effective support and timely information. This will allow sufficient time and resources for collective decision making that builds and maintains the trust and confidence of participants throughout the evaluation. Encourage all members to participate in an open, honest and forthright manner. This will help establish a policy of full participation and transparency.

- The decision-making process is transparent with clear accountabilities and is consistent with governance of the horizontal initiative, departmental governance and the requirements of the Treasury Board’s evaluation policy suite. It is important to establish an inclusive, effective and equitable decision-making process that respects participating departments’ individual accountabilities for their respective evaluation functions, while maintaining a collective sense of purpose and responsibility in conducting the evaluation.

- A Performance Measurement Strategy is in place. The evaluation function, in collaboration with program managers, ensures that a Performance Measurement Strategy is in place to support the evaluation and that data collection and reporting are occurring.

- Risks to the evaluation are understood and managed. Identify risks to the successful completion of the evaluation and develop corresponding mitigation strategies. Examples of possible risks to be considered are expansion of scope, resourcing and continuity of program and evaluation staff, risk of project delay, organizational change, political risk, changes in strategic priority within different departments, and level of public interest or media attention. Each risk should be scored against its likelihood and impact, and significant risks should be addressed in the risk management plan, which will need to be revisited periodically through the phases of the evaluation.
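The guidance does not prescribe a method for scoring risks against likelihood and impact. As one illustration only, a common convention multiplies the two on ordinal scales and flags risks above a threshold for the risk management plan. The sketch below assumes hypothetical 1 to 5 scales, a hypothetical significance threshold of 12, and illustrative scores for some of the risks named above; none of these values come from the evaluation policy suite.

```python
from dataclasses import dataclass

# Hypothetical threshold: the guidance does not define one.
SIGNIFICANCE_THRESHOLD = 12


@dataclass
class Risk:
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) to 5 (severe)

    @property
    def score(self) -> int:
        # Simple likelihood x impact product, one common convention.
        return self.likelihood * self.impact


def significant_risks(risks, threshold=SIGNIFICANCE_THRESHOLD):
    """Return risks scoring at or above the threshold, highest score first."""
    flagged = [r for r in risks if r.score >= threshold]
    # sorted() is stable, so ties keep their original register order.
    return sorted(flagged, key=lambda r: r.score, reverse=True)


# Illustrative register; the scores are invented for the example.
register = [
    Risk("Expansion of scope", 4, 3),
    Risk("Turnover of program and evaluation staff", 3, 4),
    Risk("Project delay", 3, 3),
    Risk("Media attention", 2, 2),
]

for risk in significant_risks(register):
    print(f"{risk.name}: {risk.score}")
```

Because the register would be revisited periodically through the evaluation phases, keeping scores in a simple structure like this makes it easy to rescore risks and see which ones move above or below the threshold.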

2.2.2 Preplanning phase

Evaluation preplanning generally starts with the activities associated with the development of Cabinet documents at the inception of the horizontal initiative and should continue until a sound foundation for the evaluation is in place. Given the length of time that may be required to reach agreement among the participating departments, preplanning is particularly important in the case of the evaluation of a horizontal initiative.

The following tasks and related guidelines are recommended during the preplanning phase. They are intended to help establish a governance approach for the upcoming evaluation, develop an approach to monitoring and mitigating risks until the evaluation has been successfully completed, and support the implementation of the Performance Measurement Strategy.

Preplanning Phase Tasks

- Contributing to the preparation of Cabinet documents;

- Ascertaining the context of the horizontal initiative and the evaluation;

- Establishing the scope and objectives of the evaluation of the horizontal initiative;

- Obtaining agreement on the overall evaluation approach;

- Defining the governance structure, participant roles and responsibilities, and key processes;

- Developing terms of reference for evaluation committees and working groups;

- Identifying costs and a source of funds for the evaluation;

- Setting the overall schedule for the evaluation;

- Including provisions for evaluation in interdepartmental Memoranda of Understanding (MOUs) for the horizontal initiative;

- Identifying evaluation-related risks and resolving evaluation issues prior to the planning/design phase; and

- Contributing to the development of the Performance Measurement Strategy.

Although a number of these tasks support activities led by the program areas of participating departments, they should involve the evaluation unit from each participating department.

Contributing to the preparation of Cabinet documents

The Cabinet documents that provide policy and funding support for the horizontal initiative should make provision for its evaluation. In this regard, guidance for the preparation of MCs and Treasury Board submissions should be consulted.

Input into Cabinet documents for the evaluation of horizontal initiatives should be developed in a collaborative and consultative manner among departmental evaluation and program personnel. It should be noted that the HoEs of participating departments are responsible for reviewing and providing advice on the accountability and performance provisions that are included in Cabinet documents (as per section 6.1.4 b of the Directive on the Evaluation Function).

It is recommended that the MC make a clear commitment to the evaluation of the horizontal initiative, identify the lead department responsible for the evaluation, and outline the extent to which each department will be involved in that evaluation.

Recommended Provisions for Evaluation in Cabinet Documents

- Commitment to evaluate the horizontal initiative;

- Identification of lead and partner departments;

- Governance structure, roles and responsibilities (See Appendix A: Governance Toolkit, Roles and Responsibilities Matrix);

- Definition of evaluation objectives and general approach;

- Performance Measurement Strategy or a commitment to develop one;

- Funding for evaluation; and

- Overall schedule.

The Treasury Board submission should provide more detailed information regarding the evaluation than the MC. The submission identifies the lead department for the evaluation and confirms the role of participating departments. The submission should briefly describe the governance arrangements, including the general assignment of roles and accountabilities among the partners for the evaluation, the objectives and general approach, the sources of funding for the evaluation, and an indication of whether the other participating organizations have committed to a horizontal Performance Measurement Strategy that includes common outcomes and performance indicators. Clarity and precision at this point will help prevent misunderstandings when it comes to conducting the evaluation.

Participating departments should identify the status of the Performance Measurement Strategy and how it will effectively support the evaluation of the horizontal initiative. Ideally, a Performance Measurement Strategy should have been designed at the Treasury Board submission stage. However, where this is not possible due to timing considerations, there should be a commitment to produce a Performance Measurement Strategy as a key deliverable within an appropriate time frame after Treasury Board approval (i.e., three to six months), including its expected or actual date of implementation. At the very least, developing a logic model during the Treasury Board submission stage can be very useful in understanding partners' contributions to the horizontal initiative and can provide a springboard to the development of other components of the Performance Measurement Strategy.

These tasks cannot be accomplished without the active involvement of the evaluation units from partnering departments during the development of the Treasury Board submission.

The development of the Treasury Board submission provides an excellent opportunity to lay the groundwork for future collaboration on the evaluation itself. In particular, working together and obtaining agreement on the logic model and on requirements for performance measurement can help foster a culture of collaboration among the participating departments. For particularly complex horizontal initiatives, it is advisable to engage central agencies such as the Secretariat or the Privy Council Office (PCO) early in order to clarify and address any evaluation-related issues.

Ascertaining the context of the horizontal initiative and the evaluation

Preplanning should start with a clear understanding of the nature and context of the horizontal initiative and the implications for the evaluation. Issues for evaluation units to explore include:

- What is the rationale for working collaboratively as a horizontal initiative?

- What is the policy framework for the horizontal initiative?

- What are the objectives of the horizontal initiative?

- What is the nature and extent of collaboration required to achieve the objectives of the horizontal initiative?

- Who are the key stakeholders, and what are their interests?

- What are the funding arrangements and the related accountability requirements?

- What are the risks related to the horizontal initiative? (These may include its complexity, the number of partners and their history of working together, the materiality of the initiative, and the importance of the initiative to the mandates of participating departments.)

- What is the merit of evaluating as a horizontal initiative?

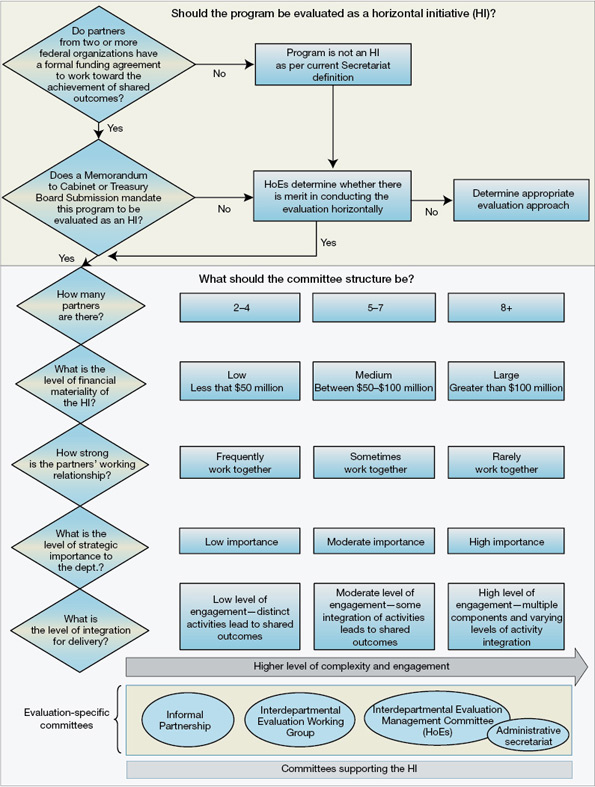

Additional information on planning considerations can be found in the Planning Considerations Diagram in Appendix A: Governance Toolkit.

Establishing the scope and objectives of the evaluation of the horizontal initiative

Participating departments should come to an agreement on the objectives and scope of the evaluation as soon as possible since this may have implications for resource requirements and for the data that need to be collected in advance. The scope of the evaluation issues should address the core issues identified in Annex A of the Directive on the Evaluation Function; consideration should be given, as required, to additional questions that may be specific to the horizontal nature of the initiative.

See Appendix B for a list of additional questions for possible inclusion in an evaluation of a horizontal initiative.

The lead department would normally be responsible for preparing an initial draft of the objectives and scope of the evaluation. It is essential that all participating departments be consulted in the development of these items and that they have an opportunity to review and approve the final version of the document that establishes them. This will ensure that the evaluation addresses all issues of key importance to participating departments and facilitates their buy-in and ownership of the evaluation. Care should be taken not to include too many special questions, topics or components since this could result in an unmanageable and unrealistic scope for the evaluation, given the resources and time frame available.

Obtaining agreement on the overall evaluation approach

Participating departments should come to an agreement on the need for, or merit of, conducting the evaluation horizontally, and on whether the evaluation will be conducted as a unified exercise, will be based on component studies (Footnote 5) that are subsequently rolled up into an overview report, or will combine the two. This decision matters because it will determine the distribution of responsibilities for data collection and analysis. It is also important to obtain agreement on common data elements in advance to facilitate the aggregation of results.

The choice of a common unified framework, component studies, or a combination of the two will depend on the nature and characteristics of the initiative itself. Component studies often examine the particulars of program delivery within individual departments, so consideration must be given to whether their results can be incorporated into a horizontal overview of the initiative that provides the strategic perspective a unified exercise would offer. While component studies may be the appropriate choice, there should be a strong rationale for using them, and the approach should not oblige a reader to consult both the horizontal overview report and the separate component studies in order to understand the horizontal links and results of the initiative.

At this stage, departments may wish to consider how the evaluation will contribute toward evaluation coverage requirements, as per the Policy on Evaluation. A discussion with the department’s Treasury Board Secretariat analyst may be helpful in this regard.

Defining the governance structure, participant roles and responsibilities, and key processes

Defining governance structure for evaluating the horizontal initiative

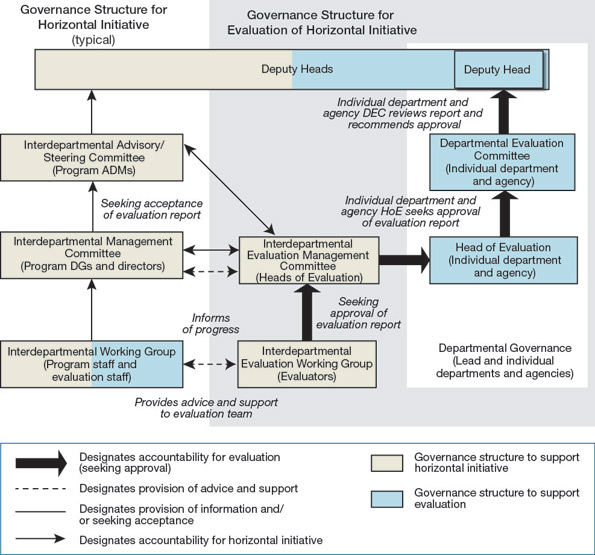

The governance and management of the evaluation of a horizontal initiative will depend, in part, on the arrangements in place for governing and managing the initiative itself and on the governance of the evaluation function within each department. Typically, governance arrangements for the initiative as a whole include an advisory/steering committee, often at the assistant deputy minister (ADM) level; a management committee, often at the director or director general (DG) level of the program area; and a number of interdepartmental working groups to address specific issues. A secretariat function is generally provided by the lead department. The degree of formality of these arrangements tends to increase with the number of participating departments and the overall size and importance of the initiative. When planning an evaluation, it is important to determine how the evaluation's governance will fit into this overall structure without compromising the independence of the evaluation. The ADM advisory/steering committee can play an advisory role for the evaluation, while the management committee for the horizontal initiative will expect to be kept informed and may be required to provide input. At the working level, some evaluations include an interdepartmental subcommittee or working group composed of a mix of program and evaluation staff from each participating department.

The oversight and overall management of the evaluation should rest with an Interdepartmental Evaluation Management Committee (IEMC) composed of HoEs, who may delegate day-to-day coordination and management, depending on the size and complexity of the horizontal initiative, to Interdepartmental Evaluation Working Group(s) (IEWG) composed of senior-level evaluators. It is good practice for all partners to be represented on equal terms on the IEMC. This helps ensure that partners who have less significant roles, make fewer material contributions and have relatively little representation on the working group still have a voice in the final decisions.

The IEMC and IEWG may be supported by the horizontal initiative’s secretariat function or may require their own secretariat function, again depending on size and complexity.

For an example of a governance structure for a medium-to-complex horizontal initiative, see Appendix A: Governance Toolkit, Possible Governance Structures of Program Versus Evaluation.

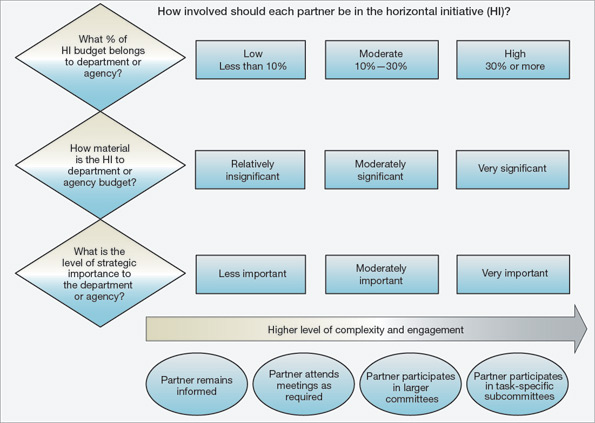

Determining the degree of formality required

Departments consulted during the development of this guidance document have noted that the specific arrangements required for governing an evaluation of a horizontal initiative will depend on a number of factors. Similar to the arrangements for the initiative as a whole, the degree of formality that is required will depend on the number of partner departments and the size and importance of the initiative. The larger and more complex the initiative is, the greater the need for formal structures to ensure efficiency. However, the need for formal coordination structures will also depend on whether the evaluation is to be carried out in a centralized or decentralized manner and on the extent to which the various components (such as field work or case studies) need to be conducted jointly or separately.

For further information, see Appendix A: Governance Toolkit, Considerations for Level of Partner Involvement.

Interdepartmental Evaluation Management Committee

An IEMC, likely consisting of the HoEs of the participating departments, should be established at the preplanning stage to direct and oversee all stages of the evaluation. This committee should be responsible for key decisions at set points in the evaluation process, such as approving provisions for evaluation in Cabinet documents, approving evaluation plans and terms of reference, approving key intermediate products, and tabling the final evaluation report for higher-level approval, as required. The HoE from the lead department should chair this committee.

Interdepartmental Evaluation Working Group

The need for an IEWG made up of evaluation specialists from participating departments should be considered at this phase for the day-to-day coordination and management of the evaluation. Members should be selected not only for their knowledge of the subject matter, but also for their ability to work in a collaborative fashion and to establish informal networks with colleagues in other participating departments. Members should also be able to link the objectives of the initiative to the priorities of their own departments and should have sufficient delegated authority to make routine decisions on their own. They could also provide support to the IEMC in fulfilling its responsibilities as a committee.

Roles and responsibilities

Participating departments should be clear from the outset regarding the extent of their involvement in performance measurement and evaluation activities, and what their specific responsibilities will be. This may require that partnering departments work out these responsibilities in greater detail than was developed for inclusion in Cabinet documents. During this phase, participating departments should make a final decision on what role they will play in the evaluation and what level of support (including financial contribution) they will be providing to the evaluation of the horizontal initiative.

Requirements for departmental approvals

It is highly recommended that the information needed to coordinate approval of the evaluation report be gathered during this phase, as approval procedures can be very time-consuming if not properly planned. HoEs should consult their respective DECs and inform the lead department of their departments' requirements for the departmental approval process. This information should include procedures related to approvals for the evaluation report and recommendations (whether directed at their own departments or at the initiative as a whole) and for the management response and/or management action plan(s). Providing this information can help the lead department develop a proposed timeline for obtaining approvals.

It should be noted that, in accordance with the policy, deputy heads cannot delegate the approval of evaluation reports. It is recommended that the IEMC review the evaluation report before it proceeds for individual departmental approval, and ensure that the deputy heads of participating departments have approved the evaluation report before it is submitted for approval by the deputy head of the lead department.

Collecting, analyzing and sharing data

Participating departments should agree on an approach for data collection (e.g., public opinion research, interviews), especially regarding the collection of ongoing performance measurement data to support the evaluation of the horizontal initiative.

To help ensure an effective and coordinated approach to performance measurement, it is important to establish clear expectations and ground rules on the specific responsibilities of participating departments for collecting and analyzing data on an ongoing basis. To this end, it is recommended that a process be established for providing performance and other data required for evaluation at specified intervals in an agreed-upon format. To the extent possible, this process should respect and be integrated into the planning and reporting cycles of participating departments. In the case of a common unified evaluation of the horizontal initiative, the normal practice is for performance data to be sent to the secretariat function of the horizontal initiative, which is typically provided by the lead department. The lead department is also usually responsible for the overall coordination of the performance measurement process, although in some cases this responsibility may be taken on by one of the other participating departments. It is recommended that relevant performance information that is not subject to privacy restrictions be shared among the participating departments.

Establishing provisions for dispute resolution

Given the complexity of horizontal evaluations and the potential for disagreements and misunderstandings, participating departments should ensure that there are mechanisms in place to resolve disputes in a timely manner. The governance structure should allow for issues that cannot be resolved at the working level to be escalated to higher levels for resolution. For example, the IEMC provides a forum for resolving issues that are referred to it by the IEWG. In escalating issues, it is important to determine whether they are operational or evaluation-related. Operational issues should be escalated through the governance structure of the horizontal initiative, while evaluation-related issues should be referred to the IEMC in order to maintain the independence of the evaluation. It is normal practice for the lead department to ensure that issues are referred to the appropriate level in a timely manner. Ultimately, decisions rest with the deputy heads of participating departments; if a resolution cannot be found at the departmental level, central agencies may be consulted as required to identify a path toward one.

Departments interviewed for the preparation of this guidance document have also indicated that maintaining good communication with partner departments throughout the evaluation phases will help reduce disputes resulting from misunderstandings.

Developing terms of reference for evaluation committees and working groups

Once the governance and management elements have been agreed upon, formal terms of reference (ToRs) for the evaluation committees and working groups should be developed and signed off at the appropriate level. These ToRs should lay out the mandate, composition, roles and responsibilities, and decision-making authorities of each entity, as well as their respective operating procedures. It is important that all participating departments be clear on which types of decisions each evaluation committee or working group will be required to make.

It is also recommended that formal ToRs be developed for the conduct of the evaluation itself. These would include a statement of the objectives, scope and methodology of the evaluation, together with the ground rules for operation, the specific roles and responsibilities of the participating departments, and their expected contributions.

Identifying costs and a source of funds for the evaluation

During the preparation of Cabinet documents, the costs and source of funds (i.e., from existing reference levels or new resource requirements to be approved through a Treasury Board submission) should be identified. It is also recommended that appropriate allowances be made for the added complexity often involved in implementing the Performance Measurement Strategy and conducting an evaluation of a horizontal initiative.

Setting the overall schedule for the evaluation

A high-level evaluation schedule should be developed and agreed to by participating departments, at a minimum to identify the expected start and end dates for the evaluation of the horizontal initiative. The HoE from each participating department should review the proposed timing in the context of the approved Departmental Evaluation Plan (DEP) for his or her department, as well as in light of the planned level of resources for carrying out the evaluations.

Including provisions for evaluation in interdepartmental Memoranda of Understanding (MOUs) for the horizontal initiative

It is common practice for departments that participate in horizontal initiatives to develop a formal accountability framework, such as a letter of agreement (LOA) or an MOU. These documents set out participants' understanding of the common objectives of the initiative and state, in some detail, what they will contribute toward the realization of these objectives. In drafting these documents for the whole initiative, participating departments should include information on their contributions to the evaluation of the initiative, be they financial, personnel or other types, and on their control over these resources. In the LOAs or MOUs, care must be taken to ensure that responsibility for the evaluation remains with the HoEs and deputy heads. It should be noted that these agreements are different from the ToRs, which will be developed for the committees that support the evaluation.

Identifying evaluation-related risks and resolving issues prior to the planning/design phase

Participating departments should try to anticipate risks that might cause issues or problems leading up to or during the evaluation itself. To help manage such risks, it is advisable to consider conducting an evaluability assessment (which examines the readiness of the program for evaluation) during the planning/design phase.

For example, the discussions that take place during the preplanning phase on the scope, objectives and strategy of the evaluation may disclose conflicting agendas or misunderstandings among the participating departments. If this occurs, it is important that participants address such issues and resolve them early on.

Contributing to the development of the Performance Measurement Strategy

Although it is clear that developing, implementing and monitoring ongoing Performance Measurement Strategies for programs and the horizontal initiative is the responsibility of program managers, the HoE reviews and provides advice on Performance Measurement Strategies to ensure that they effectively support future evaluations of program relevance and performance. In that context, the HoEs of participating departments should collectively review and provide advice on all aspects of the Performance Measurement Strategy of the horizontal initiative to ensure that it will effectively support the objectives of the evaluation.

It is recommended that participating departments come to an agreement on an overall Performance Measurement Strategy during the preplanning phase so that they can start collecting data to be used in the evaluation.

The Performance Measurement Strategy should include a logic model as well as common indicators to support the evaluation. Normally, performance measurement plans can be refined on an ongoing basis during the subsequent phases to continuously improve the process. While continuous improvement is also encouraged for horizontal initiatives, it must be recognized that this is more difficult and time-consuming when multiple parties are involved. Supporting Effective Evaluations: A Guide to Developing Performance Measurement Strategies should also be consulted in the development of Performance Measurement Strategies.

For additional information on the development of a Performance Measurement Strategy see Appendix A: Governance Toolkit, Performance Measurement Strategy Considerations.

Keep in mind that, according to the Directive on the Evaluation Function, the HoE is responsible for “submitting to the Departmental Evaluation Committee an annual report on the state of performance measurement of programs in support of evaluation” (Section 6.1.4 d). To support the management or coordinating body for the evaluation of the horizontal initiative, the HoE should be prepared to issue periodic assessments of the state of the Performance Measurement Strategy for the horizontal initiative that can inform departmental annual reports. Given that the Performance Measurement Strategy represents a key source of evidence for this annual report, its implementation is especially important.

Following the first year of implementation of the horizontal initiative, program managers should consider conducting a review of the Performance Measurement Strategy to ensure that the appropriate information is being collected and captured to meet both program management monitoring and evaluation needs.

2.2.3 Planning/design phase

Usually, the planning/design phase begins one to two years ahead of the commencement of the execution phase. The timing for beginning this phase will depend on the number of partners involved in the evaluation, with larger horizontal initiatives (i.e., higher number of partners) requiring more planning time. The scope of the planning/design phase includes the following tasks:

Planning/Design Phase Tasks

- Confirming the scope and objectives of the evaluation;

- Refining the evaluation approach;

- Refining the governance structure and establishing key procedures for the evaluation;

- Managing the Interdepartmental Evaluation Working Group;

- Determining the level of involvement of participating departments;

- Obtaining agreement on the conduct of the evaluation, including requirements for component studies;

- Developing a viable schedule; and

- Establishing a control framework.

The following guidelines related to these tasks are recommended during the planning/design phase in order to prepare for the subsequent execution phase.

Confirming the scope and objectives of the evaluation

The scope and objectives developed during the preplanning phase should be confirmed and refined, if necessary. This requires participating departments to be clear about the intended audiences for the evaluation and the potential use of the evaluation findings. At this stage, the overarching research questions, which include the core evaluation issues as presented in Annex A of the Directive on the Evaluation Function, should be confirmed.

Refining the evaluation approach

It is also recommended at this stage that the evaluation approach be confirmed and, if necessary, refined. This will confirm whether the evaluation will be conducted as a single unified exercise, a series of component studies leading to an overview evaluation report, or a combination of both approaches. At this time, it is also important to identify and agree on any strategic issues from participating departments that will require special consideration and/or inclusion in the final evaluation report.

Refining the governance structure and establishing key procedures for the evaluation

The governance structure and procedures established during the preplanning phase, including the ToRs, should be confirmed and refined, if necessary. If an IEWG was not established during the preplanning phase, one should be considered for the planning and execution phases, and appropriate ToRs should be developed.

Managing the Interdepartmental Evaluation Working Group

Keeping the IEWG(s) to a manageable size

Efforts should be made to keep the IEWG to a manageable size; ideally, there should be no more than ten core members. However, this may not be realistic in the case of evaluations of large initiatives with many players. In such cases, agreed-upon rules around who needs to be involved, and when, can help. It may be possible to engage participating departments only when their input is required or when decisions are being made that may have an impact on their interests. However, it is advisable for the full IEWG to meet regularly at the start of the evaluation in order to establish a sufficient level of comfort and understanding around the planning for the evaluation of the horizontal initiative. Subsequently, it may be possible to reduce the frequency of plenary meetings and to establish subgroups from participating departments to focus on particular issues that can then be reported back to the full IEWG for comments.

Recognizing the importance of leadership

The effective operation of the IEWG depends on the voluntary cooperation of its members. Securing such cooperation, fostering an atmosphere of collaboration, and building a shared understanding of what is required make considerable demands on the working group's chairperson. Thus, it is important for the success of the evaluation to select as chair of the IEWG (normally from the lead department) someone who has the leadership competencies to meet these demands.

Conducting meetings

From the outset, it is important that the chair of the IEWG encourage all members to participate in an open, honest and forthright manner. This will help to establish a policy of full participation and avoid future misunderstandings. Prior to all meetings, the chair may find it useful to determine possible points of contention. It is good practice to maintain an inventory of such issues, indicating whether the issues have been resolved, have been referred to a higher level for resolution, or remain as points of disagreement.

Addressing possible marginalization

Evaluations of horizontal initiatives often include a mix of departments that vary considerably in size and in the amount of resources they contribute. In such cases, there is a risk that the interests of smaller departments and of those that contribute fewer resources will be overshadowed by the interests of the larger departments. This risk can be mitigated by incorporating mechanisms that allow departments with less investment in the initiative to have a voice on the IEMC or IEWG, when required. This could be achieved by establishing procedural and decision-making rules, such as whether decisions require consensus or a majority. These rules should recognize everyone's voice while providing for issues to be addressed in proportion to the significance of the risks they represent. More fundamentally, it is important to ensure that any concerns of possible marginalization are tabled early on and discussed in a frank and open manner.

Holding a kick-off meeting

The lead department should organize a launch meeting. To the extent possible, this meeting should be attended by all those who have a significant role in the evaluation, including members of the IEWG and any other evaluation and program staff involved. The objectives of the meeting should be to build a common understanding of the purpose of the evaluation, determine how common outcomes will be evaluated, and develop the plans for conducting the evaluation. The meeting should review all aspects of the scope of evaluation, clarify the roles and responsibilities of team members (and their relationships with the management group), and explain how the evaluation team will function together.

Determining the level of involvement of participating departments

Although overall responsibilities for the evaluation should have been identified in the preplanning phase, these will have to be established in greater detail at the time of planning. In general, the level of involvement of each participating department will depend on a range of factors, including:

- Overall size and importance of the horizontal initiative;

- Relevance to the department’s mandate (relevance will normally be highest for the lead department);

- Proportion of the overall funding of the initiative allocated to each department;

- Materiality of funding allocations in the context of the department’s total budget;

- Extent to which funding supports activities that the department would not otherwise undertake;

- Degree to which the department’s activities are integrated with those of other participating departments, and the level at which such integration takes place (activity, output, or outcome);

- Strength and frequency of the department’s collaboration with the other participants in the past; and

- Evaluation capacity within the department.

Lead department for the evaluation

The lead department for the evaluation should already have been established at the preplanning stage. The lead department should be responsible for leading the evaluation, taking the initiative to contact the other participating departments, and providing secretariat and other support, as required. The lead department normally exercises its responsibility for coordinating input, preparing draft evaluation documents, monitoring the progress of the evaluation, and reporting on the results through a secretariat.

Participating departments

The role played by other participating departments will be determined in each case by the factors listed above. In general, participating departments will be involved in:

- Participating in the overall guidance and direction of the evaluation;

- Sharing the costs of the evaluation (where applicable);

- Providing input, such as previous evaluation reports, administrative files, databases and policy papers;

- Conducting or responding to evaluation interviews or questionnaires;

- Reviewing key evaluation documents at critical stages;

- Providing approvals, as necessary; and

- Addressing any recommendations coming out of the evaluation directed at their respective departments.

Depending on the significance of the horizontal initiative for their respective organizations, departments will also need to decide on the extent of their involvement, if any, in the day-to-day management of the evaluation, their investment (and effort) in the evaluation activities, and their level of participation in any task groups that may be established.

Assigning representatives for each participating department

A common practice is for participating departments to assign an evaluation officer to represent the department on any advisory committee, steering committee or working group for the evaluation and to act as a liaison with the lead department’s evaluation function. This individual will also ensure that his or her department is appropriately engaged in the research phase of the evaluation, as well as in the reviews of data and the draft versions of the evaluation reports. He or she will usually also coordinate the approval process within his or her department, coordinate the development of the management response and, where applicable, coordinate the preparation of the management action plan by the program area for evaluation recommendations for his or her department.

Obtaining agreement on the conduct of the evaluation, including requirements for component studies

It is important to establish the ground rules for the conduct of the evaluation, including how it will be governed and managed, the requirements to which it will be subject, and the expectations of participating departments. In particular, the ground rules should specify the contribution that each participating department will make to the evaluation in terms of resources (e.g., funding, information, people) and effort or engagement (e.g., participation in the governance and management of the evaluation). Sometimes this may require adjustments in the roles and responsibilities to ensure that the overall responsibility is viewed as equitably shared by those involved, while recognizing that the significance of the evaluation varies across participating departments. These adjustments are sometimes necessary because the context for the evaluation of the horizontal initiative may have changed since its initial approval. Thus, some departments may have to consider a different role than the one for which they initially planned.

If the evaluation requires a number of component studies, participating departments should agree in advance on their objectives, format and language requirements, and on how any privacy and security issues will be addressed. In that case, it is very important to have a well-defined scope and data-gathering plan, including a plan for gathering data on common outcomes and indicators, so that the information can be readily synthesized when the overview report is prepared. It can also be useful to develop the structure or outline of the planned horizontal report at this stage to build a shared understanding of the information required. Specifying the final report outline and the level of detail to be included in executive summaries can be especially useful if component studies are to be synthesized into an overarching evaluation report.

Developing a viable schedule

An evaluation schedule should be developed that specifies the timing of the evaluation's various phases, the due dates for providing information and submitting reports, and who will be responsible in each case. To the extent possible, the schedule should also include information and dates for planned briefings, important meetings, or other means of communicating and disseminating evaluation findings.

As noted earlier, the complexity associated with a horizontal initiative may increase the overall level of effort and the length of time required to conduct the evaluation. This is particularly likely when the protocols and mandates of the participating organizations vary. In particular, evaluations of horizontal initiatives often require frequent meetings, negotiation of agreements, and other forms of communication that place increased demands on management time. It is also important to ensure that schedules allow sufficient time for reviewing and commenting on draft reports. Moreover, if the final evaluation report is based on component studies, additional time may be required to ensure a reliable synthesis and review by partnering departments. Participating departments that conduct component studies should commit to a timeline that respects the needs of the horizontal evaluation. Experience has shown that joint evaluations can take up to twice as long to carry out as evaluations involving a single organization. Thus, it is important to be realistic when establishing the schedule and not to underestimate the level of effort required or overestimate what can be accomplished with available resources.

Participating departments should ensure that their respective DEPs take into account their participation in the evaluation of horizontal initiatives, including identification of the lead department and other participating departments.

Establishing a control framework

The lead department should ensure that an effective control framework for monitoring the conduct of the evaluation, the preparation of deliverables, and the management of the risks associated with the evaluation is in place before the launch of the evaluation. This would take the form of a typical project management framework with specific milestones, and control and approval points. Lead departments may wish to have this control framework reflected in the ToRs of the evaluation committee(s) and working group(s).

It is important to ensure that, to the extent possible, potential risks have been identified up front and that a plan to manage them is in place. As part of the risk management process, it is also advisable to develop a quality assurance checklist for all stages of the evaluation.

At this point, it is also advisable to consult with the access to information and privacy (ATIP) and legal services units within the lead department and partnering departments to put in place a control framework and process that deals with external information requests. Consideration should be given to developing a consistent approach to dealing with access to information requests that may arise during and after the evaluation, particularly when an evaluation report may contain classified or sensitive information.

2.2.4 Execution phase

The execution phase for the evaluation of most horizontal initiatives would normally begin one to two years ahead of the approvals phase. The appropriate start time should take into consideration the number of partners involved in the evaluation and whether or not component studies are planned. Larger horizontal initiatives with a higher number of partners and those evaluations based on component studies will likely require more time for data-gathering and report production.

Execution Phase Tasks

- Maintaining transparency and effective communications;

- Gathering and analyzing data;

- Monitoring progress and managing risks;

- Preparing reports;

- Developing management responses and action plans; and

- Reviewing and finalizing reports.

The following guidelines related to these tasks are recommended during the execution phase.

Maintaining transparency and effective communications

Ensuring transparency

All evaluation committees and working groups should endeavour to provide timely and comprehensive records of meetings. These records should reflect the key individual contributions and opinions of departmental members and list the decisions made. Ideally, these records should be written and circulated immediately after each meeting, giving members an opportunity to comment.

Maintaining effective communications

Maintaining effective communications throughout the process is particularly important in the evaluation of horizontal initiatives, given the number of people and departments involved. Committee and working group meetings should be held at regular intervals during the evaluation. In addition, consideration should be given to tasking a secretariat with setting up a secure intranet site or similar facility for sharing documents and ideas and holding virtual meetings.

Gathering and analyzing data

At this point, participating departments should already have a clear understanding of data requirements (i.e., for performance measurement), including timing and format, as well as who is responsible for collecting, assembling and analyzing the data. IEMC and IEWG members from each participating department should act as a liaison with program staff to facilitate access to their respective departmental data to be used in the evaluation. It is also important to ensure that those responsible for providing and using the data are aware of any related privacy or security requirements.

Monitoring progress and managing risks

The risk management strategies developed during the earlier phases should be reviewed periodically to ensure that the necessary risk mitigation strategies are in place and that associated responsibilities are clearly understood. The lead department is normally responsible for monitoring progress and ensuring that risks are managed. It is, therefore, important that the lead department be informed quickly of emergent risks and potential delays. The lead department should closely monitor the evaluation to detect any potentially significant problems early on, and act quickly to address them.

To monitor progress effectively, it is useful to establish key control points in the project schedule and to put mechanisms in place for sharing progress. A tool such as a critical path diagram, which identifies interdependencies in the project schedule, can also be helpful. With such a tool, the impacts of any delays can be fully understood, and any resulting changes to the project schedule can be kept realistic and acceptable to all key stakeholders.

Preparing reports

This is arguably one of the most critical and potentially most contentious stages of the evaluation. While the lead department has primary responsibility for drafting the overview report, it is important to develop rules and procedures for deciding what goes into the report, so that elements such as key findings and recommendations are acceptable to all concerned. It is also important to consult with participating departments at various stages in the preparation of the draft and final reports, to deal promptly with any disagreements (using the dispute resolution measures already in place), and to ensure that all departments can see themselves in the final product. One way to promote buy-in is to present the preliminary findings so that all partners can comment on them and provide clarifications to address any perceived gaps.

The lead department is responsible for ensuring the quality of the overview report on the evaluation of the horizontal initiative. Consideration should therefore be given to a peer review to ensure that the findings are adequately supported by the evidence and that the recommendations are logical and internally consistent.

Developing management responses and action plans

It is the responsibility of program managers to develop a management response, and develop and implement an action plan for the evaluation. Management responses should be developed in consultation with senior management in participating departments. If these management responses are shared across multiple departments, each deputy head will have to formally approve them. Management responses to department-specific recommendations and action plans should be developed and approved by the management of the department in question.

Reviewing and finalizing reports

It is important to share drafts as widely as possible, seeking feedback from all those who have been actively involved in the evaluation. This is important to confirm the accuracy of the findings, reveal additional information and views, build a sense of ownership by participants, and generally add to the quality and credibility of the report. It is also important to allow adequate time for incorporating comments, although if time is short, consideration could be given to holding an inclusive workshop where the report’s findings and conclusions may be presented, discussed, and commented on by the workshop participants. Such a workshop can also provide a useful forum for addressing any disagreements over the content of the report.

If there has been effective communication and an opportunity to review preliminary findings, the final report should contain no elements of surprise for the interdepartmental working groups, the interdepartmental management and advisory committee, and the departmental committees. The report should first proceed for review and acceptance by the IEMC before being referred to the senior officials of participating departments for approval.

It may also be advantageous at this stage to have the report proactively reviewed by the communications and legal units of each department. Although precautionary, this step is particularly advisable for high-profile or sensitive subject matter. It makes communications offices aware of the report so that they can prepare briefing materials for ministerial offices, and it ensures that final evaluation reporting material respects the provisions of the Access to Information Act, the Privacy Act and the Policy on Government Security. This can provide assurance that no record will be improperly disclosed in contravention of legislation or Government of Canada policy.

2.2.5 Approvals phase

Once an evaluation report is drafted, it will then go through the approval process agreed to during the preplanning phase. Building on the approval process, it is useful to obtain agreement on which findings apply to which department. The evaluation unit of each partnering department will need to manage its own department’s input and approval process while respecting overall approval timelines.

Departments have generally noted that the approval of an evaluation of a horizontal initiative requires longer time frames and additional effort. The time frame will depend on the number of deputy heads to be consulted and the requirements of the approval process in each participating department. The scope of the approvals phase includes the following task:

Approval Phase Task

- Obtaining deputy head approvals.

The following guidelines related to this task are recommended during the approvals phase.

Obtaining deputy head approvals

Approvals by the deputy heads of all partnering departments could be carried out either in parallel or sequentially. The final stage in the approval process is the sign-off by the deputy head of the lead department.

2.2.6 Post-approval phase

The post-approval phase includes the following tasks:

Post-Approval Phase Tasks

- Posting and distributing the report;

- Managing external communications;

- Responding to access to information requests; and

- Monitoring and tracking management responses and action plans.

The following guidelines related to these tasks are recommended during the post-approval phase.

Posting and distributing the report

The lead department is responsible for submitting the completed evaluation report including the management response and action plan section (in electronic format) to the Secretariat immediately upon approval of the report by the deputy heads.

The lead department is also responsible for posting the final report on its website. If participating departments wish to make the report available on their respective websites, it is recommended that they do so by linking to the lead department's website. In each case, departmental evaluation units should appropriately brief their respective deputy heads and ministers, informing them that the report is about to be posted. It is also recommended that the lead department notify participating departments of the report's planned release.

Managing external communications

It is recommended that the lead department be responsible for developing media lines and questions and answers for the findings and recommendations of the report in consultation with participating departments, as and when necessary.

Responding to access to information requests

Once the report has been posted, it may prompt access to information requests even though it is publicly available. Consideration should be given to proactively developing a consistent approach to responding to external information requests.

Individual departments are responsible for responding to access to information requests directed at their department with respect to the evaluation of the horizontal initiative. It is recommended that they consult with the lead department when responding to such requests.

Monitoring and tracking management responses and action plans

As per the Policy on Evaluation, the DEC of each participating department is responsible for ensuring follow-up to the action plans approved by its deputy head. In order to support the DECs of participating departments, it is recommended that the IEMC track progress against recommendations and that members be prepared to issue periodic reports to their respective DECs.

2.3 Approaches

2.3.1 Generic illustrative examples

The following section illustrates considerations and potential approaches to the governance of evaluations of horizontal initiatives by providing generic illustrative examples for horizontal initiatives of low, medium and high complexity. Each example illustrates the principles and guidelines outlined in section 2.2 as they are applied to the governance practices of the evaluation in order to find the “right size” of governance for each of the three varying levels of horizontal initiative complexity. It should be noted that, due to the inherent complexity of a horizontal initiative, a “one size fits all” approach cannot be used in the evaluation of a horizontal initiative.

As an introduction, Table 1 illustrates the governance considerations and characteristics of horizontal initiatives exhibited by three levels of complexity. This table will assist in establishing the level of complexity of the horizontal initiative.

| Overarching Governance Considerations | Low Complexity | Moderate Complexity | High Complexity |
|---|---|---|---|

Governance Practices for Varying Types of Horizontal Initiatives

The following tables provide examples of how the principles and guidelines contained in the preceding chapters might translate into governance practices for each phase of the evaluation based on the complexity of the horizontal initiative.

| Tasks | Low Complexity | Moderate Complexity | High Complexity |
|---|---|---|---|
| Contributing to the preparation of Cabinet documents | | | |