Executive (EX) Group Job Evaluation Standard 2022

Amendments

| Amendment Number | Date | Description |
|---|---|---|
| 1 | 2024 | |

On this page

- Introduction

- Executive (EX) Group Definition

- Introduction to Job Evaluation using the Korn Ferry Hay Guide Chart Profile Method

- Measuring Know-How

- Measuring Problem Solving

- Measuring Accountability

- Job Profiles

- Validating Against and Using the Benchmarks

- Classification Levels: Executive Group

- Appendix A – Guide Charts

- Appendix B – Benchmark Index by Level

- Appendix C – Benchmark Positions

Introduction

The Executive Group Job Evaluation Standard (formerly known as the Executive Group Position Evaluation Plan) has been used to evaluate Executive Group positions in the Federal Public Service since 1980. The plan is based on the Korn Ferry Hay Guide Chart – Profile Methodology and is more commonly known as the Hay Plan. This methodology is used by more than 12,000 organizations in more than 90 countries, in both the public and private sectors and across a variety of industries.

This latest version of the Executive Group Job Evaluation Standard came into effect on October 3rd, 2022 and replaces the September 2005 version. Changes made to this latest version include:

- Revised guide charts and updated language to facilitate the use of the standard and reduce potential bias.

- Revised benchmark positions to reflect the full range of modern Executive Group jobs.

- Revised Accountability Magnitude Index (AMI) from 8.0 to 9.0.

This guide is designed to:

- Provide guidelines that will foster consistency in the evaluation of Executive Group jobs while retaining the flexibility required to properly reflect the diverse nature of these jobs.

- Provide an overview of the basic concepts and principles underlying the job evaluation process.

- Serve as an adjunct to the materials and experience received during basic job evaluation training or refresher courses.

Executive (EX) Group Definition

This guide is intended for classification advisors and those involved in the evaluation of jobs allocated to the Executive (EX) Group.

- Group Definition

The Executive Group comprises jobs located no more than three hierarchical levels below the Deputy or Associate Deputy level and that have significant executive managerial or executive policy roles and responsibilities or other significant influence on the direction of a department or agency. Jobs in the Executive Group are responsible and accountable for exercising executive managerial authority or providing recommendations and advice on the exercise of that authority.

- Inclusions

Notwithstanding the generality of the foregoing, it includes jobs that have, as their primary purpose, responsibility for one or more of the following activities:

- Managing programs authorized by an Act of Parliament, or an Order-in-Council, or major or significant functions or elements of such programs.

- Managing substantial scientific or professional activities.

- Providing recommendations on the development of significant policies, programs or scientific, professional, or technical activities; and

- Exercising a primary influence over the development of policies or programs for the use of human, financial or material resources in one or more major organizational units or program activities in the Public Service.

- Exclusions

- Jobs excluded from the Executive Group are those whose primary purpose is included in the definition of any other group.

Introduction to Job Evaluation using the Korn Ferry Hay Guide Chart Profile Method

Job Evaluation Fundamentals

Purpose

Job evaluation provides a foundation for an organization to:

- Establish the appropriate rank order of jobs

- Establish the relative distance between jobs within the ranking

- Provide a systematic measurement of job size relative to other positions, to make salary comparisons possible

- Provide a reliable basis for connecting to external market data

- Provide a source of information on the work being done in a unit prior to making restructuring decisions

Fundamental Premises

The evaluation of Executive (EX) Group jobs is based on the Korn Ferry Hay Guide Chart Profile Method. The logic behind the Method is:

- Every organization exists to produce identifiable end results

- An organization is created when more than one individual is required to accomplish the tasks to produce those end results

- Every viable job in an organization has been designed to make some contribution toward reaching those end results

- That contribution can be systematically measured using a common set of factors across all executive jobs

The Ranking / Validation Process

The Method is a process to determine the relative value of EX Group positions. In other words, value determinations are based on the relative degree to which a position, competently performed, contributes to what its unit was created to accomplish within a specific organizational context, compared with other EX positions.

| Concept | Application |
|---|---|
| The notion of competent performance in job evaluation | Job evaluation measures the contribution made by a position, not the contribution an incumbent may or may not make in the position. Since jobs are designed on the assumption that they can and will be competently performed, the evaluator assumes that competent performance exists and makes no judgements about performance. |

The contribution the position makes to the organization is determined by measuring job content, as set out in the job description, using three factors:

- Know-How

- Problem Solving

- Accountability

The three factors are interdependent. We are concerned with the Know-How that is required by the role to Solve the Problems which must be overcome to achieve the results for which the role is Accountable.

The Method uses these factors and their sub-factors in combination to determine the value of positions using numerical points. The Korn Ferry Hay Guide Charts are the tools used to determine the degree to which the factors (or dimensions) are found in one job relative to the degree to which they are found in another, and the numerical values (points) to be assigned. See Appendix A – Guide Charts.

It is important to remember that there are no absolutes. It is simply a matter of determining how much more or less of each factor any job has relative to others around it. As a result, three key steps in the evaluation process are:

- Looking at jobs within their organizational context, not in isolation.

- Looking at the descriptions and definitions of each sub-factor and applying evaluation rules on a consistent basis to establish the appropriate rating for each sub-factor.

- Validating the evaluations for each of the factors through precise Benchmark comparisons.

Overview of the Evaluation Process

1. Understanding the Job

An accurate job description is an essential component of the job evaluation process. It provides much of the necessary information from which to construct an evaluation of the job. To do that, it must provide a clear and succinct description of:

- The job's purpose and the end results for which it is accountable (found in the General and Specific Accountability statements)

- Where the job sits in organizational terms (found in the Organization Structure statement and the organization charts)

- The dimensions of the job (found in the Dimensions statement)

- The nature of the job/role (found in the Nature and Scope of duties statements)

Three key concepts which govern the use of job descriptions in arriving at a valid evaluation are:

| Concept | Application |
|---|---|
| The need for up-to-date job descriptions | The job description should be up to date so that the job can be evaluated as it is, not as it was and not as it might be or could be. It should describe what is required of the job. Jobs change, and it is important to have accurate, complete, and current information. |
| Avoiding title comparisons | The title of a position can provide a strong clue about where to look for appropriate Benchmark comparators. However, title comparisons on their own can distort valid evaluations, because what one job holder does and what occurs in another job with a similar title may not be the same at all. For this reason, titles alone are never adequate for making proper evaluations. |
| Examining the meaning behind the words | There are no “magic words”. Job descriptions may contain words/phrases that are designed to impress evaluators (e.g., strategic, complex, transformational); evaluators must look beyond the words used to understand the reality of the job. |

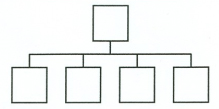

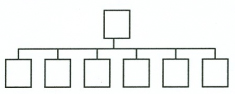

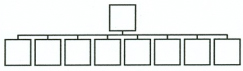

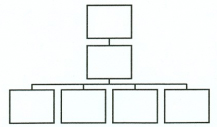

2. Understanding the Job Context: Using the Organization Charts

It is important to avoid viewing the job as though it exists in isolation. Organization Charts show two things:

- Where the position fits within the unit structure (its hierarchical level). This is very important information for identifying potential Benchmark comparators.

- The impact and influence of other jobs on the position. Organizational interrelationships, particularly where one job provides functional guidance to another, have a strong influence on job size. Organizational interrelationships can also indicate potential overlaps or duplications, which the job descriptions, taken in isolation, could mask. The evaluator should always consider which other positions are also involved in the work and their contribution to the work.

A key concept for weighing the influence of organizational relationships is:

| Concept | Application |
|---|---|
| The need to recognize both lateral and vertical relationships | Both vertical and lateral relationships affect job size. It is a common mistake to overlook the lateral relationships between peer positions and overemphasize the vertical ones between superior and subordinate. It is important to look at both equally critically. |

3. Evaluating the Job: Using the Three Factors

The three evaluation factors provide a common yardstick which makes it possible for actual job comparisons to be made. The three factors represented on the charts are:

- Know-How, which encompasses three scaled sub-factors:

  - Depth and scope of practical/technical/specialized Know-How

  - Planning, organizing, and integrating knowledge

  - Communicating and influencing skills

- Problem Solving, which encompasses two scaled sub-factors:

  - Thinking environment

  - Thinking challenge

- Accountability, which encompasses three scaled sub-factors:

  - Freedom to act

  - Area of Impact (Magnitude)

  - Nature of Impact

Three key concepts which underlie the Know-How, Problem Solving and Accountability factors are:

| Concept | Application |
|---|---|
| Comparing jobs according to universal factors | Work is a process through which skills, knowledge and abilities are applied to challenges, issues, or problems in order to achieve outcomes or deliverables for which the job is accountable. It is possible to evaluate diverse jobs using the three factors of the Method and Working Conditions, because they incorporate the fundamental characteristics that researchers have found are common to the nature of work and are therefore present to some degree in every job. These factors form a common measure that can be appropriately applied to any job in order to evaluate the work done in the job. |
| The need to focus on job content | The purpose of job evaluation is to establish, as objectively as possible, each job's relationship to others in terms of content and requirements. This is particularly difficult if the current classification level, rating, or historical relationship is referred to during evaluation. The evaluator must take pains to ignore the related assumptions that may go with knowing the suggested organizational level of the job, the incumbent, or the (likely) salary connected with the position. |
| The interrelationships of the factors | The Korn Ferry Hay Guide Chart Methodology differs from most Job Evaluation tools used by the Federal Government in that the factors are not evaluated or assessed independently. The Know-How, Problem Solving and Accountability factors and their evaluations are linked. This is described further in the application guidelines for each factor and should be kept in mind throughout the job evaluation process. Certain combinations of factor evaluations follow logically from, and reflect, job and organization design. |

4. Using the Numbering Pattern of the Guide Charts

The numbering system on the Guide Charts is geometric, with point values increasing in steps of approximately 15%. For example: 100, 115, 132, 152, 175, 200 and so on, with the value doubling every five steps. This numbering progression runs through all Guide Charts.

| Concept | Application |
|---|---|
| Step differences: the building blocks of job evaluation | In developing the Guide Charts, the decision to adopt a geometric progression was made because, when comparing objects generally, we perceive relative (rather than absolute) differences. Fifteen percent (rather than 10, 12 or 20%) was chosen because in tests it gave the best inter-rater reliability and it reflects the human capability to perceive a noticeable difference in relative size; each 15% Step difference represents the smallest perceptible difference on which consensus can be established. Conveniently, it also gives a pattern of numbers that is easy to remember: starting at 100 and continuing in (rounded) 15% Steps, the pattern of numbers doubles every five Steps. The notion of Step differences is critical because it provides a framework for consistent, quantified judgements to be made based on the minimum perceptible difference (just noticeable difference) that well-informed and experienced evaluators can discern between jobs or elements of jobs. |
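The numbering pattern can be reproduced with a short sketch. The sketch assumes that the first five values are 100 × 1.15^k rounded to the nearest integer, and that every later value is exactly double the value five steps earlier. The function names `guide_chart_series` and `step_difference` are hypothetical and are not part of the Guide Charts.

```python
import math


def guide_chart_series(n_steps: int) -> list[int]:
    """Return the first n_steps Guide Chart point values (illustrative sketch).

    Assumption: the first five values are 100 * 1.15**k rounded, and each
    later value doubles the value five steps before it.
    """
    base = [round(100 * 1.15 ** k) for k in range(5)]  # 100, 115, 132, 152, 175
    # Step i reuses base value (i mod 5), doubled once per completed group of five.
    return [base[i % 5] * 2 ** (i // 5) for i in range(n_steps)]


def step_difference(a: int, b: int) -> int:
    """Approximate number of ~15% Steps from point value a to point value b."""
    return round(math.log(b / a) / math.log(1.15))


if __name__ == "__main__":
    print(guide_chart_series(12))
    # [100, 115, 132, 152, 175, 200, 230, 264, 304, 350, 400, 460]
    print(step_difference(350, 460))  # 2
```

Because each value is rounded, adjacent values differ by approximately, not exactly, 15%. This is why step counts are rounded to the nearest whole Step.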

The Guide Charts used for EX Group positions are a subset of the Korn Ferry Hay Master Charts. As such, they have been sized to include only the relevant portions for evaluating EX Group positions, plus a suitable floor and ceiling to provide the outer parameters for the evaluation context.

The structure of the Guide Charts also allows an evaluation to be nuanced or “shaded.” Modifiers (pull-up or pull-down), shown as + or -, can be added to several of the factors (as indicated in the respective factor sections below) to support the selection of a higher or lower point value. Modifiers allow the evaluator to recognize that some jobs with the same general factor rating are, in fact, closer to the top or bottom of the value range provided for that rating.

5. Validate the Evaluation Logic

There are several ways to ensure the quality of EX Group position evaluations. The first of these quality assurance measures involves double-checking that the combination of ratings and the value assigned to each factor are logically consistent. Factor-specific validation checks are included in the explanation of each factor in this manual.

6. Ranking the Position

Once points have been assigned to all three factors, it is a straightforward matter to make a preliminary ranking of the position based on the sum of those points. The minimum and maximum points defined for each EX Group level indicate which level the total falls within, and therefore the position's classification.
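The preliminary ranking step can be sketched as a sum followed by a range lookup. The level names and point ranges in `PLACEHOLDER_RANGES` are hypothetical placeholders, not the EX Group values. The actual ranges are set out in the Classification Levels section of the standard and would need to be substituted.

```python
from typing import Optional

# HYPOTHETICAL placeholder ranges (level, min_points, max_points), inclusive.
# These are NOT the EX Group ranges; substitute the standard's actual values.
PLACEHOLDER_RANGES = [
    ("LEVEL_A", 1000, 1399),
    ("LEVEL_B", 1400, 1799),
    ("LEVEL_C", 1800, 2299),
]


def total_points(know_how: int, problem_solving: int, accountability: int) -> int:
    """Preliminary job size: the sum of the three factor scores."""
    return know_how + problem_solving + accountability


def preliminary_level(total: int, ranges: list[tuple[str, int, int]]) -> Optional[str]:
    """Return the first level whose [min, max] range contains total, or None."""
    for level, low, high in ranges:
        if low <= total <= high:
            return level
    return None  # total falls outside every range: revisit the factor ratings


if __name__ == "__main__":
    total = total_points(400, 304, 528)  # hypothetical factor scores
    print(total, preliminary_level(total, PLACEHOLDER_RANGES))  # 1232 LEVEL_A
```

The result is only a preliminary ranking. It still has to be reconciled with the Benchmarks and with the positions around it.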

7. Reconciling the Evaluation with the Benchmarks

The most important test of the validity of the evaluation is finding comparable reference evaluations in the standardized continuum of the Benchmarks.

The method for validating against the continuum is to “prove” the evaluation by finding several comparable reference positions from the standardized Benchmark positions. Generally, the benchmark validation step is done after the position has been evaluated against all three factors.

8. Reconciling the Evaluation with Others Around It (Classification Relativity)

The other aspect of quality assurance involves ensuring that the evaluation makes sense within the continuum of EX Group evaluations. This means checking the evaluation against the evaluations of other positions around it in the unit (based on the organization charts), and more broadly checking the evaluation against similar roles in other organizations (relativity).

9. Job Profiles

A key step in validating the evaluation logic is checking the job's short profile: the relative proportions of Know-How, Problem Solving and Accountability in a position. Positions that exist to deliver operations and services will have a relatively high score in Accountability, while those focused on long-term planning and policy development will score relatively higher in Problem Solving. The combination of the points should reflect the nature of the work.
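A profile check can be sketched by counting the ~15% Steps between the Accountability and Problem Solving scores. The sketch assumes the common Hay-style notation: "A<n>" when Accountability is n Steps above Problem Solving, "P<n>" when Problem Solving is n Steps above Accountability, and "L" when the two are level. The function `short_profile` is hypothetical, and the section "Job Profiles" remains the authoritative description.

```python
import math


def short_profile(problem_solving: int, accountability: int) -> str:
    """Express the Accountability vs Problem Solving balance in Steps.

    Assumed notation: 'A<n>' (Accountability-heavy), 'P<n>'
    (Problem Solving-heavy) or 'L' (level).
    """
    steps = round(math.log(accountability / problem_solving) / math.log(1.15))
    if steps > 0:
        return f"A{steps}"
    if steps < 0:
        return f"P{-steps}"
    return "L"


if __name__ == "__main__":
    print(short_profile(304, 460))  # A3: e.g., an operations/service role
    print(short_profile(400, 350))  # P1: e.g., a policy development role
```

The profile produced this way could be compared with the nature of the work described in the job description, consistent with the expectation stated above.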

10. Documenting the Job Evaluation

The final evaluation should be supported by a written rationale.

Measuring Know-How

Know-How is the sum of every kind of knowledge and skill, however acquired, that is required for fully competent job performance. It can be thought of as “how much skill and/or knowledge about how many things and how complex each is”.

Know-How has three sub-factors:

- Practical, Technical, Specialized Know-How measures the depth and scope of the subject matter knowledge and recognizes increasing specialization (depth) and/or the requirement for greater breadth (scope) of knowledge.

- Planning, Organizing and Integrating Knowledge measures the knowledge required for integrating and managing programs/functions. It involves combining the elements of planning, organizing, coordinating, directing, executing, and controlling over time and is related to the size of the organization, the functional and geographic diversity, the diversity and complexity of stakeholder relationships, and the time horizon.

- Communicating and Influencing measures the criticality of interpersonal relationships with individuals and/or groups internal and/or external to the organization in achieving objectives.

Practical, Technical, Specialized Know-How

There are three important concepts to grasp in order to apply this sub-factor scale correctly. These concepts, described in the table that follows, are:

- Equivalency of depth and breadth

- The Know-How required to manage specialist positions

- Equivalency of work experience and formal education

| Concept | Application |
|---|---|
| Equivalency of depth and breadth in Practical, Technical, Specialized Know-How | It is important to appreciate the combination of both the breadth and the depth of the varieties of Know-How required by a role. Generalists may need a broader range of knowledge than their specialist colleagues, who may well require more depth of knowledge in their area of specialization. It is important to recognize that the demands for Practical Know-How in operational/service positions such as line management and business partner roles (e.g., human resources or communications) can be as great as the Technical/Specialized knowledge requirements of professional jobs such as engineering, science, law, or information technology. |
| The Know-How required to oversee specialist positions | Executives do not necessarily need the same depth of subject-specific Technical or Specialized Know-How as those working below them, because managers are not required to do their subordinates’ jobs. However, they do require sufficient understanding of their subordinates’ areas of expertise to manage their activities and evaluate their performance (e.g., the position may not be the subject matter expert but still needs enough knowledge to evaluate the work of its subordinates). Furthermore, executives are likely to require a broader range of knowledge than their subordinates, as subordinates are likely to focus on a subset of the activities that the manager needs to understand. |
| Equivalency of work experience and formal education | While it is true that some Know-How can only be gained formally (e.g., an M.D. in Medicine), it is important to focus on the knowledge and skill required to do the work, not on how an incumbent might come to possess that knowledge. Know-How can be acquired in multiple ways and does not necessarily require specialized formal education. |

EX Group positions fall in the range of F to G for Practical, Technical, Specialized Know-How. An EX Group position is unlikely to be below F, the degree that applies to positions requiring a seasoned professional with command of their subject area. Similarly, EX Group positions are unlikely to be above G, as this would suggest a global authority on a complex domain.

Note: A modifier (pull-up or pull-down) can be used on the Practical, Technical, Specialized Know-How sub-factor.

The following provides some additional guidance on this sub-factor for EX Group work:

| Degree | Deep Expert (Depth) | Generalist Leader (Breadth) |
|---|---|---|
| F- | Senior practitioners who provide guidance to more junior colleagues but are not the approval authority for complex/technical advice. Requires deep/specialized knowledge of an area of a sub-domain (e.g., Costing as an accounting sub-function). | Roles requiring a breadth of knowledge to provide leadership to multiple disciplines, but where technical authority is provided by another role. |
| F | Roles that provide technical leadership and guidance to other specialists. Roles are regarded as technical specialists when they do not require additional review or approval (from a technical perspective). Requires complete knowledge of a domain and common interrelationships with other relevant domains (e.g., Accounting). E.g., Director, Financial Accounting | Roles requiring a breadth of knowledge to provide leadership to multiple disciplines. E.g., Director, Health Program Integrity and Control |
| F+ | Roles requiring complete knowledge of a domain, its common interrelationships with other relevant domains, and new/emerging applications of the subject domain (e.g., application of nascent technologies to substantially change the work of the domain). E.g., Director, Drug Policy | Roles requiring a breadth of knowledge to provide leadership to multiple disciplines within multiple domains (e.g., Corporate Services roles providing leadership to distinctly different disciplines). E.g., Director, Contracting, Materiel Management and Systems |
| G- | Roles requiring very deep specialization in complex fields of knowledge that provide authoritative and determinative knowledge and insights to the organization, but are limited by direction from a central authority or a peer position with specialization in an interrelated field. E.g., Assistant Deputy Commissioner, Correctional Operations, Prairie Region | Roles requiring substantial comprehension of all relevant fields of knowledge within a complex organization that provide leadership to multiple disciplines in a large and complex organization, with considerable organizational guidance. E.g., Chief Executive Officer, Canadian Forces Housing Agency |
| G | Roles requiring very deep specialization in complex fields of knowledge that provide authoritative and determinative knowledge and insights to the organization. E.g., Vice-President, Health Security Infrastructure | Roles requiring substantial comprehension of all relevant fields of knowledge within a complex organization that provide leadership to multiple disciplines in a large and complex organization. E.g., Assistant Deputy Minister, Americas |
| G+ | Pull toward H (“Global Authority”), which is relevant in fields where Canada has a global leadership role. This position is the primary Government of Canada expert. E.g., Assistant Deputy Minister, International Trade and Finance | Appropriate for first hierarchical level roles expected to be the subject matter authority within the branch/sector rather than an integrator of expertise from subordinate roles. E.g., Assistant Deputy Minister, Science and Technology |

The depth and breadth of Practical, Technical, Specialized Know-How is measured on the vertical axis of the Chart.
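The range guidance above (F to G, with F- through G+ used in the guidance table) lends itself to a simple screening check. In this sketch, `check_pts_degree` is a hypothetical helper. It only flags ratings for review; the evaluator's judgement and Benchmark comparisons still decide the rating.

```python
import re

# Degrees covered by the EX Group guidance table above, lowest to highest.
EX_PTS_DEGREES = ["F-", "F", "F+", "G-", "G", "G+"]


def check_pts_degree(rating: str) -> str:
    """Screen a Practical, Technical, Specialized Know-How rating.

    Returns 'ok' for F- through G+ and 'review' for any other degree,
    since EX Group positions are unlikely to fall below F or above G.
    """
    rating = rating.strip()
    # A degree is one capital letter with an optional + or - modifier.
    if not re.fullmatch(r"[A-Z][+-]?", rating):
        raise ValueError(f"not a valid degree: {rating!r}")
    return "ok" if rating in EX_PTS_DEGREES else "review"


if __name__ == "__main__":
    for r in ["F", "G+", "E+", "H"]:
        print(r, check_pts_degree(r))
```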

Planning, Organizing and Integrating Know-How

EX Group positions must know how to plan, organize, motivate, co-ordinate internally and externally, direct, develop, control, and evaluate or check the results of others' work. These skills are required in directing activities such as those inherent in daily operational activities (line management), in consultative activities such as those inherent in directing policy development, or in both (as in positions which direct secondary business activities that support the business). The following key concepts should be considered when evaluating this sub-factor:

| Concept | Application |
|---|---|
| Glossary of Terms - Planning, Organizing, and Integrating | Function: a group of diverse activities which, because of common objectives, similar skill requirements, and strategic importance to an organization, are usually directed by a member of top management. Subfunction: a major activity which is part of, and more homogeneous than, a function. Element: a part of a subfunction, usually very specialized in nature, and restricted in scope or impact. |
| The more complex the job, the broader the management skills required. | The following affect the complexity of the job and the degree to which planning, organizing, and integrating skills are required: Functional Diversity – the range of programs/functional activities and the complexity of their inter-relatedness, requiring planning, organizing and integrating to achieve unit objectives, and the extent to which interests and objectives are aligned. Stakeholder Diversity – the number and diversity of stakeholders internal to the department, horizontally across one or more departments, and external to the Government of Canada; the frequency and complexity of these interactions; and the extent to which interests and objectives are aligned. Time Horizon – the degree to which jobs deal with long- or short-term issues, for example, building/evolving organizational capacity for future challenges rather than delivering today’s services. Physical Scale – the size of the operation required to achieve expected results and the geographic dispersion of the work. Consideration should be given to the combination of these elements and a balanced judgment made. |

Note: A modifier (pull-up or pull-down) can be applied to the Planning, Organizing and Integrating Know-How sub-factor.

The selection of a reasonable degree for this sub-factor is based on the collective weight of the above indicators and should not be driven by the highest or lowest indicator. Benchmark jobs are to be referenced to validate the evaluation. The chart below provides guidance on how to assess and measure Planning, Organizing, and Integrating (POI).

| POI | II+/III- | III | III+/IV- | IV | IV+ |
|---|---|---|---|---|---|
| Functional Diversity | Related – Management of operations or services which are generally related in nature and objective, where there may be a requirement to coordinate with associated functions. | Differing – Management of a major program with activities differing in objectives, or management and integration of function(s) with multiple business lines and/or differing elements that have an impact on the whole organization. | Diverse – Integration and management of multiple programs with divergent purposes, or management of a significant function critical to the achievement of a broader mandate (e.g., the entire Public Affairs program in a substantial organization). | Broad – Strategic integration and business/functional leadership of programs with significant differences and divergent purposes (e.g., a sector that is a major component of the departmental mandate), or of strategic functions that affect the whole organization (e.g., complete Corporate Services). | Heterogeneous – Strategic integration and business/functional leadership for the national delivery of multiple major programs/functions that are broad in scope, or government-wide leadership of broad key initiatives. |
| Stakeholder Diversity | Local / internal and aligned; consider effect on other groups | Regional / some external and differing priorities | National / significant external and cross government; consensus building is key | International / all levels of government; competing objectives to reconcile | Global / alignment around key government priorities; conflicting objectives to reconcile |
| Time Horizon | Months to Annual | Current year and next | Two to three years | Three to five years | Five years + |
| Physical Scale (FTEs) | 20-60 | 60-200 | 200-600 | 600-2000 | 2000-6000+ |
| Examples of Benchmarks | Director, Program Evaluation | Director General, Operations and Departmental Security Officer | Director General, Human Resources Operations | Assistant Deputy Minister, Small Business, Tourism and Marketplace Services | Assistant Deputy Minister, Real Property Services |

The requirement for Planning, Organizing and Integrating Know-How is measured on the horizontal axis of the Guide Chart.
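The POI table can be read one indicator at a time and the results then weighed together. This sketch takes its column labels and FTE bands from the table and makes several assumptions:

- Each FTE band includes its lower bound, because adjacent bands share endpoints (e.g., 60).
- Anything from 2000 FTEs up falls in IV+.
- `balanced_poi` is a hypothetical heuristic (the median of the indicator columns), used only to show a result that is not driven by the highest or lowest indicator.

The standard itself calls for a balanced judgment validated against Benchmarks, not a formula.

```python
from statistics import median_low
from typing import Optional

# Column order of the POI guidance table.
POI_COLUMNS = ["II+/III-", "III", "III+/IV-", "IV", "IV+"]

# Lower bound of each Physical Scale (FTE) band, aligned with POI_COLUMNS.
# Assumption: a band includes its lower bound and excludes its upper bound.
FTE_LOWER_BOUNDS = [20, 60, 200, 600, 2000]


def physical_scale_column(ftes: int) -> Optional[str]:
    """Return the POI column suggested by FTE count alone, or None below 20."""
    column = None
    for index, lower in enumerate(FTE_LOWER_BOUNDS):
        if ftes >= lower:
            column = index  # keep the highest band whose lower bound is met
    return None if column is None else POI_COLUMNS[column]


def balanced_poi(indicator_columns: list[str]) -> str:
    """HYPOTHETICAL heuristic: median (lower on ties) of the indicator columns."""
    indices = [POI_COLUMNS.index(c) for c in indicator_columns]
    return POI_COLUMNS[median_low(indices)]


if __name__ == "__main__":
    # Functional, stakeholder, time horizon and physical scale indicators.
    indicators = ["III", "III", "III+/IV-", physical_scale_column(750)]
    print(indicators, balanced_poi(indicators))
```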

Communicating and Influencing

This final Know-How sub-factor measures the degree to which establishing and maintaining effective interpersonal relationships is central to the position achieving its end results.

The requirement for using communicating and influencing skills on the job is represented by three possible levels. For most executive positions, because of their size and/or nature, the achievement of objectives hinges on the establishment and maintenance of effective interpersonal relations.

In assessing each executive position, evaluators must weigh a variety of considerations in making their judgements, such as:

- The degree to which leadership and engagement of others are both integral to achieving results and highly complex or difficult in nature.

- The importance of service to clients and client contact (both internal and external) as integral elements of the job.

- The nature of the client relationship(s).

| Concept | Application |

|---|---|

| Assessing the Frequency and Nature/Intensity of Contacts | In assessing the significance of client contact, evaluators should consider such factors as the frequency and nature or intensity of these contacts. There is a significant difference in communicating and influencing skills where contact is established simply to gather or exchange information and where contact is established and maintained to influence decisions, processes or behaviors which are crucial to the organization successfully achieving its goals. It is also important to relate the nature of the job’s contacts to its objectives. Evaluators should avoid being misled by statements in job descriptions which ascribe contacts to a job that are not in keeping with its objectives and accountabilities. |

There are three levels of Communicating and Influencing:

Level 1: Common courtesy must be employed, and appropriate working relationships established and maintained with subordinates, colleagues, and superiors to accomplish the position’s objectives. However, there is no significant need to influence others in carrying out assignments. Interaction with others is generally for the purpose of a straightforward information exchange or seeking instruction or clarification. This degree of Communicating and Influencing skills is not consistent with EX Group work and should not be used at these levels.

Level 2: In dealing with subordinates, colleagues, and superiors, and in the course of some contact with clients inside and/or outside government, it is necessary to establish and maintain the kind of relationships that will facilitate the acceptance and utilization of the position’s conclusions, recommendations, and advice. In order to achieve desired results, positions must interact regularly with subordinates, colleagues and superiors and have some contact with clients. The nature of these contacts is such that tact and diplomacy beyond the demands of normal courtesy are required. This degree of Communicating and Influencing skills is extremely unlikely for EX Group work.

Level 3: Successful achievement of the position’s program delivery and/or service and/or advisory objectives hinges on the establishment and maintenance of appropriate interpersonal relationships in dealings with subordinates, colleagues, and superiors and in ensuring the provision of service through substantive contact with clients inside or outside government. Skills of persuasiveness or assertiveness as well as empathy and sensitivity to the other person's point of view are essential to ensuring the delivery of service. This involves understanding the other's point of view, determining whether a behavioral change is warranted and, most importantly, causing such a change to occur through the exercise of interpersonal skills.

The Communicating and Influencing Know-How sub-factor is measured, along with Planning, Organizing and Integrating Know-How, on the Guide Chart's horizontal axis.

Note: A modifier (pull-up or pull-down) cannot be applied to the Communicating and Influencing sub-factor.

Combining the Know-How Elements

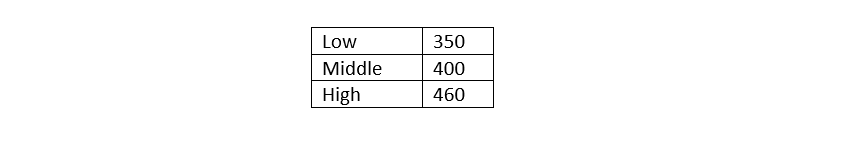

To this point, three independent assessments regarding Know-How have been made – one for each sub-factor. For example:

| | Practical/Technical/Specialized | Planning, Organizing, and Integrating | Communicating and Influencing Skills |
|---|---|---|---|
| Position 1 | F | II | 3 |
| Position 2 | G | III | 3 |
| Position 3 | G | II | 2 |

The total weight of Know-How is derived from the combination of the three sub-factors. The values assigned to the sub-factors will lead the evaluator to a cell on the chart. This cell will contain three numbers, representing three step values.

Example 1 - Text version

- 350

- 400

- 460

The final decision about which of these numbers represents the job's total Know-How requirement will be based on the degree of confidence in the validity of the cell selected. Normally, a solid fit on all three sub-factors would lead you to select the middle number in the cell (e.g., for FII3, 400). If you believe one of the sub-factors might be approaching another degree definition, you can “pull up” (+) the evaluation by choosing the upper value in the cell or “pull down” (-) to the lower value. When contemplating such modifiers, evaluators should always consider the relative strength of the position on the sub-factor being evaluated, as compared with the Benchmark ratings.

Example 2 - Text version

- 350

- 400

- 460

Regardless of the number chosen, you should record any shadings in your evaluation (i.e., any “pulls” up or down). You can do this by placing a + or – beside the sub-factor(s) as a modifier. In the example above, F+ II 3 illustrates a pull-up on Practical, Technical, Specialized Know-How (degree F), resulting in the upper value (e.g., 460). Should there be two modifiers in the same direction, for example F+II+3, the result would remain the upper value (e.g., 460). Where there are two opposing modifiers, for example F+II-3, they cancel each other out, resulting in the middle value (e.g., 400 points).
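The selection rule above can be expressed as a short function. This is a minimal sketch, not part of the Standard: the function name `know_how_points` and the idea of reading the modifiers straight from the recorded notation (e.g., "F+II-3") are illustrative assumptions. It nets the recorded pulls and returns the upper, middle or lower value of the cell accordingly.

```python
def know_how_points(cell: tuple[int, int, int], notation: str) -> int:
    """Pick a Know-How value from a three-value Guide Chart cell (hypothetical helper).

    cell     -- the (lower, middle, upper) step values printed in the cell
    notation -- the recorded evaluation, e.g. "F+II-3"
    """
    lower, middle, upper = cell
    # Treat an en dash as a minus sign, since recorded notation may use either.
    notation = notation.replace("\u2013", "-")
    # Each "+" is a pull-up and each "-" a pull-down; opposing pulls cancel out.
    net_pull = notation.count("+") - notation.count("-")
    if net_pull > 0:
        return upper   # one or more net pull-ups (e.g., F+II3 or F+II+3)
    if net_pull < 0:
        return lower   # one or more net pull-downs
    return middle      # solid fit, or opposing pulls (e.g., F+II-3)


cell = (350, 400, 460)
for evaluation in ["FII3", "F+II3", "F+II+3", "F+II-3"]:
    print(evaluation, know_how_points(cell, evaluation))
```

With the FII3 cell (350, 400, 460), this returns 400 for FII3, 460 for F+II3 and F+II+3, and 400 for F+II-3, matching the examples above. Remember that no modifier may be recorded against Communicating and Influencing.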

| Concept | Application |
|---|---|
| Making numbering differentiations | The overlapping numbering system is designed to allow different kinds of jobs to receive equivalent points, if appropriate. The numbering system also permits the evaluator to show relative differences between jobs whose evaluations put them in the same cell. This is done by assigning a higher number from the cell to the stronger job. |
| The continuum of the cells | The cells on the Guide Chart represent steps along a continuum. It is possible to carefully evaluate a job on each of the sub-factors and still be aware that the cell selected does not completely reflect your final opinion. In this case, you might choose the top or bottom number in the cell, depending on whether you thought there was a “pull” up or down on the evaluation. The notion of “pull” reflects the fact that evaluation is not an exercise in precision but rather a judgmental process, with answers in shades of grey; the differentiation between one level and another may therefore not be clear. For instance, an evaluator could decide, based on comparisons to Benchmarks, that a job is G IV 3 (920), but also feel that it is moving towards the H level of Practical/Technical/Specialized Know-How and pulling towards III for Planning, Organizing and Integrating. The two opposing pulls cancel each other, so the evaluation remains 920 points, expressed as G+ IV- 3 (920). Since the numbering patterns overlap with adjacent cells, the key task is selecting the correct cell and then determining whether it “pulls” in the direction of one of the sub-factors. |

Double Check - Checking the Step Relationships of a Know-How Evaluation Relative to its Superior

There are some guidelines that can assist you in making/validating your judgments. It is important to bear in mind that these are not hard-and-fast rules. They should not be used as a substitute for thorough analysis of the job and interpretation of the Guide Charts.

As a rule, when you are considering a hierarchy of jobs in a job family, technical ladder or reporting structure, the number of steps in the Know-How score can give some insight into the vertical structure of the hierarchy:

No difference

e.g., 608 to 608

When a supervisor and subordinate are evaluated at the same Know-How level it means that the reporting relationship is administrative only. The supervisor will not be managing or evaluating the content of the subordinate’s work, only whether it was delivered on time / on budget. Such situations are extremely rare.

One-step difference

e.g., 460 to 528

A one-step difference between a supervisor and subordinate position generally indicates a point of compression in the structure, giving reason to question the need for the number of organizational layers found. These situations can be found where the superior is responsible for a portfolio of areas (e.g., Assistant Deputy Minister, Corporate Services) and the subordinates have the subject-matter expertise (e.g., Director General, IM/IT). In this type of organizational design, the superior would be responsible for bringing the organizational requirements to the subordinate and assessing whether a proposed solution satisfies the requirements (the “what”) but would not assess the methodology of the solution (the “how”).

Two-step difference

e.g., 460 to 608

This is the typical or logical relationship/vertical distance in a reporting sequence, where the positions operate in the same or related domains. The supervisor provides subject-matter guidance.

Three-step difference

e.g., 460 to 700

Three steps between levels are characteristic of reporting relationships in organizations with relatively homogeneous/well-defined work. These situations also support a broad span of control, as the superior does not need to spend a lot of time with each subordinate. This pattern is often found when the subordinates deliver the services in the short term and the superior focuses on the medium- to long-term evolution of the services and the organization.

Four-step difference

e.g., 460 to 800

This represents a very significant difference, perhaps suggesting that a level may be missing in the organizational structure. Care should be taken to ensure that it is not the result of an evaluation error.
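The step-counting check above can be sketched in a few lines. This is an illustration only: the step list contains just the Know-How values quoted in this section (350 to 920), the function names are hypothetical, and treating differences outside zero to four steps as "re-check both evaluations" is an assumption, since the guidelines do not cover them.

```python
# Know-How step values quoted in this section (each roughly 15% above the previous one).
# Extend the list with further Guide Chart values as needed.
KNOW_HOW_STEPS = [350, 400, 460, 528, 608, 700, 800, 920]

# Paraphrase of the guidelines above, keyed by the number of steps between the two scores.
STEP_GUIDANCE = {
    0: "No difference: reporting relationship is administrative only (extremely rare)",
    1: "One step: possible compression; question the number of organizational layers",
    2: "Two steps: typical relationship in the same or related domains",
    3: "Three steps: homogeneous/well-defined work; supports a broad span of control",
    4: "Four steps: very significant; a level may be missing, or an evaluation error",
}


def step_difference(subordinate: int, superior: int) -> int:
    """Count Guide Chart steps between a subordinate's and a superior's Know-How points."""
    # list.index raises ValueError if a score is not one of the listed step values.
    return KNOW_HOW_STEPS.index(superior) - KNOW_HOW_STEPS.index(subordinate)


def check_relationship(subordinate: int, superior: int) -> str:
    steps = step_difference(subordinate, superior)
    # Differences outside 0-4 steps are not covered by the guidelines; flag them for review.
    return STEP_GUIDANCE.get(steps, f"{steps} steps: outside the guidelines; re-check both evaluations")


print(check_relationship(460, 608))
```

As the guidelines stress, the result is a prompt for further analysis, not a substitute for it.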

Measuring Problem Solving

Problem Solving is the requirement on the part of the position to put Know-How to use in original, self-starting thinking to deal with issues and solve problems on the job.

Measuring Problem Solving involves evaluating the intensity of the mental processes required by the position. Activities include employing Know-How to analyze, identify, define, evaluate, create, and to use judgement to draw conclusions about and resolve issues. To the extent that thinking is circumscribed by policies, centrally guided or referred to others, the Problem Solving requirement of the job is diminished.

Problem Solving is viewed as the mental manipulation of Know-How and is different from the straight application of skills measured by the Know-How factor. For this reason, not all of the Know-How required in a job will necessarily be applied in the Problem Solving elements of that job. Problem Solving is treated and measured as a percentage of Know-How, and the numbering pattern on the chart comprises a series of percentages rather than point values. This percentage can be thought of as the proportion of thinking that is above and beyond straightforward Know-How (e.g., self-starting and original thinking). For example, knowing all the control buttons and knobs on an airplane is Know-How, but landing the plane safely in an emergency is Problem Solving.

Lower-level jobs tend to follow standard procedures and policies most of the time, so their Problem Solving is relatively low. Higher-level jobs operate in grey areas, with nebulous situations and incomplete/conflicting information, so their Know-How is “stretched” and their Problem Solving is relatively high.

Problem Solving has two sub-factors:

- Thinking Environment (vertical axis) – how much assistance is available to help the incumbent do the thinking required and the degree of ambiguity with respect to parameters that the thinking environment presents.

- Thinking Challenge (horizontal axis) – the complexity of the problems encountered and the extent to which original thinking must be employed to arrive at solutions.

| Concept | Application |
|---|---|
| The difference between Thinking Environment and Thinking Challenge | Thinking Environment measures the context in which problem solving takes place. Its main consideration is the amount of help available in that context and the degree of ambiguity that a problem situation presents. Thinking Challenge measures the inherent difficulty of the problems encountered. Its main consideration is the novelty of the solutions being considered. Does the issue require adaptation or innovation/creativity to address it? |

Thinking Environment

Thinking Environment is concerned with the freedom to think and/or the degree of guidance available in approaching problems. It is measured by the presence and/or absence of assistance or constraints affecting how problems are addressed. Thinking may be limited by precedents, people, and service-wide, department-wide, or functional goals, policies, objectives, procedures, instructions, or practices. In general:

- Goals, policies, standards, and objectives provide help by describing the “why” of an issue and establish boundaries.

- Procedures detail the steps necessary to follow through on a policy (how, where, when, and by whom).

- Instructions and practices outline the specific procedures or steps.

The degree to which help is available to job holders varies. For example: help from functional specialists and superiors may be less readily available to managers in geographically remote or organizationally isolated areas, or operations that run 24/7. The degree to which help is available is evaluated along the vertical axis of the chart. There are no hard-and-fast rules. However, here are some guidelines:

- At the D level, what must be done is often defined and how things must be done is defined, with options to be selected. This is a less likely option for EX Group work.

- At the E level, the what is clearly stated, but the how is determined by the incumbent’s own judgement and experience.

- At the F level, thinking is more broadly about what must be done. Naturally, how things are to be done is also not clearly defined.

- At the G level, thinking is more about why things should be done. What must be done is generally less defined, and how things must be done is not defined at all.

- At the H level thinking is constrained only by general laws of nature, science, public morality, etc. and is about setting the strategic direction for the entire Government. The question is “Where should we be going?” H is extremely unlikely for EX Group work as these roles in the public service respond to the direction of the elected Government.

Note: Only a “pull-up” (+) to a higher Thinking Environment can be applied (not a “pull-down”).

The key concept to remember when evaluating the Thinking Environment is as follows:

| Concept | Application |
|---|---|
| The relationship between the Know-How level and the Thinking Environment level | Logically, jobs do not require the incumbent to think beyond the limit of the Know-How required for the job. Conversely, the Know-How required is dependent on the thinking that needs to be done. Therefore, the Thinking Environment level (as designated by its letter) should generally be no greater than the Practical, Technical, Specialized Know-How level/letter previously assigned (e.g., when Practical, Technical, Specialized Know-How is at the F level, the Thinking Environment will likely be E or F but not G). |

Thinking Challenge

Thinking Challenge, the second sub-factor of Problem Solving, is concerned with the degree of novelty or original thought required to resolve problems. It assesses the complexity of the problem and the extent to which its solution lies within previous practices. The complexity of the problem faced depends on how clear-cut the solution is. The more complex it becomes, the more the job holder must select from experience and adapt previous solutions to similar problems: “Is there a right or wrong answer?” “Is the solution clear-cut or does it require more judgement?”

| Concept | Application |
|---|---|
| The definition of problems | Problems, in this context, refer to the wide range of challenges confronting job holders. The concept is not restricted to things that have gone wrong, although such things must certainly be considered. |

Here are some guidelines:

Roles at degree 3 encounter differing situations where there is a need to search for the appropriate solution: “Which of these possible answers is the most appropriate given the situation?”.

At degree 4, roles encounter new/unique/ambiguous situations requiring adaptive thinking; this includes the search for new and better ways of doing things and even asking “Is this the right question?” This degree is most likely for EX Group work.

At degree 5, situations encountered are uncharted or novel, both inside and outside the organization, and require the development of new concepts or imaginative solutions for which there are no precedents.

Note: Only a “pull-up” (+) to a higher Thinking Challenge can be applied (not a “pull-down”).

The levels of Thinking Challenge appear across the top of the Problem Solving chart.

Combining the Problem Solving Sub-Factors

The result of making independent judgements for each of the two Problem Solving sub-factors is that the evaluation falls within a cell that contains two percentage step values.

Example 3 - Text version

- 50%

- 57%

Your choice of which of the two percentage step values represents the job's total Problem Solving requirement will be a judgment based on your analysis of the strength or weakness of the job's fit in relation to the Guide Chart definitions of the two sub-factors, and on comparison with relevant benchmarks at those percentage levels.

Generally, a solid fit in relation to the definitions should result in choosing the lower (standard) number in the cell. A “pull” to a higher Thinking Environment and/or Thinking Challenge would change the choice to the higher percentage. A pull-down modifier cannot be applied to this factor.

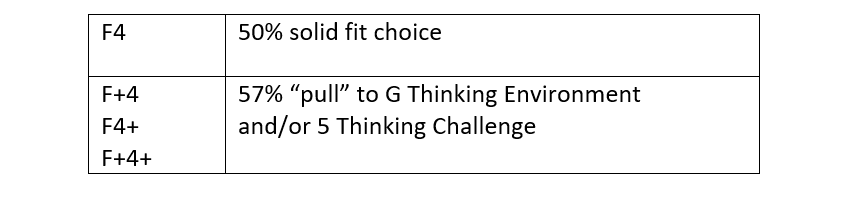

Example 4 - Text version

- F4: 50% solid fit choice

- F+4, F4+, F+4+: 57% “pull” to G Thinking Environment and/or 5 Thinking Challenge
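The choice shown in Example 4 reduces to a simple rule: any pull-up picks the higher percentage, and pull-downs are not permitted. The sketch below is illustrative only; the function name is hypothetical, and reading the pulls from notation such as "F+4" is an assumption about how the evaluation is recorded.

```python
def problem_solving_percentage(cell: tuple[int, int], notation: str) -> int:
    """Choose between the two percentage step values of a Problem Solving cell.

    cell     -- (standard, higher) percentages printed in the cell, e.g. (50, 57)
    notation -- the recorded evaluation, e.g. "F4", "F+4", "F4+" or "F+4+"
    """
    # Only pull-ups are permitted on Thinking Environment and Thinking Challenge.
    if "-" in notation or "\u2013" in notation:
        raise ValueError("a pull-down modifier cannot be applied to Problem Solving")
    standard, higher = cell
    # Any pull-up, on either sub-factor or both, moves the choice to the higher value.
    return higher if "+" in notation else standard


for evaluation in ["F4", "F+4", "F4+", "F+4+"]:
    print(evaluation, problem_solving_percentage((50, 57), evaluation))
```

A solid F4 yields 50%, and F+4, F4+ and F+4+ all yield 57%, as in Example 4.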

To determine Problem Solving points, you can use the chart illustrating the most likely combinations of Problem Solving and Know-How points (second page). Simply locate the Problem Solving percentage in the left or right columns and the Know-How points along the top or bottom. The resulting Problem Solving points are found at the intersection.
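The chart remains the authoritative source for Problem Solving points. As a rough cross-check, the chart value can be approximated by multiplying the Know-How points by the Problem Solving percentage and snapping the result to the nearest value on the Guide Chart numbering scale. Both the snapping step and the scale values below 350 are assumptions (they follow the usual Korn Ferry Hay progression of roughly 15% per step and do not appear in this section); confirm them against the Guide Charts in Appendix A. The function name is hypothetical.

```python
# Assumed Guide Chart numbering scale (roughly 15% between steps). Values below 350
# do not appear in this section; confirm them against the Guide Charts in Appendix A.
SCALE = [100, 115, 132, 152, 175, 200, 230, 264, 304, 350, 400, 460, 528, 608, 700, 800, 920]


def problem_solving_points(know_how_points: int, percentage: int) -> int:
    """Approximate Problem Solving points as Know-How points x PS%, snapped to the scale."""
    raw = know_how_points * percentage / 100
    # Snap to the closest value on the numbering scale.
    return min(SCALE, key=lambda step: abs(step - raw))


print(problem_solving_points(400, 50))  # FII3 Know-How with an F4 evaluation -> 200
```

If this approximation ever disagrees with the chart, the chart governs.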

Double Check - Checking the Problem Solving Evaluation

Evaluators should take the time to review their Problem Solving evaluations. Since Problem Solving is the application of Know-How, experienced evaluators have found that the relationship between the two factors tends to fall into patterns. These patterns are shown by the shadings on the most likely, less likely, and unlikely combinations of Problem Solving and Know-How points on the chart. They will serve as a general guide for checking the Problem Solving evaluation:

- Normally, an evaluation should fall in the Most Likely areas.

- An evaluation can fall in the Less Likely areas if it can be rationalized.

- If an evaluation falls in the unlikely shaded areas, the evaluation of both the Know-How and the Problem Solving factors should be re-checked. It is possible that the body of knowledge the incumbent is expected to have is insufficient for thinking at the level indicated by the Problem Solving evaluation, or that too much knowledge is expected of the position given the degree to which it will be put to use, as indicated by the Problem Solving evaluation.

The following outlines the types of roles / work found at specific Problem Solving (PS) percentages:

| PS% | Interpretation |
|---|---|
| 76+ | Enterprise Strategy Formation: Roles that envision the long-term strategic direction of the Government and/or the implications for the entire Department/Agency. This work is typically found at the Minister and Deputy Minister level and is therefore unlikely within the EX Group. |
| 66 | Strategy Formation – Branch/Sector Leadership: These roles define the overall strategy of the Branch/Sector and determine new/future directions of the organization. This work is typically found at the Assistant Deputy Minister level or in roles that are delegated a significant part of the Deputy Head’s authority (e.g., Assistant Deputy Minister, Policy). |
| 57 | Strategic Alignment: These roles are focused on the strategic direction of a major function or operation of the Department/Agency. The work involves setting the policy framework and objectives for others to ensure integration between the function and its sub-functions; these are typically Assistant Deputy Minister or large Director General roles (e.g., Director General, Resource Management). |
| 50 | Strategic Implementation: These roles are associated with varying the application of policy locally. There is a requirement to re-shape policy to fit the specific environments for which the job holder is accountable (i.e., turning functional policy into reality). This is typically found within Director General/Director type roles (e.g., Director, National Security Assessment and Analysis). |
| 43 | Tactical Implementation: Roles are concerned with the translation of policy into operating procedures, with contributions to policy made based on an understanding of local/specific applications. These are typically found at the Director/Manager levels. |

Measuring Accountability


In this section

- Freedom to Act

- Area of Impact – Magnitude

- Use of the Accountability Magnitude Index to Adjust for Inflation

- “Pass-Through Dollars”

- Nature of Impact on end-results

- Choosing the Correct Area of Impact (Magnitude) and Nature of Impact Combination

- Examples of Impact for Various Dimensions

- Combining the Accountability Sub-Factors

- Double-Check: Checking the Complete Evaluation

Accountability measures the degree to which a job is accountable for action and the consequences of that action. It is the measured effect of the job on the end results of the organization.

Up to this point, judgments have been made about the total Know-How required for fully competent job performance and the degree of novelty and ambiguity employed in Problem Solving. Now the task is to consider the job's ability to bring about or assist in bringing about specific end results. This includes considering the nature of that ability in terms of how direct or indirect its impact is, as well as the scope of that impact on those results.

Accountability considers the following sub-factors in the following order of importance:

- Freedom to Act: The freedom the incumbent has to make decisions and carry them out. It is the delegated decision-making authority and is the most important sub-factor.

- Nature of Impact on end results: How directly (or indirectly) the job influences the end results of a unit, function, or program.

- Area of Impact (Magnitude): The general size of the unit, function, program, or the element of society affected. This is the least important sub-factor.

Freedom to Act is a stand-alone sub-factor, while Nature of Impact and Area of Impact (Magnitude) are the two elements used to measure Impact. These two must always be evaluated in combination; in this sense, they are not stand-alone sub-factors but together constitute the sub-factor of Impact. This is why Freedom to Act is the most important sub-factor and carries the most quantitative weight in the job’s total Accountability rating. Each perceptible step difference in Freedom to Act generates a 15% increase in points, with no overlap between levels (e.g., E+ and F- are different on the Accountability Guide Chart, but the same on the Know-How Guide Chart).

Freedom to Act

By examining the nature and extent of the controls – or the lack of the controls – that surround the job, this sub-factor directly addresses the question of the job's freedom to take action or implement decisions.

The controls placed on the position's Freedom to Act can be supervisory or procedural or both. In assessing Freedom to Act we focus on the core responsibilities of the position. A key difference to keep in mind when considering Freedom to Act is:

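| Concept | Application |
|---|---|
| The difference between Freedom to Act and Thinking Environment | It is a common mistake to confuse the restraints placed on Freedom to Act with the help available in the Thinking Environment. Thinking Environment measures the help and guidance available for thinking through problems, while Freedom to Act measures the controls on taking action or implementing decisions. |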

Since controls tend to diminish as you rise in the organization, Freedom to Act increases with organizational rank. However, while it is true that no job can have as much Freedom to Act as its superior, the evaluator should be wary of automatic slotting according to organization level alone.

Note: Modifiers (“pull-up” or “pull-down”) can be applied to the Freedom to Act sub-factor.

Here are some broad guidelines that can help in assessing Freedom to Act:

- At the E level, positions are relatively free to decide, within approved plans, what the objectives or end results will be. Review of work is typically on an annual/quarterly basis. Roles at this level determine “how” and “when” objectives are to be achieved/completed (e.g., Director, Legislative and Regulatory Affairs).

  Roles reporting at the third hierarchical level may be at E+ where they are delivering activities/operations within a well-defined departmental framework (e.g., Director, Program Evaluation at degree E+).

- At the F level, positions are relatively free to determine what the general end results are to be. Managerial direction received will be general in nature. Assessment of end results must be viewed over longer time spans (e.g., six months to a year or longer).

  Roles reporting at the third hierarchical level may be at F- when establishing how to implement government-wide frameworks within the departmental context (e.g., Director, Risk Management at degree F-).

  Positions at the second hierarchical level are typically at F or F+ (e.g., Regional Director, Health Services, Prairie Region at degree F, and BM 7-X-1 Director General, Centralized Operations at degree F+).

- At the G level, the “what” is communicated only in very general terms. Positions are subject to guidance rather than direction or control. Any job evaluated here is subject only to broad policy and strategic objectives. This is typically the level applied to Assistant Deputy Minister level roles (e.g., Assistant Deputy Minister, Infrastructure and Environment).

Area of Impact – Magnitude

This sub-factor of Accountability measures the size of the area affected by a position. While it does give an indication of the weight to be assigned to the position, it is the least influential and sensitive of the three sub-factors of Accountability used to determine the overall Accountability evaluation. The evaluation of the Area of Impact (magnitude) must always be considered with the Nature of Impact.

To support a common way of measuring the size or area impacted in the context of a job’s accountability, a common quantifiable means or proxy for representing the diverse units, functions and programs that could be affected by the job was needed. Dollars have proven to be the most widely applicable proxy for measuring the Area of Impact (Magnitude) of Accountability. The Area of Impact (Magnitude) scale is the least influential because the increase in dollar value needed to generate a 15% difference in point score is a 233% change (e.g., a budget increase from 300M to 400M is a 33% increase in dollar value but does not change the Magnitude rating).

However, to make a logical, rational determination of the Area of Impact (Magnitude), the evaluator must remember that dollars are simply a proxy, not an absolute measure.

| Concept | Application |
|---|---|
| Dollars are only a proxy to represent Magnitude | Dollars are the most convenient measure to quantify the size of the accountability affected by a job. However, this does not mean that jobs impact dollars. Jobs impact the results of functions, programs, or operations of organizational units. The use of dollars is simply a means of quantifying contributions so that the position’s impact can be assessed on a scale. A helpful way to think about this is the concept of return on investment. The government decides to invest a certain amount of money in a program/activity/service, and executives are responsible for the stewardship of these investments, ensuring that the government gets the desired return. The magnitude is the amount of the investment being made, and the nature of impact is the role that any particular executive plays in bringing about the desired returns. |

The Area of Impact (Magnitude) continuum on the Guide Chart has seven degrees, from Very Small to Largest. These headings provide a rough idea of the appropriate Magnitude for the subject position. References to the appropriate Benchmarks will help refine this initial determination. In this way, evaluators can arrive at a reasonable determination of the Area of Impact (Magnitude) and avoid jumping immediately to a premature consideration of budget dollars.

Evaluators should use the following process for applying the proxy to establish the appropriate Area of Impact (Magnitude):

- Determine and describe (in words) what part(s) and/or function(s) of the organization the job affects and the nature of the job’s effect on each of them.

- Once the part(s) and/or function(s) most appropriate to the job have been identified, think about the relative size of the part(s) or function(s) under consideration and describe these in words (this information can be found in the Dimensions section of the job description or obtained from supporting documents).

- Once these relationships have been articulated, verify them and the “size” selected for the job against the dimensions of the Benchmark positions.

| Concept | Application |
|---|---|
| Applying modifiers to the Area of Impact (Magnitude) of the Proxy Selected | The scale reflects a geometric progression with overlap between levels (e.g., 3+ and 4- both generate the same points when Freedom to Act and Nature of Impact are the same for both). A simple calculation can be used to determine how modifiers should be applied to Area of Impact (Magnitude): double the bottom and halve the top. For a Magnitude of 4, which spans 10M to 100M, this gives 20M and 50M. Thus, a (-) modifier would be applied to values between 10M and 20M, a solid 4 would reflect values between 20M and 50M, and a (+) modifier would be applied to values above 50M but below 100M. |
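The “double the bottom and halve the top” rule can be sketched as a small function. This is an illustrative sketch only: the function name is hypothetical, and because the text says “between”, the handling of the exact boundary values (20M and 50M count as a solid 4 here, and the top of the degree is left to the next degree) is an assumption.

```python
def magnitude_modifier(value: int, bottom: int, top: int) -> str:
    """Return "-", "" (solid) or "+" for a constant-dollar value within one Magnitude degree.

    bottom and top are the degree's limits, e.g. 10M and 100M for Magnitude 4.
    """
    if not bottom <= value < top:
        raise ValueError("value falls outside this Magnitude degree")
    if value < 2 * bottom:   # from the bottom up to double the bottom
        return "-"
    if value <= top / 2:     # from double the bottom up to half the top
        return ""
    return "+"               # above half the top, below the top


M = 1_000_000
for dollars in [15 * M, 30 * M, 75 * M]:
    print(f"{dollars // M}M -> 4{magnitude_modifier(dollars, 10 * M, 100 * M)}")
```

The dollar value passed in should already be expressed in constant dollars, so that it is comparable with the Guide Chart and Benchmark figures.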

Use of the Accountability Magnitude Index to Adjust for Inflation

Unfortunately, the value of money does not remain constant over time. To compensate for this change, the dollar values used as proxies for the Area of Impact (Magnitude) and reflected in the Benchmarks have been converted into constant dollars to ensure continuous alignment with the monetary amounts identified in the Guide Charts.

Therefore, to make comparisons between a subject job's proxy dollars (which are expressed in current dollars) and the constant dollars in the Benchmarks, it is necessary to convert the current dollars into constant dollars. The Accountability Magnitude Index (AMI) provides the factor used for this purpose. To convert a current dollar value into constant dollars, divide the current dollar value by the approved AMI.

The AMI is adjusted periodically by Korn Ferry to reflect the value of Canadian currency on a global stage. This adjustment is reviewed annually and periodically adopted by the Office of the Chief Human Resources Officer (OCHRO) in the Treasury Board of Canada Secretariat for application in evaluating EX Group jobs. Any AMI adjustments to be used in the Core Public Administration for EX Group job evaluation are announced by the OCHRO. The AMIs from financial year 1980/81 to the publication date of this guidance are as follows:

| Year | Magnitude Index |
|---|---|
| 1980/81 | 2.45 |
| 1981/82 | 2.77 |
| 1982/83 | 3.06 |
| 1983/84 | 3.41 |
| 1984/85 | 3.61 |
| 1985/86 | 3.72 |
| 1986/87 | 3.83 |
| 1987/88 | 3.91 |
| 1988/89 | 4.03 |
| 1989/90 | 4.17 |
| 1990/91 | 4.37 |
| 1991/92 | 4.5 |
| 1992/93 | 4.6 |
| 1993/94 | 4.7 |
| 1994/95 | 4.8 |
| 1995/96 | 5 |
| 1996/97 | 5 |
| 1997/98 | 5 |
| 1998/99 | 5.2 |
| 1999/2000 | 5.4 |
| Sept. 2000 | 6 |
| Sept. 2002 | 6.5 |
| Apr. 2006 | 7 |
| Sept. 2010 | 8 |
| Oct. 2022 | 9 |
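The conversion described above is a single division. The sketch below assumes the October 2022 AMI of 9.0 as the default; the function name and the $450M budget are hypothetical, chosen only to illustrate the arithmetic.

```python
CURRENT_AMI = 9.0  # Accountability Magnitude Index in effect since October 2022


def to_constant_dollars(current_dollars: float, ami: float = CURRENT_AMI) -> float:
    """Convert a current-dollar proxy into constant dollars by dividing by the AMI."""
    if ami <= 0:
        raise ValueError("the AMI must be positive")
    return current_dollars / ami


budget = 450_000_000  # hypothetical current-dollar budget for a subject job
print(f"${to_constant_dollars(budget):,.0f} in constant dollars")
```

Under an AMI of 9.0, the hypothetical $450M budget corresponds to $50M in constant dollars; it is this constant-dollar figure, not the current-dollar budget, that should be compared with the Benchmarks and the Guide Chart Magnitude ranges.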

“Pass-Through Dollars”

Many positions may appear to have a very large Magnitude, but the dollars involved are “Pass-Through Dollars” to which the position adds little or no value (transfer payments to individuals or other jurisdictions under social programs that are controlled largely by legislation, regulation or formula fall into this category). An example would be Canada Pension Plan payments. The key to handling Pass-Through Dollars is as follows:

| Concept | Application |
|---|---|
| Pass-Through Dollars are unlikely to be an appropriate Magnitude proxy | In cases of Pass-Through Dollars, the position deals with the process of payment. As such, the accountability is limited to ensuring the processing of a payment, not the amount or type of payment. These dollars cannot adequately represent the Magnitude of the position. A more appropriate proxy should be found. While there is potential to consider an Indirect impact on the Pass-Through dollar proxy, a better measure could be a Primary impact on the salary, operating and management budget of the unit to reflect the work of the staff processing payments. |

Nature of Impact on end-results

The Nature of Impact measures the directness of the position's effect on end results. The evaluation of the Nature of Impact must always be considered with the Area of Impact (Magnitude). The Nature of Impact levels are as follows:

I - Indirect: The position provides information or other supportive services for use by others. Activities are noticeably removed from final decision and end results. The position's contribution is modified by or merged with other support before the end result stage. For example:

Director, Financial Accounting, accountable for the processing, accounting, and reporting of departmental financial transactions. The role provides financial transaction support services; however, it is noticeably removed from the end results achieved by the departmental budget, having an indirect influence on the overall departmental budget.

The use of + or – with an Indirect Impact suggests that, for some jobs, the influence and contribution are more closely tied to the end results, while for others the link is more obscure.

C - Contributory: The position provides interpretative, advisory, or facilitating services for use by others or by a team in taking action. The position's advice and counsel are influential and closely related to actions or decisions made by others. The position’s contribution significantly influences decisions related to various units or programs. For example:

Director General, Integrity Risk Guidance, acts as the authority on risk management and integration, and provides advice and guidance to senior departmental officials to ensure the integrity of departmental policies, programs and service delivery strategies and frameworks. The position influences the design and delivery of departmental policies and programs, reflected as a contributory impact on the organization’s overall budget.

To the extent that Contributory pertains to only a portion of the investment represented by the Area of Impact (Magnitude), or the advice given relates more to remaining compliant with governing frameworks, a C- might apply. Conversely, if the advice is expected to materially impact the value delivered across the bulk of the investment represented, it might be a C+.

S - Shared: The position is jointly accountable with others for taking action and exercising a controlling Impact on end results. Positions with this type of Impact have noticeably more direct control over actions than positions evaluated at the Contributory level, but do not have total control over all the variables in determining the end result(s).

A basic rule is that Shared Impact does not exist vertically in an organization (e.g., between superior and subordinate). Shared Impact can exist between peer jobs within the same organization or with a position or positions from outside the organizational unit or outside of the Federal Government. Shared Impact suggests a degree of partnership in, or joint accountability for, the total result. In this way it differs from Contributory Impact, where the position is only accountable for an input to the end result. For example:

The departmental Project Manager could be considered to have a Shared Impact on all design and construction activities carried out by Public Services and Procurement Canada in the construction of a major facility.

The use of + or – with a Shared Impact suggests that “we are all equal, but some are more impactful than others.” It is a relative assessment of the impact of the different players on a file or issue where they are all part of the decision-making team.

P - Primary: The position has controlling Impact on end results, and the accountability of others is subordinate or advisory. Such an Impact is commonly found in managerial positions which have line accountability for key end result areas, be they large or small. For example:

The Director of a research unit may have Primary Impact upon the research activities done by all sections of the unit. A subordinate Manager within the unit may be accountable for the research activities in a section of the unit. Both positions could be evaluated at the Primary level, but the Area of Impact (the size of the unit or function or activity) would vary.

Note: There are no + or - modifiers for this impact.

| Concept | Application |
|---|---|
| The relation between control and Primary Impact | The relative size of the unit is not an issue in deciding whether the position has Primary Impact on its results. The key is that: |

Choosing the Correct Area of Impact (Magnitude) and Nature of Impact Combination

An evaluation score may differ depending on the combination of Area and Nature of Impact used, and an Executive role can often be evaluated with more than one reasonable combination. For example:

- A function head (e.g., a Director General of Human Resources) may be seen to have a Contributory Impact on the investment in the workforce of the Department (e.g., Departmental salary budget) or a Primary Impact on the operations of the Human Resources Branch (e.g., Branch Salary and O&M budget).

- A Director General, Procurement may be seen to have a Primary Impact on the effective operation of the branch (e.g., Branch Salary and O&M budget), or an Impact on the value of the goods and services being procured for the organization, where the nature of that Impact may vary from Indirect to Shared depending on the influence that procurement has on obtaining the optimum return on the money spent.

Evaluators are encouraged to identify the different possible combinations for the position being evaluated before selecting which might be more suitable. Very often the point totals available in the alternative slots for the possible combinations of Area and Nature of Impact will be the same; in such cases it is advisable to select the combination that best expresses the core purpose of the role. Where they are not the same, it is advisable to use the higher score to properly reflect the full job size.

The key is to find the combination of Area and Nature of Impact that results in the highest legitimate evaluation, because it is vital to obtain the fullest, most complete measure of the position on these two sub-factors so as to properly reflect the job size. The following table provides some guidelines for evaluating certain types of expenditures when these are used as the proxy.
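The selection rule described above (take the higher score where the alternatives differ; where they tie, take the combination that best expresses the role's core purpose) can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not part of the standard: the `Combination` type and `select_combination` function are hypothetical names, the point values are invented placeholders rather than figures from the Guide Charts in Appendix A, and the function assumes the evaluator has already judged every candidate to be a legitimate reading of the role.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Combination:
    """One candidate Area/Nature of Impact pairing for a role (hypothetical model)."""

    proxy: str          # dimension used as the Magnitude proxy
    nature: str         # "I", "C", "S" or "P", optionally with "+" or "-"
    points: int         # points from the corresponding Guide Chart slot
    core_purpose: bool  # True if this pairing best expresses the role's core purpose


def select_combination(candidates: List[Combination]) -> Combination:
    """Return the highest-scoring candidate; on a tie, prefer core purpose.

    Assumes every candidate has already been judged a legitimate reading
    of the role; this function only applies the selection rule.
    """
    if not candidates:
        raise ValueError("at least one candidate combination is required")
    best_points = max(c.points for c in candidates)
    tied = [c for c in candidates if c.points == best_points]
    # Where the point totals are equal, pick the pairing that best expresses
    # the role's core purpose; otherwise fall back to the first tied entry.
    for candidate in tied:
        if candidate.core_purpose:
            return candidate
    return tied[0]


# Hypothetical point values, for illustration only (not taken from Appendix A).
hr_candidates = [
    Combination("Departmental salary budget", "C", 100, core_purpose=False),
    Combination("Branch salary and O&M budget", "P", 100, core_purpose=True),
]
chosen = select_combination(hr_candidates)
print(chosen.proxy, chosen.nature, chosen.points)
```

In this Director General of Human Resources illustration, the two placeholder totals tie, so the sketch returns the Primary Impact on the branch budget because it is flagged as the core purpose; if the Contributory combination carried more points, it would be selected instead.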

Examples of Impact for Various Dimensions

| Dimension | Impact to Consider |
|---|---|
| 1. Salary and operation and maintenance (O&M) budget (used to represent a divisional budget) | A Primary Impact is selected when the main accountability for a unit's or a program's end results rests with the job/role being evaluated. A Contributory or Indirect Impact can be considered if the job plays an advisory or facilitating role, either through direct action or indirectly through a modified contribution (e.g., a Policy Advisor/Expert role advising on how to manage, organize or develop a unit). |
| 2. Capital budget (used to represent a capital program) | A Primary Impact is considered when the entire lifecycle of the capital program/project is controlled by the role, including feasibility, design, construction, installation and utilization. This is rare. Such a project would most likely have a multi-year time horizon, and the total investment would be divided by the planned number of years to obtain an annual value. A Primary Impact can also be selected when the role is responsible for maximizing the return on investment of assets (e.g., a Regional Director accountable for delivering services that require the use of Government of Canada assets, such as vehicles). Note: The complete value of the assets is not considered, as assets have multi-year lifespans; the evaluator needs to use the annual value, which is reflected as the Capital budget. More typically, the Impact is Shared or Contributory, as responsibility for capital programs/projects lies with several managers, each responsible for major components of the program/project. |
| 3. Full organizational budget (used to represent an organization's entire annual budget) | Typically, no one position in the EX Group will be fully accountable for an entire organization's budget. However, roles providing organization-wide expert advice and direction can be considered to have a Contributory Impact on an organization's overall budget (e.g., Chief Financial Officer roles, which provide oversight and recommendations and ensure financial stewardship of a department's resources). |
| 4. Human resources costs (used to represent the human resources function) | A Contributory Impact is considered when the role plays a significant advisory function or is accountable for all (or a large portion) of an organization's Human Resources program direction (e.g., Heads of Human Resources, Central Agency roles accountable for setting overall Public Service direction). A Primary Impact is not appropriate, as many jobs play a role in human resources management within an organization. While a Head of Human Resources can set direction, define policies, etc., they cannot be directly accountable for the human resources-related decisions of delegated managers in their organization. By contrast, the Director of a unit has direct (Primary) accountability for the unit's salary and operating budget. |
| 5. Purchased materials and equipment (used to represent the purchasing function) | The Impact would be Contributory for a normal supply and service role (prepares and issues Requests for Proposals), or Shared where the role is heavily involved in determining specifications in addition to the normal supply and service role. The Impact could also be Indirect if the role simply orders supplies/services from pre-approved suppliers with pre-negotiated prices and terms and conditions of service. Larger procurement roles are typically found in organizations whose mandate is to provide services/support to the Public Service or to Canadians (e.g., Public Services and Procurement Canada and Shared Services Canada). |
| 6. Grants and Contributions (used to represent a program) | A Contributory or Indirect Impact is considered for roles with discretion over Grants and Contributions amounts and/or control over the end results expected from the grant or contribution. The degree to which the role affects the Grants and Contributions determines whether Contributory (e.g., Director General, Communications) or Indirect (e.g., Director, Financial Policy) is most appropriate. The Impact would only be Contributory if the role determines who is eligible and evaluates the use of the money. Note: In certain cases, Grants and Contributions may not be applicable because they are pass-through dollars (e.g., Transfer Payments). |
| 7. Transfer Payments (used to represent a program) | For roles with accountability for Transfer Payments that are determined by a formula with no discretion, the Impact would likely be none, because such Transfer Payments are viewed as Pass-Through Dollars: the role oversees the process of payment but has no influence on whether the payment should be made or what the payment amount should be. However, if the role has some discretion in determining amounts and/or use, the Impact would be Indirect because the position has some effect on the program. |
| 8. Revolving Funds (represents payments received from clients for services rendered) | These positions do not have sufficient impact on what is to be measured, so the Impact is none. Note: Payments received should not be double counted against corresponding expenditures, nor should they be used to reduce operating expenditures to a net figure. |
| 9. Dimensions lying outside the Public Service, such as the value of GDP | The relationship of Public Service positions to these dimensions is, in most cases, too remote for the evaluation of any Impact. Where influence can be clearly identified, the Impact of positions is normally Indirect and is typically exerted through legislative, regulatory or enforcement authorities (e.g., senior roles at Global Affairs Canada that significantly influence and shape trade relationships with other countries, or senior roles in organizations with a broad regulatory role affecting a sector or industry, such as Fisheries and Oceans Canada). |
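Two rules in the dimensions table are mechanical enough to sketch: annualizing a multi-year capital investment (row 2) and the Transfer Payment rule (row 7). The Python below is a minimal illustration, not an official tool; the function names are hypothetical, the dollar figures are invented, and returning `None` is simply this sketch's way of representing "no Impact".

```python
from typing import Optional


def annualized_capital_value(total_investment: float, planned_years: int) -> float:
    """Row 2: divide a multi-year capital investment by its planned number of years."""
    if planned_years <= 0:
        raise ValueError("planned_years must be a positive integer")
    return total_investment / planned_years


def transfer_payment_impact(has_discretion: bool) -> Optional[str]:
    """Row 7: suggested Nature of Impact on a Transfer Payment proxy.

    Formula-driven payments with no discretion are Pass-Through Dollars,
    so no Impact is assigned (None). Some discretion over amounts and/or
    use suggests an Indirect ("I") Impact.
    """
    return "I" if has_discretion else None


# Hypothetical figures: a $50M project planned over five years.
annual = annualized_capital_value(50_000_000, 5)
print(f"Annualized capital value: ${annual:,.0f}")
print(transfer_payment_impact(False), transfer_payment_impact(True))
```

With these invented inputs, the capital proxy used in the evaluation would be $10M per year rather than the $50M total. The Transfer Payment function deliberately encodes only the two cases the table names; judging whether a role actually has discretion remains the evaluator's call.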

| Concept | Application |
|---|---|
| Evaluating the Impact of large initiatives shared across several organizations | It is not uncommon to find jobs involved in initiatives shared across several government departments, where each department plays a key role in delivering the end result. It is reasonable to consider a Primary Impact on an individual role’s portion of the total annualized dollar value of the project or initiative, or a Shared Impact on the annualized aggregate value of the initiative. Where one of the participating organizations is identified as the lead organization and has overall accountability for the total end results, a pull-up on the Freedom to Act should be considered; if this is judged to be appropriate, then a pull-down on the Freedom to Act for the roles in non-lead organizations should also be applied, thus reflecting the expectation that the lead organization would be vetting and endorsing the decisions taken by the other organizations. If the lead organization performs the role of coordinator (reporting on, but not accountable for the totality of the program end results), then the coordinator role is unlikely to have a material impact on the evaluation. |

| Concept | Application |

|---|---|